Table of Contents

guest 2025-02-18

Release Notes 24.11 (November 2024)

Upcoming Features

Release Notes 25.3 (March 2025)

Getting Started

Try it Now: Data Grids

Trial Servers

Explore LabKey Server with a trial in LabKey Cloud

Introduction to LabKey Server: Trial

Exploring LabKey Collaboration

Exploring Laboratory Data

Exploring LabKey Studies

Exploring LabKey Security

Exploring Project Creation

Extending Your Trial

LabKey Server trial in LabKey Cloud

Design Your Own Study

Explore LabKey Biologics with a Trial

Install LabKey for Evaluation

Tutorials

Set Up for Tutorials: Trial

Set Up for Tutorials: Non-Trial

Navigation and UI Basics

LabKey Server Editions

Training

LabKey Server

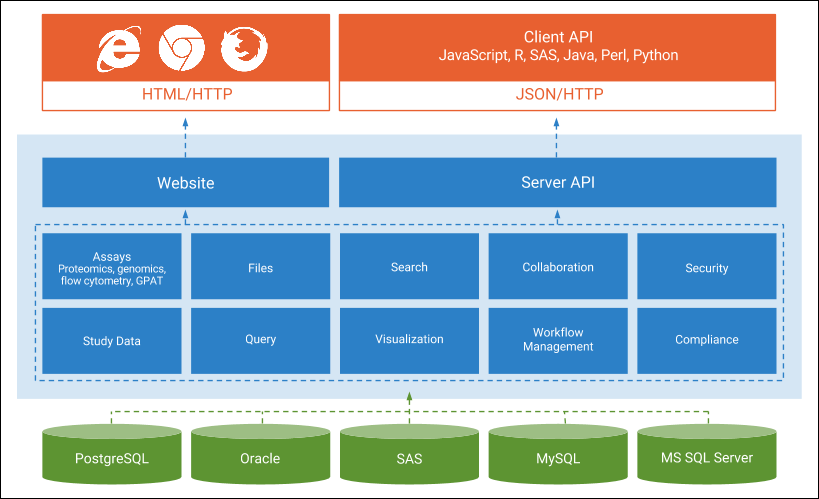

Introduction to LabKey Server

Navigate the Server

Data Basics

LabKey Data Structures

Preparing Data for Import

Field Editor

Field Types and Properties

Text Choice Fields

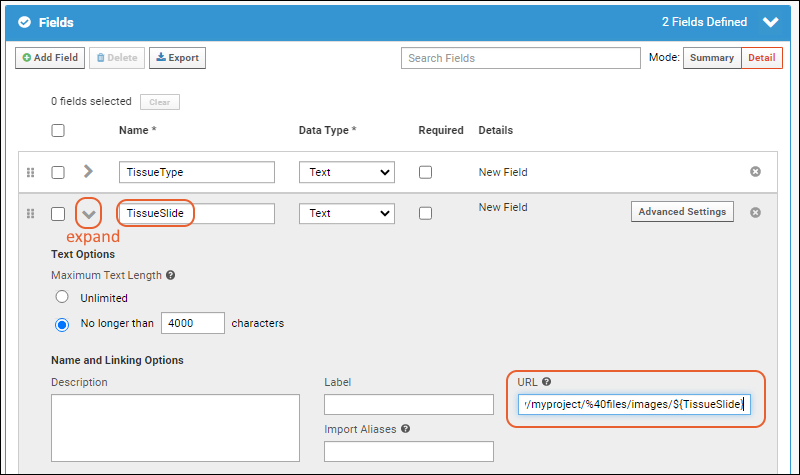

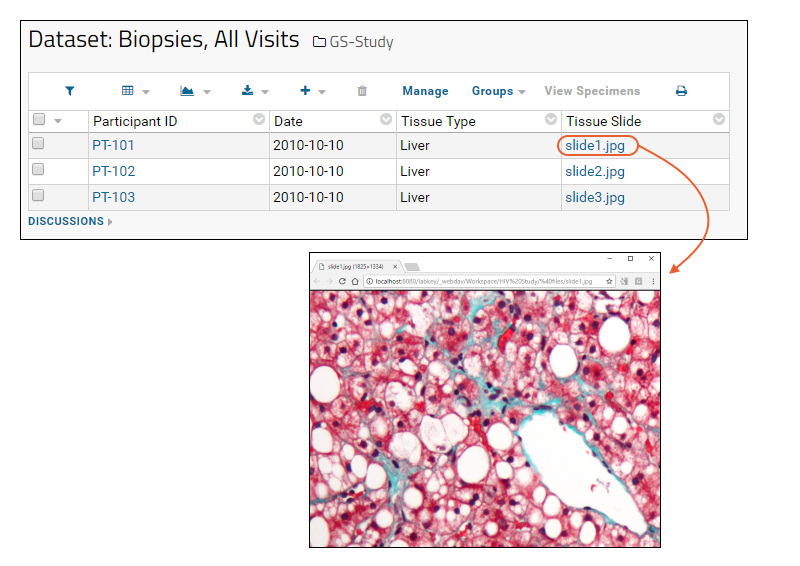

URL Field Property

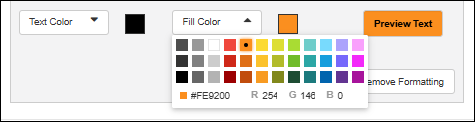

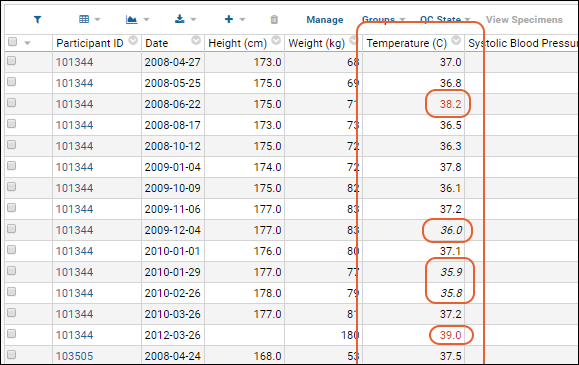

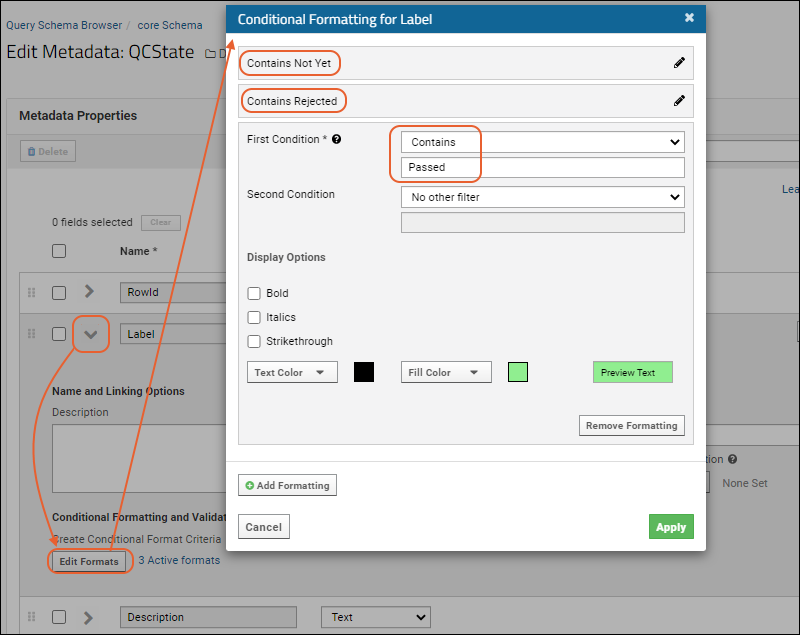

Conditional Formats

String Expression Format Functions

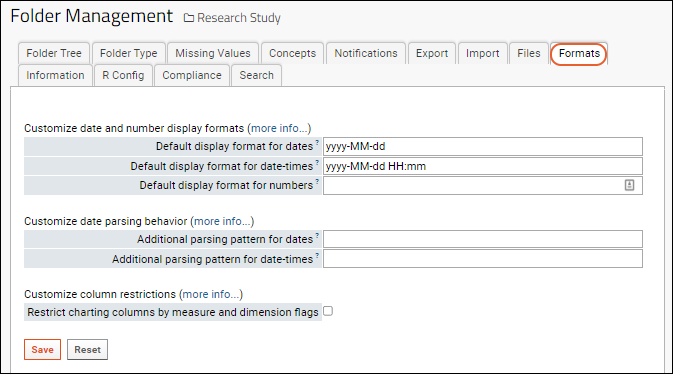

Date & Number Display Formats

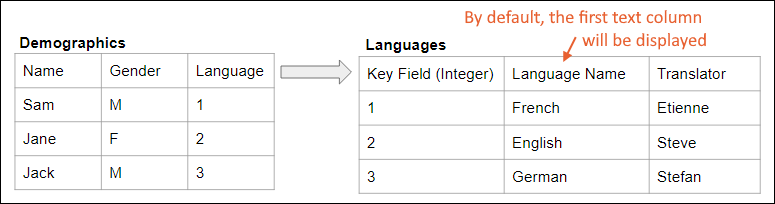

Lookup Columns

Protecting PHI Data

Data Grids

Data Grids: Basics

Import Data

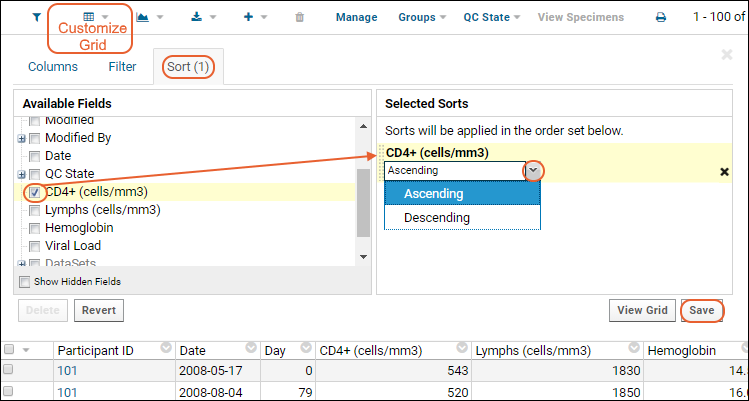

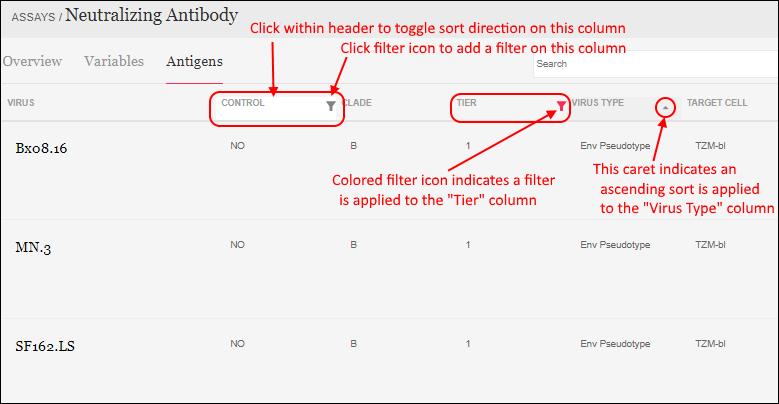

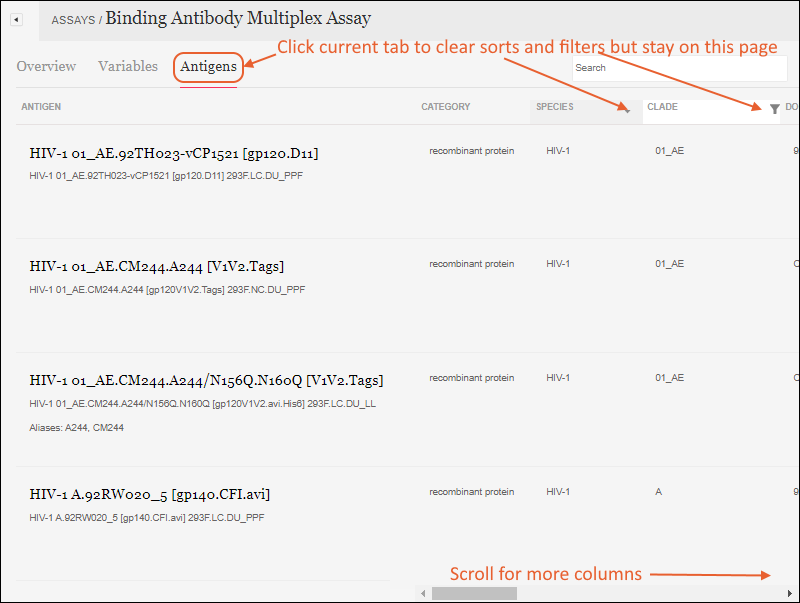

Sort Data

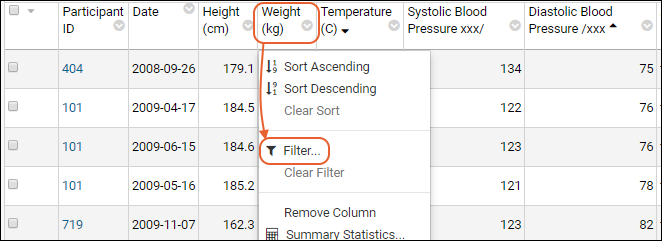

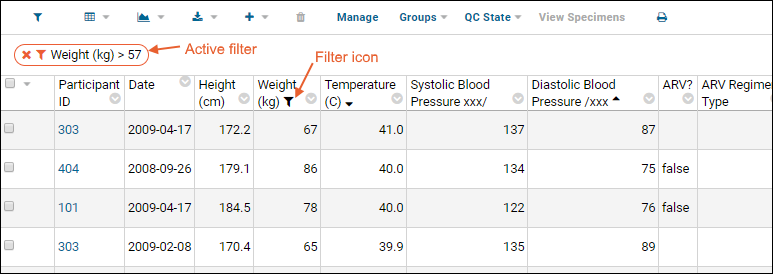

Filter Data

Filtering Expressions

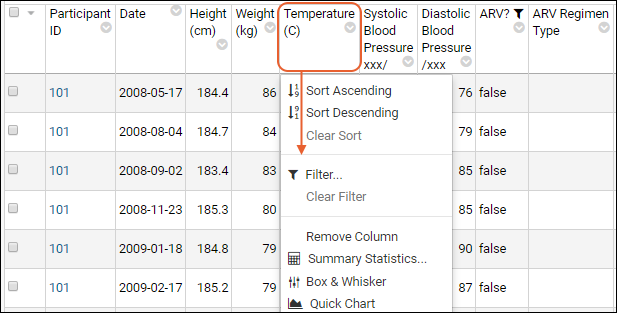

Column Summary Statistics

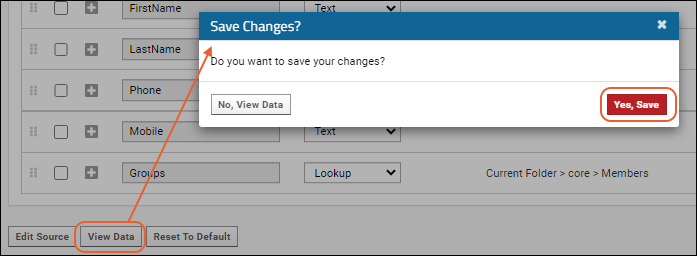

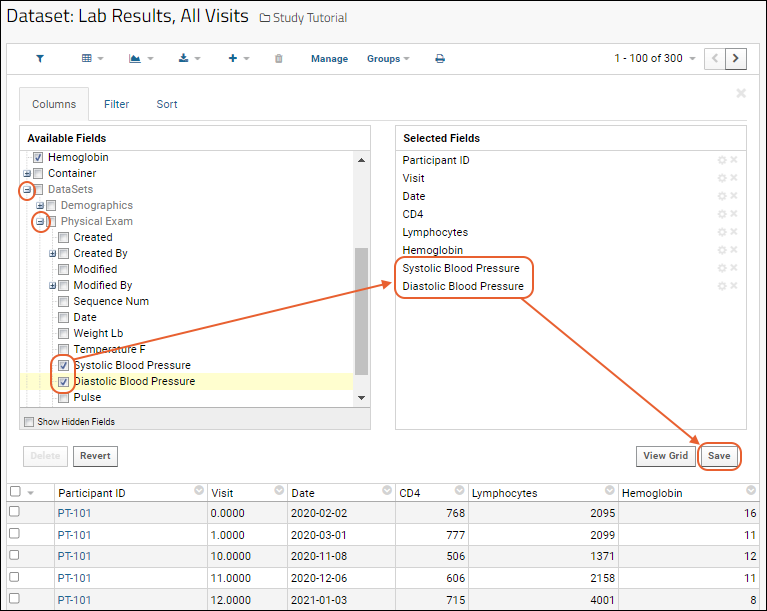

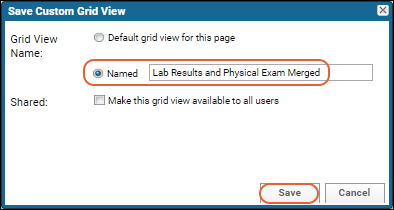

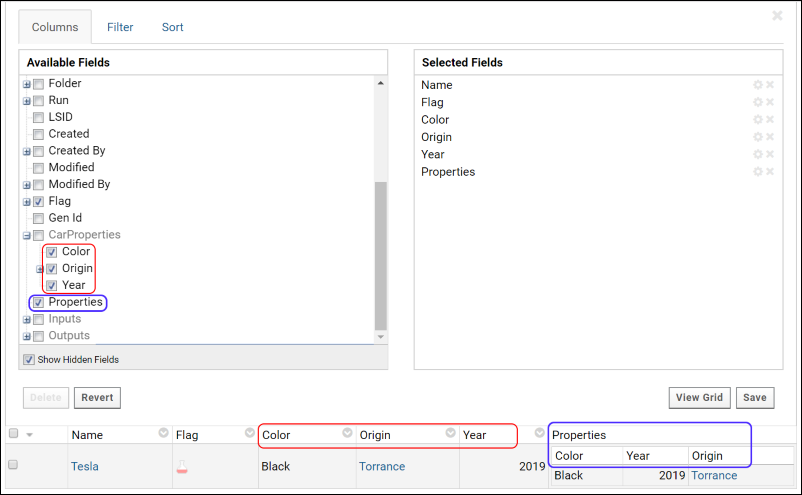

Customize Grid Views

Saved Filters and Sorts

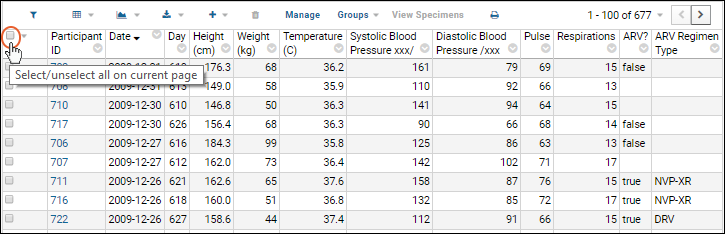

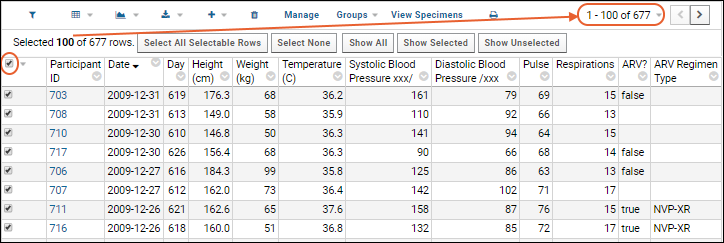

Select Rows

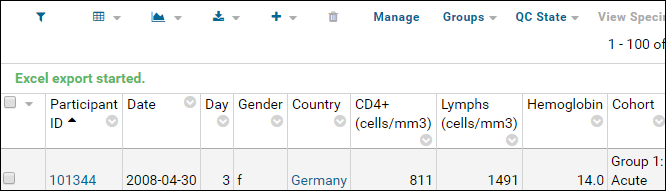

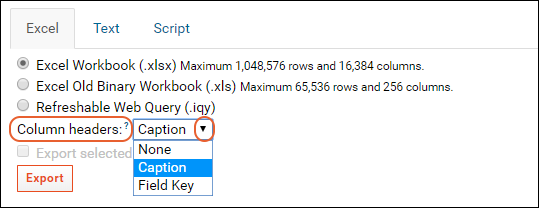

Export Data Grid

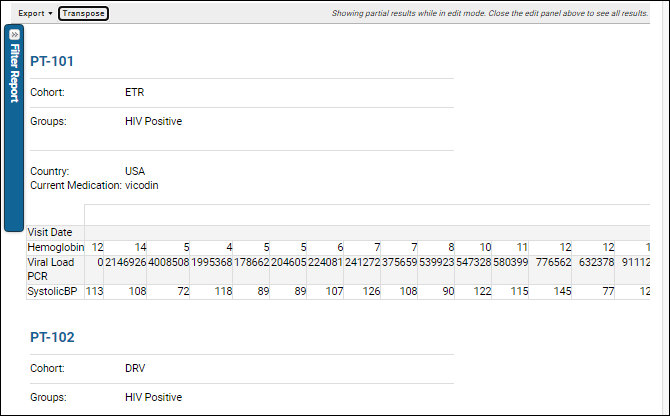

Participant Details View

Query Scope: Filter by Folder

Reports and Charts

Jupyter Reports

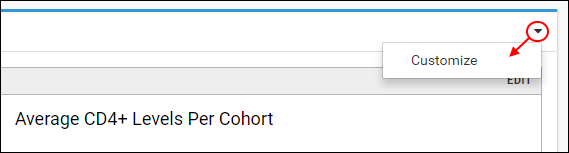

Report Web Part: Display a Report or Chart

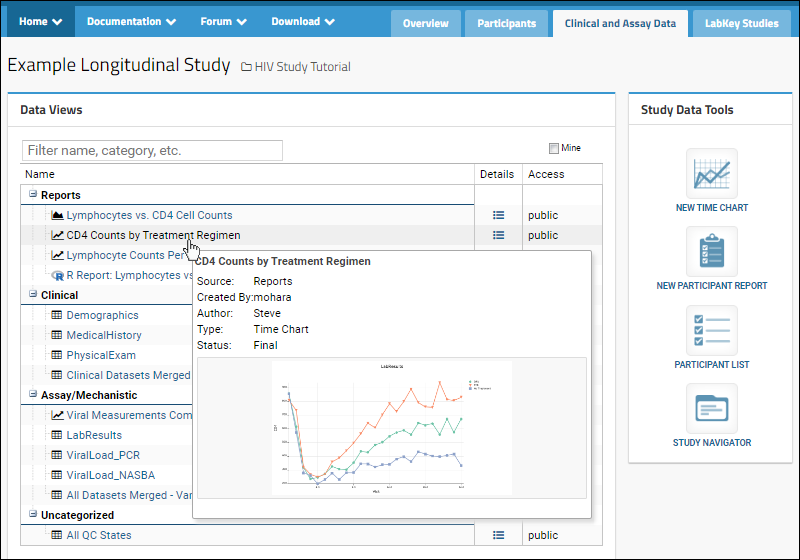

Data Views Browser

Query Snapshots

Attachment Reports

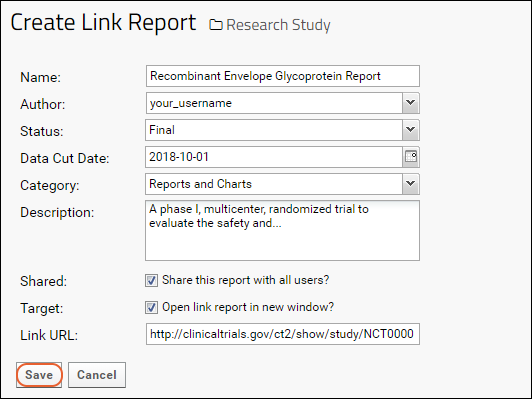

Link Reports

Participant Reports

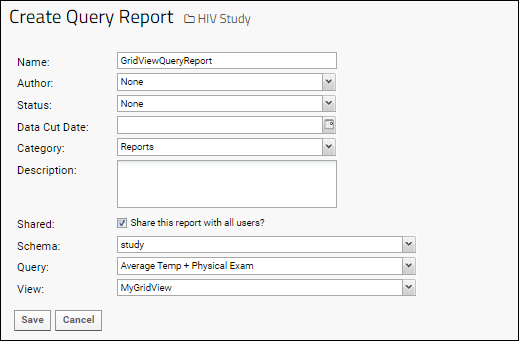

Query Reports

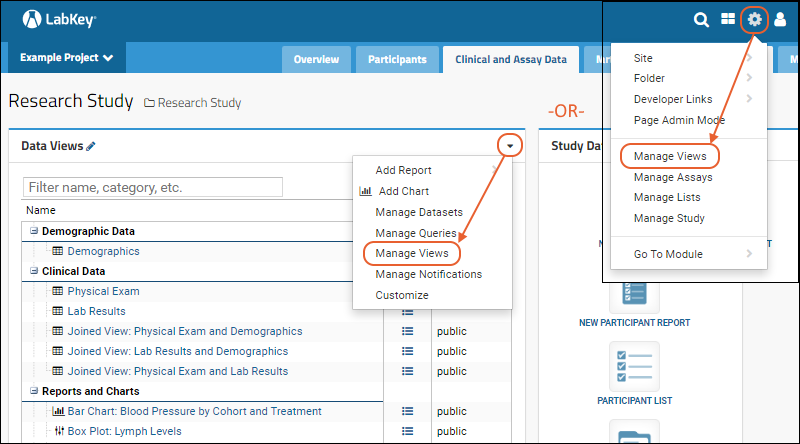

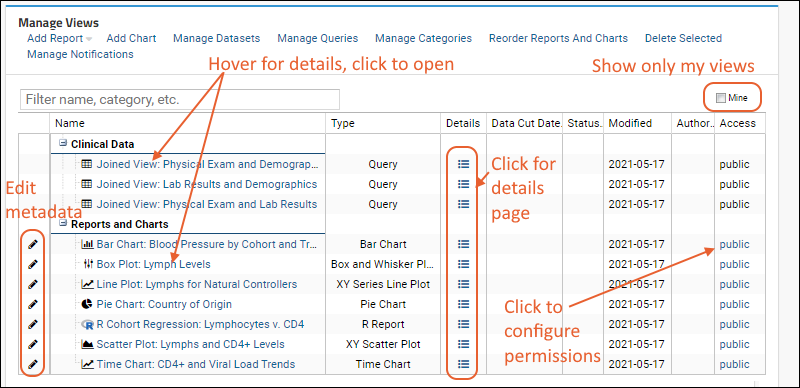

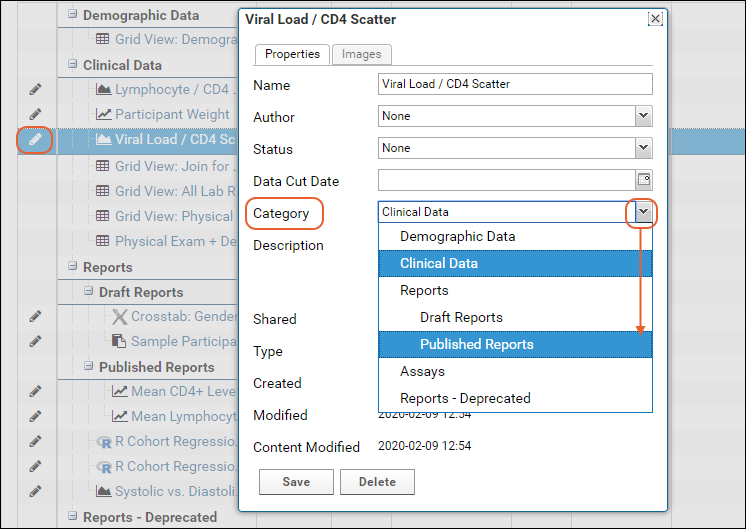

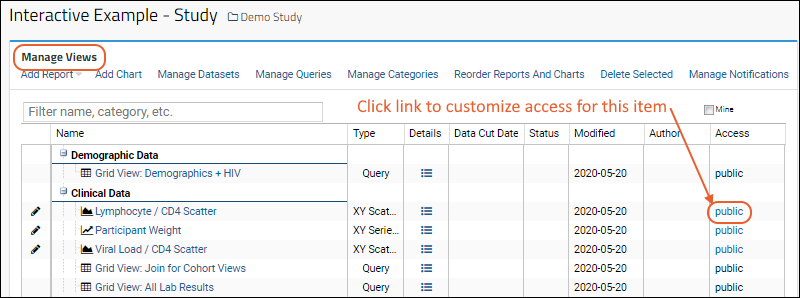

Manage Data Views

Manage Study Notifications

Manage Categories

Manage Thumbnail Images

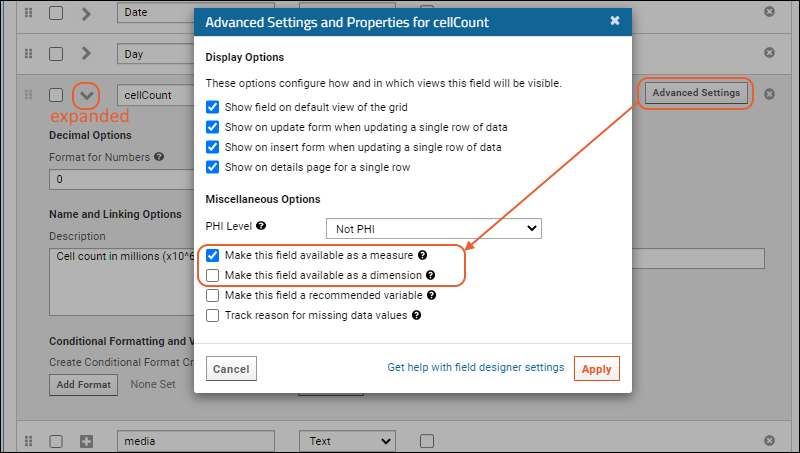

Measure and Dimension Columns

Visualizations

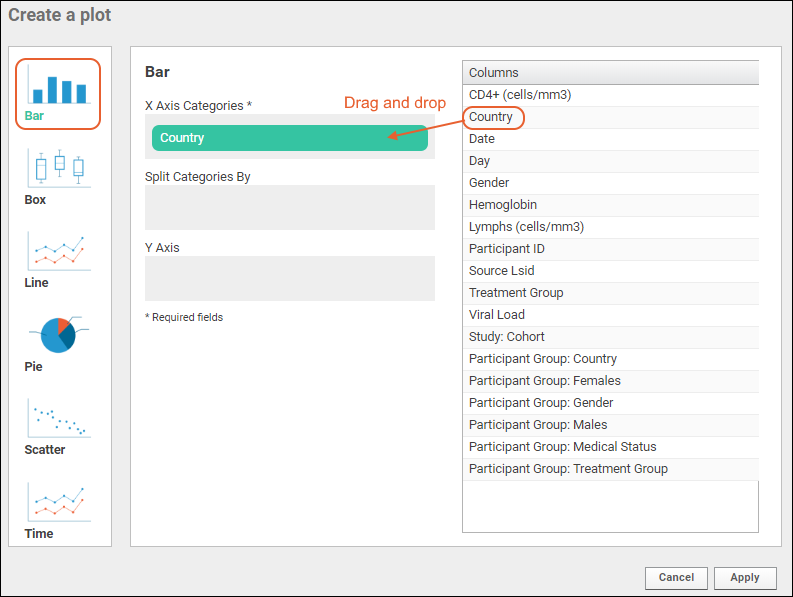

Bar Charts

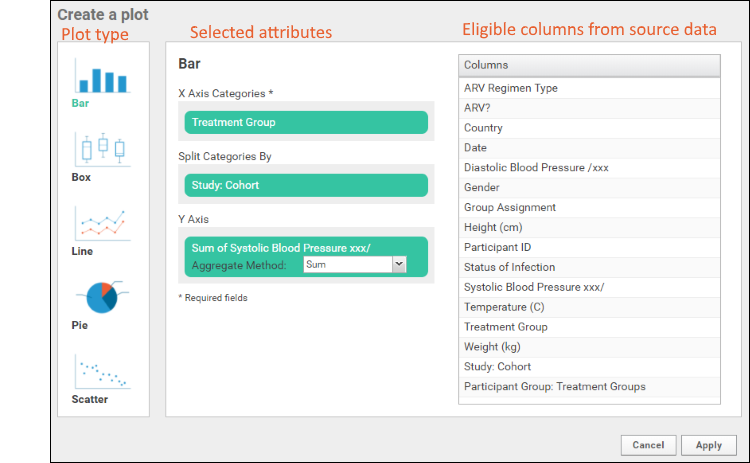

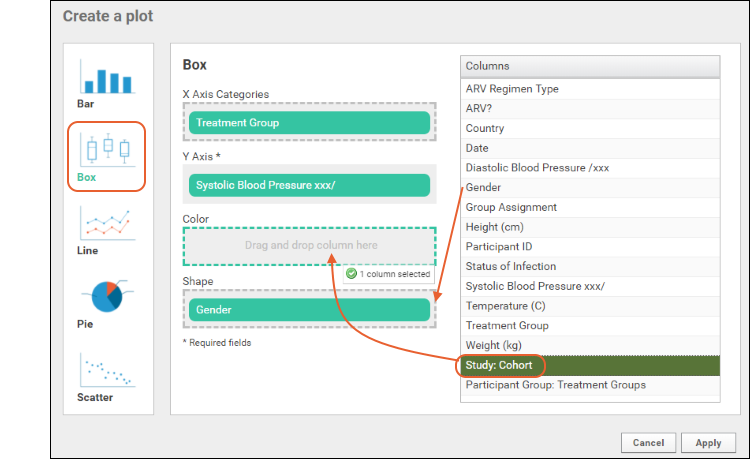

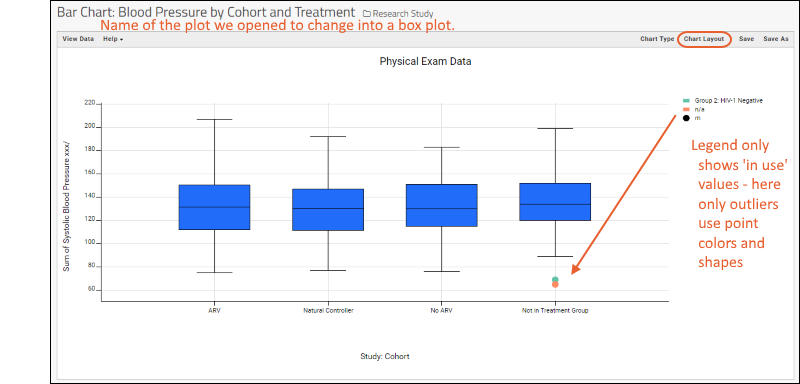

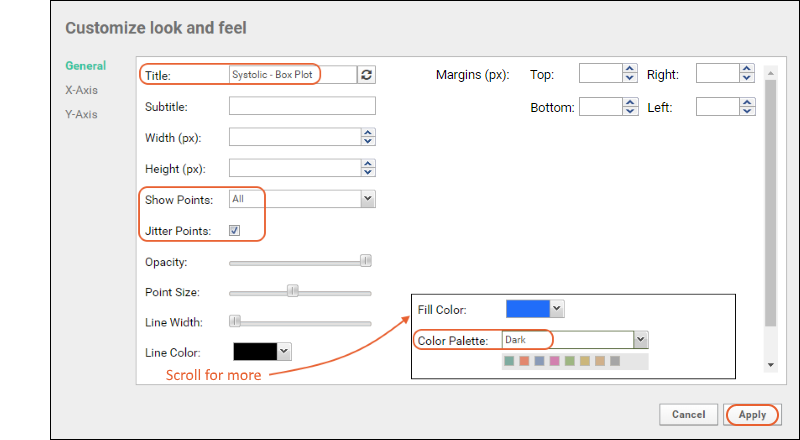

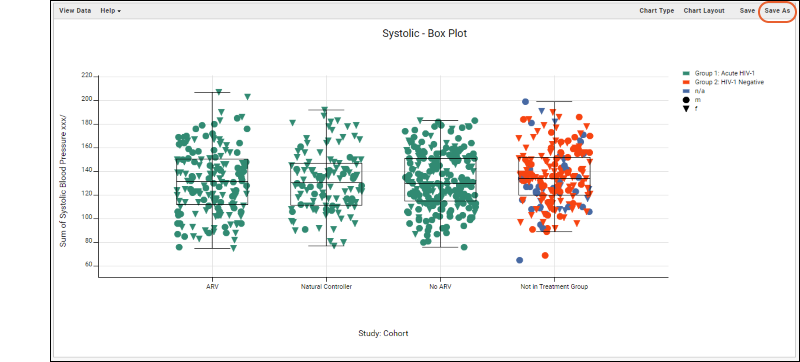

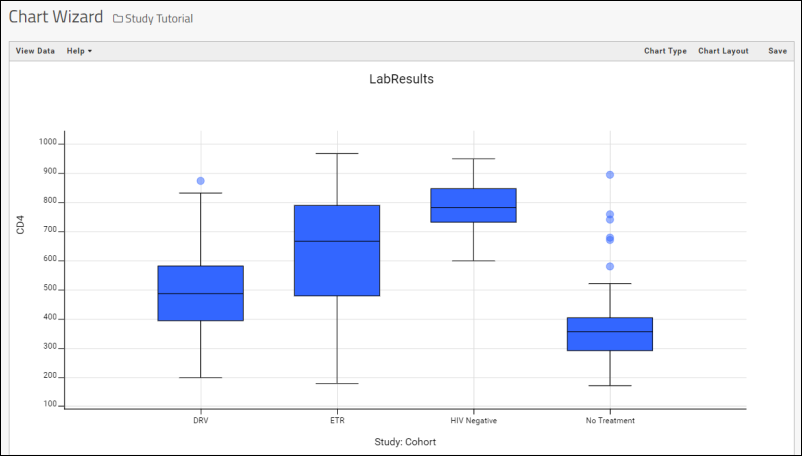

Box Plots

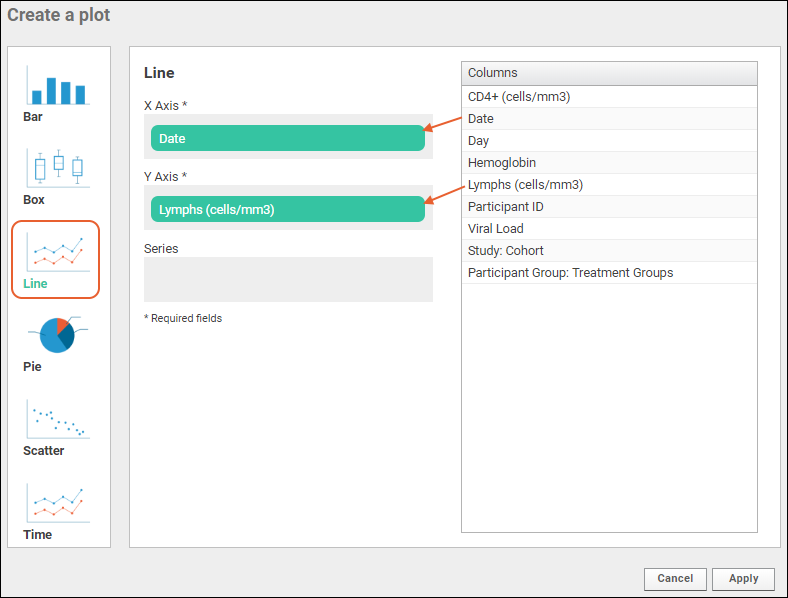

Line Plots

Pie Charts

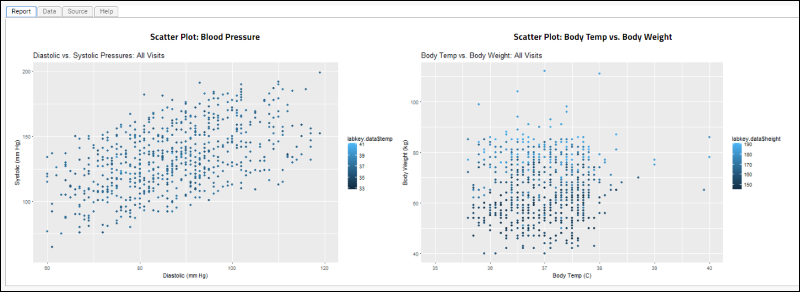

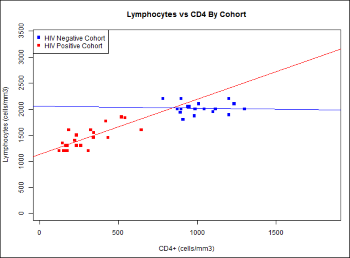

Scatter Plots

Time Charts

Column Visualizations

Quick Charts

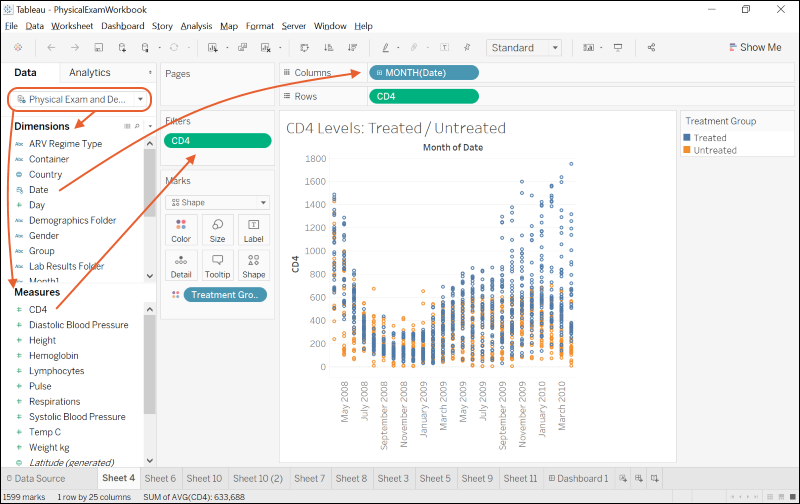

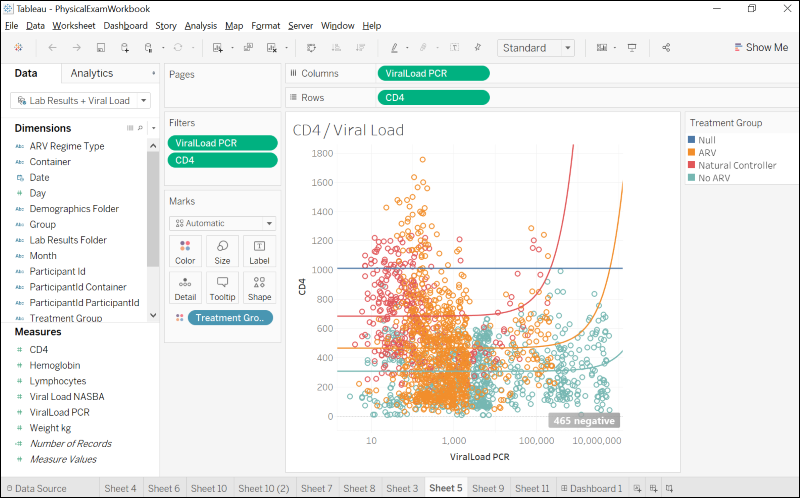

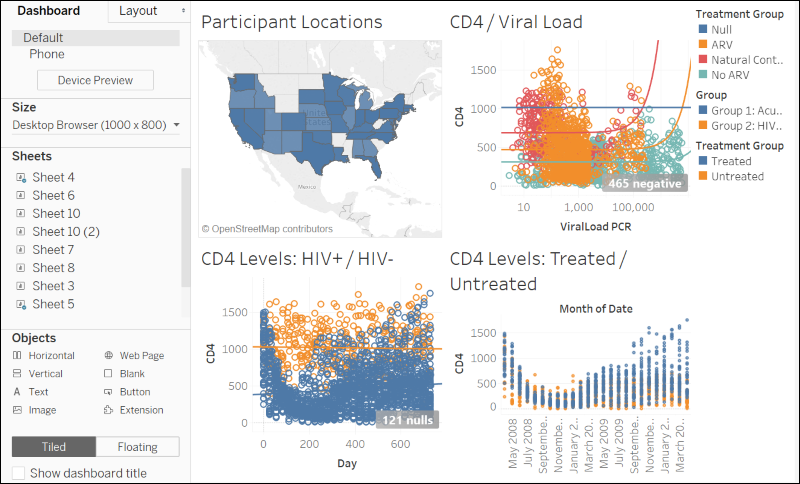

Integrate with Tableau

Lists

Tutorial: Lists

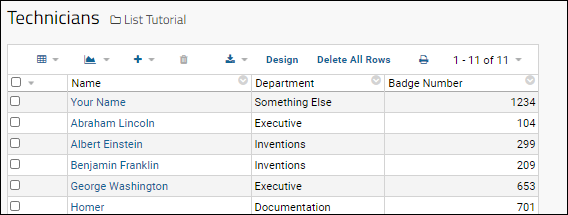

Step 1: Set Up List Tutorial

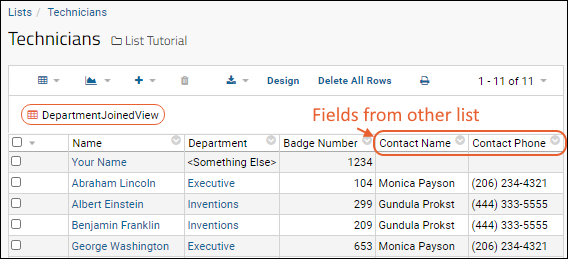

Step 2: Create a Joined Grid

Step 3: Add a URL Property

Create Lists

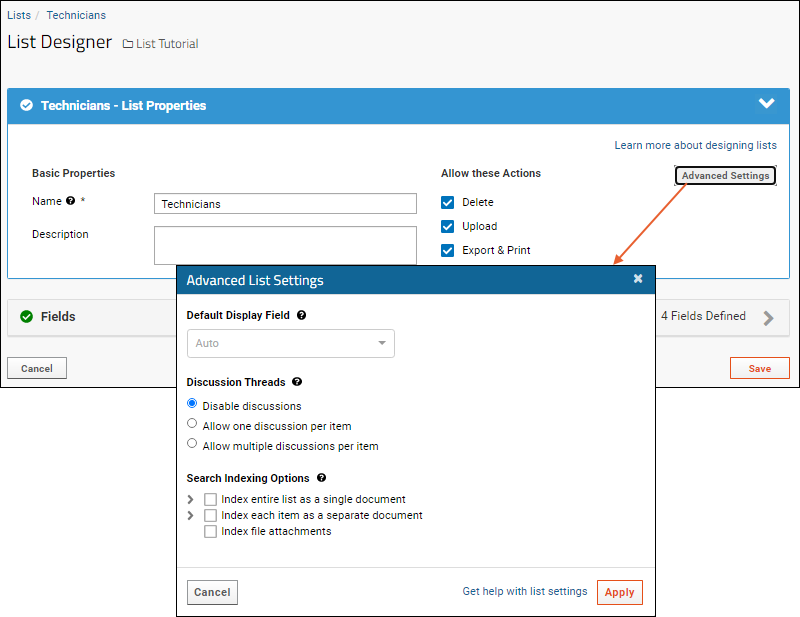

Edit a List Design

Populate a List

Manage Lists

Export/Import a List Archive

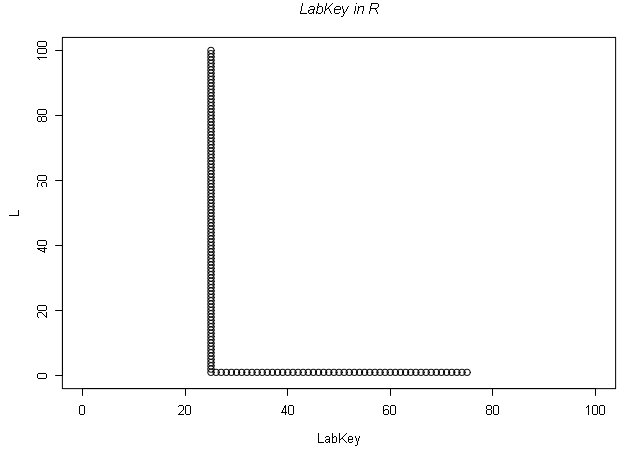

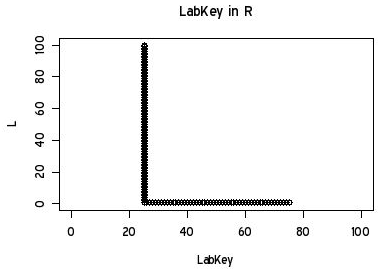

R Reports

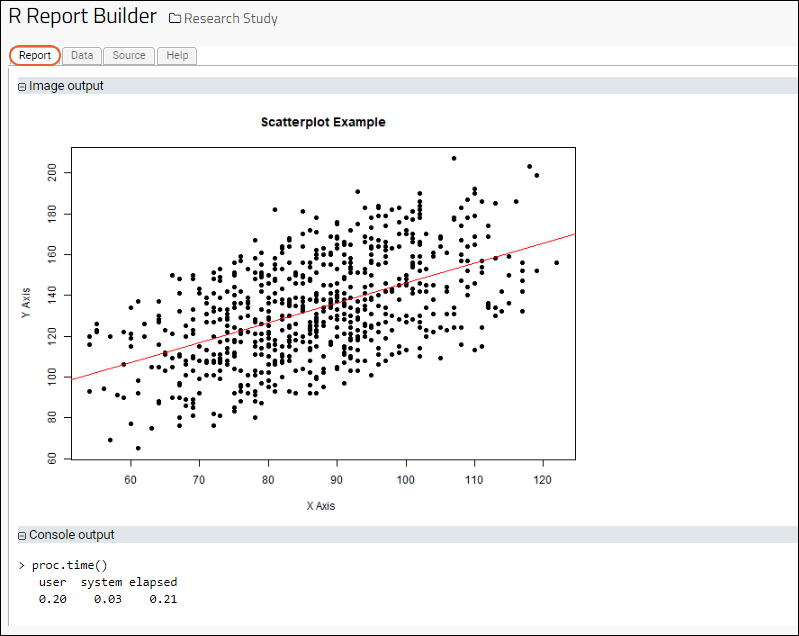

R Report Builder

Saved R Reports

R Reports: Access LabKey Data

Multi-Panel R Plots

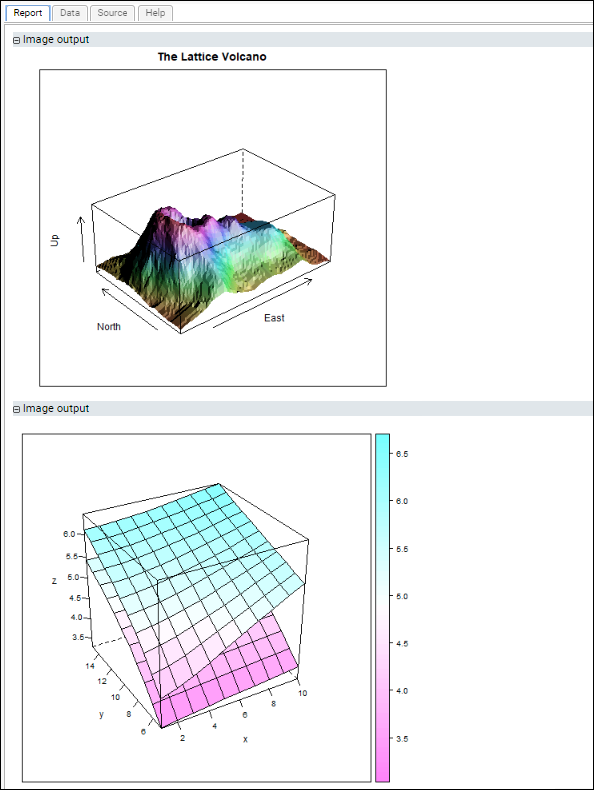

Lattice Plots

Participant Charts in R

R Reports with knitr

Premium Resource: Show Plotly Graph in R Report

Input/Output Substitutions Reference

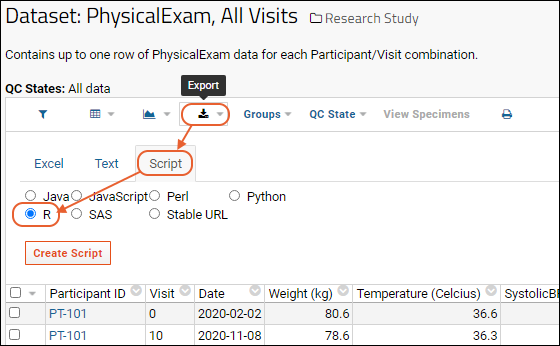

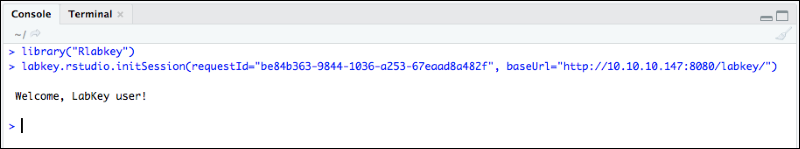

Tutorial: Query LabKey Server from RStudio

FAQs for LabKey R Reports

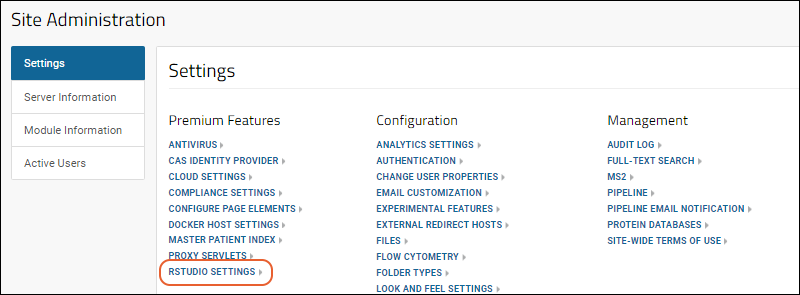

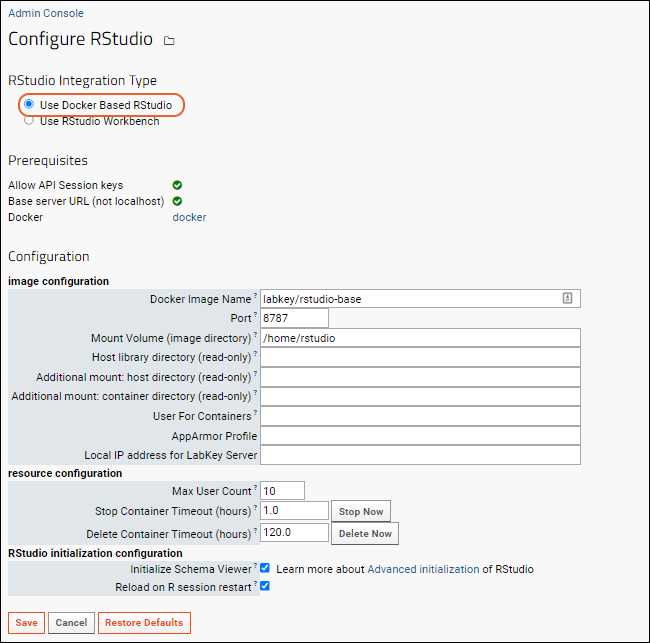

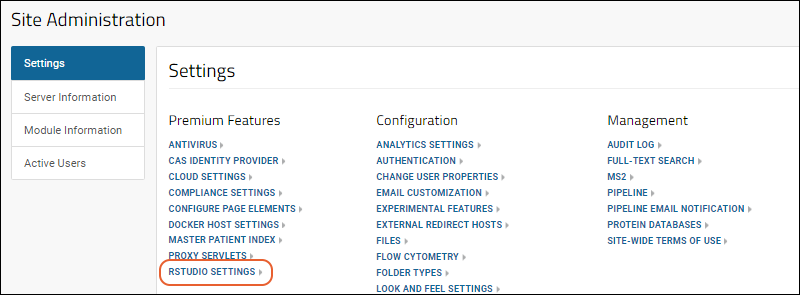

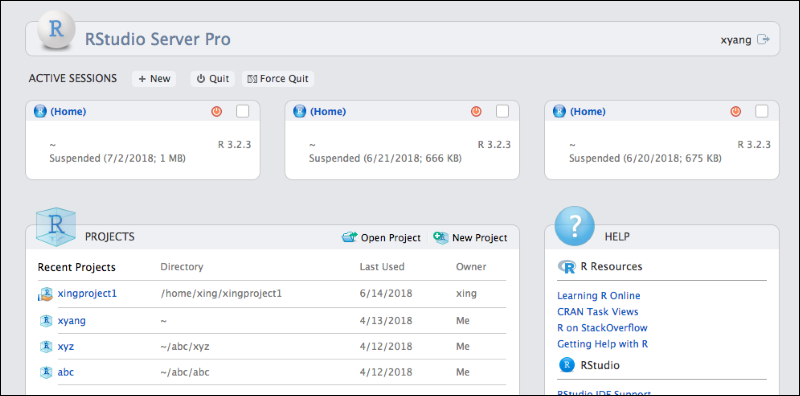

Premium RStudio Integration

Connect to RStudio

Set Up Docker with TLS

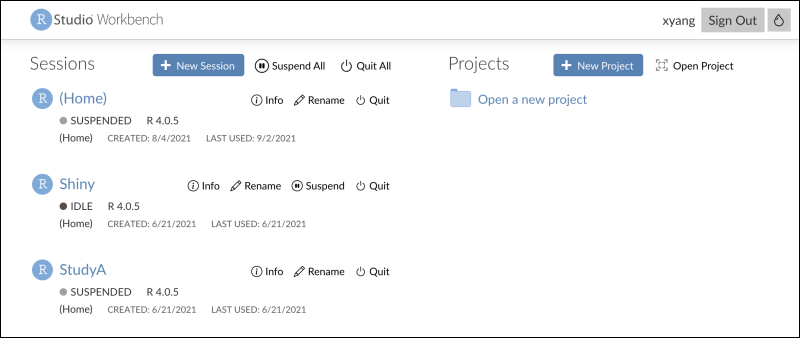

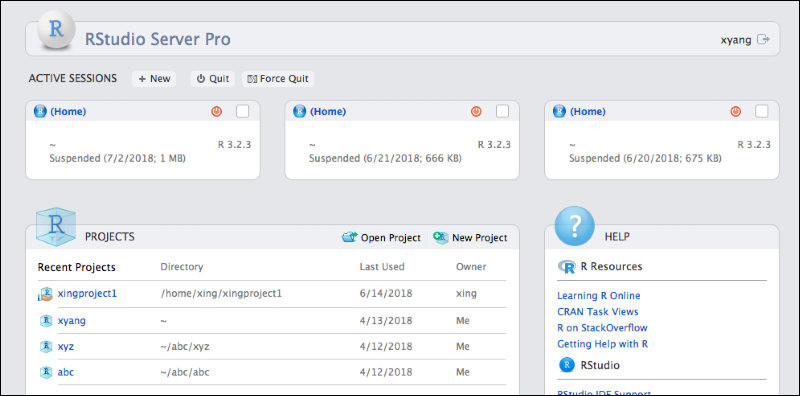

Connect to RStudio Workbench

Set Up RStudio Workbench

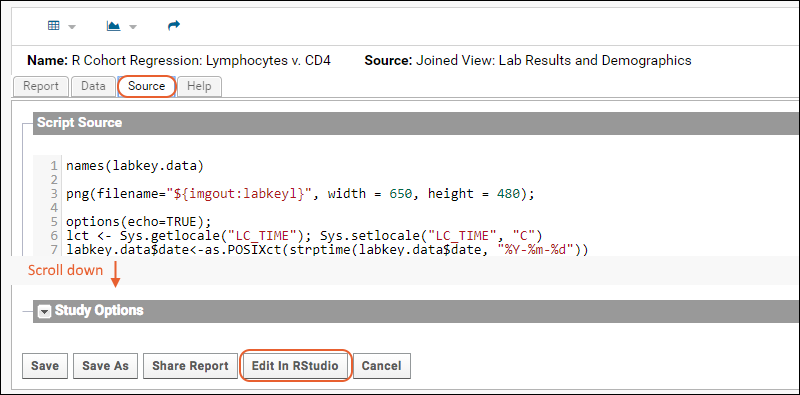

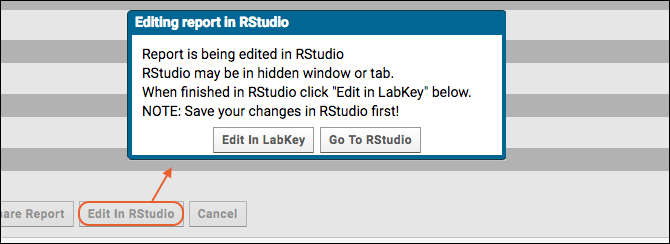

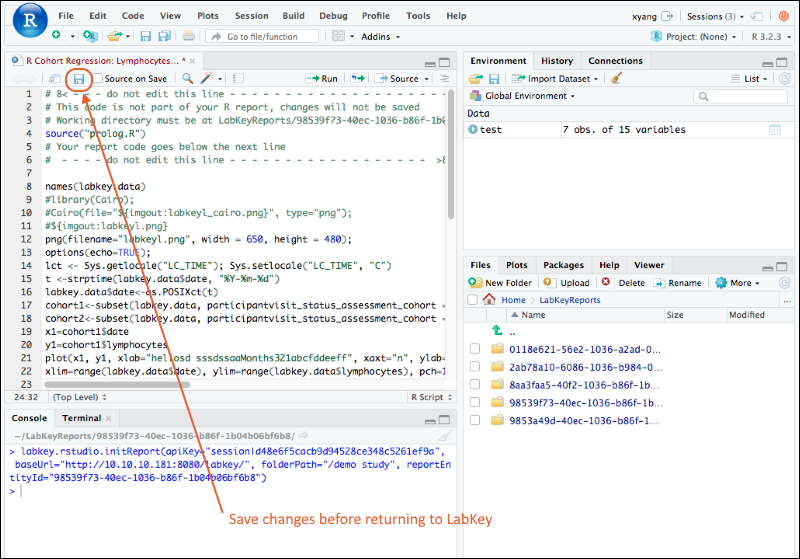

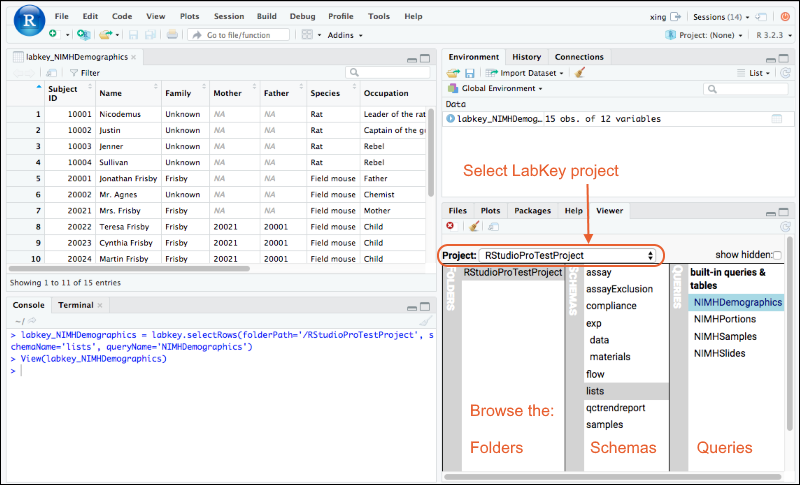

Edit R Reports in RStudio

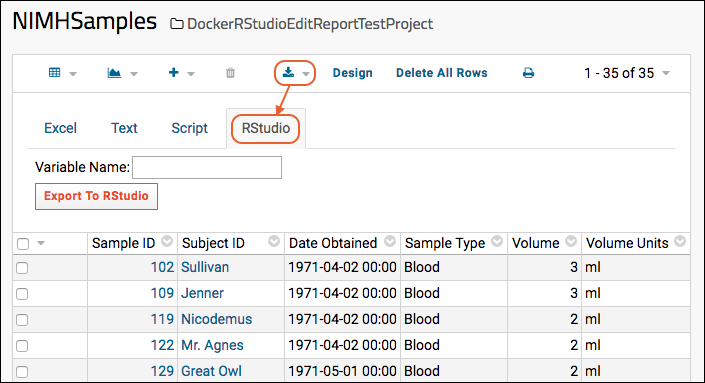

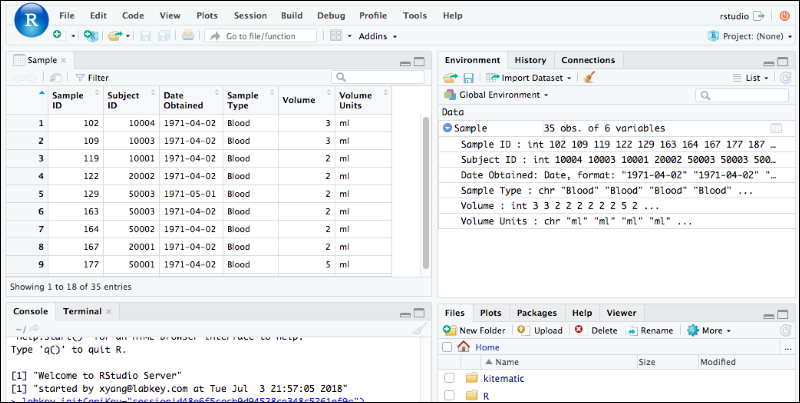

Export Data to RStudio

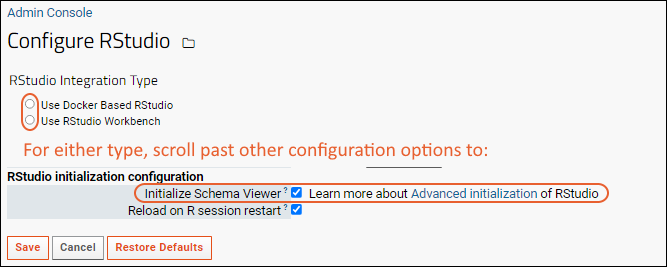

Advanced Initialization of RStudio

SQL Queries

LabKey SQL Tutorial

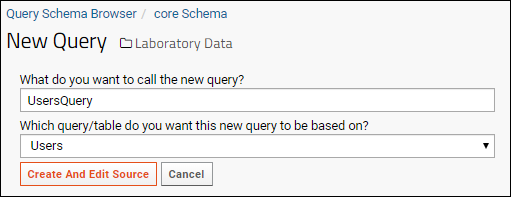

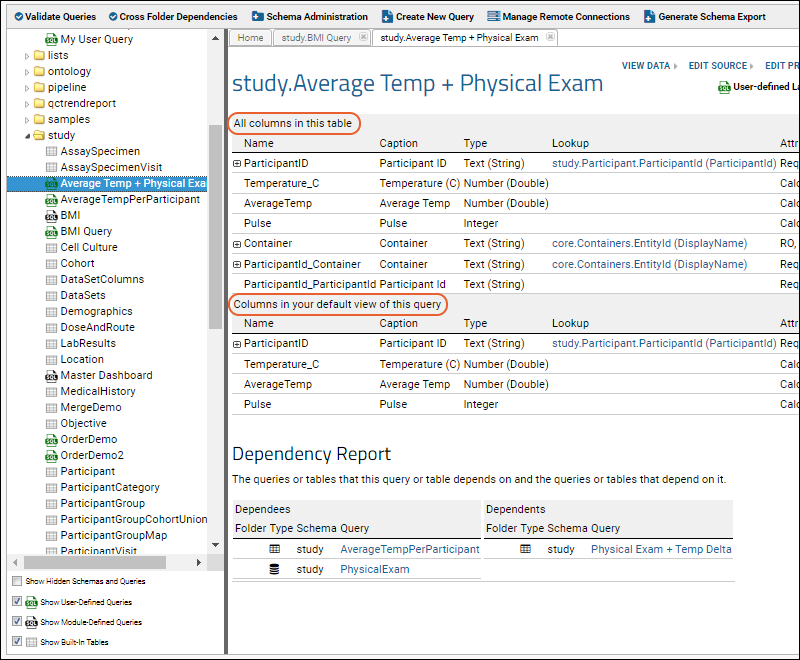

SQL Query Browser

Create a SQL Query

Edit SQL Query Source

LabKey SQL Reference

Lookups: SQL Syntax

LabKey SQL Utility Functions

Query Metadata

Query Metadata: Examples

Edit Query Properties

Trace Query Dependencies

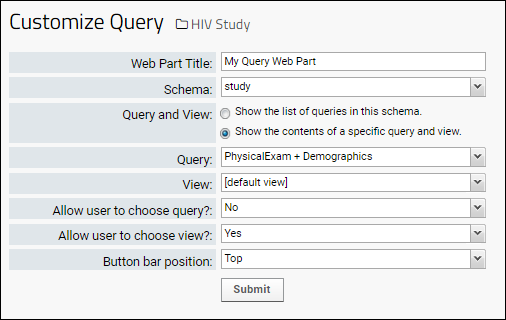

Query Web Part

LabKey SQL Examples

JOIN Queries

Calculated Columns

Premium Resource: Display Calculated Columns from Queries

Pivot Queries

Queries Across Folders

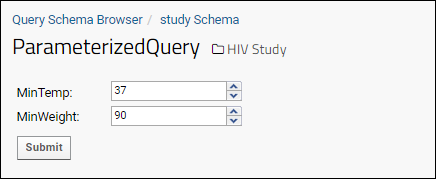

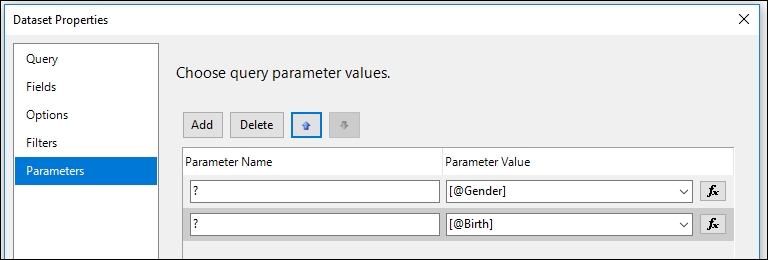

Parameterized SQL Queries

More LabKey SQL Examples

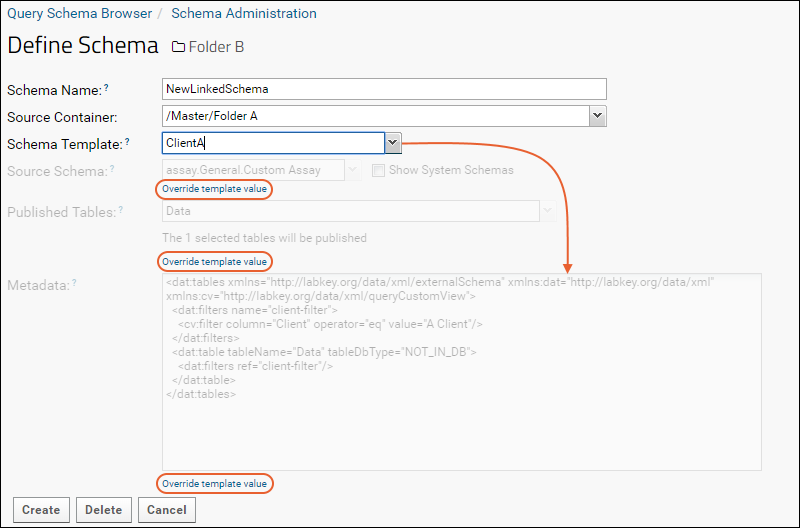

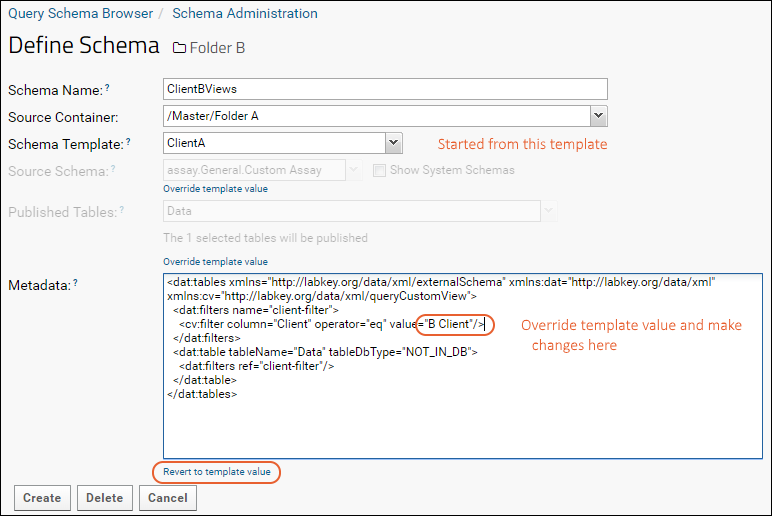

Linked Schemas and Tables

Controlling Data Scope

Ontology Integration

Load Ontologies

Concept Annotations

Ontology Column Filtering

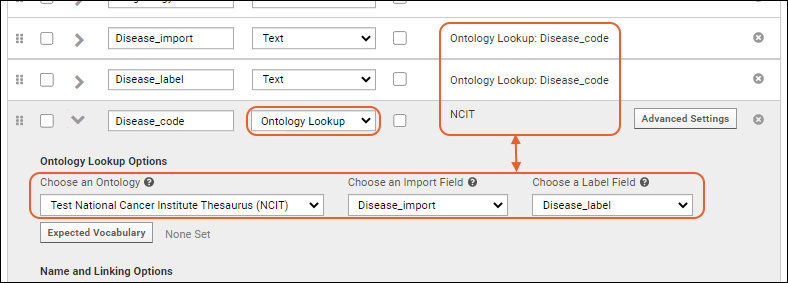

Ontology Lookup

Ontology SQL

Data Quality Control

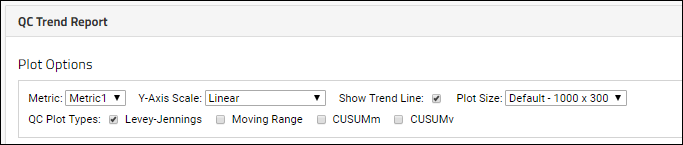

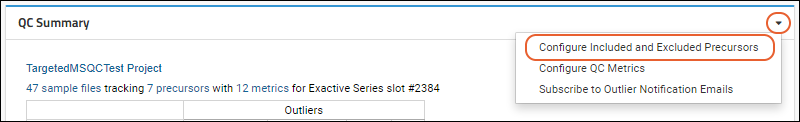

Quality Control Trend Reports

Define QC Trend Report

Use QC Trend Reports

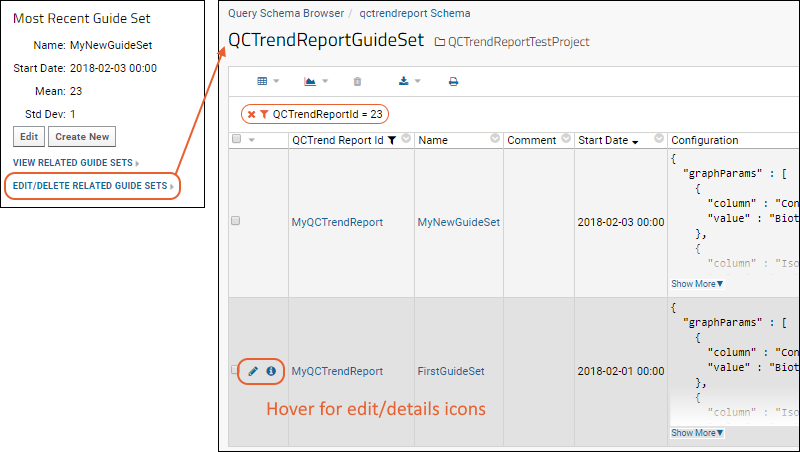

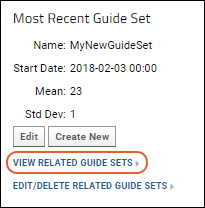

QC Trend Report Guide Sets

Search

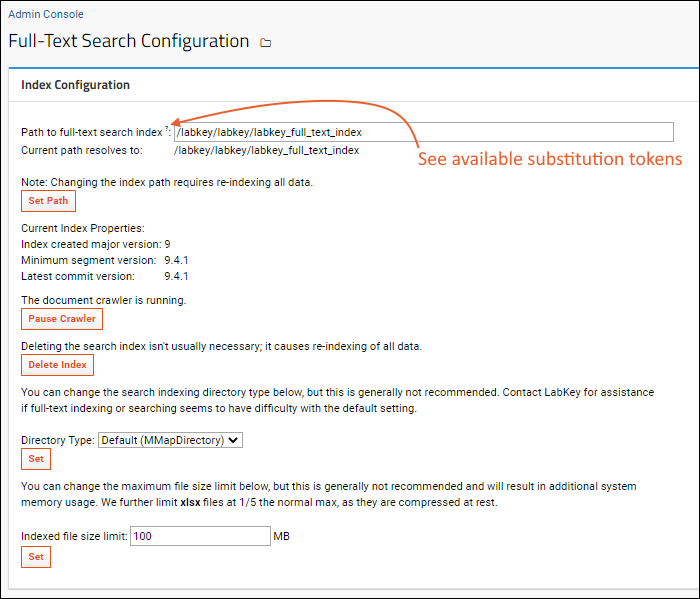

Search Administration

Integration with Spotfire

Premium Resource: Embed Spotfire Visualizations

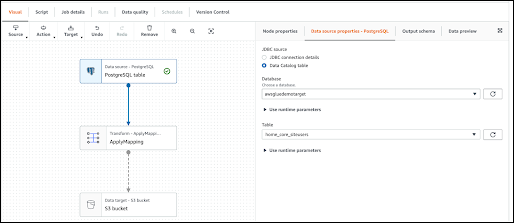

Integration with AWS Glue

LabKey Natural Language Processing (NLP)

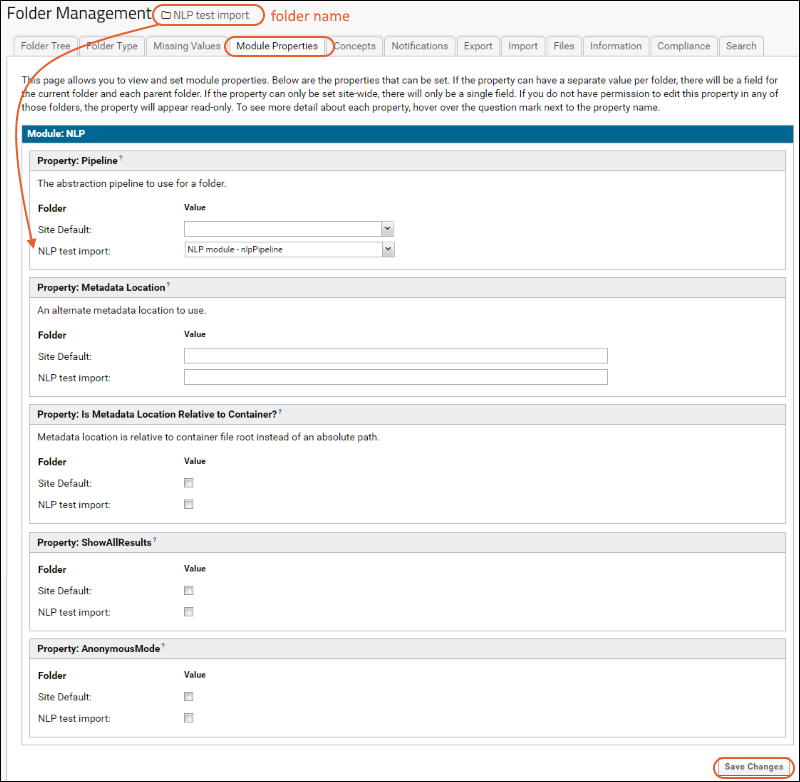

Natural Language Processing (NLP) Pipeline

Metadata JSON Files

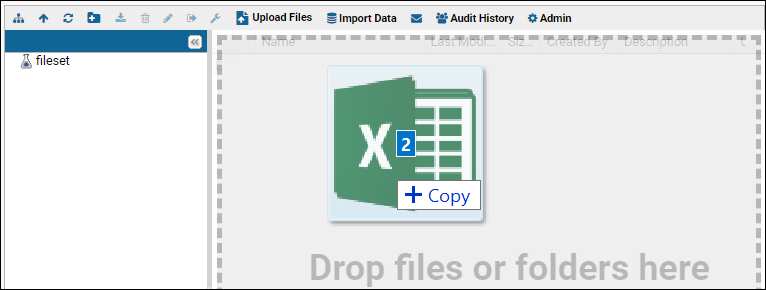

Document Abstraction Workflow

Automatic Assignment for Abstraction

Manual Assignment for Abstraction

Abstraction Task Lists

Document Abstraction

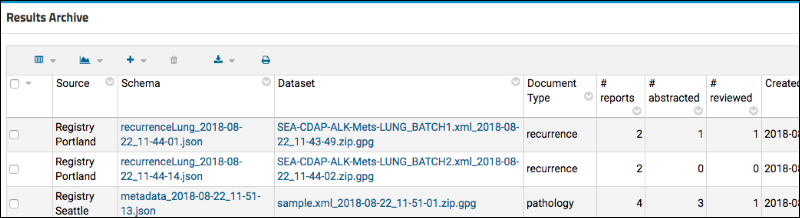

Review Document Abstraction

Review Multiple Result Sets

NLP Result Transfer

Premium Resource: Bulk Editing

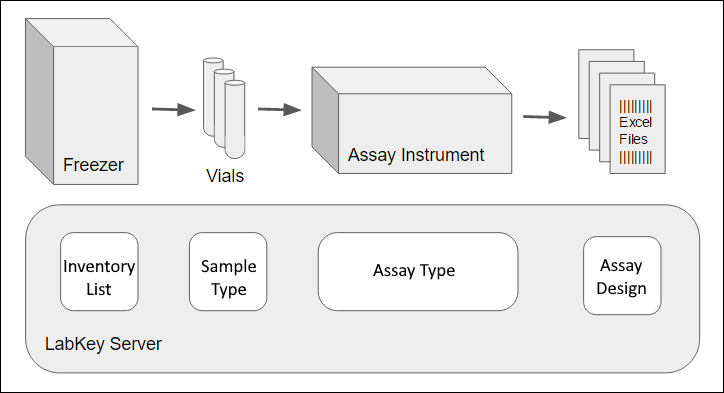

Assay Data

Tutorial: Import Experimental / Assay Data

Step 1: Assay Tutorial Setup

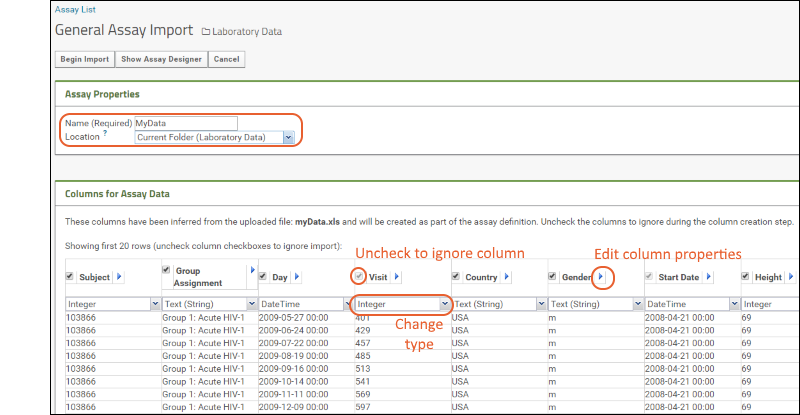

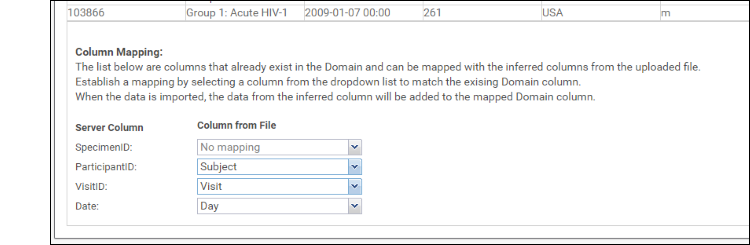

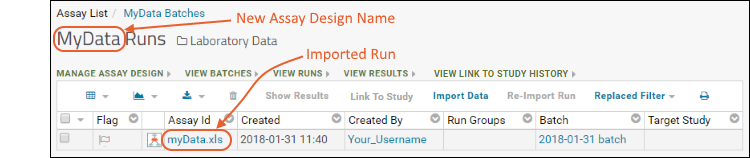

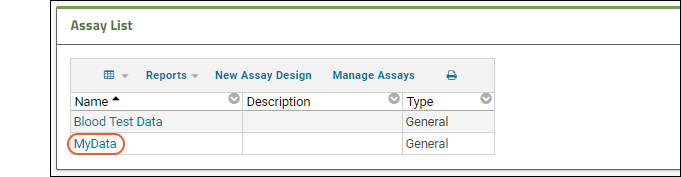

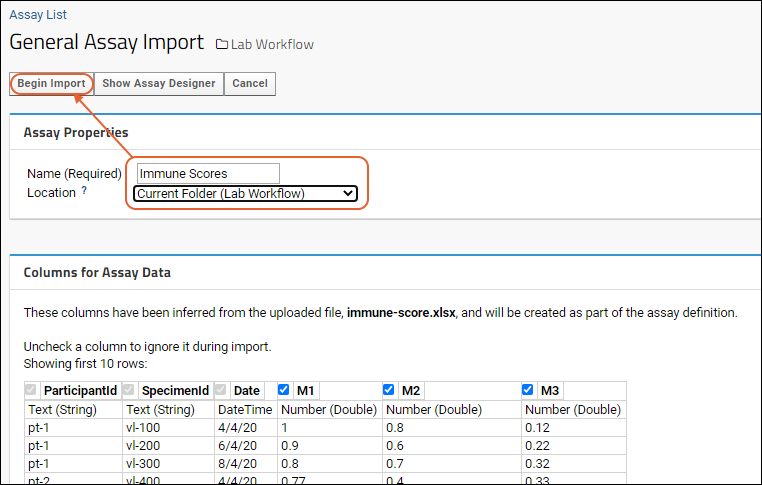

Step 2: Infer an Assay Design from Spreadsheet Data

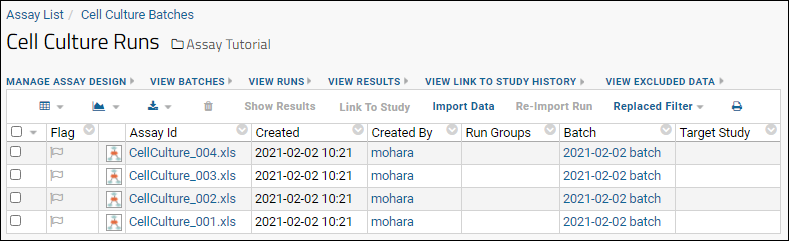

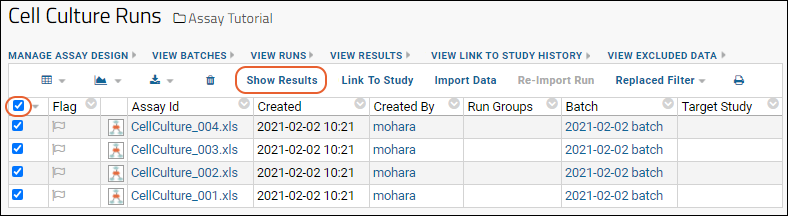

Step 3: Import Assay Data

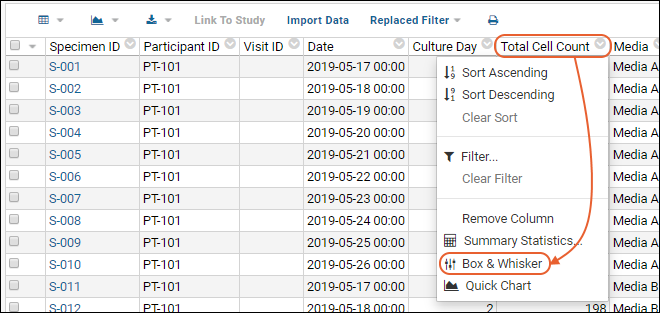

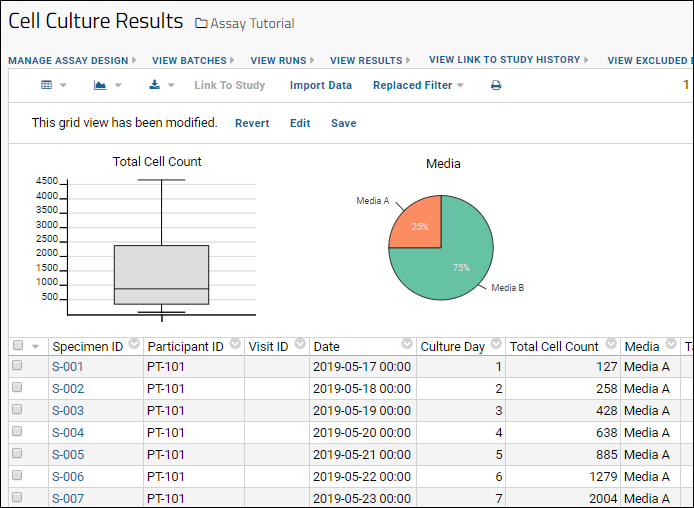

Step 4: Visualize Assay Results

Step 5: Collect Experimental Metadata

Tutorial: Assay Data Validation

Assay Administrator Guide

Set Up Folder For Assays

Design a New Assay

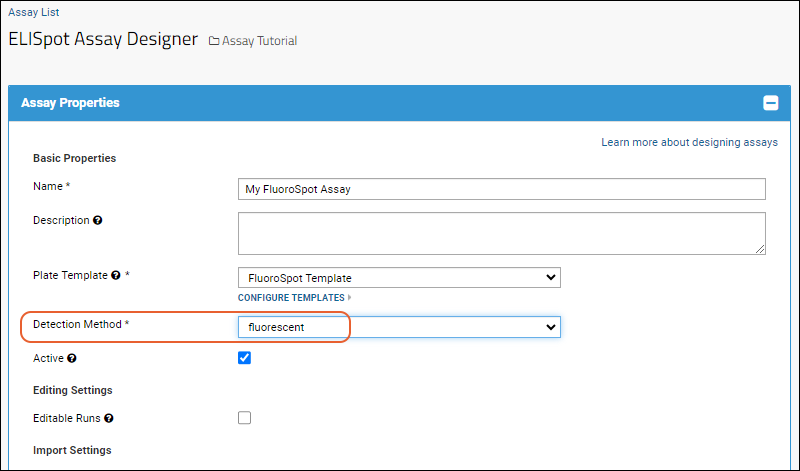

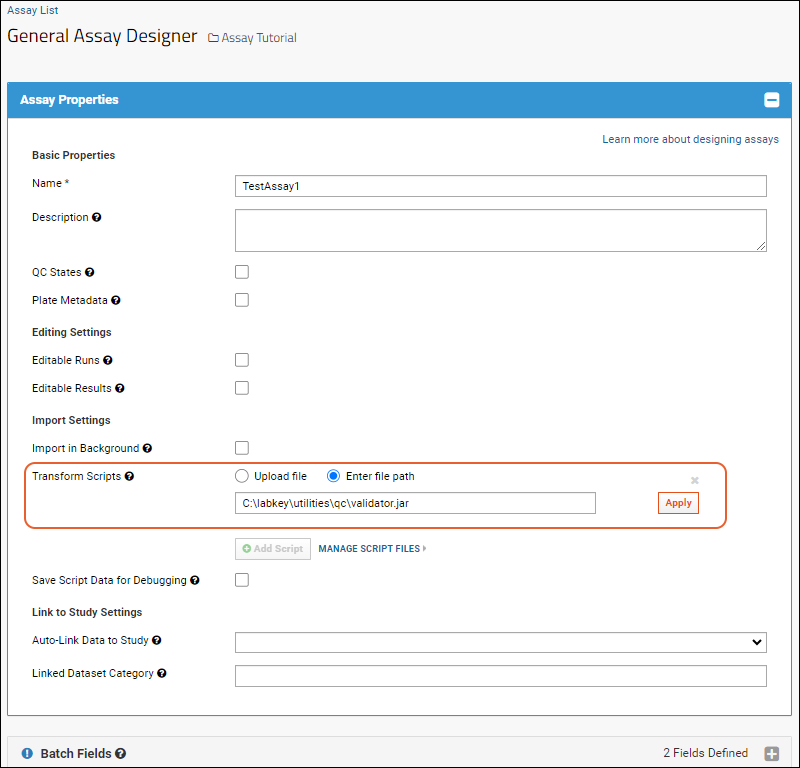

Assay Design Properties

Design a Plate-Based Assay

Customize Plate Templates

Specialty Plate-Based Assays

Participant/Visit Resolver Field

Manage an Assay Design

Export/Import Assay Design

Assay QC States: Admin Guide

Improve Data Entry Consistency & Accuracy

Assay Transform Script

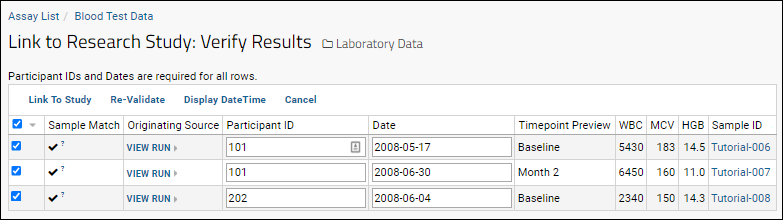

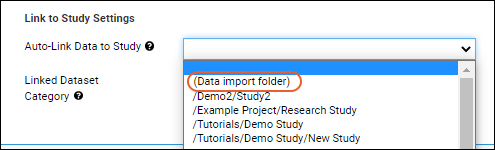

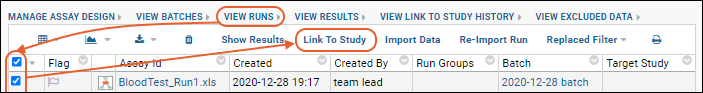

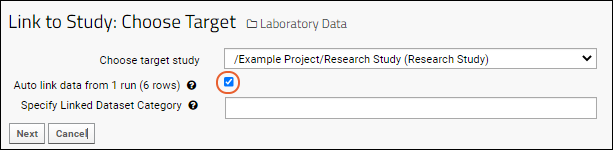

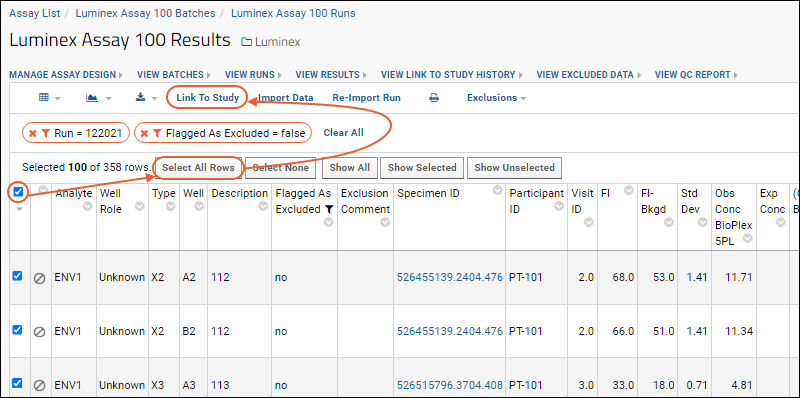

Link Assay Data into a Study

Link-To-Study History

Assay Feature Matrix

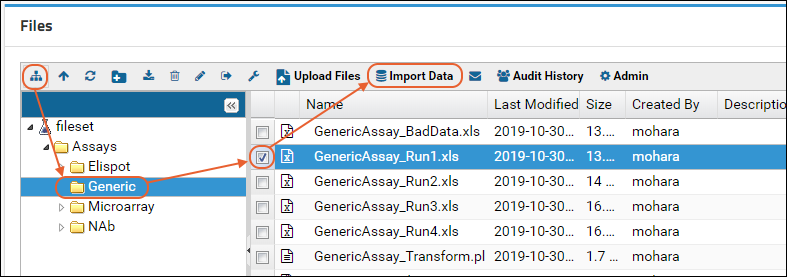

Assay User Guide

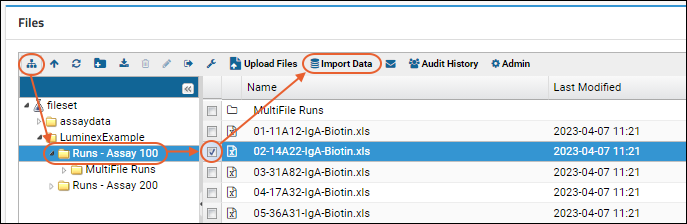

Import Assay Runs

Multi-File Assay Runs

Work with Assay Runs

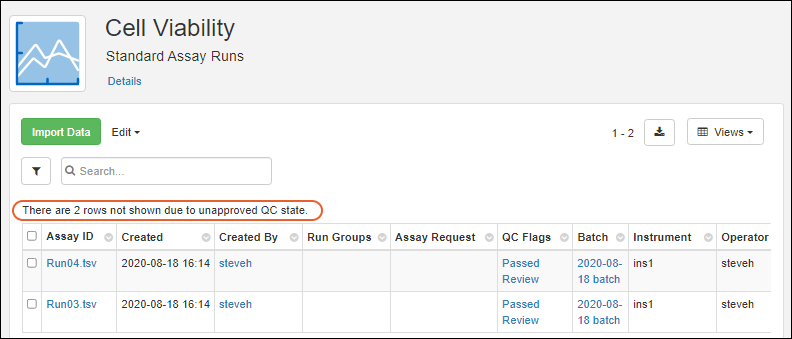

Assay QC States: User Guide

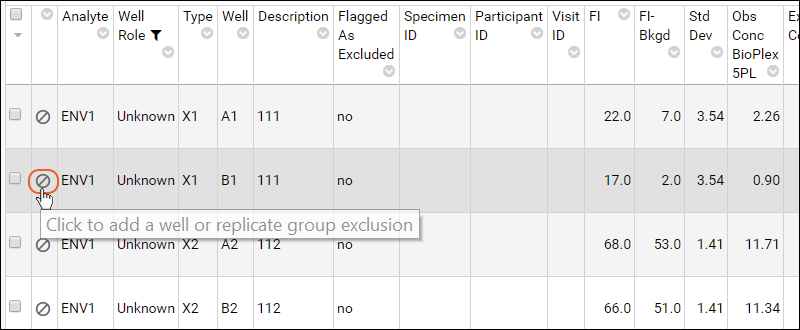

Exclude Assay Data

Re-import Assay Runs

Export Assay Data

Assay Terminology

ELISA Assay

Tutorial: ELISA Assay

ELISA Run Details View

ELISA Assay Reference

Enhanced ELISA Assay Support

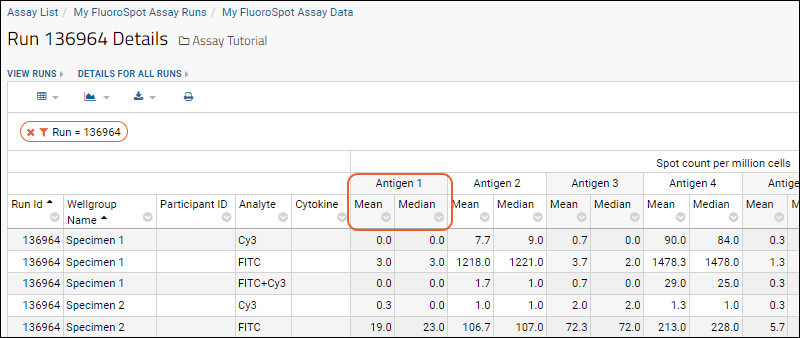

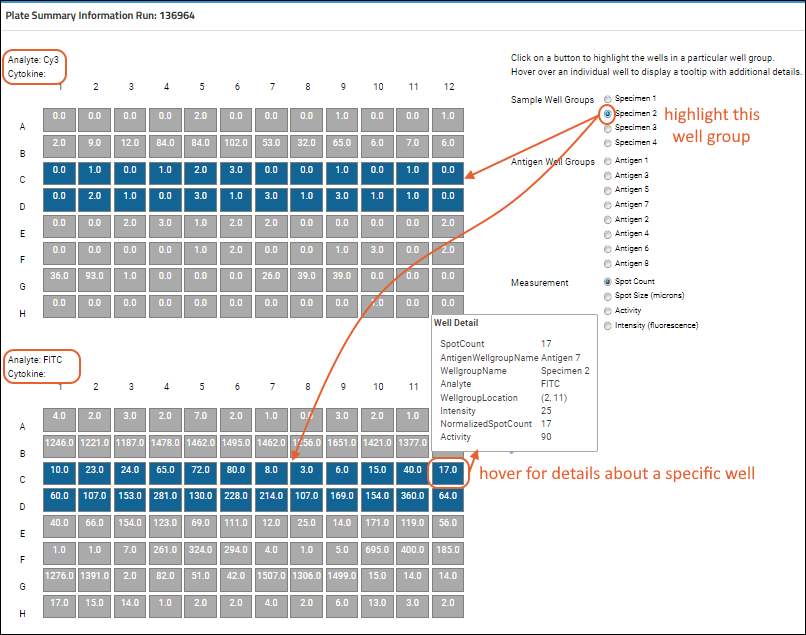

ELISpot Assay

Tutorial: ELISpot Assay Tutorial

Import ELISpot Data

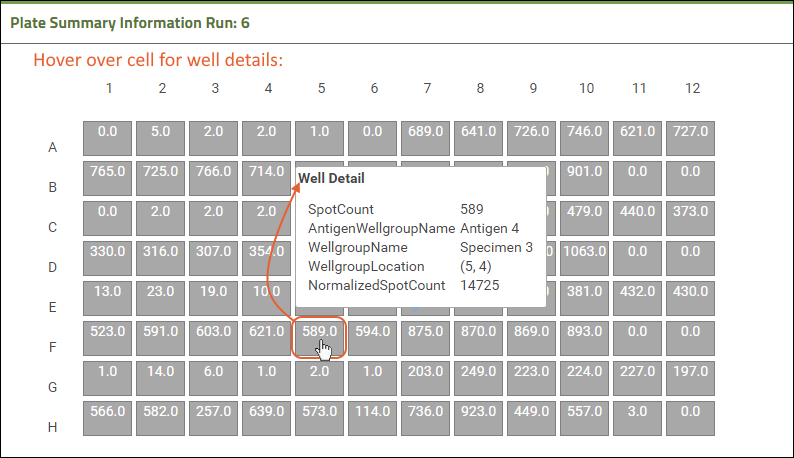

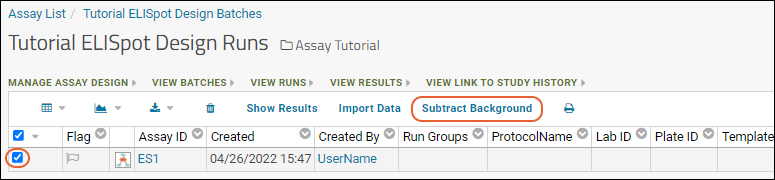

Review ELISpot Data

ELISpot Properties and Fields

Flow Cytometry

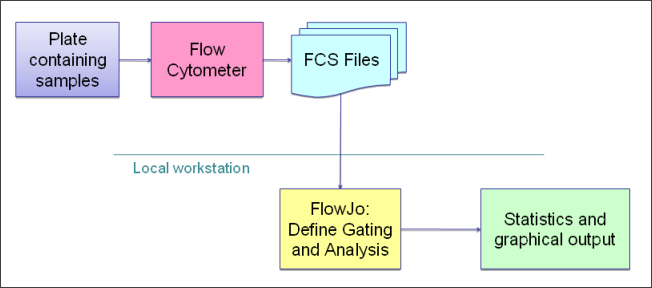

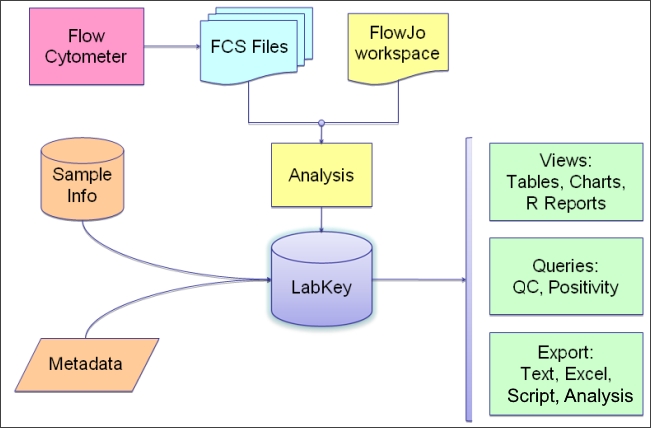

Flow Cytometry Overview

LabKey Flow Module

Set Up a Flow Folder

Tutorial: Explore a Flow Workspace

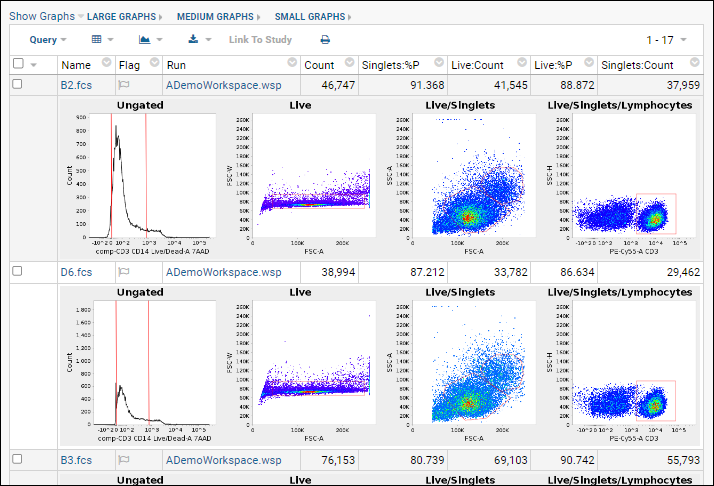

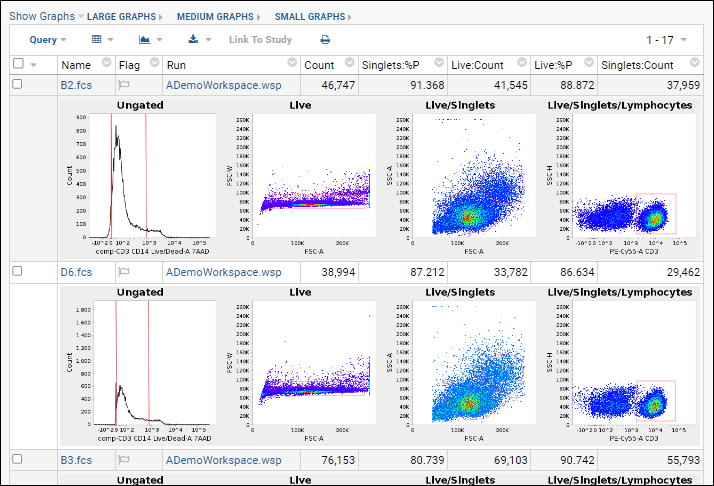

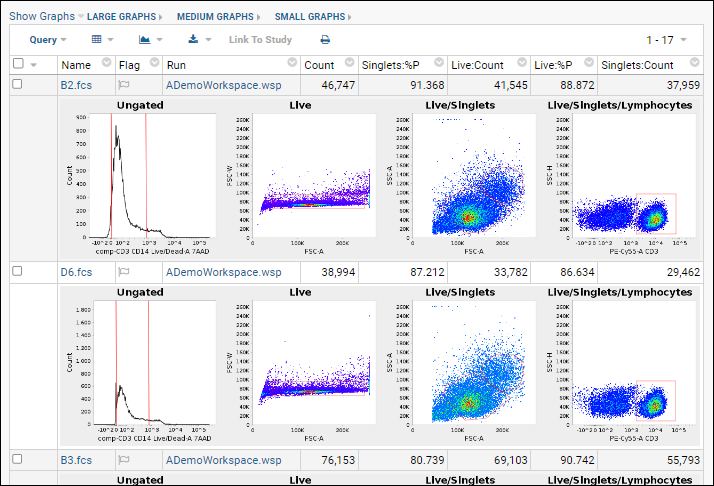

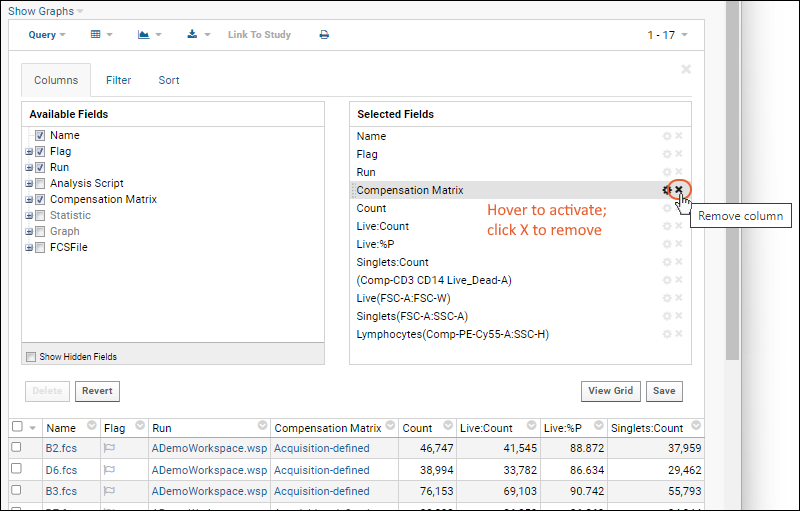

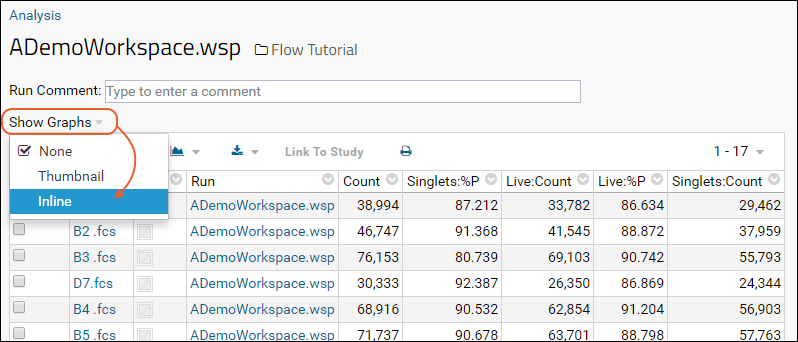

Step 1: Customize Your Grid View

Step 2: Examine Graphs

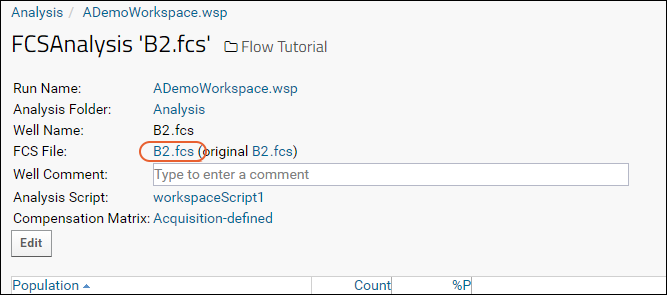

Step 3: Examine Well Details

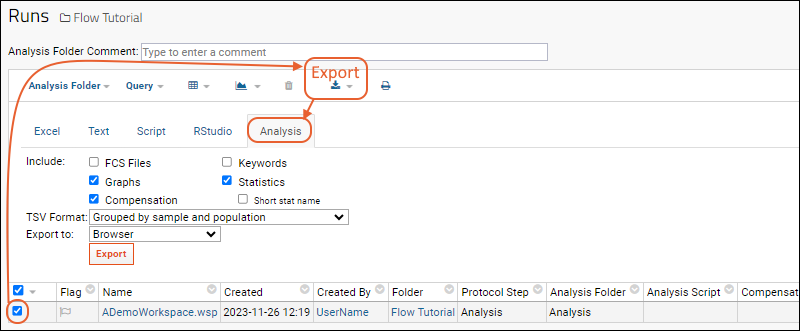

Step 4: Export Flow Data

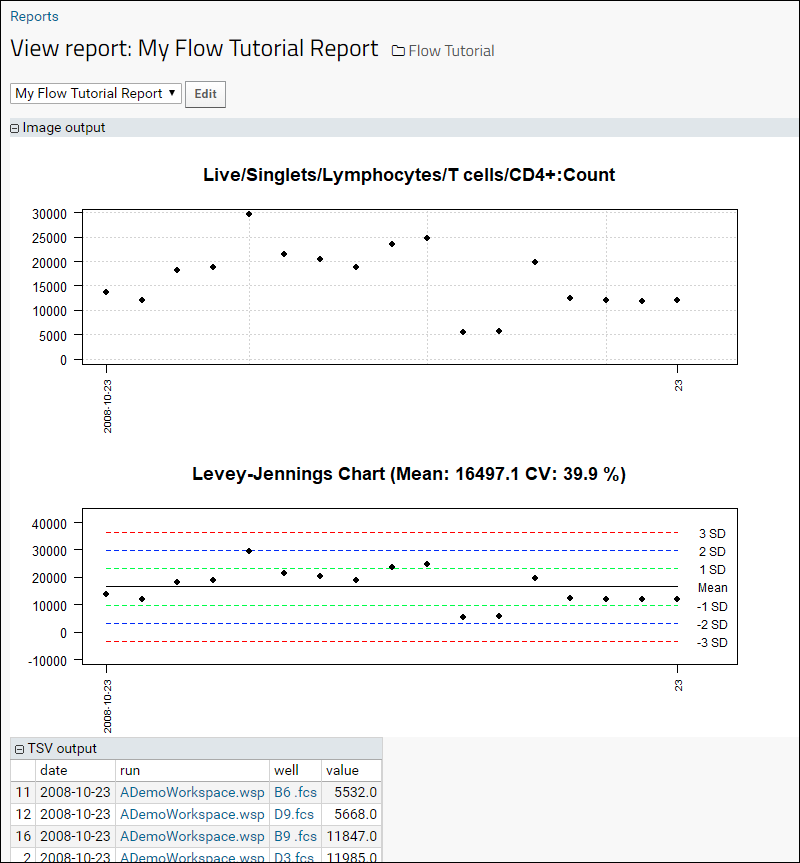

Step 5: Flow Quality Control

Tutorial: Set Flow Background

Import a Flow Workspace and Analysis

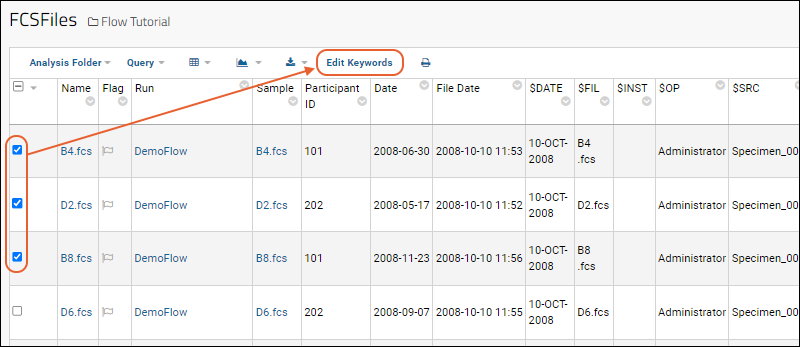

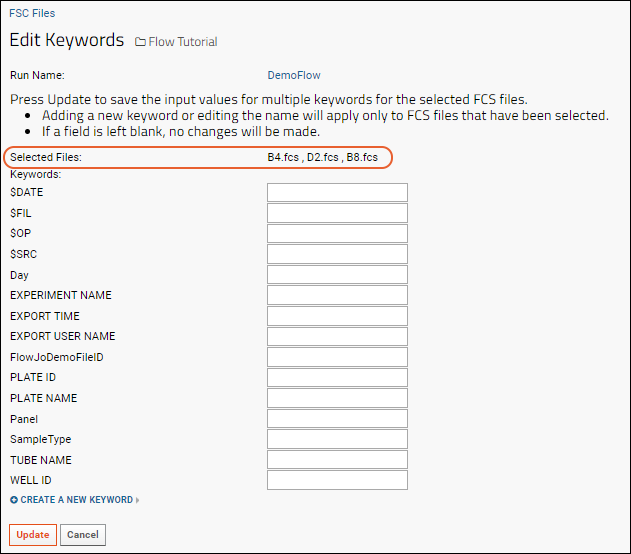

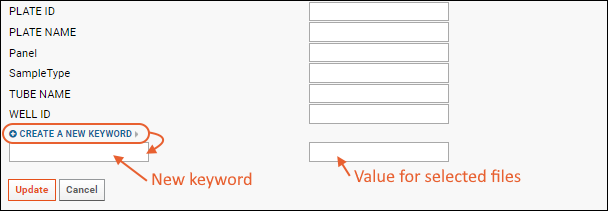

Edit Keywords

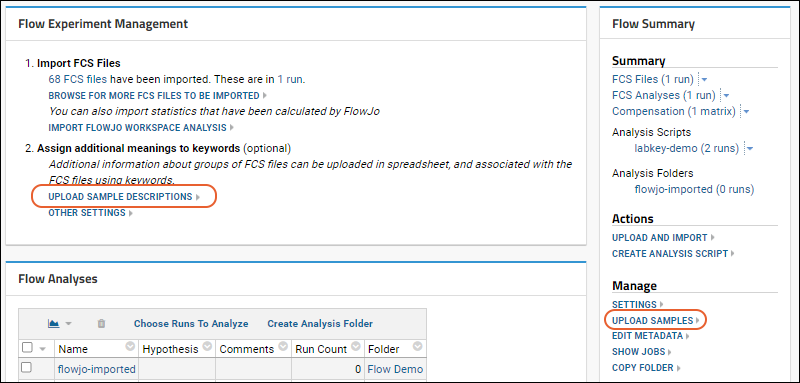

Add Sample Descriptions

Add Statistics to FCS Queries

Flow Module Schema

Analysis Archive Format

Add Flow Data to a Study

FCS keyword utility

FluoroSpot Assay

Luminex

Luminex Assay Tutorial Level I

Set Up Luminex Tutorial Folder

Step 1: Create a New Luminex Assay Design

Step 2: Import Luminex Run Data

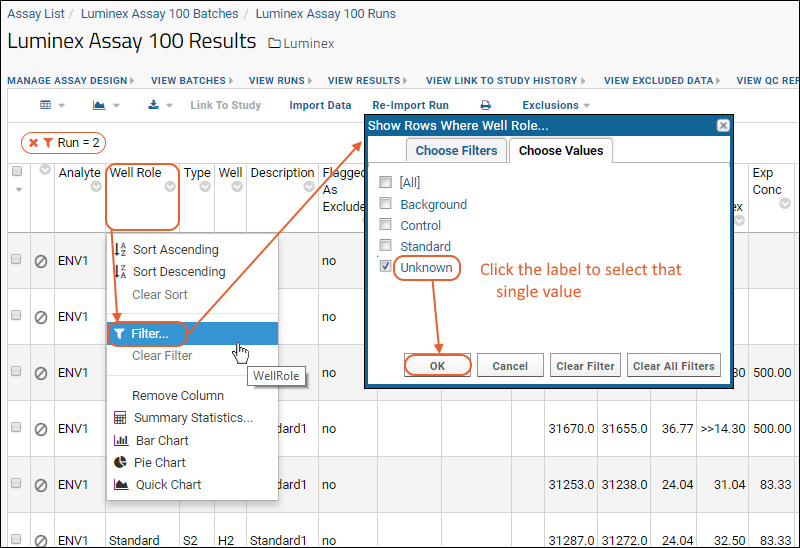

Step 3: Exclude Analytes for QC

Step 4: Import Multi-File Runs

Step 5: Link Luminex Data to Study

Luminex Assay Tutorial Level II

Step 1: Import Lists and Assay Archives

Step 2: Configure R, Packages and Script

Step 3: Import Luminex Runs

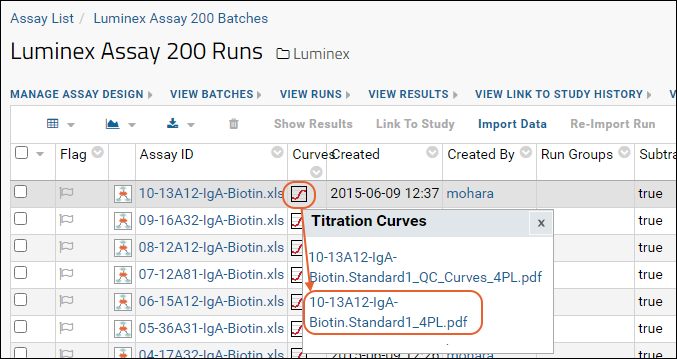

Step 4: View 4pl Curve Fits

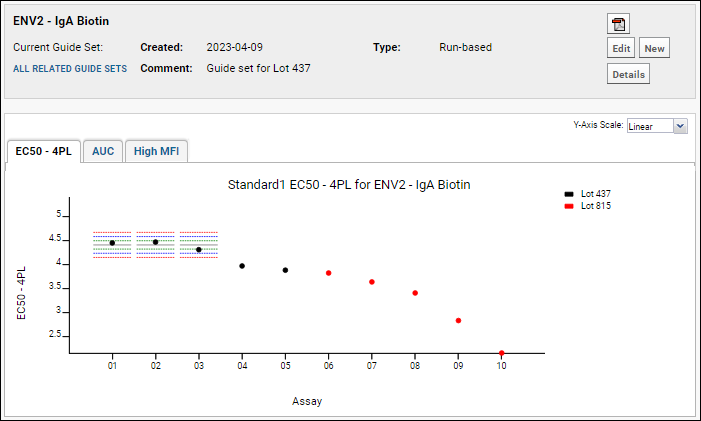

Step 5: Track Analyte Quality Over Time

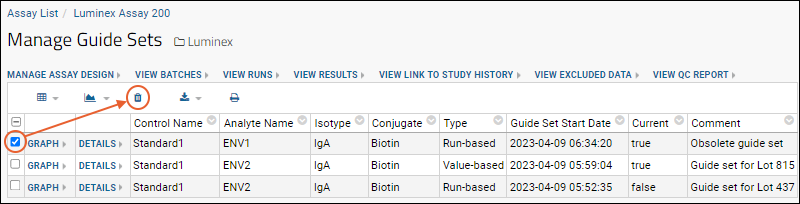

Step 6: Use Guide Sets for QC

Step 7: Compare Standard Curves Across Runs

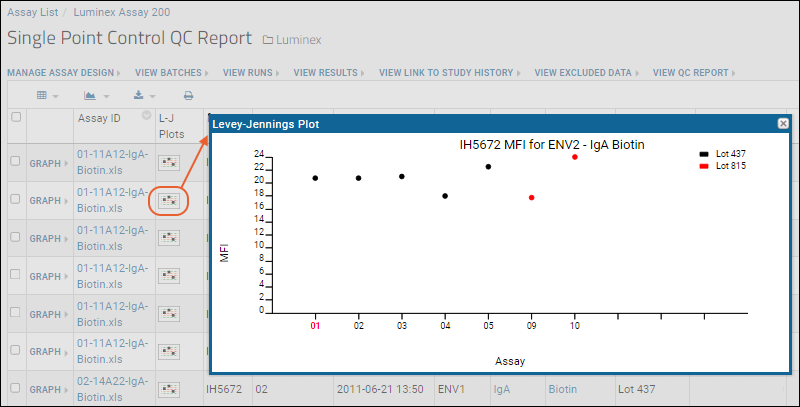

Track Single-Point Controls in Levey-Jennings Plots

Luminex Calculations

Luminex QC Reports and Flags

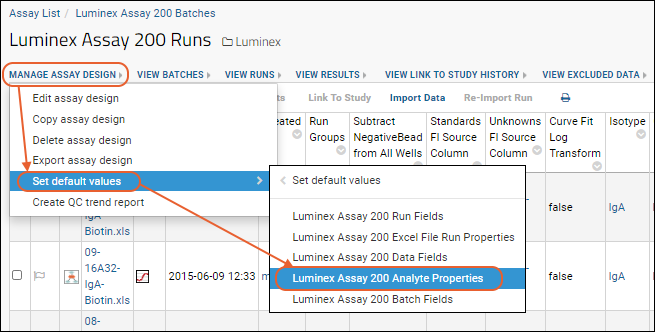

Luminex Reference

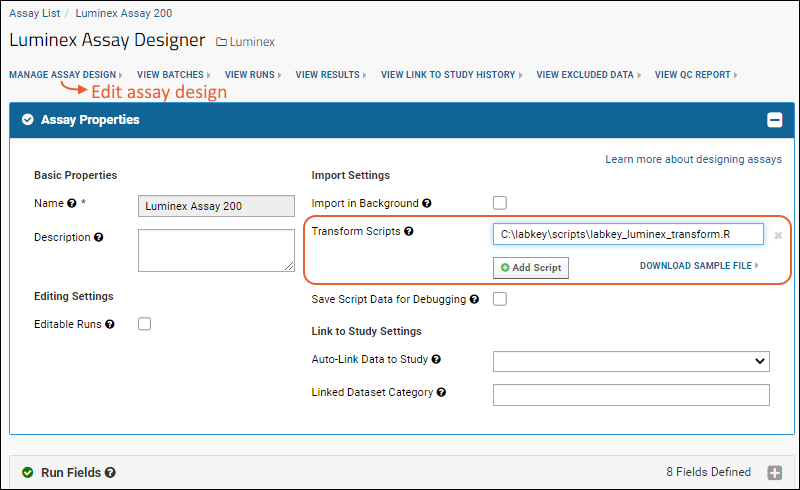

Review Luminex Assay Design

Luminex Properties

Luminex File Formats

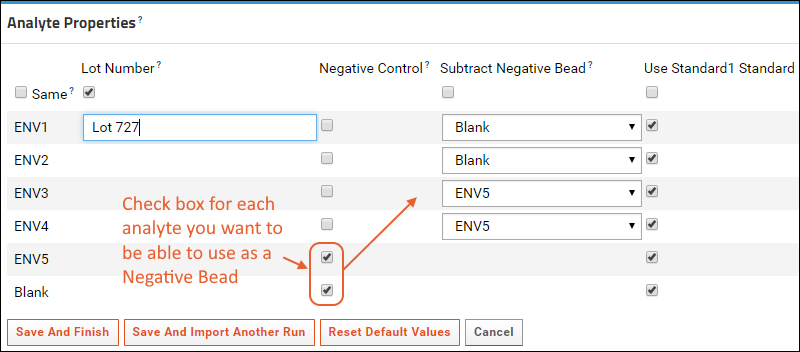

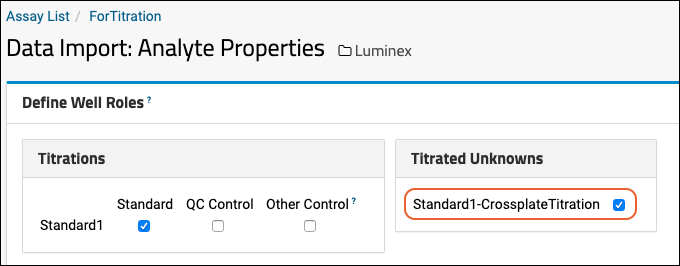

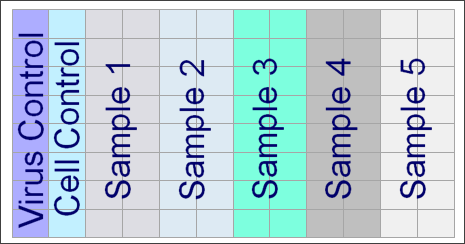

Review Well Roles

Luminex Conversions

Customize Luminex Assay for Script

Review Fields for Script

Troubleshoot Luminex Transform Scripts and Curve Fit Results

Mass Spectrometry

NAb (Neutralizing Antibody) Assays

Tutorial: NAb Assay

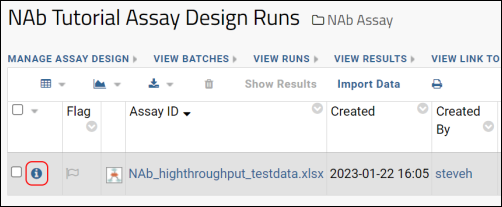

Step 1: Create a NAb Assay Design

Step 2: Import NAb Assay Data

Step 3: View High-Throughput NAb Data

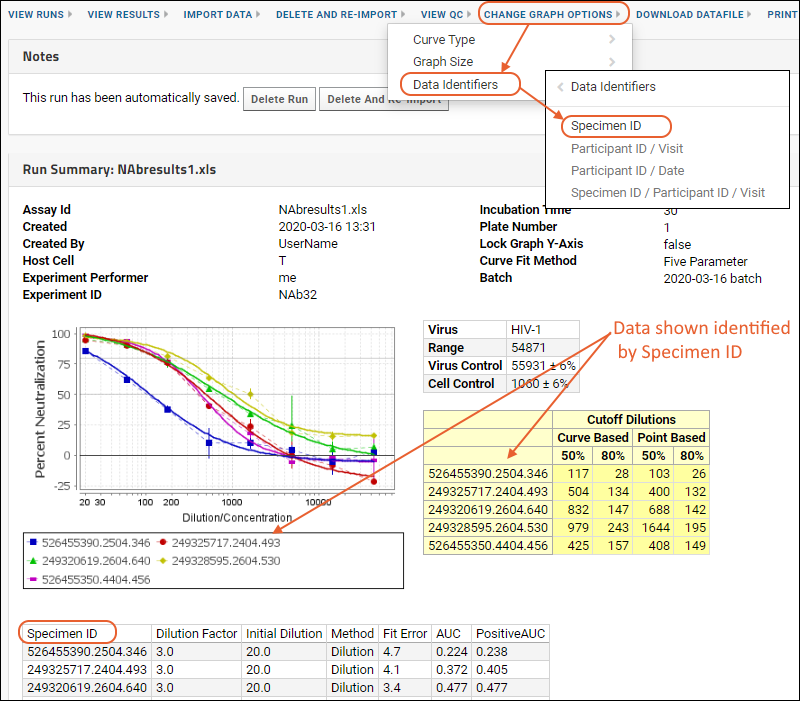

Step 4: Explore NAb Graph Options

Work with Low-Throughput NAb Data

Use NAb Data Identifiers

NAb Assay QC

Work with Multiple Viruses per Plate

NAb Plate File Formats

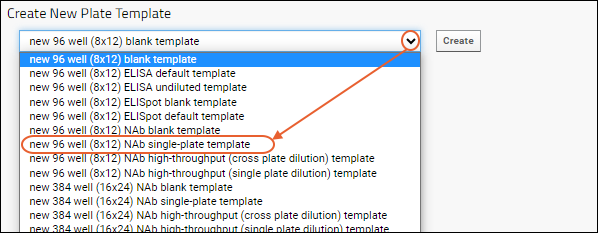

Customize NAb Plate Template

NAb Assay Reference

Assay Request Tracker

Premium Resource: Using the Assay Request Tracker

Premium Resource: Assay Request Tracker Administration

Experiment Framework

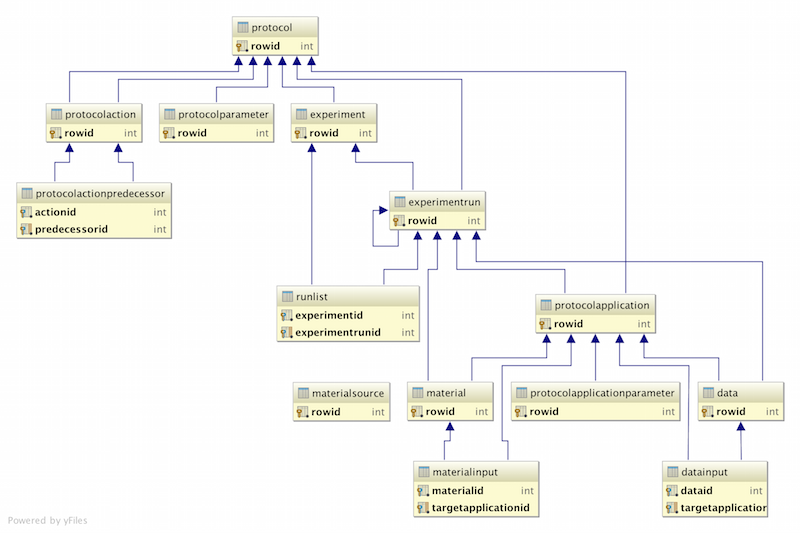

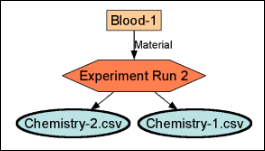

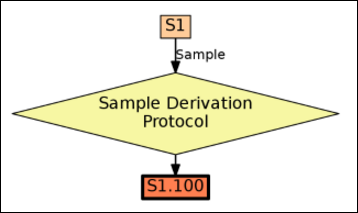

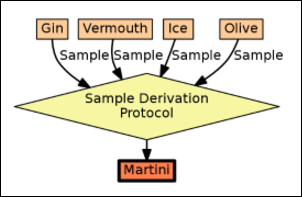

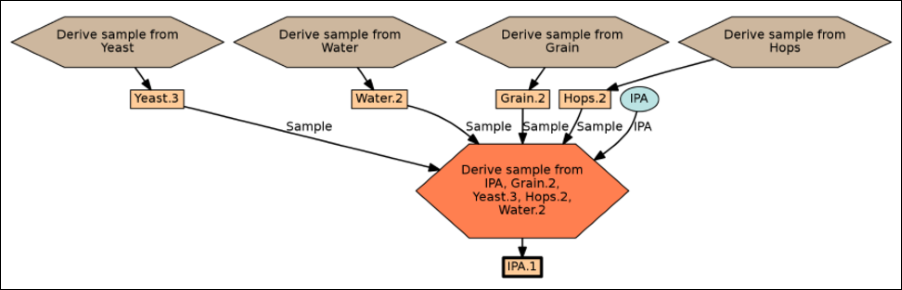

Experiment Terminology

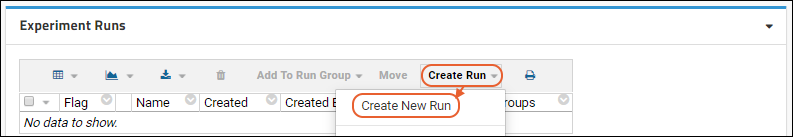

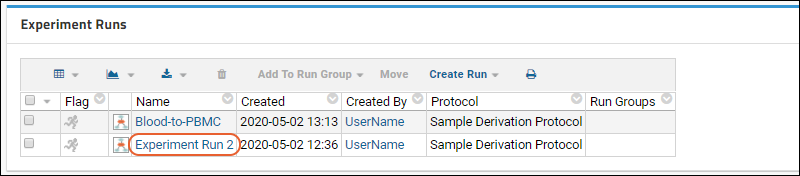

Experiment Runs

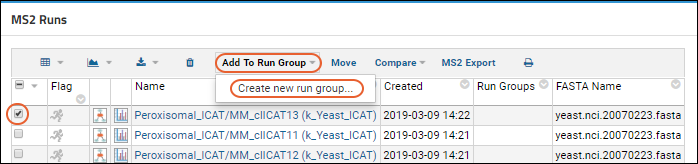

Run Groups

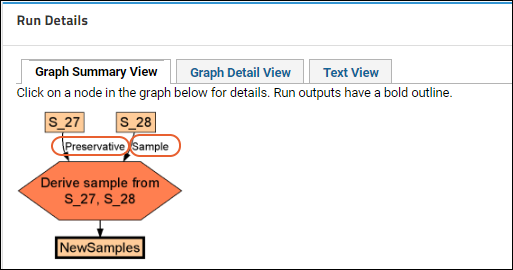

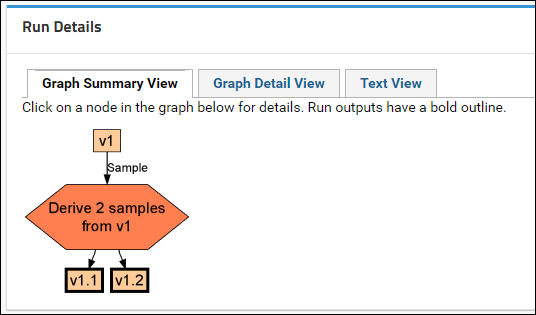

Experiment Lineage Graphs

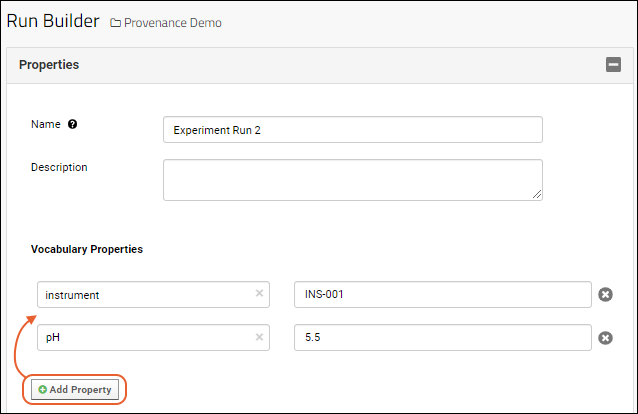

Provenance Module: Run Builder

Life Science Identifiers (LSIDs)

LSID Substitution Templates

Record Lab Workflow

Tutorial: Lab Workflow Folder

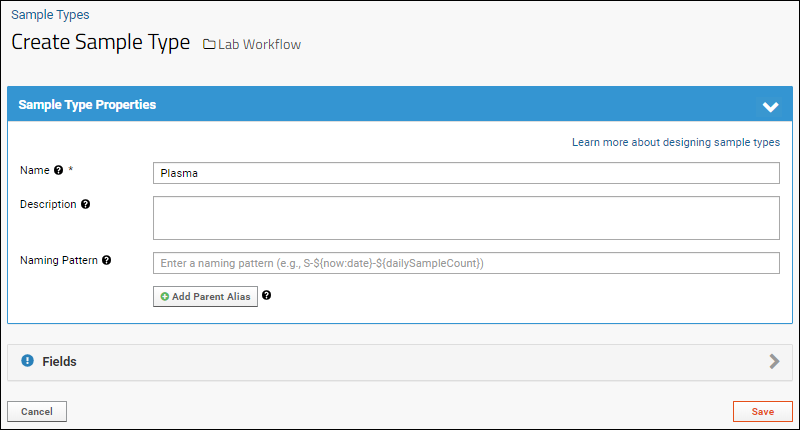

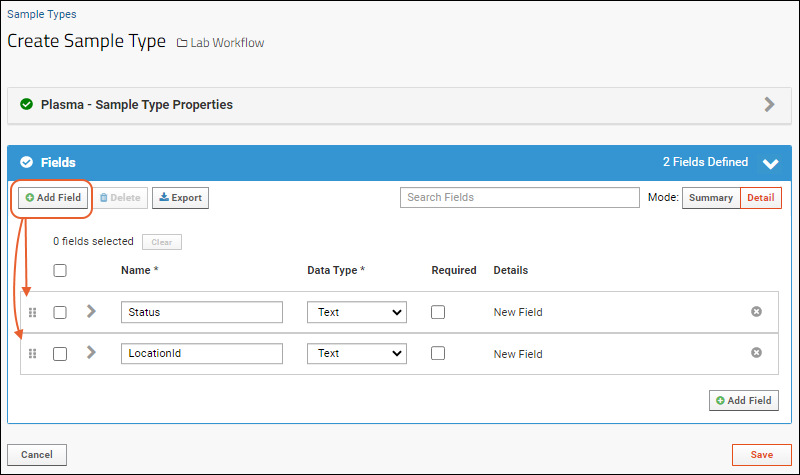

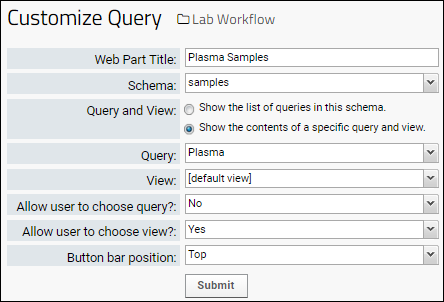

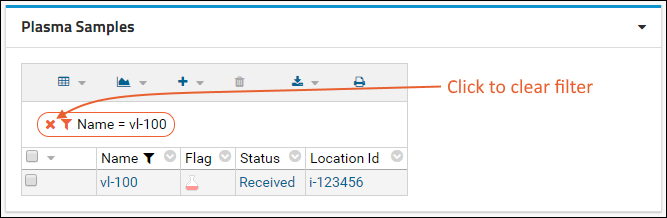

Step 1: Create the User Interface

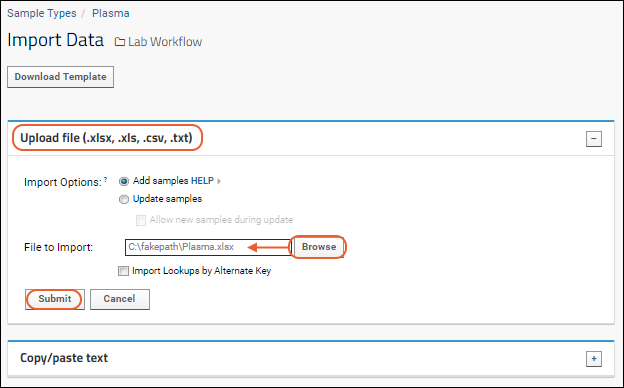

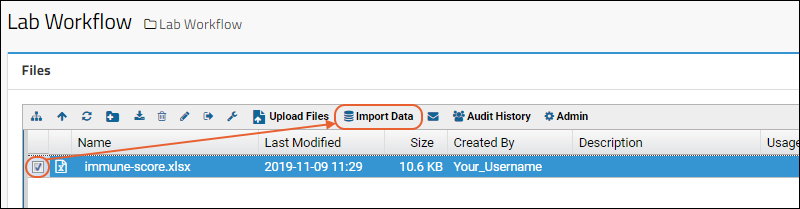

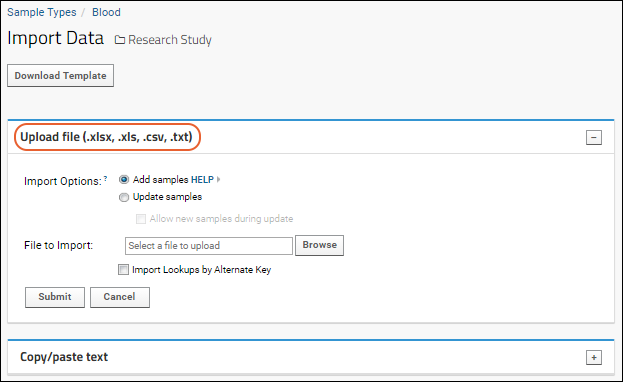

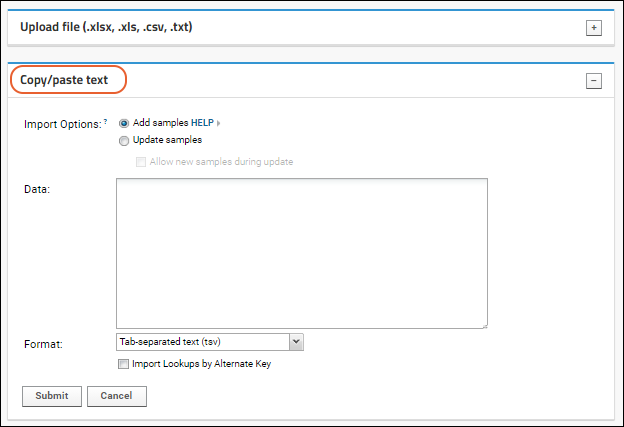

Step 2: Import Lab Data

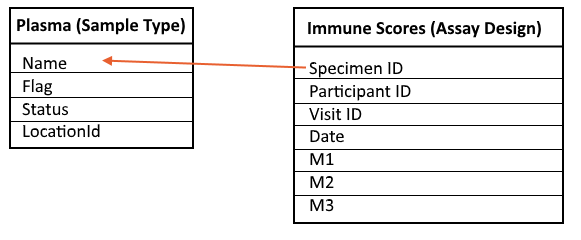

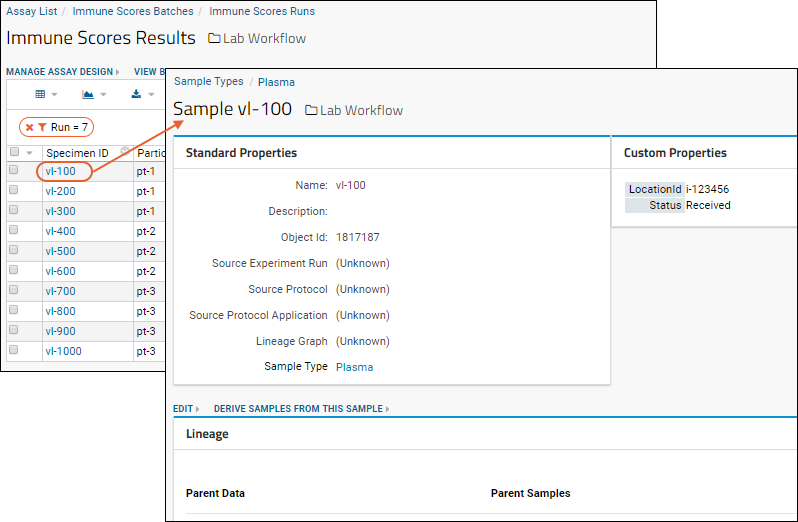

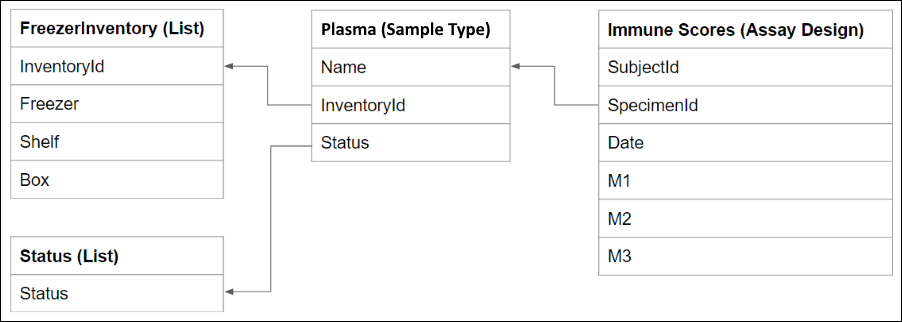

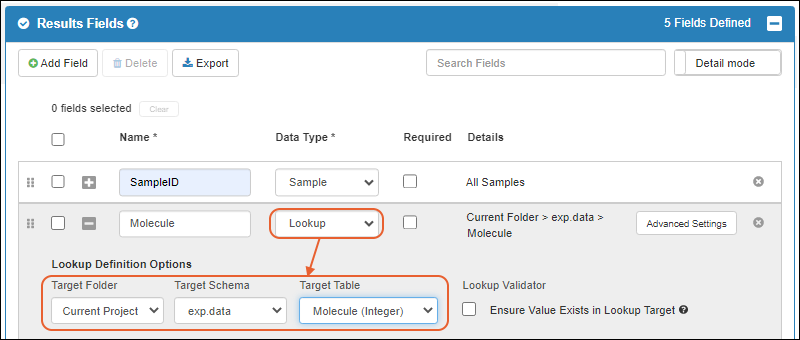

Step 3: Create a Lookup from Assay Data to Samples

Step 4: Using and Extending the Lab Workspace

Reagent Module

Samples

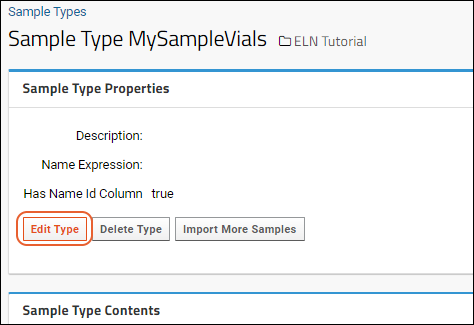

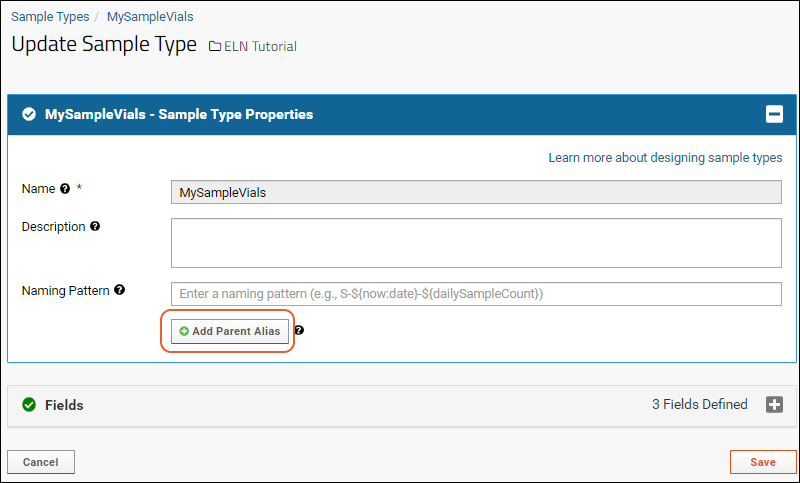

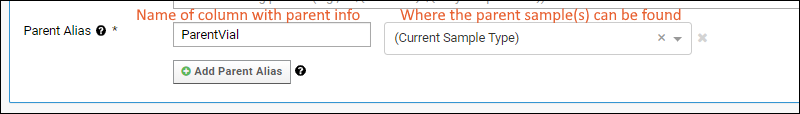

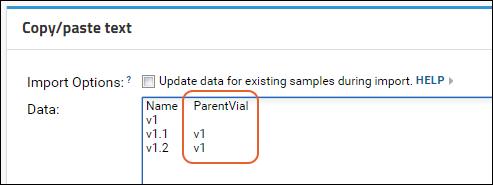

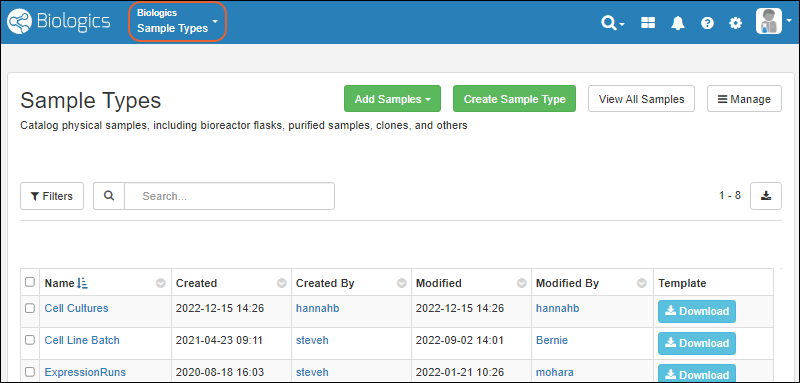

Create Sample Type

Sample Naming Patterns

Aliquot Naming Patterns

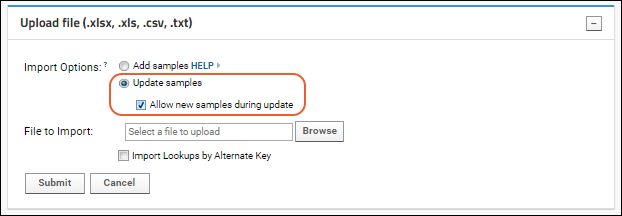

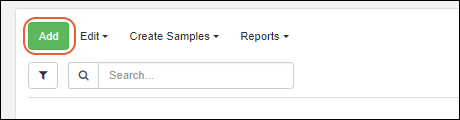

Add Samples

Premium Resource: Split Large Sample Upload

Manage Sample Types and Samples

Link Assay Data to Samples

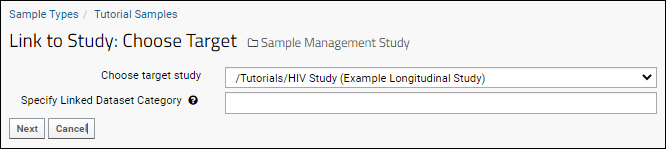

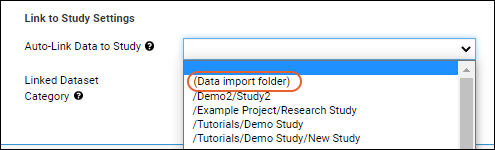

Link Sample Data to Study

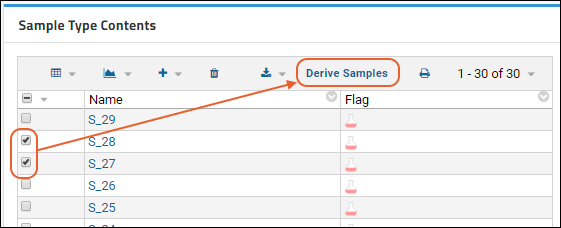

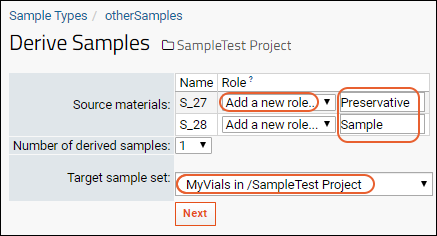

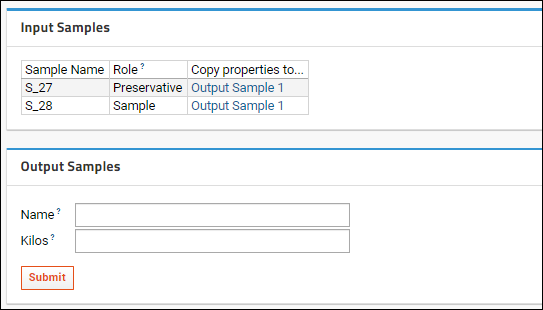

Sample Parents: Derivation and Lineage

Sample Types: Examples

Barcode Fields

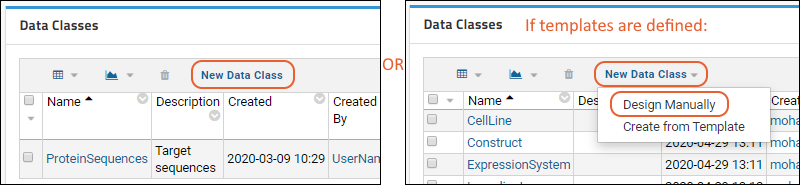

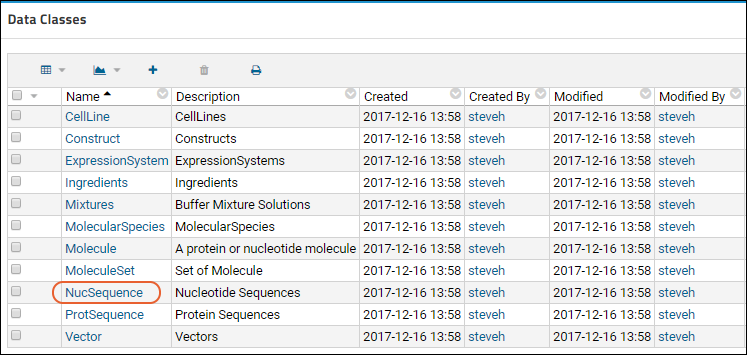

Data Classes

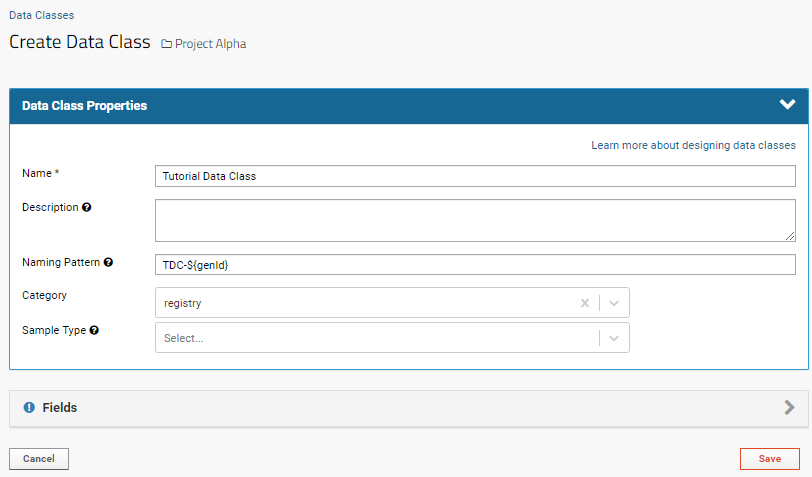

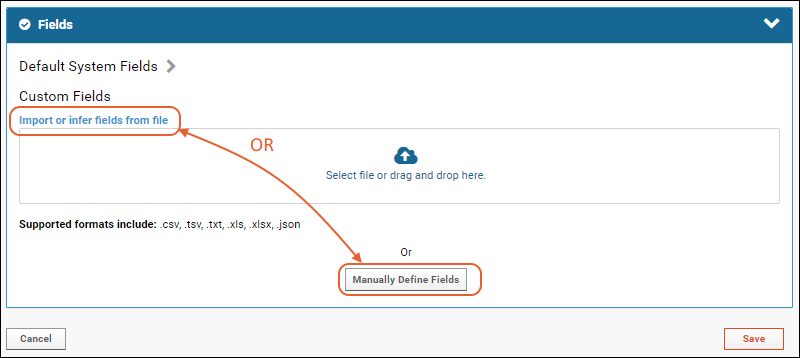

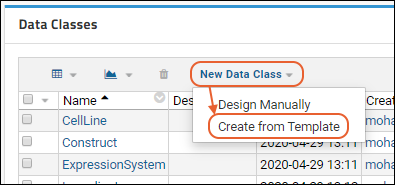

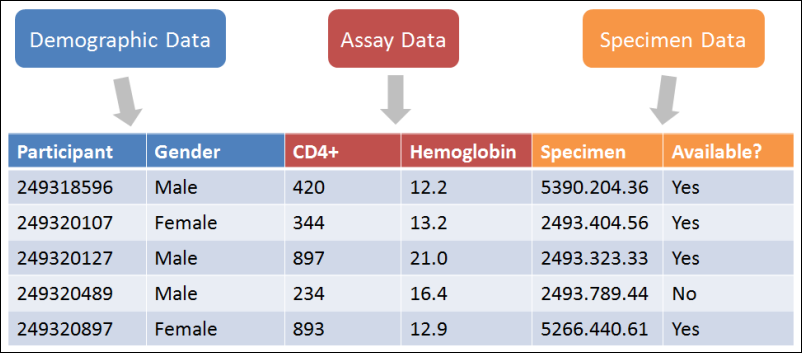

Create Data Class

Studies

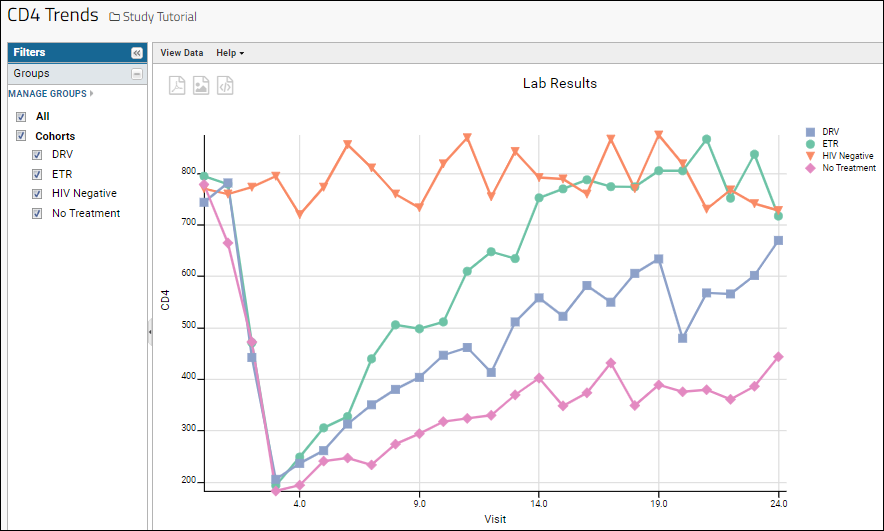

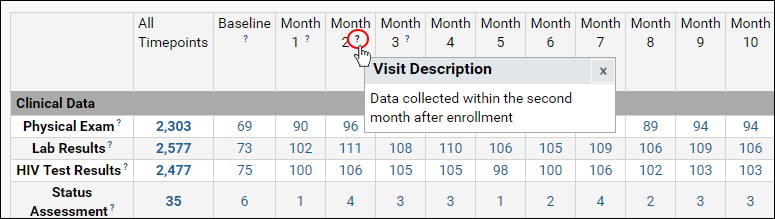

Tutorial: Longitudinal Studies

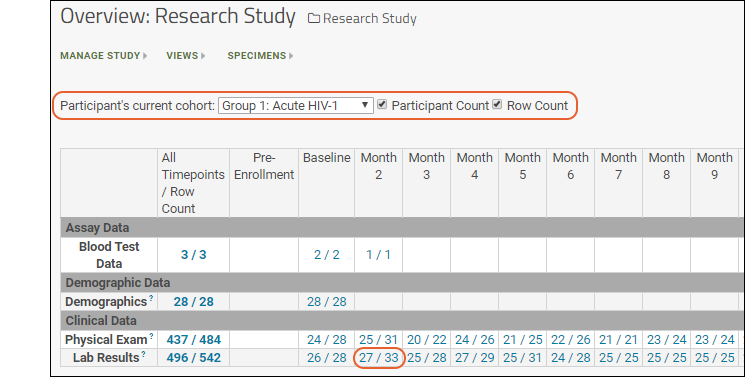

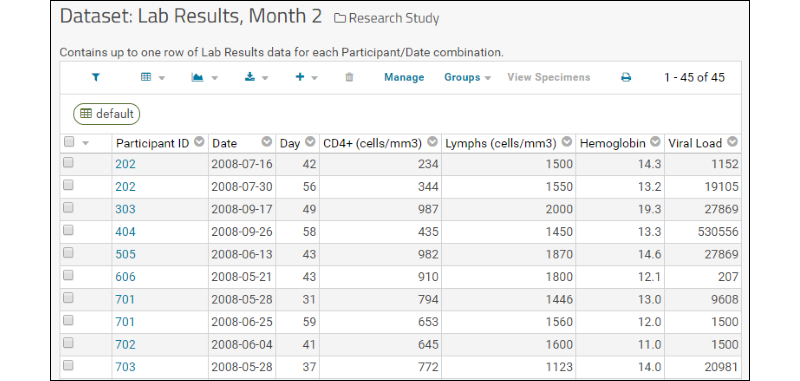

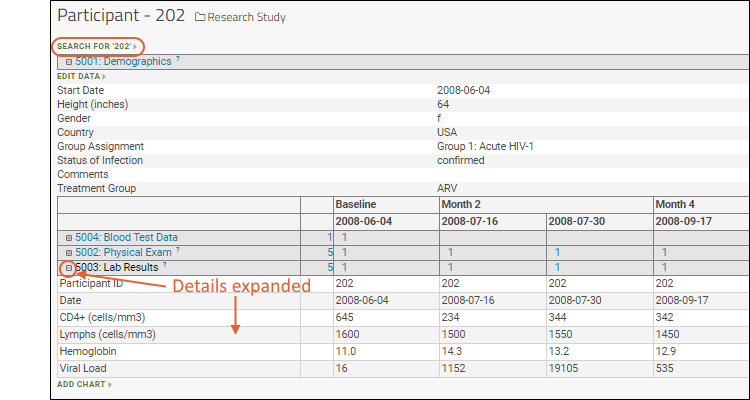

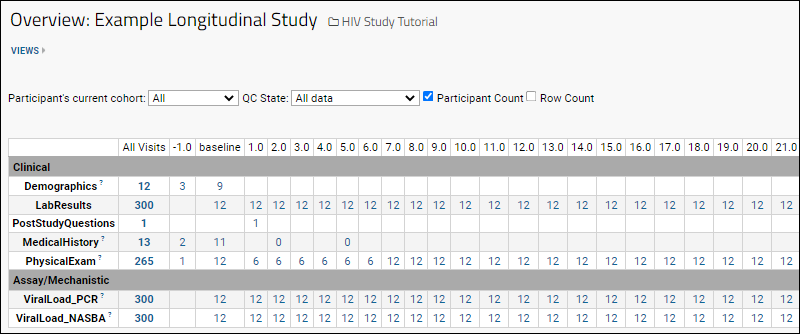

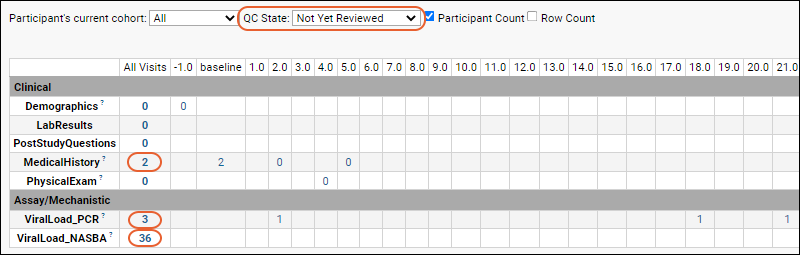

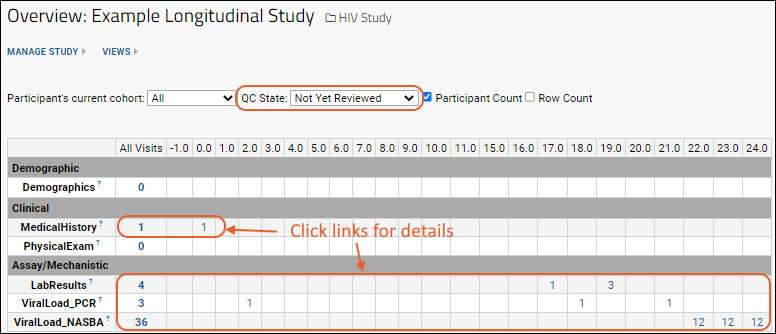

Step 1: Study Dashboards

Step 2: Study Reports

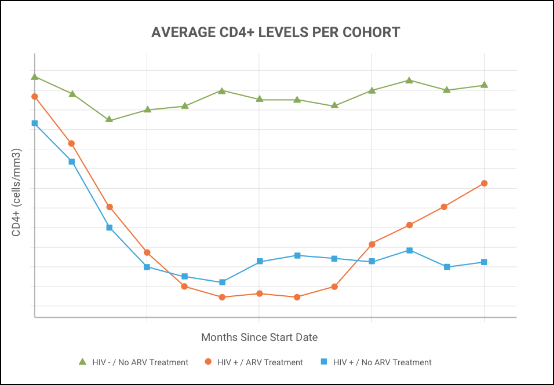

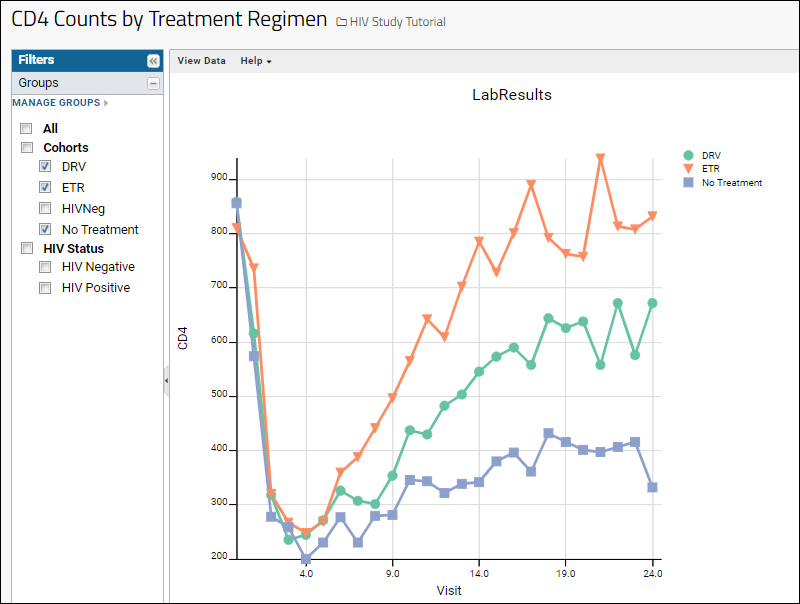

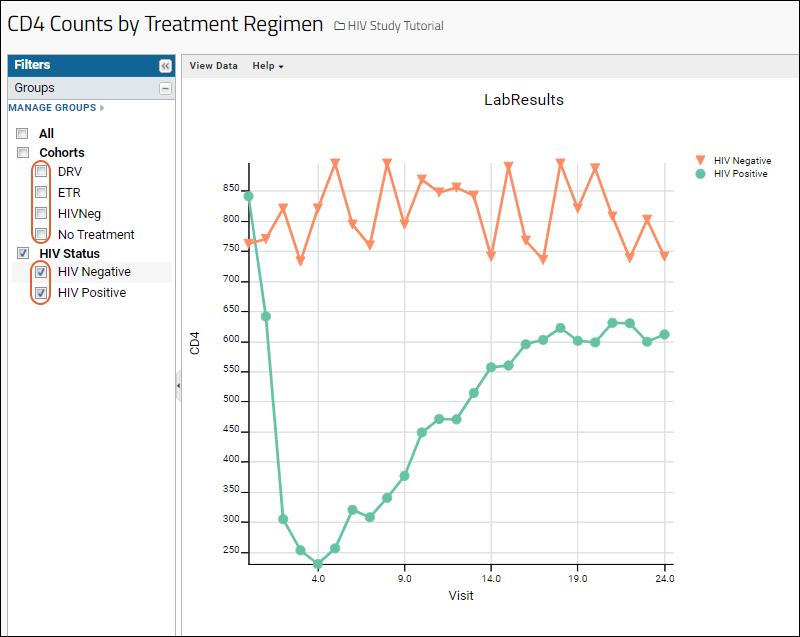

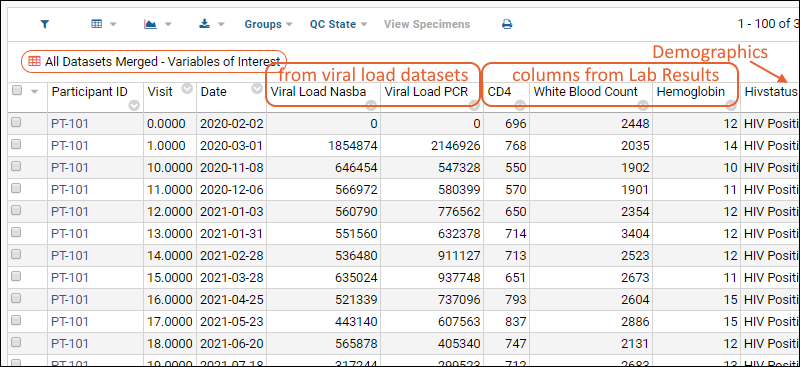

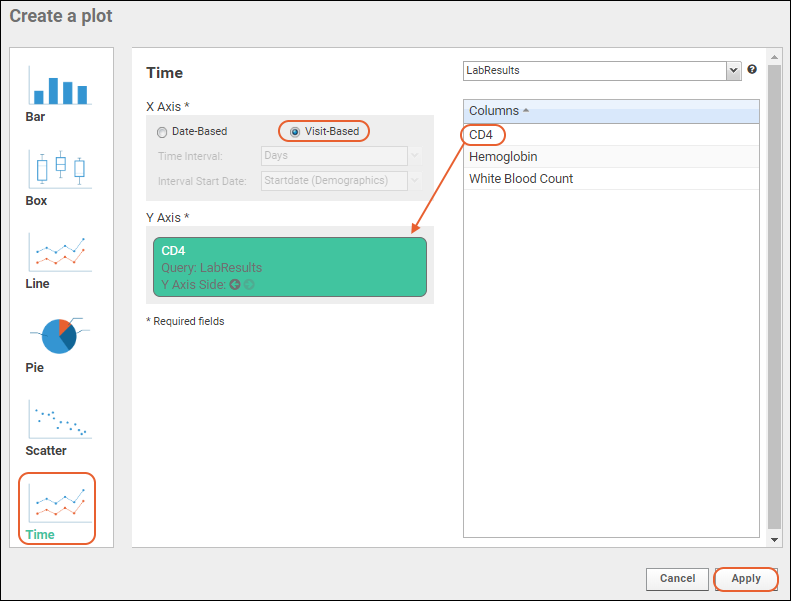

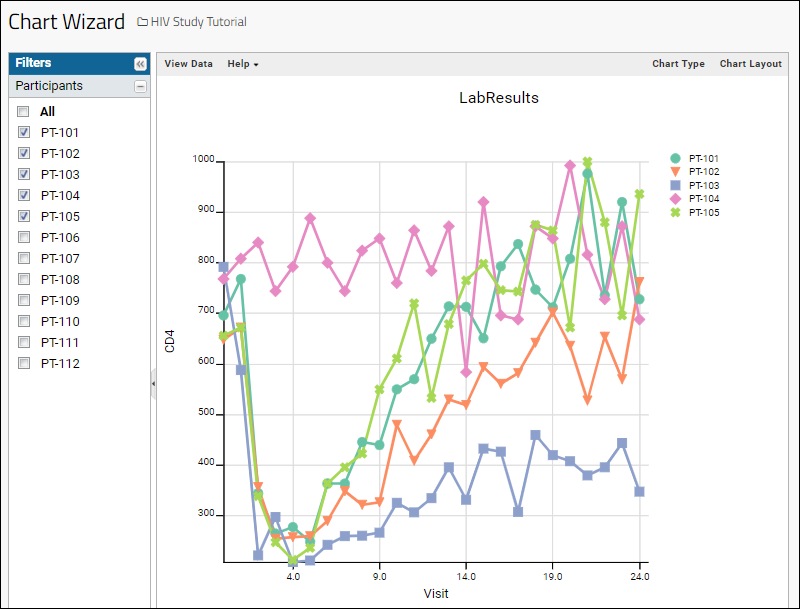

Step 3: Compare Participant Performance

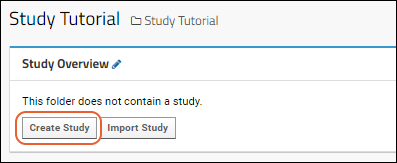

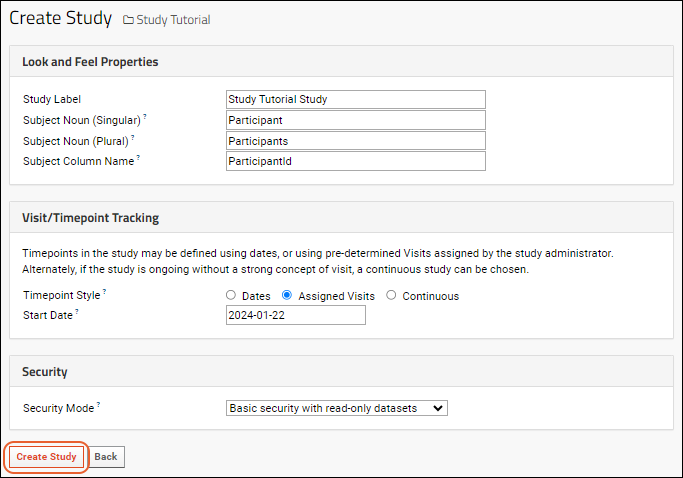

Tutorial: Set Up a New Study

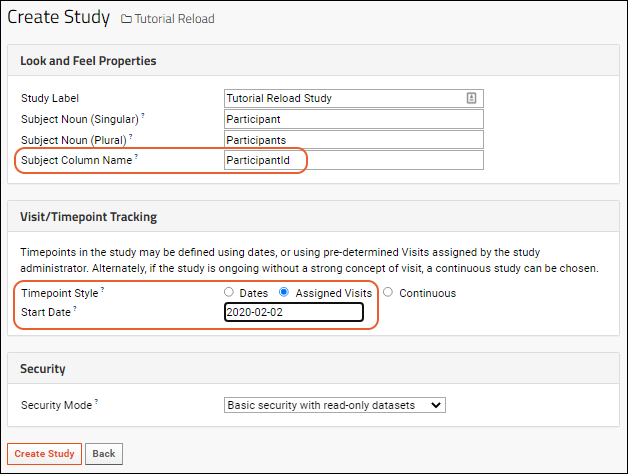

Step 1: Create Study Framework

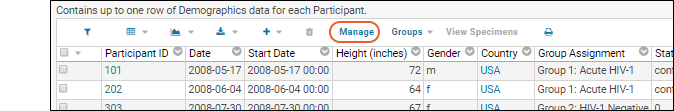

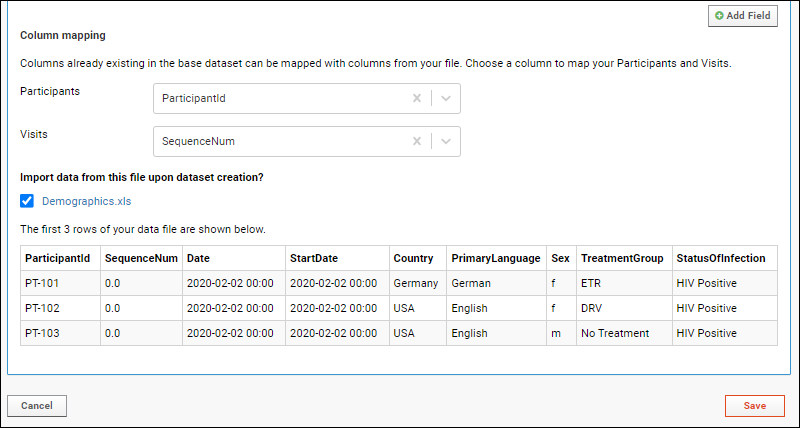

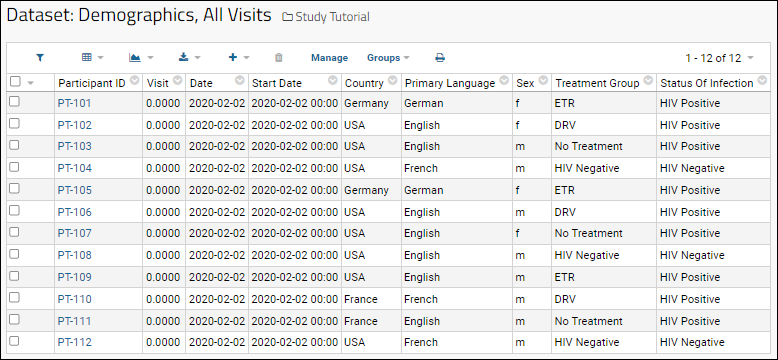

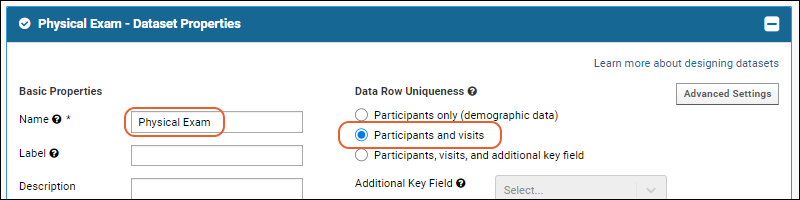

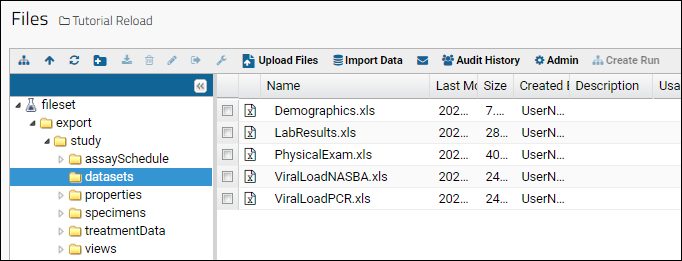

Step 2: Import Datasets

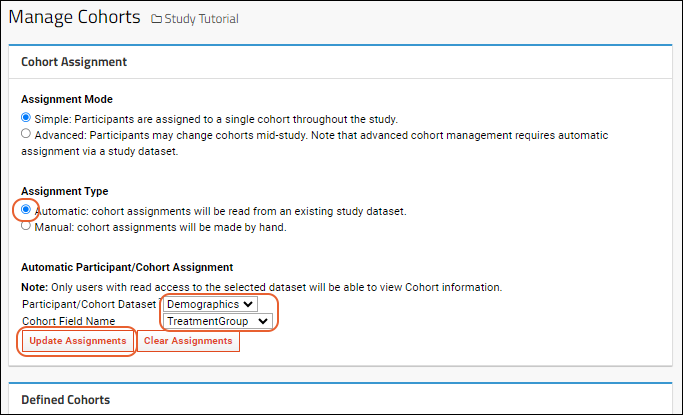

Step 3: Identify Cohorts

Step 4: Integrate and Visualize Data

Install an Example Study

Study User Guide

Study Navigation

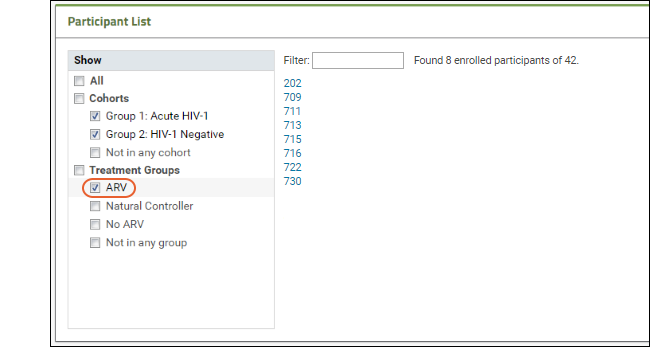

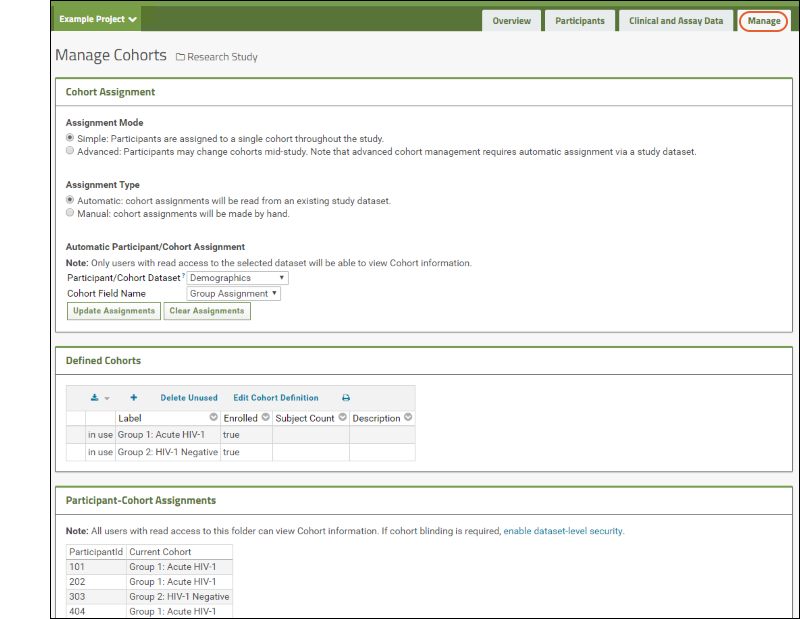

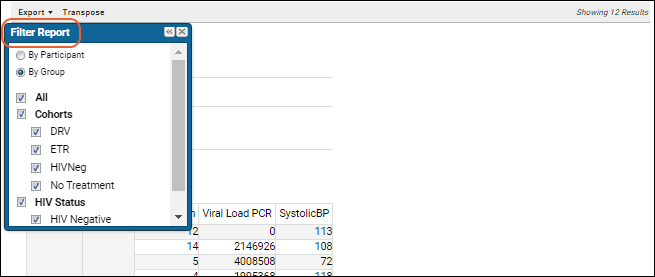

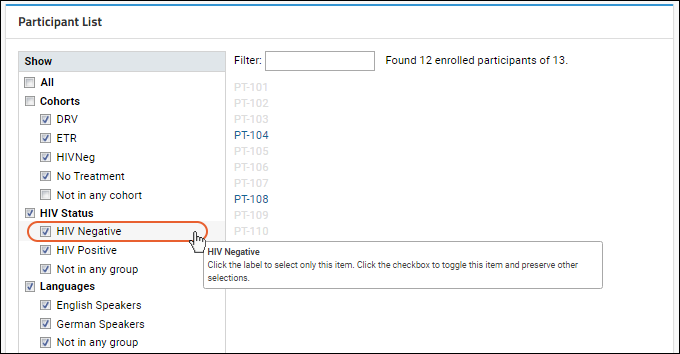

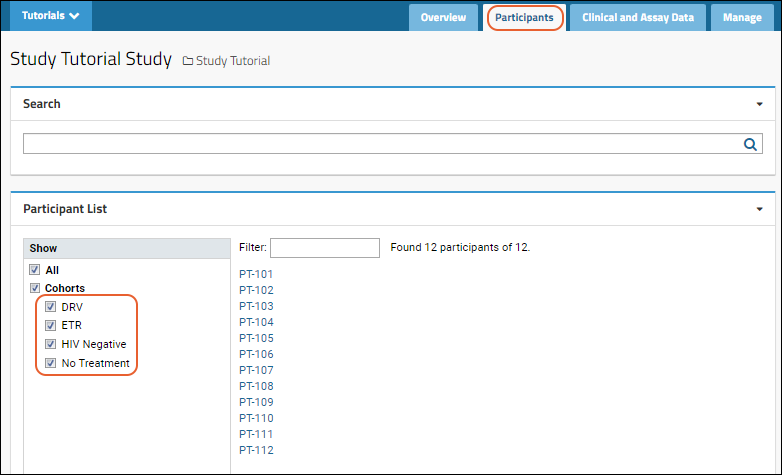

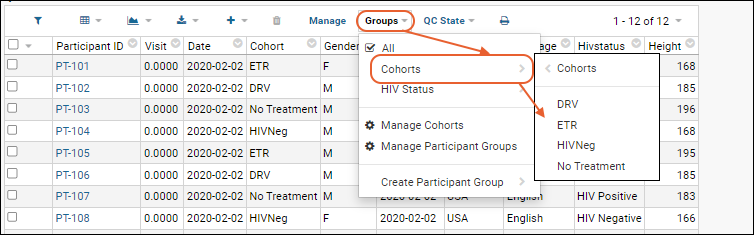

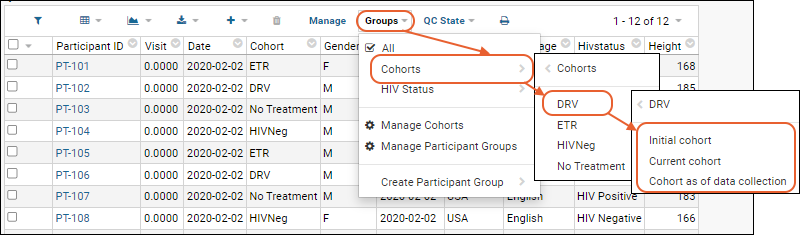

Cohorts

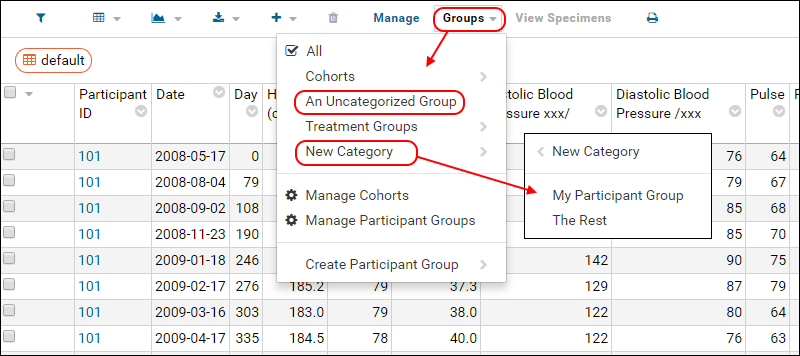

Participant Groups

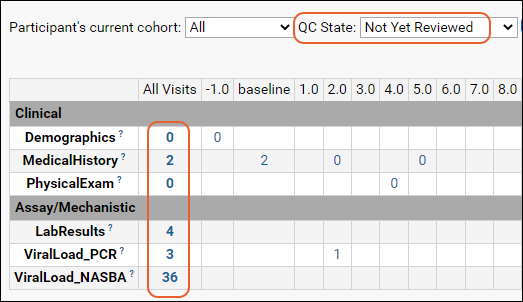

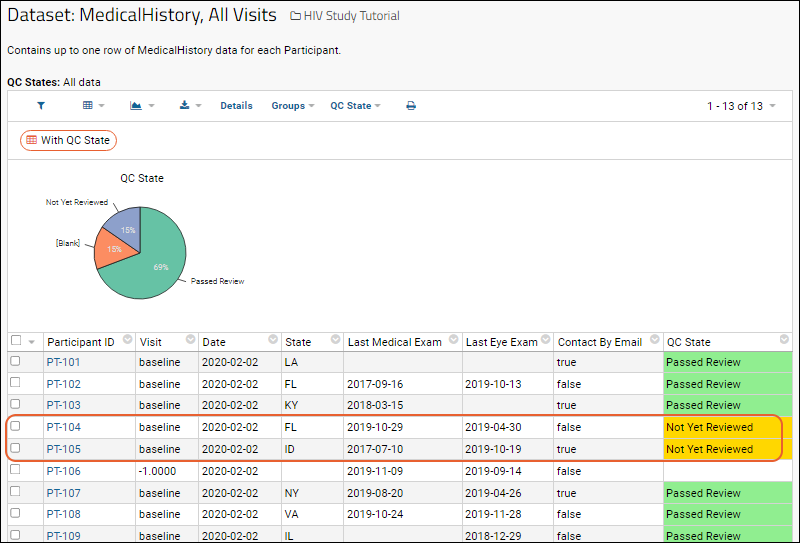

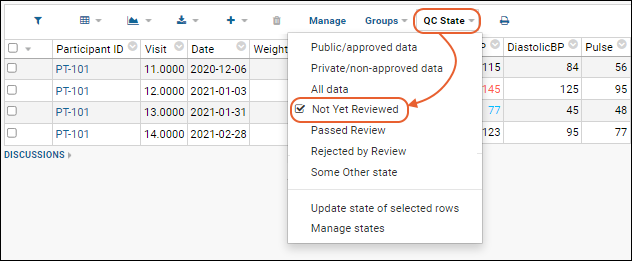

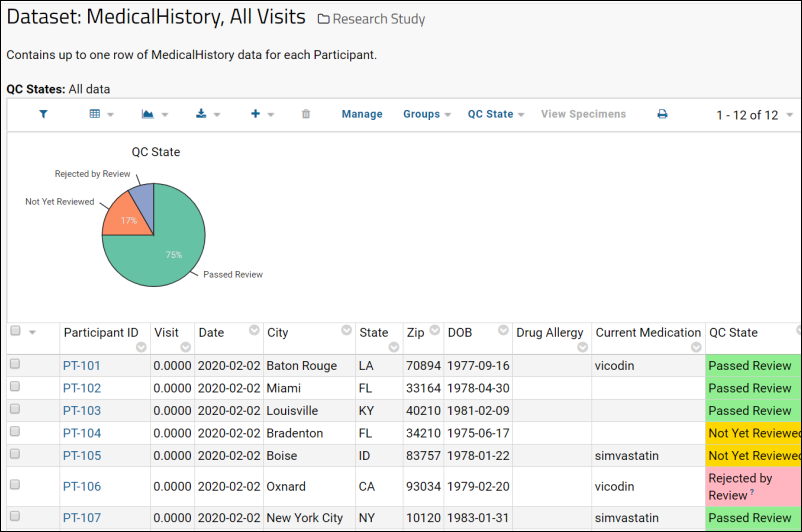

Dataset QC States: User Guide

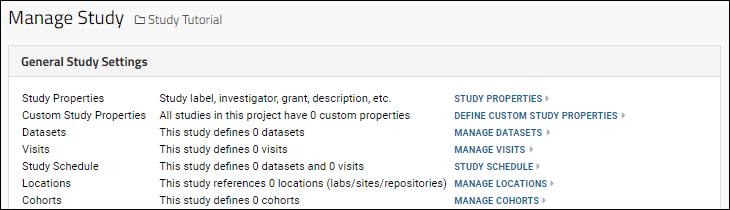

Study Administrator Guide

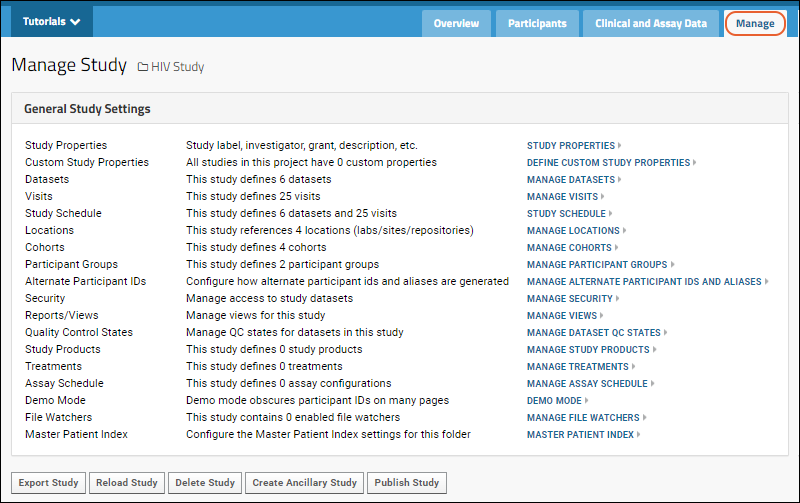

Study Management

Study Properties

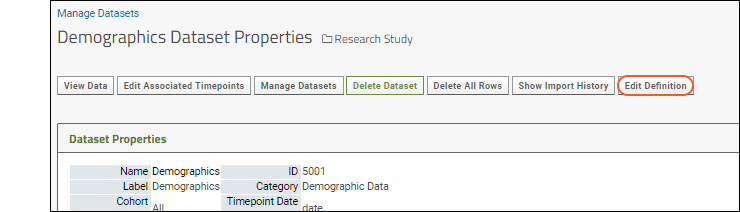

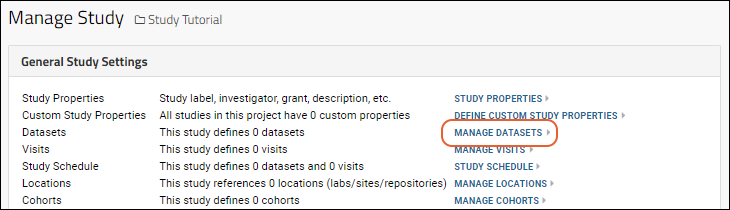

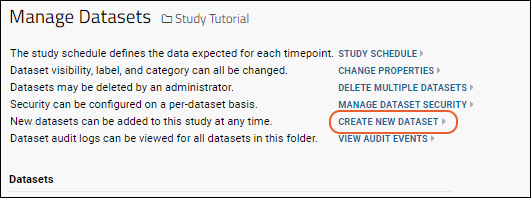

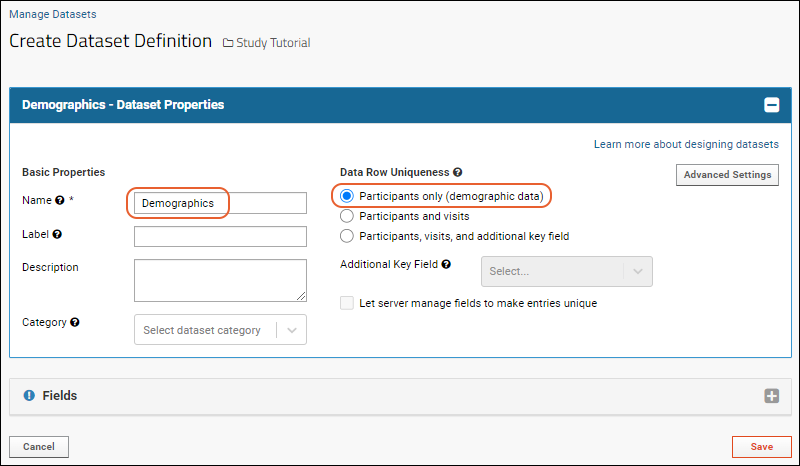

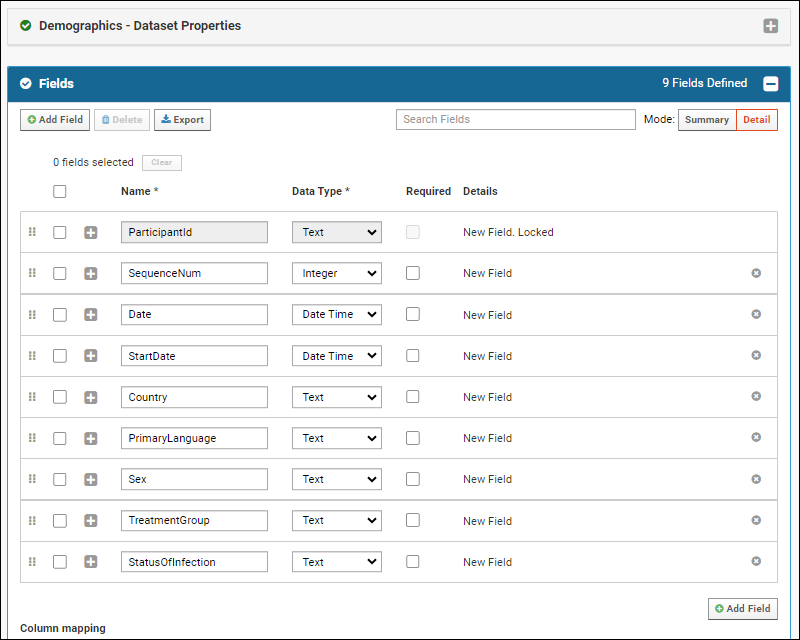

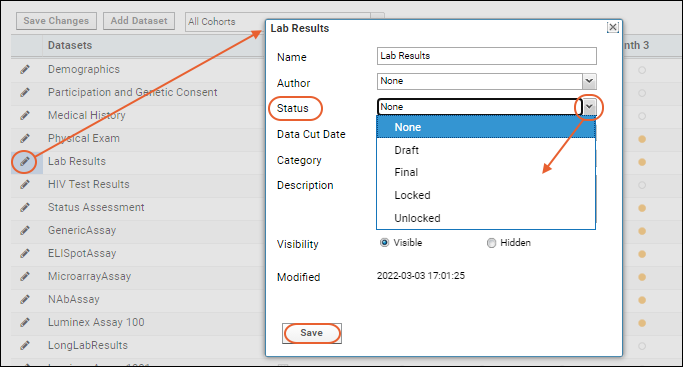

Manage Datasets

Manage Visits or Timepoints

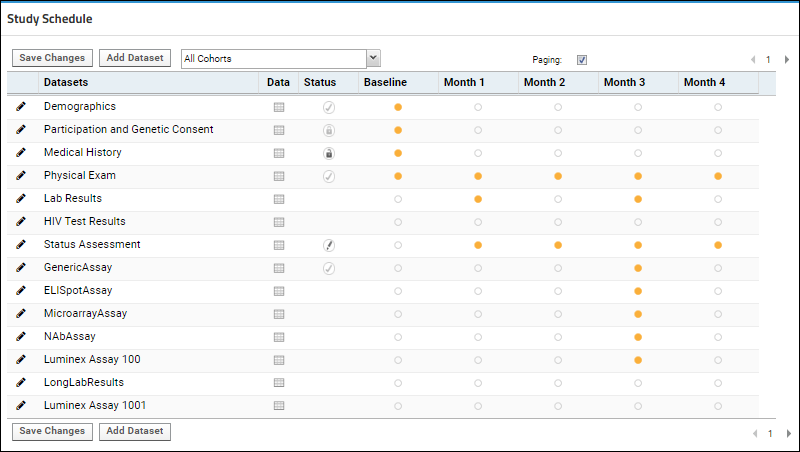

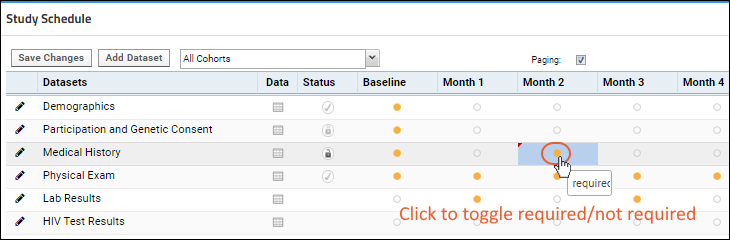

Study Schedule

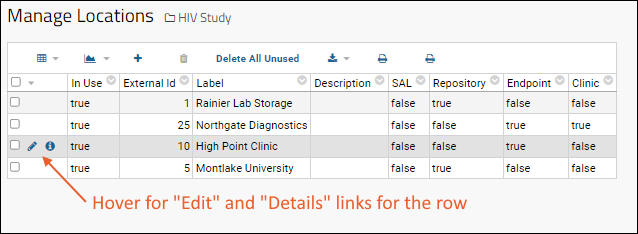

Manage Locations

Manage Cohorts

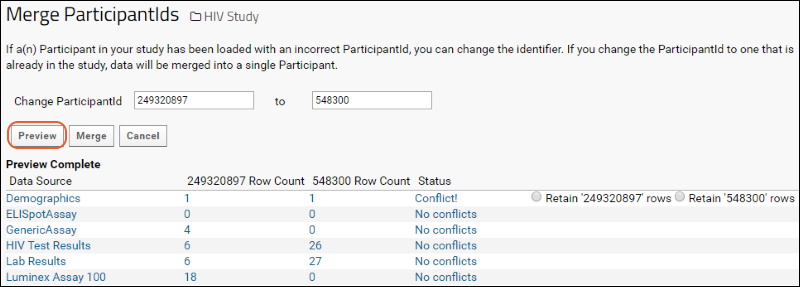

Manage Participants

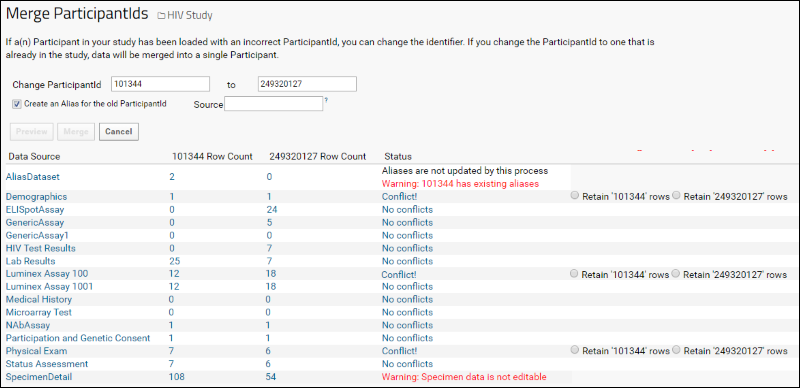

Participant Aliases

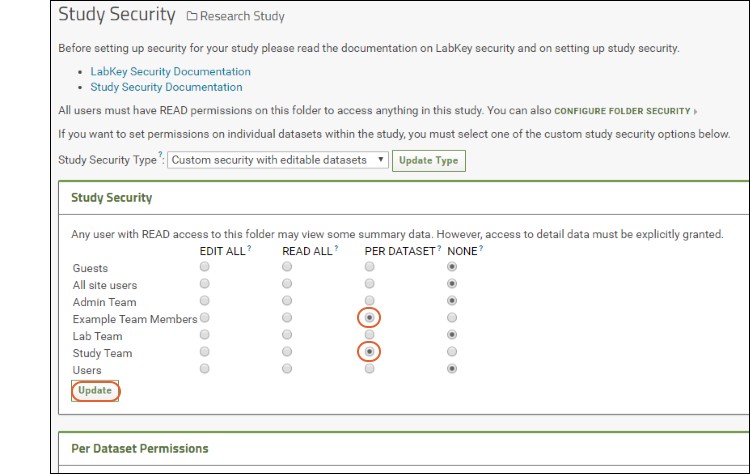

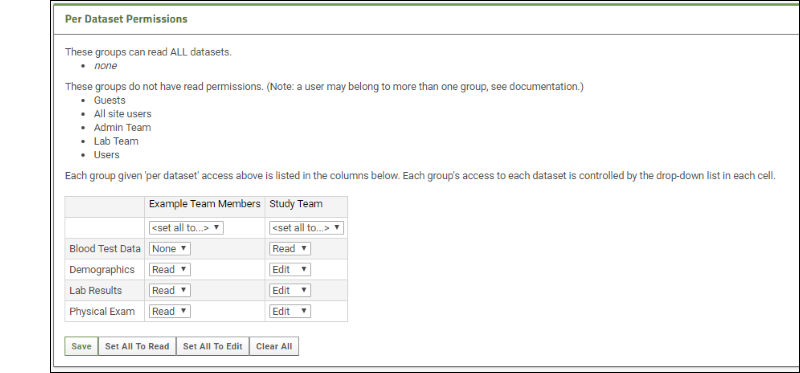

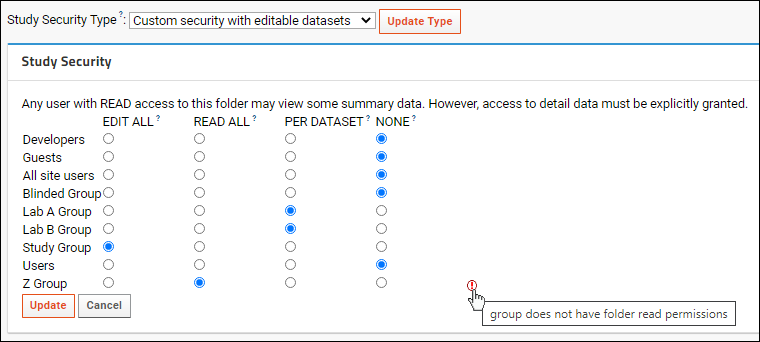

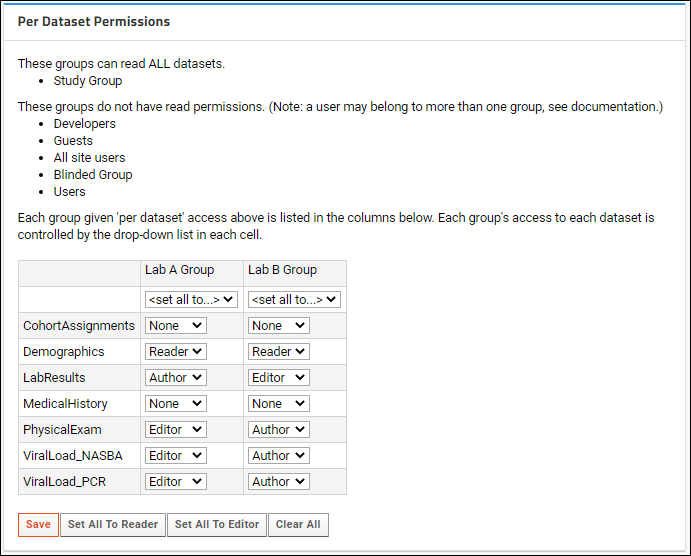

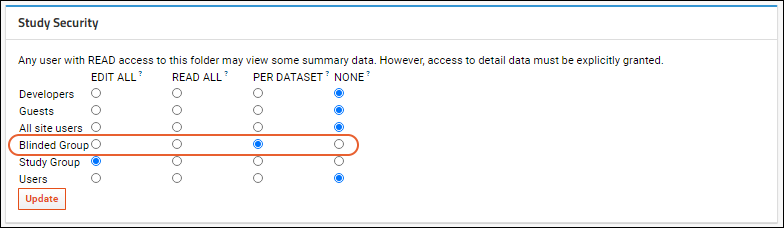

Manage Study Security

Configure Permissions for Reports & Views

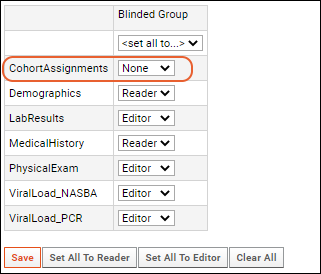

Securing Portions of a Dataset (Row and Column Level Security)

Dataset QC States: Admin Guide

Manage Study Products

Manage Treatments

Manage Assay Schedule

Study Demo Mode

Create a Study

Create and Populate Datasets

Import Data to a Dataset

Import From a Dataset Archive

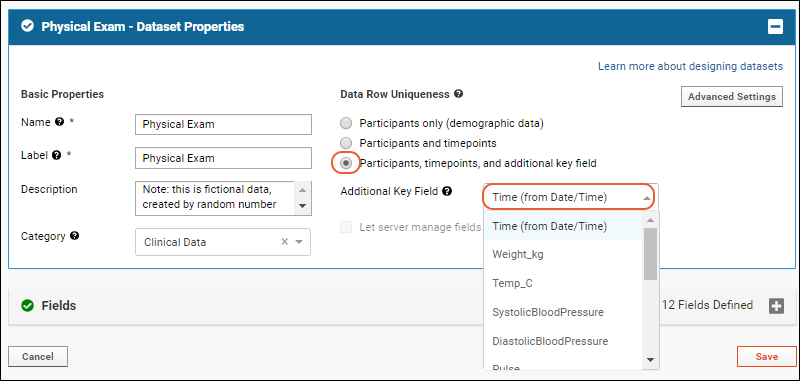

Dataset Properties

Study: Reserved and Special Fields

Dataset System Fields

Tutorial: Inferring Datasets from Excel and TSV Files

Visits and Dates

Create Visits Manually

Edit Visits or Timepoints

Import Visit Map

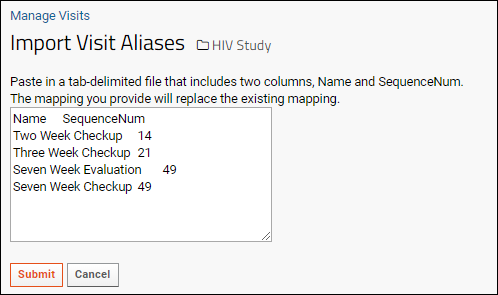

Import Visit Names / Aliases

Continuous Studies

Study Visits and Timepoints FAQ

Export/Import/Reload a Study

Export a Study

Import a Study

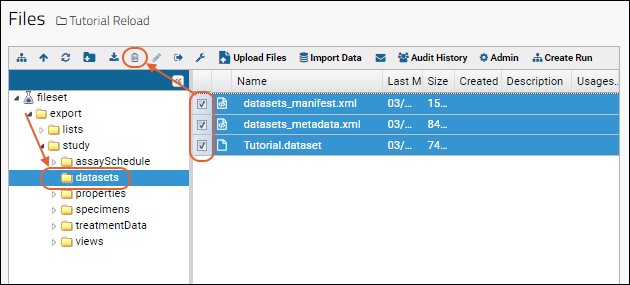

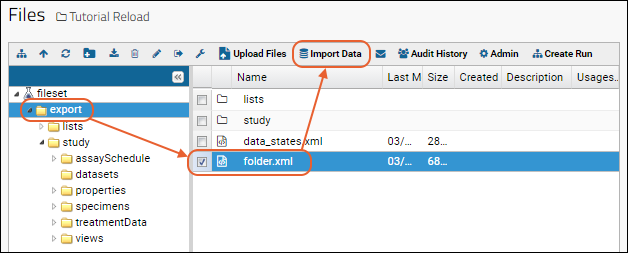

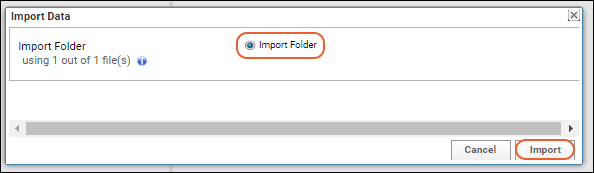

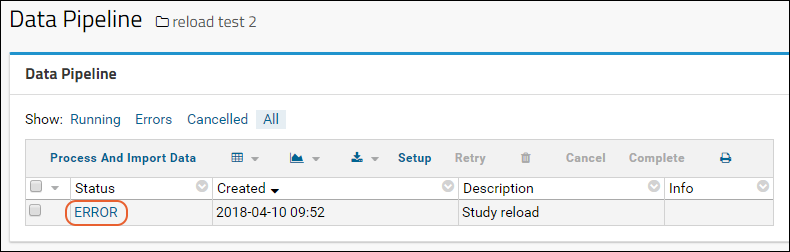

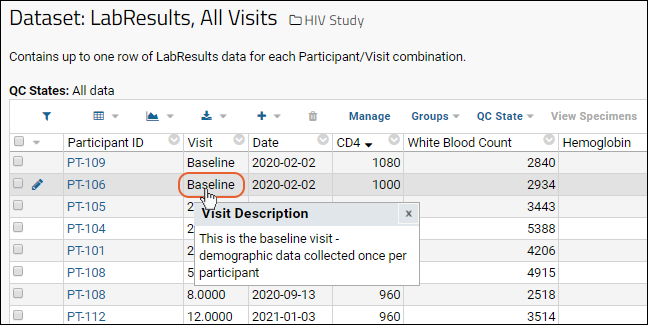

Reload a Study

Study Object Files and Formats

Publish a Study

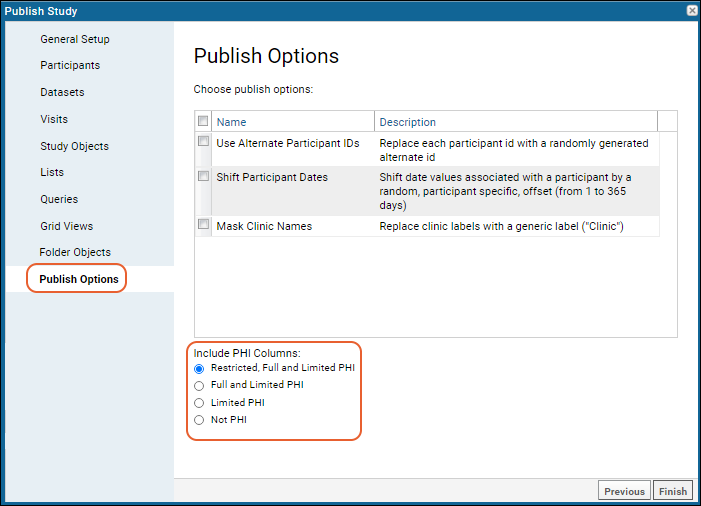

Publish a Study: Protected Health Information / PHI

Ancillary Studies

Refresh Data in Ancillary and Published Studies

Cohort Blinding

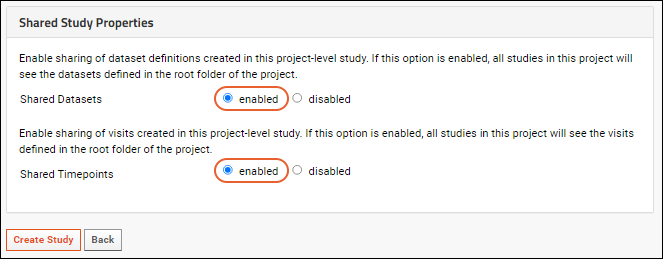

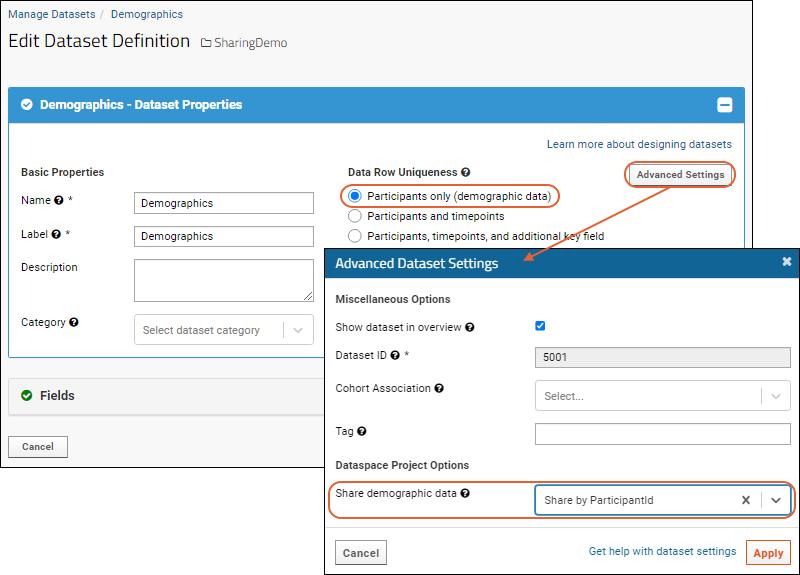

Shared Datasets and Timepoints

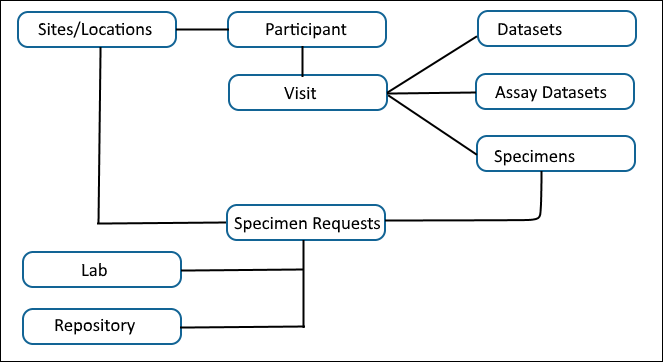

How is Study Data Stored in LabKey Server?

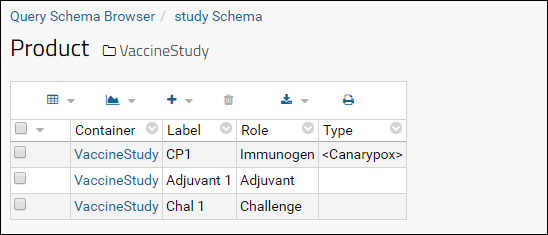

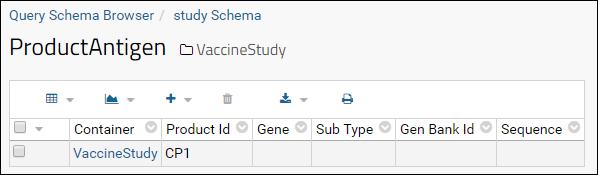

Create a Vaccine Study Design

Vaccine Study: Data Storage

Premium Resource: LabKey Data Finder

Electronic Health Records (EHR)

Premium Resource: EHR: Animal History

Premium Resource: EHR: Animal Search

Premium Resource: EHR: Data Entry

Premium Resource: EHR: Data Entry Development

Premium Resource: EHR: Lookups

Premium Resource: EHR: Genetics Algorithms

Premium Resource: EHR: Administration

Premium Resource: EHR: Connect to Sample Manager

Premium Resource: EHR: Billing Module

Premium Resource: EHR: Define Billing Rates and Fees

Premium Resource: EHR: Preview Billing Reports

Premium Resource: EHR: Perform Billing Run

Premium Resource: EHR: Historical Billing Data

Premium Resource: EHR: Compliance and Training Folder

Premium Resource: EHR: Trigger Scripts

Structured Narrative Datasets

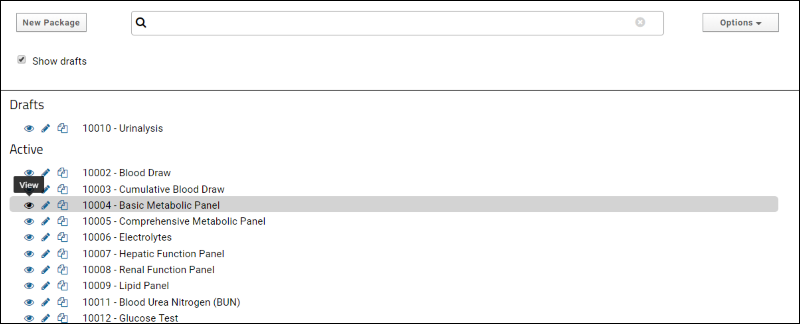

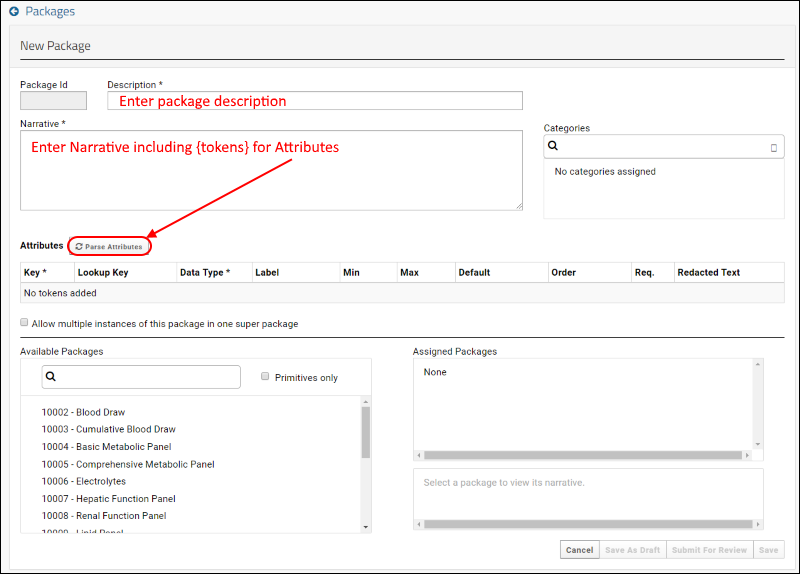

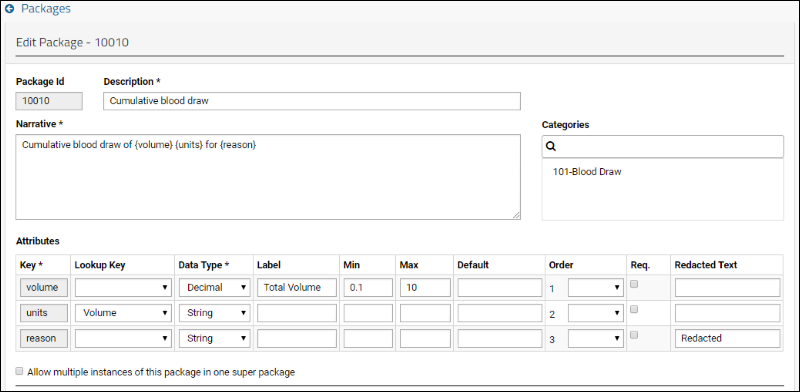

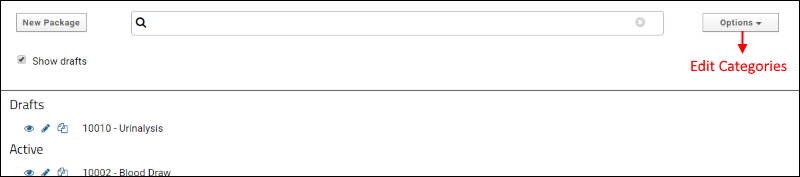

SND: Packages

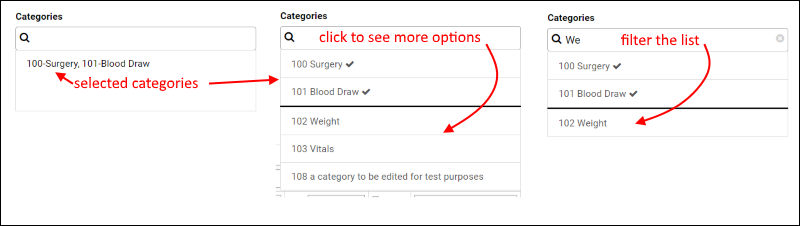

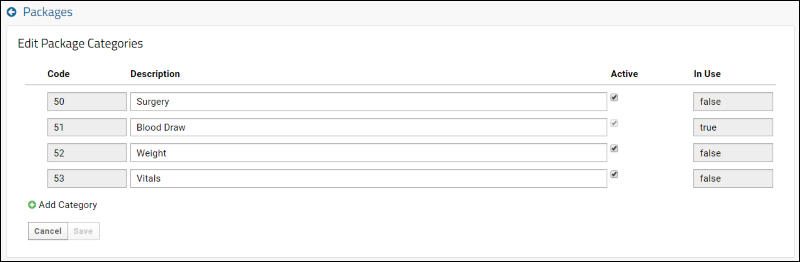

SND: Categories

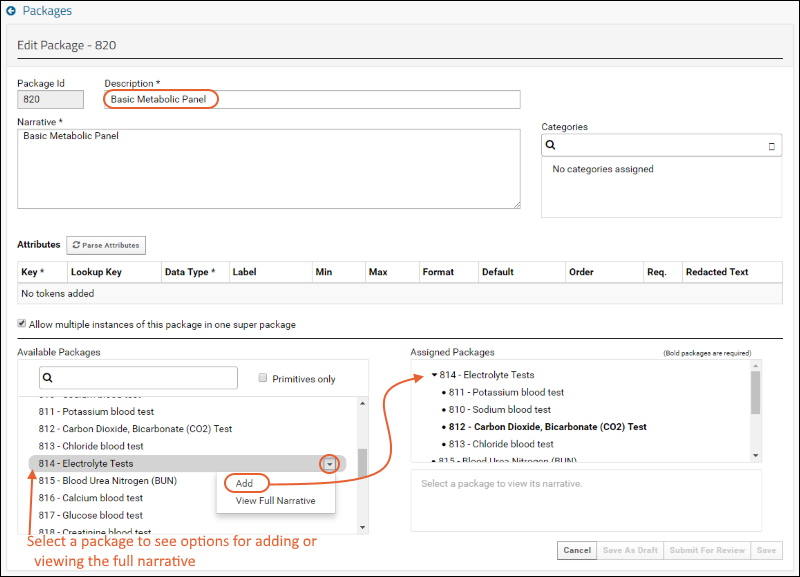

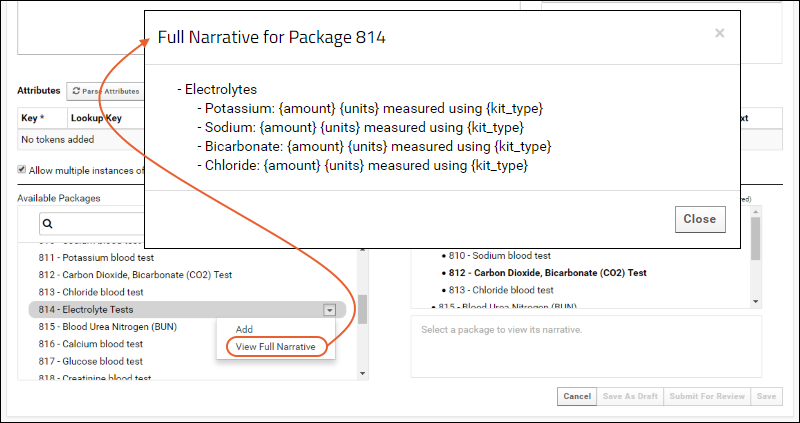

SND: Super-packages

SND: Projects

SND: Events

SND: QC and Security

SND: APIs

SND: Event Triggers

SND: UI Development

Extending SND Tables

XML Import of Packages

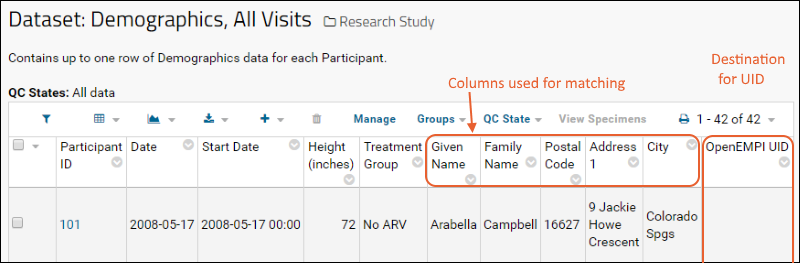

Enterprise Master Patient Index Integration

Specimen Tracking (Legacy)

Panorama: Targeted Mass Spectrometry

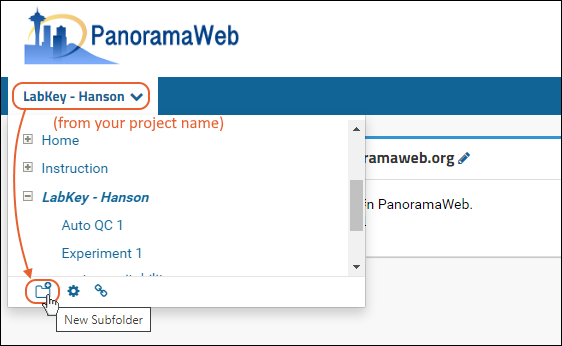

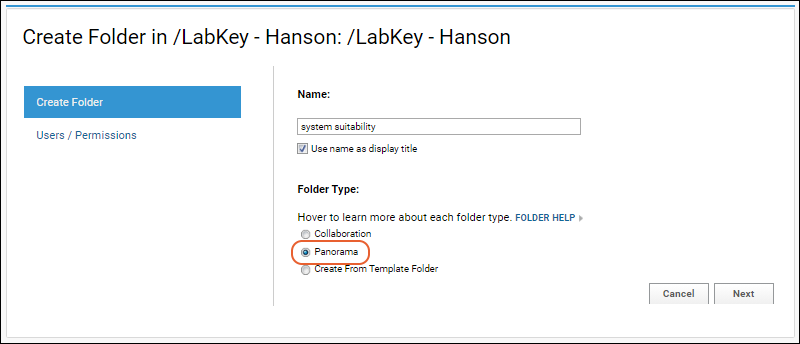

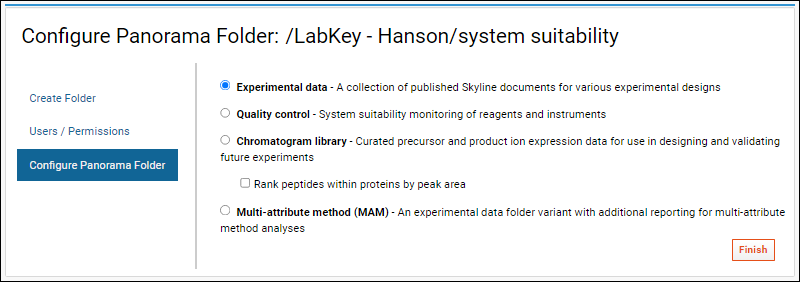

Configure Panorama Folder

Panorama Data Import

Panorama Experimental Data Folder

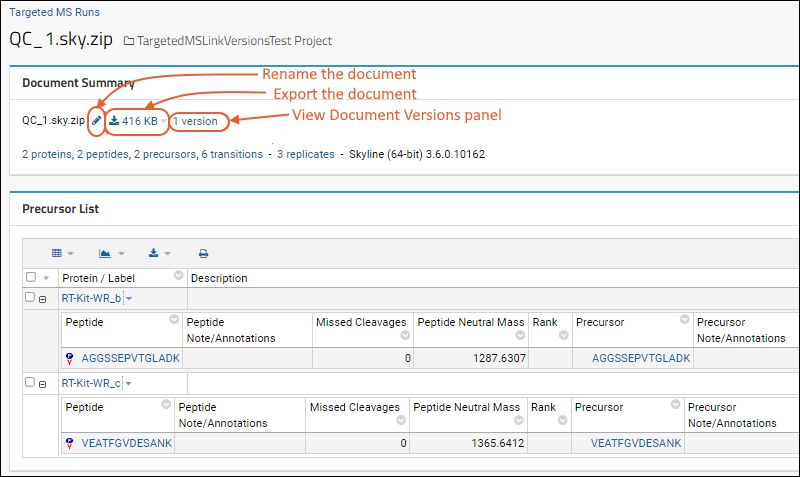

Panorama: Skyline Document Management

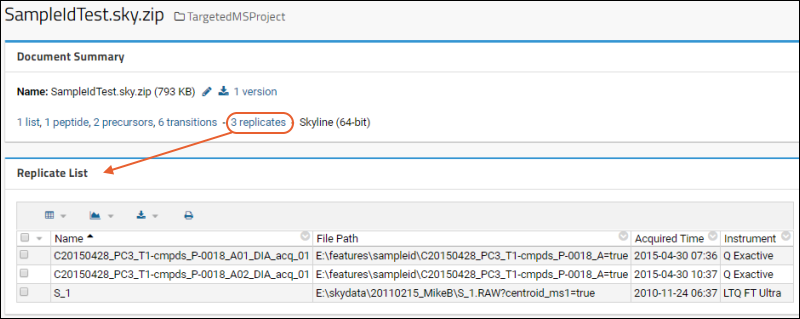

Panorama: Skyline Replicates View

Panorama: Protein/Molecule List Details

Panorama: Skyline Lists

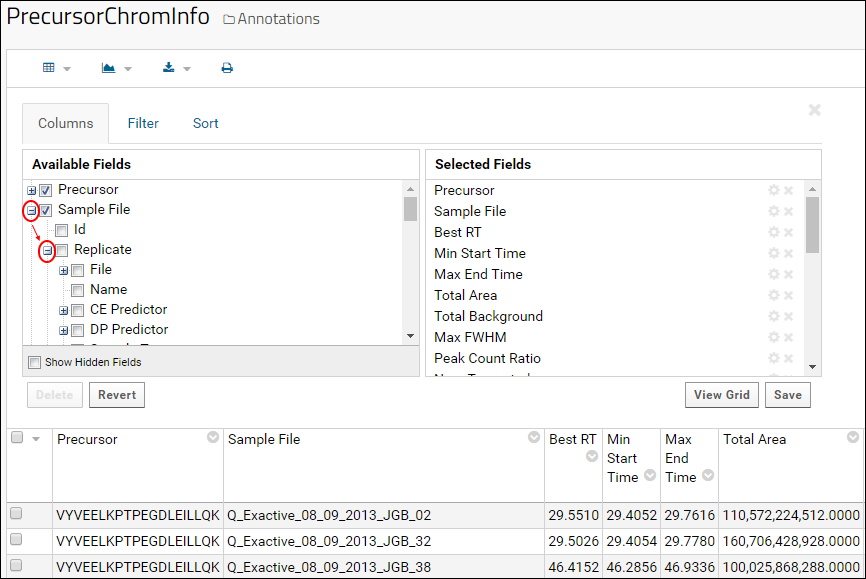

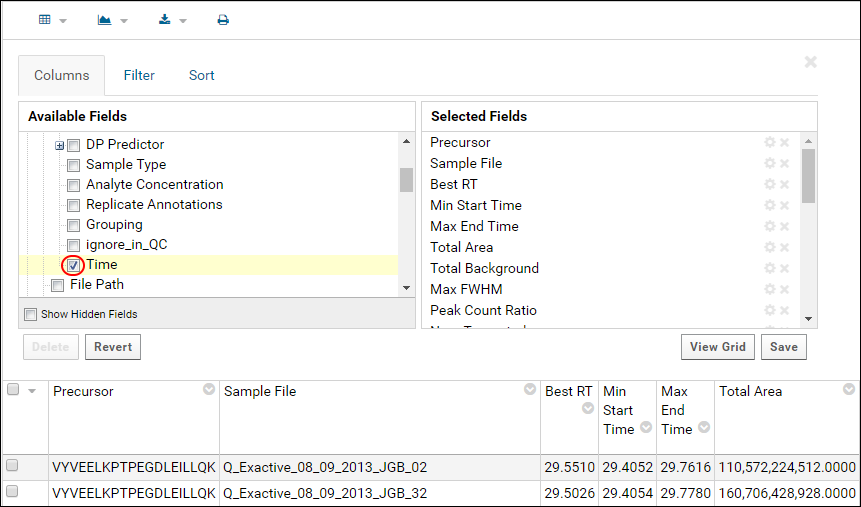

Panorama: Skyline Annotation Data

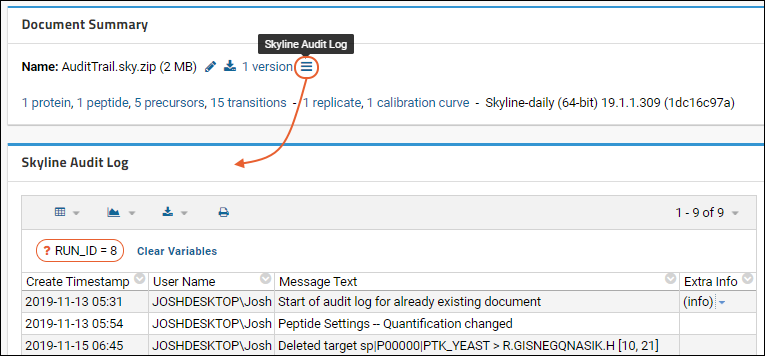

Panorama: Skyline Audit Log

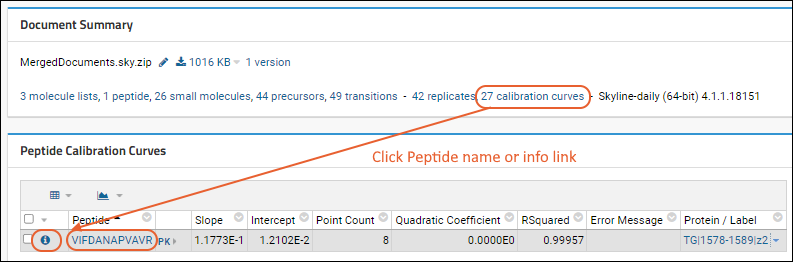

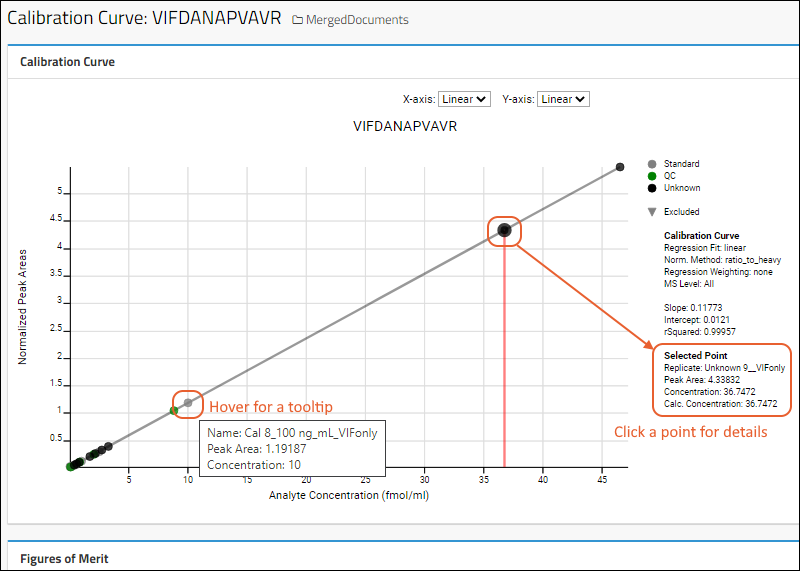

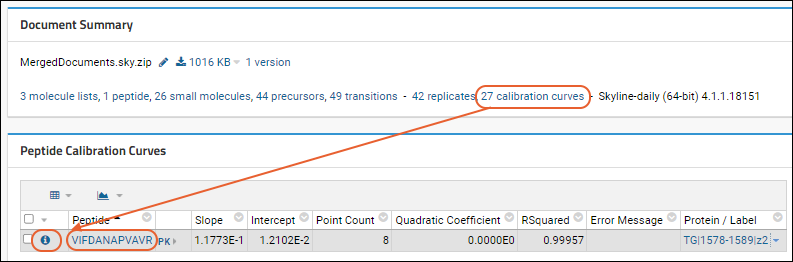

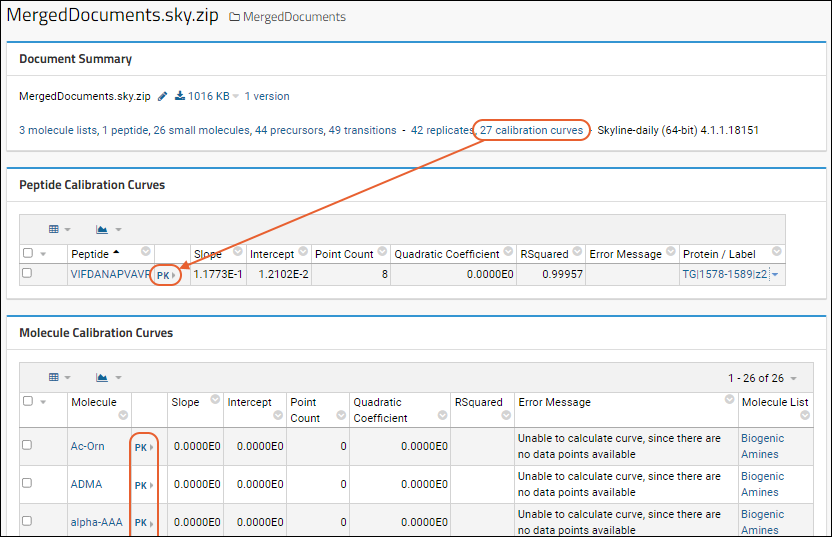

Panorama: Calibration Curves

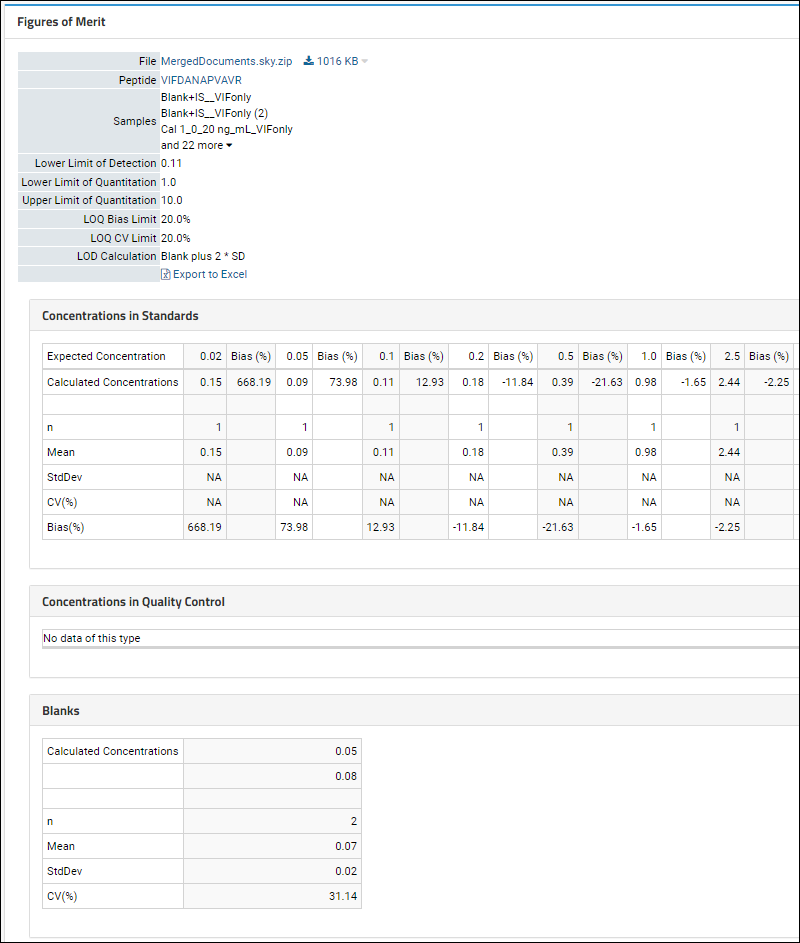

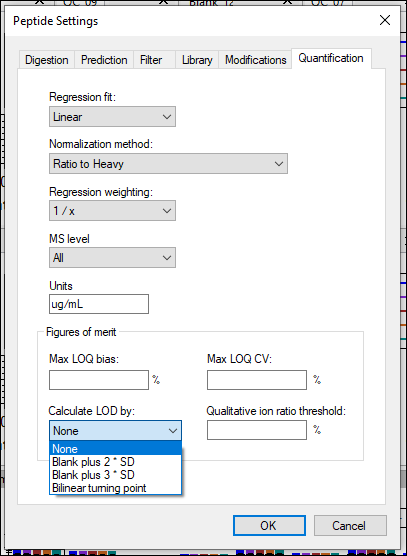

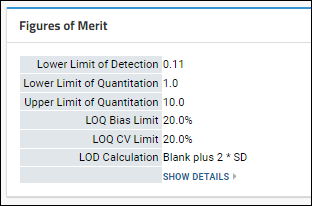

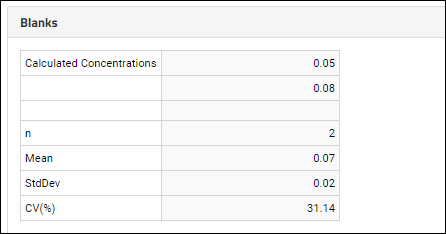

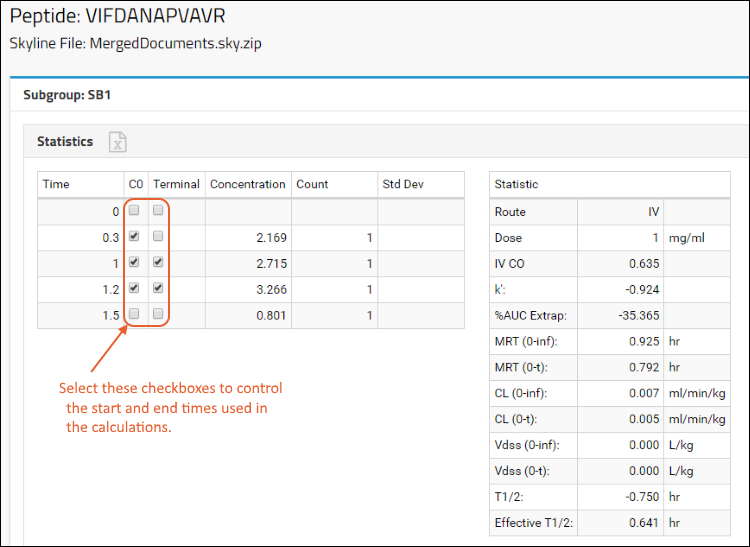

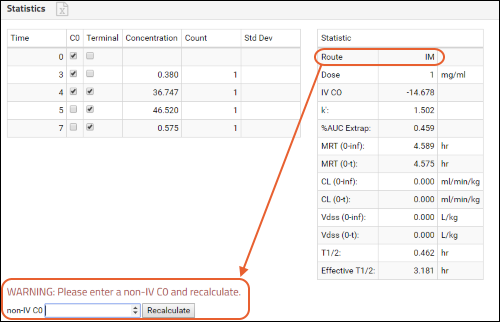

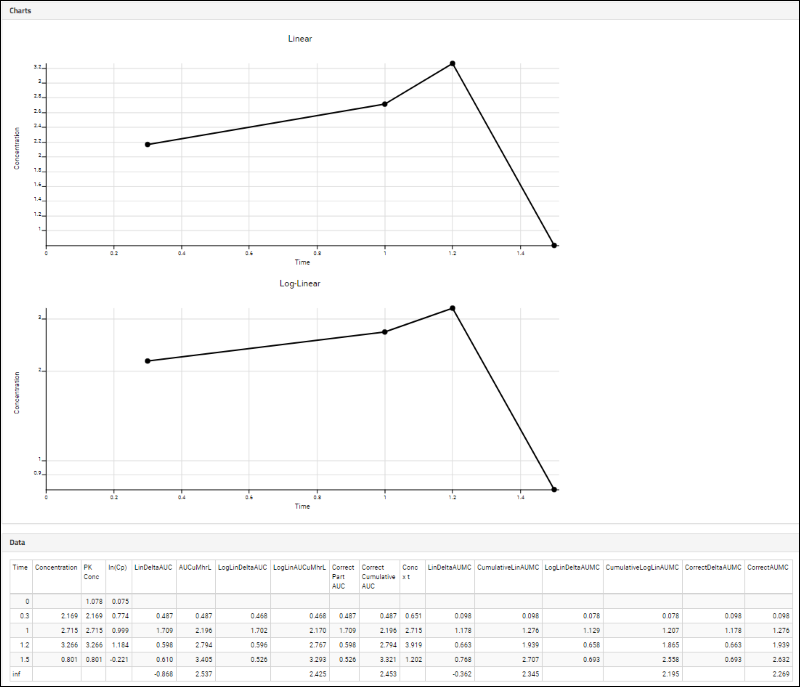

Panorama: Figures of Merit and Pharmacokinetics (PK)

Panorama: Instruments Summary and QC Links

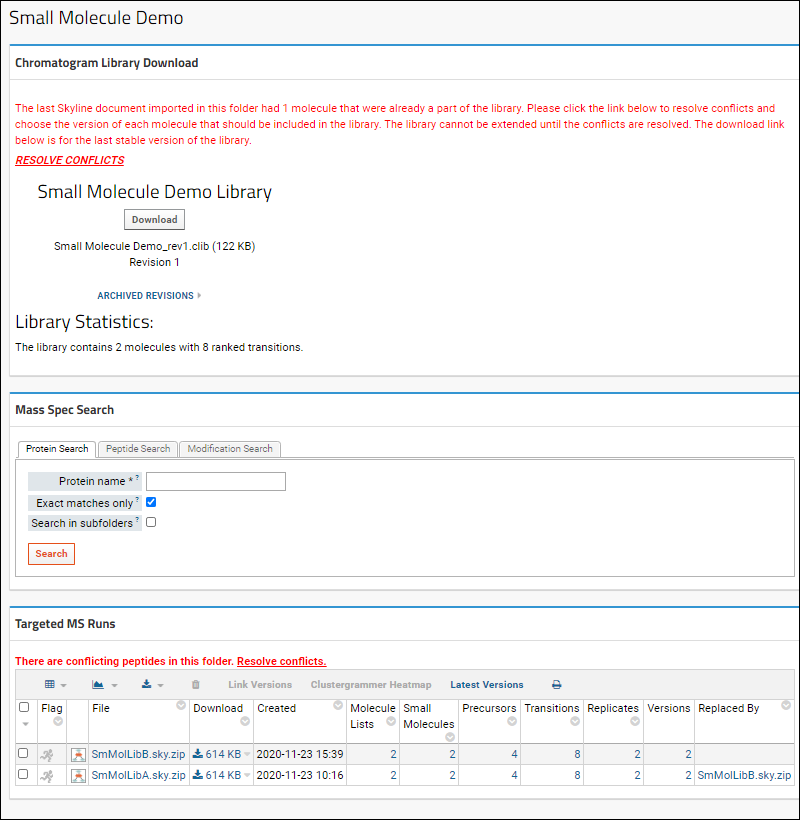

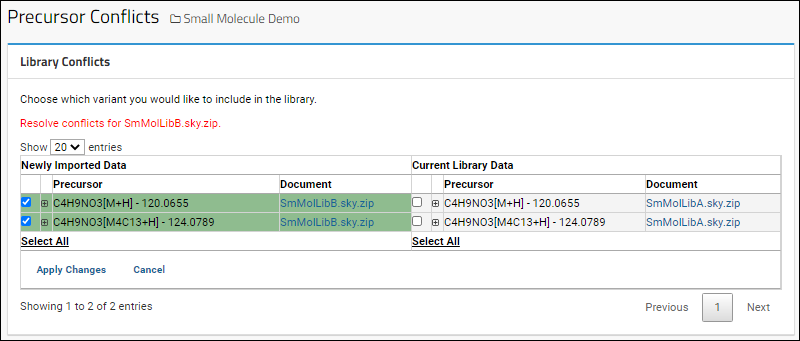

Working with Small Molecule Targets

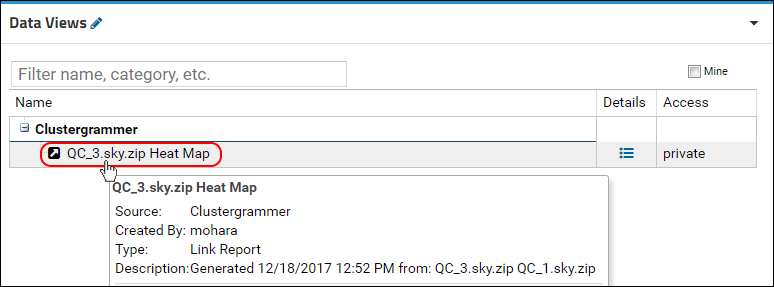

Panorama: Heat Maps

Panorama Multi-Attribute Method Folder

Panorama MAM Reports

Panorama: Crosslinked Peptides

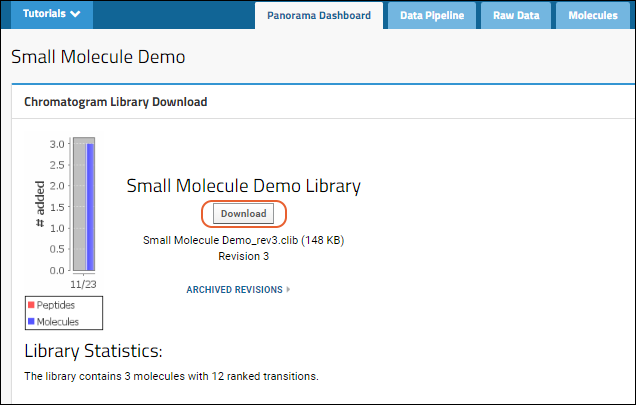

Panorama Chromatogram Library Folder

Using Chromatogram Libraries

Panorama: Reproducibility Report

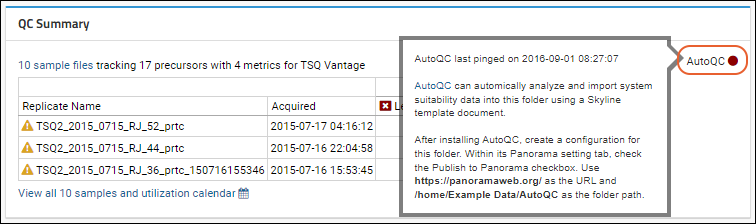

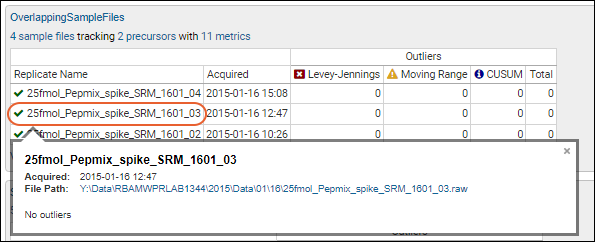

Panorama QC Folders

Panorama QC Dashboard

Panorama: Instrument Utilization Calendar

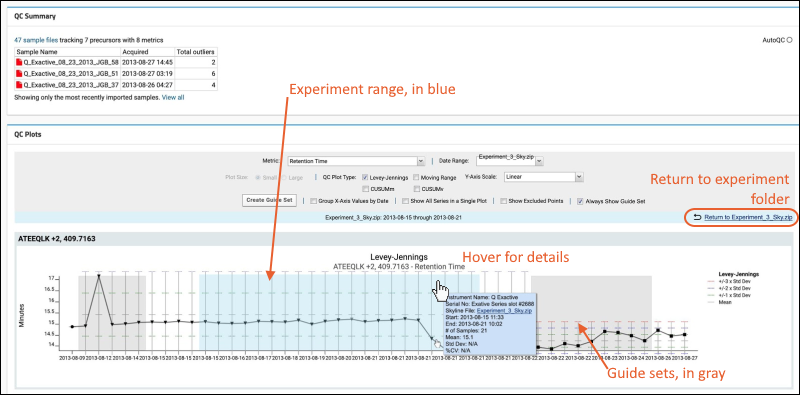

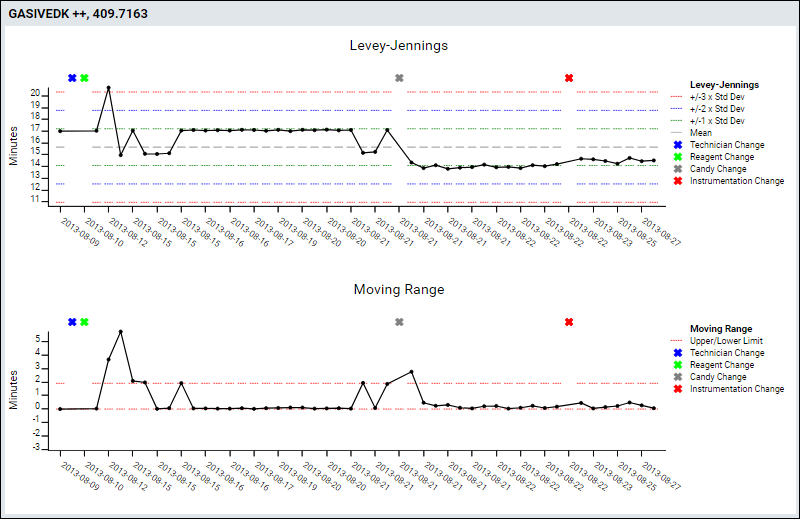

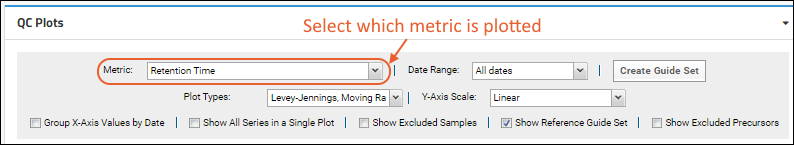

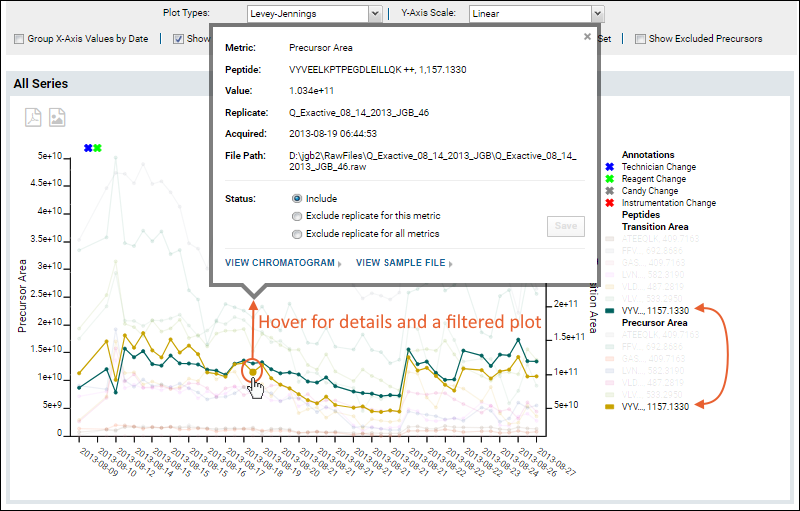

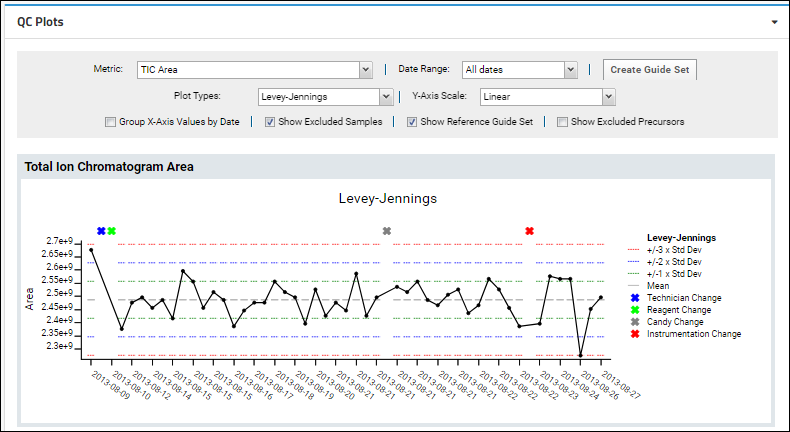

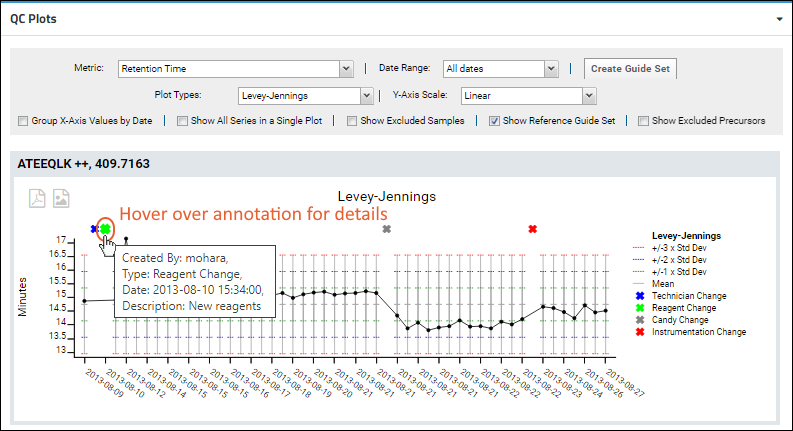

Panorama QC Plots

Panorama QC Plot Types

Panorama QC Annotations

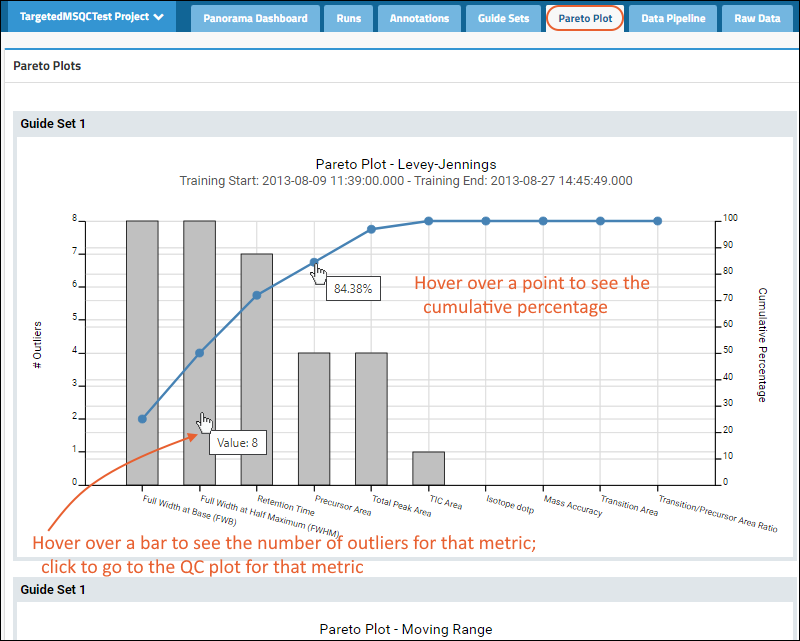

Panorama: Pareto Plots

Panorama: iRT Metrics

Panorama: Configure QC Metrics

Panorama: Outlier Notifications

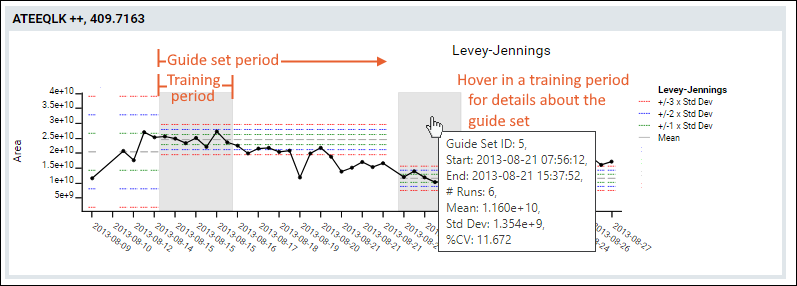

Panorama QC Guide Sets

Panorama: Chromatograms

Panorama and Sample Management

Collaboration

Files

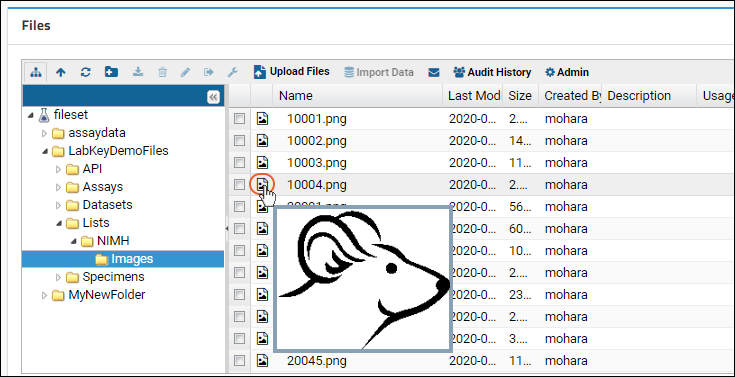

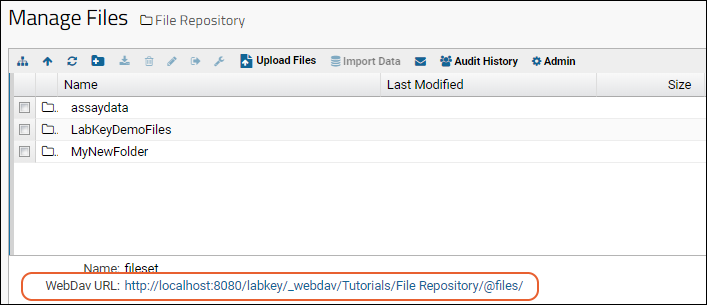

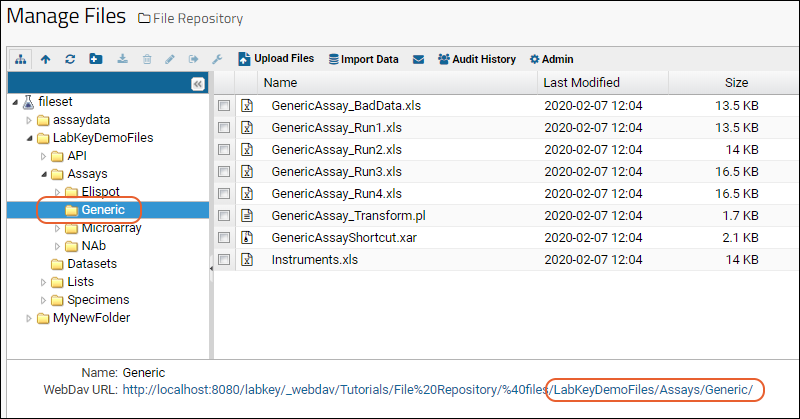

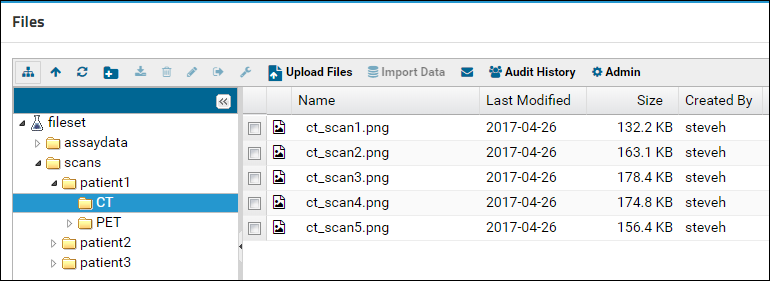

Tutorial: File Repository

Step 1: Set Up a File Repository

Step 2: File Repository Administration

Step 3: Search the Repository

Step 4: Import Data from the Repository

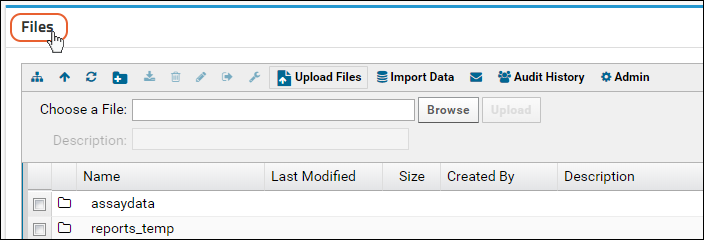

Using the Files Repository

View and Share Files

Controlling File Display via the URL

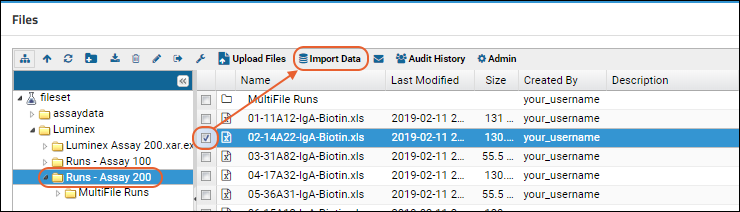

Import Data from Files

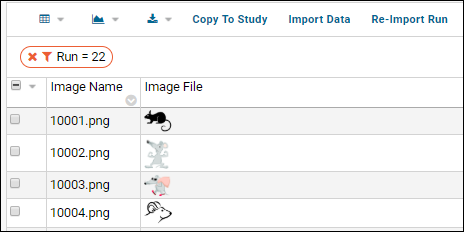

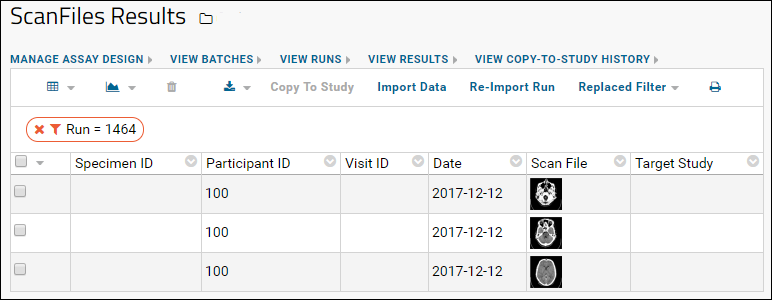

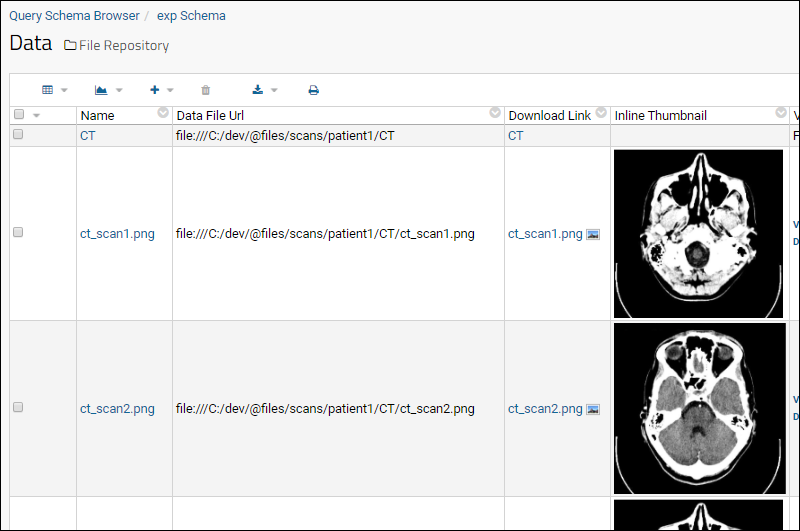

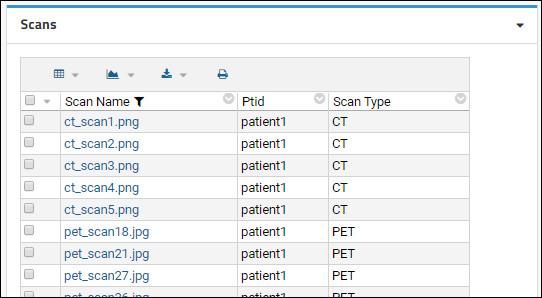

Linking Assays with Images and Other Files

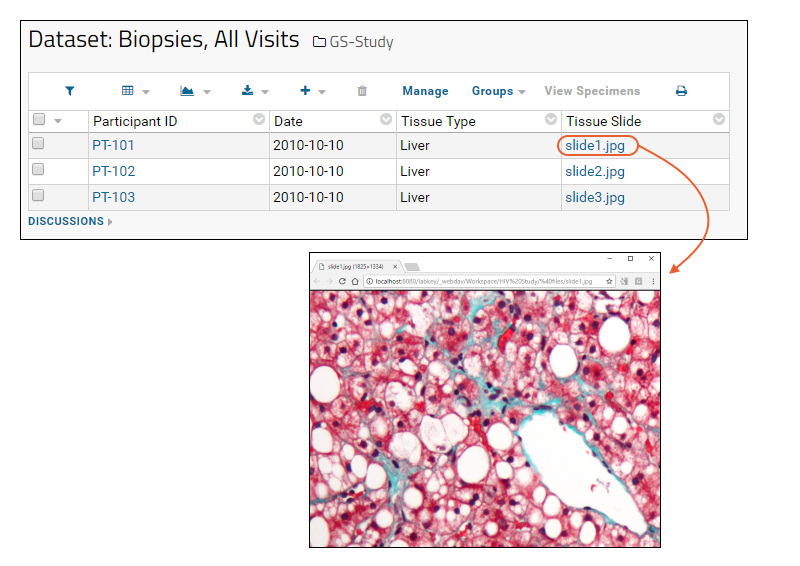

Linking Data Records to Image Files

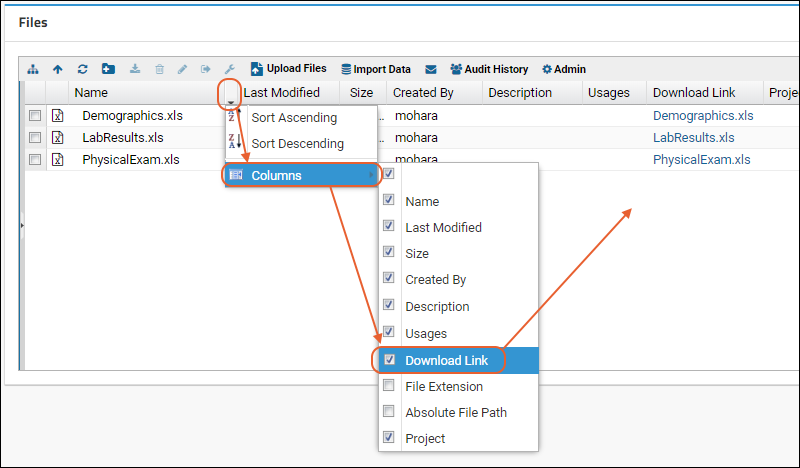

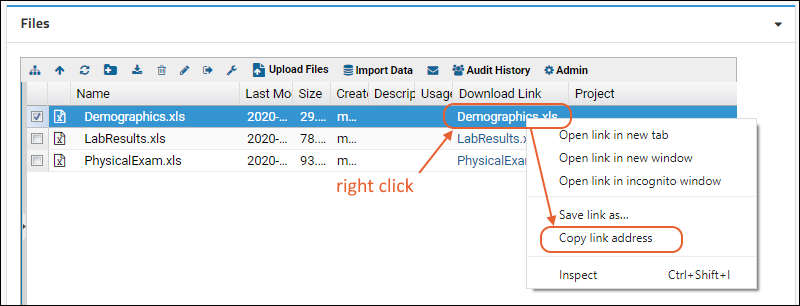

File Metadata

File Administrator Guide

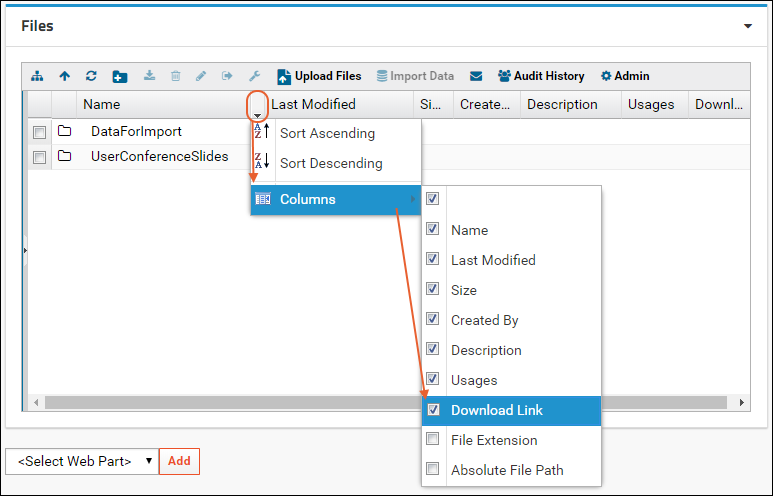

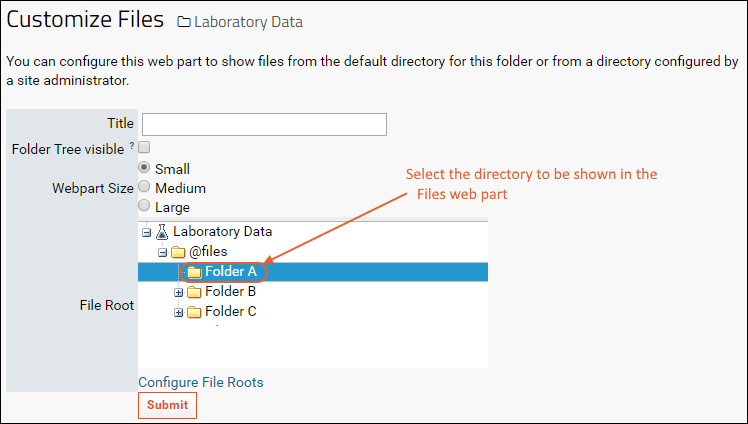

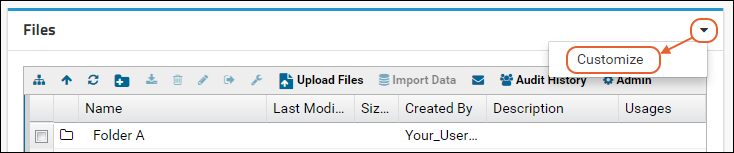

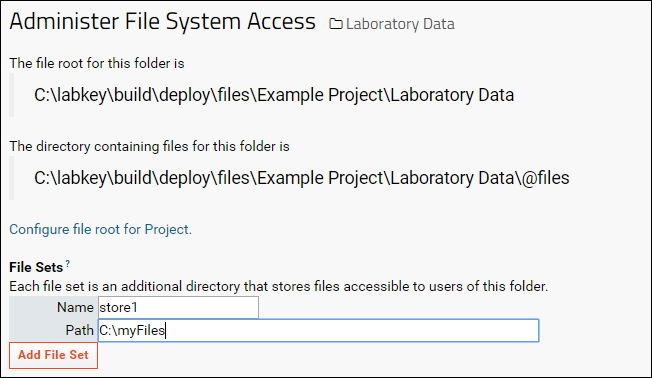

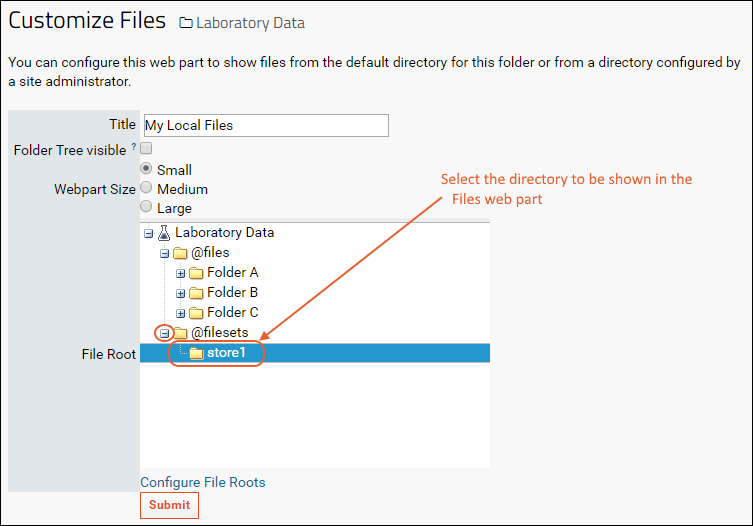

Files Web Part Administration

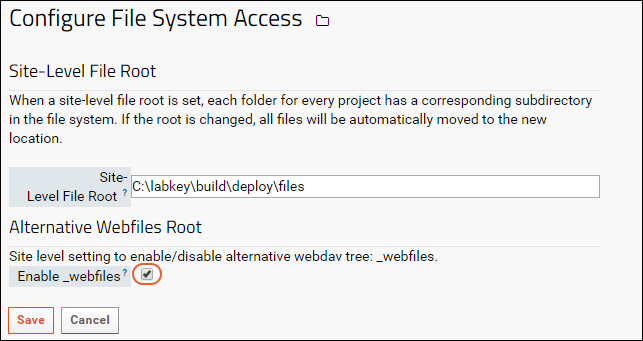

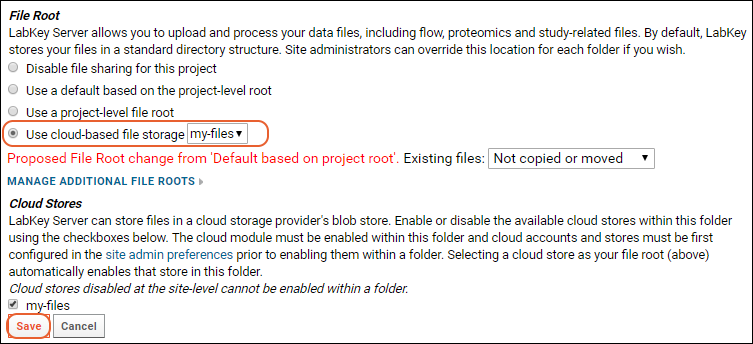

File Root Options

Troubleshoot Pipeline and Files

File Terminology

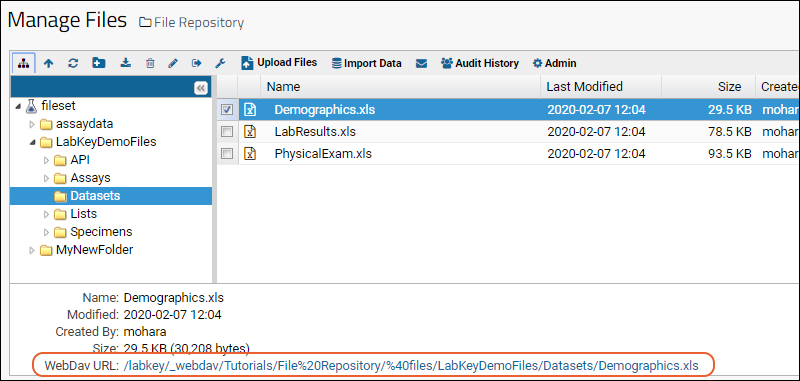

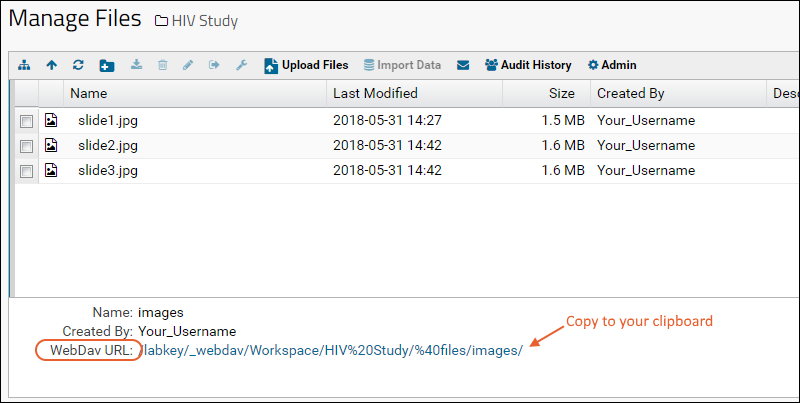

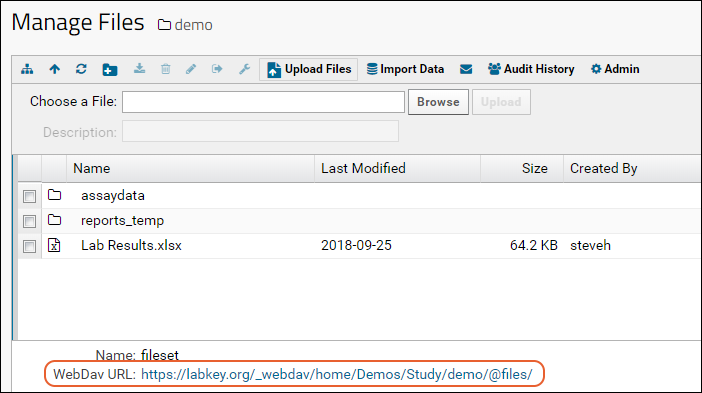

Transfer Files with WebDAV

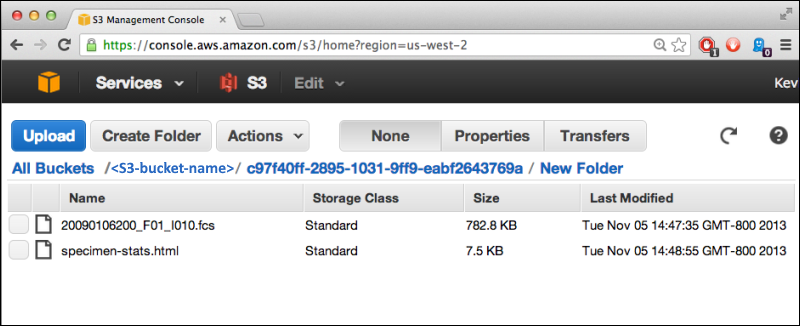

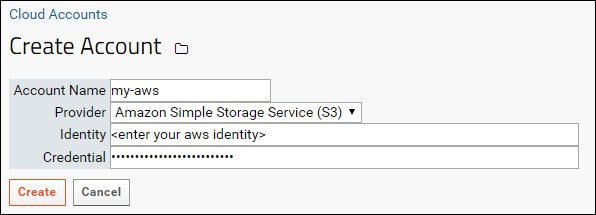

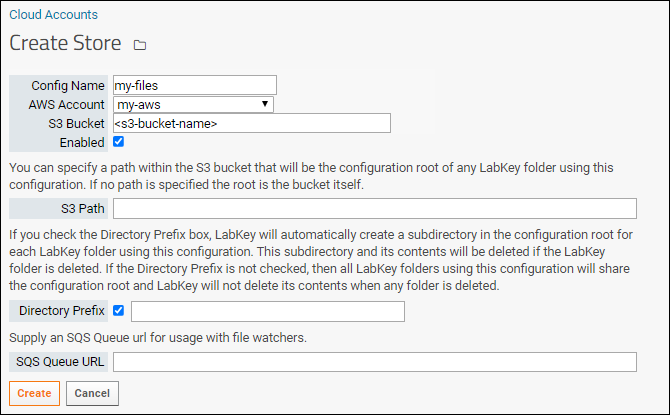

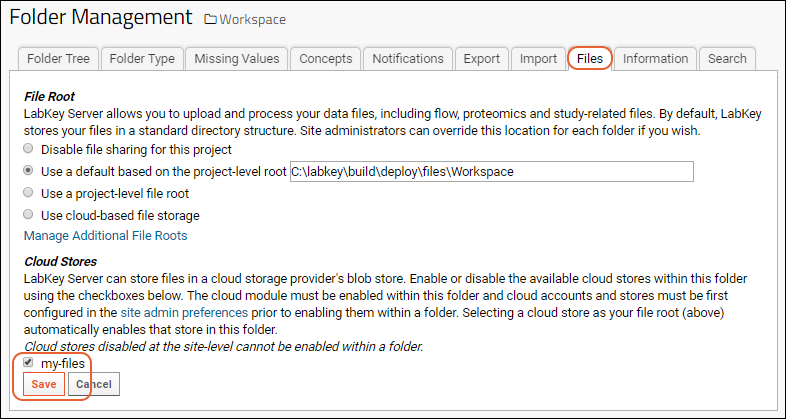

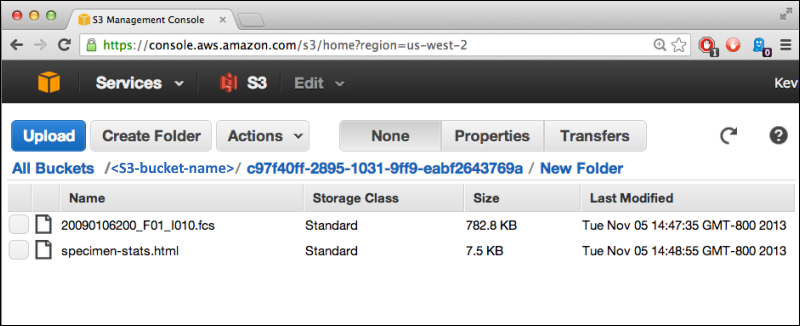

S3 Cloud Data Storage

AWS Identity Credentials

Configure Cloud Storage

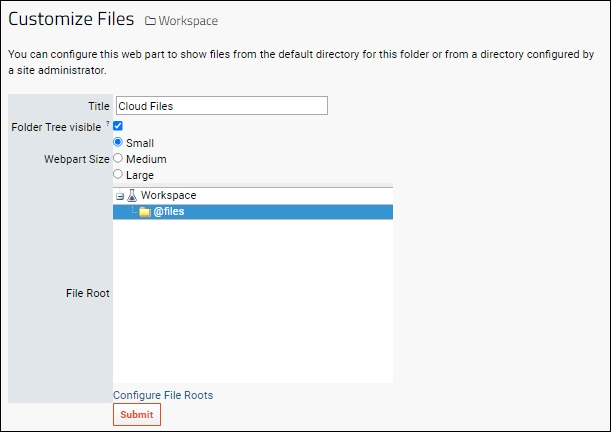

Use Files from Cloud Storage

Cloud Storage for File Watchers

Messages

Use Message Boards

Configure Message Boards

Object-Level Discussions

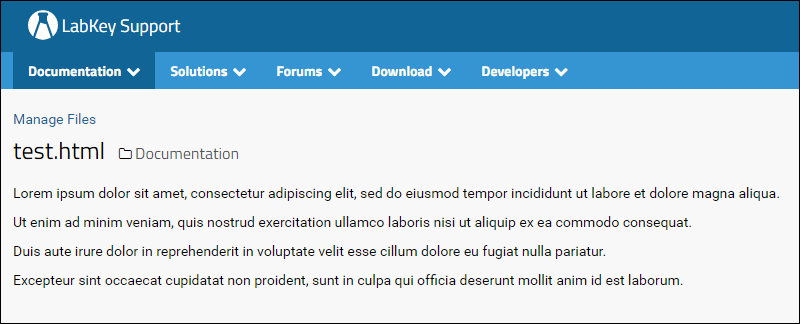

Wikis

Create a Wiki

Wiki Admin Guide

Manage Wiki Pages

Copy Wiki Pages

Wiki User Guide

Wiki Syntax

Wiki Syntax: Macros

Markdown Syntax

Special Wiki Pages

Embed Live Content in HTML Pages or Messages

Examples: Web Parts Embedded in Wiki Pages

Web Part Configuration Properties

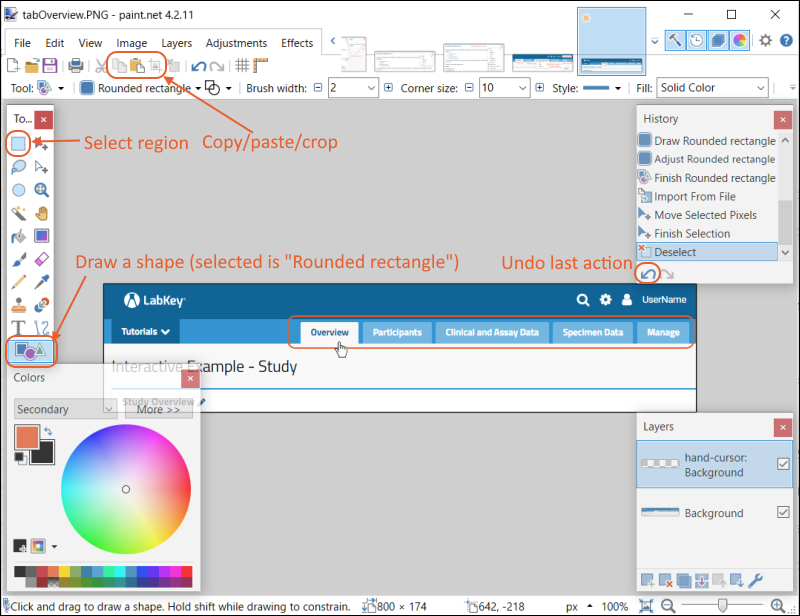

Add Screenshots to a Wiki

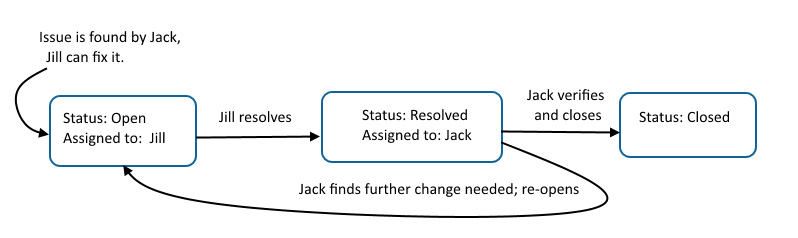

Issue/Bug Tracking

Tutorial: Issue Tracking

Using the Issue Tracker

Issue Tracker: Administration

Electronic Data Capture (EDC)

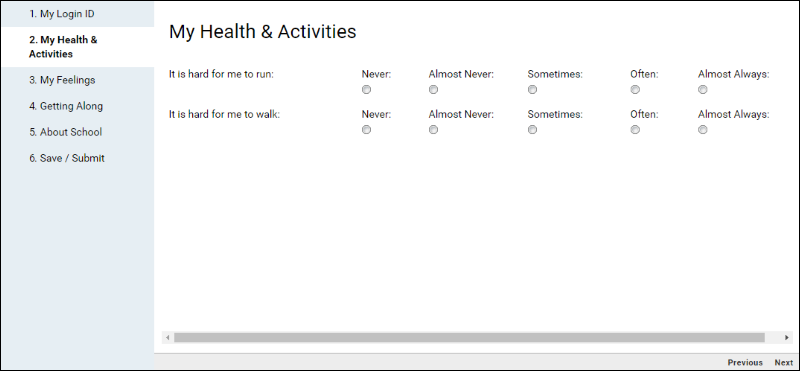

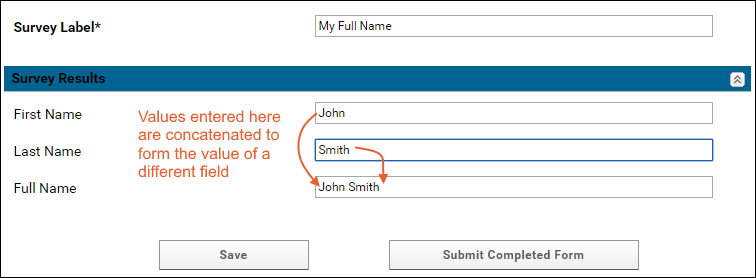

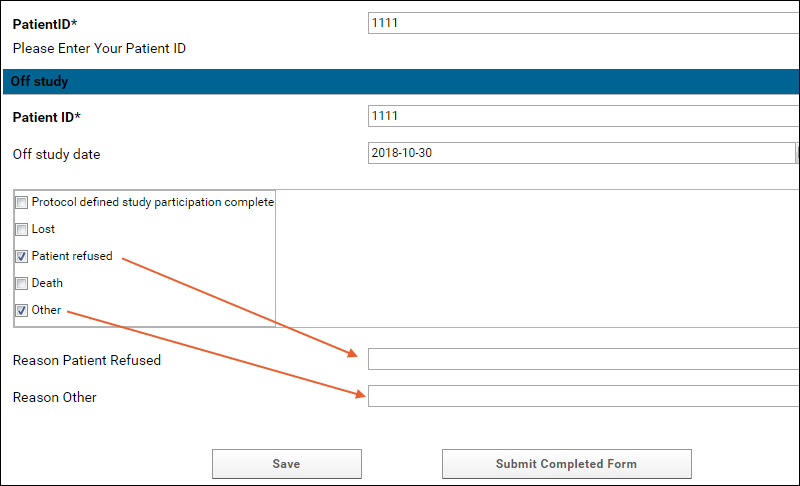

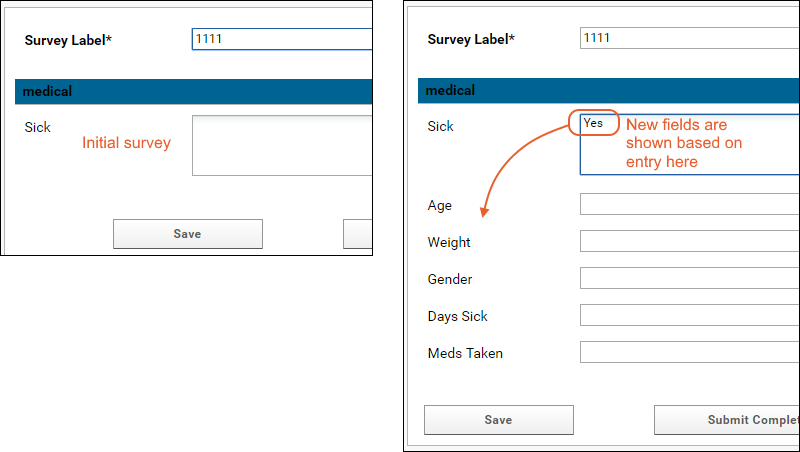

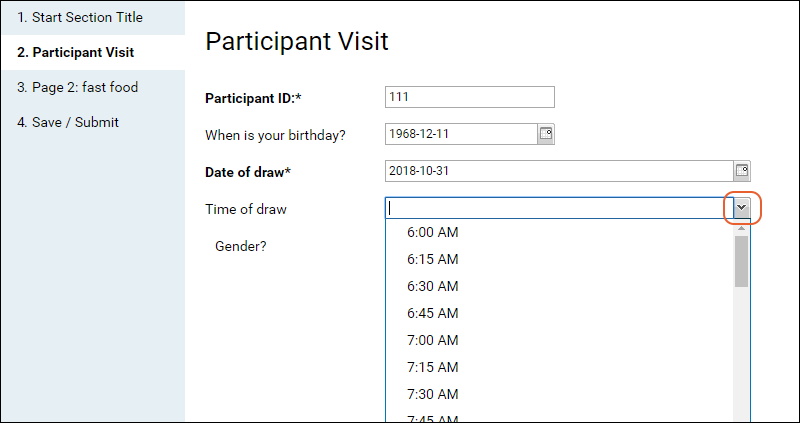

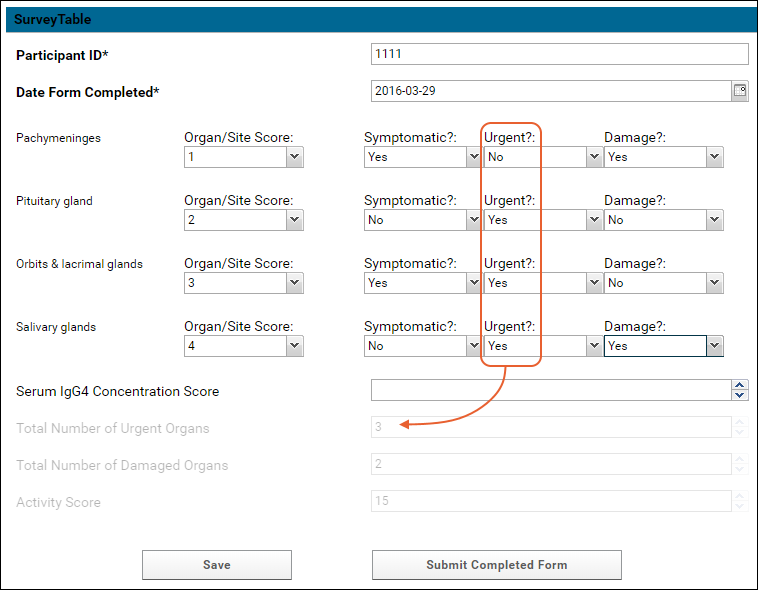

Tutorial: Survey Designer, Step 1

Tutorial: Survey Customization, Step 2

Survey Designer: Reference

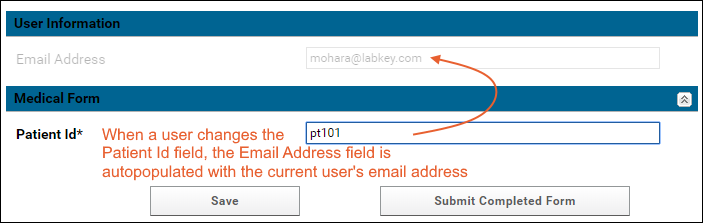

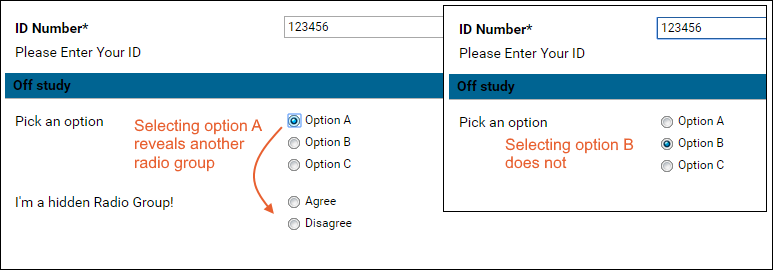

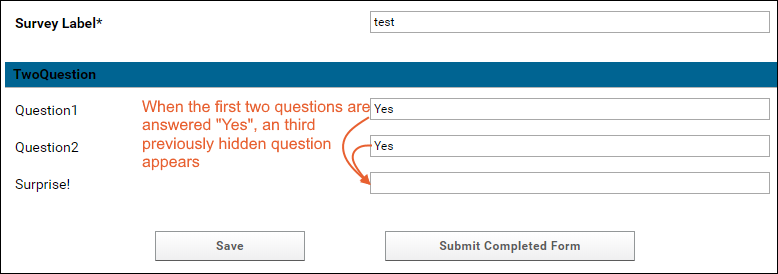

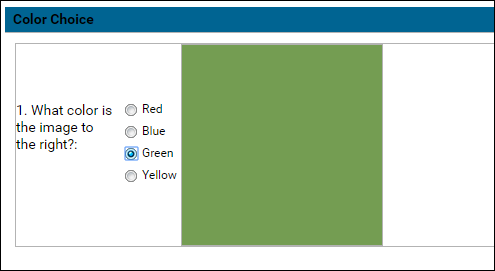

Survey Designer: Examples

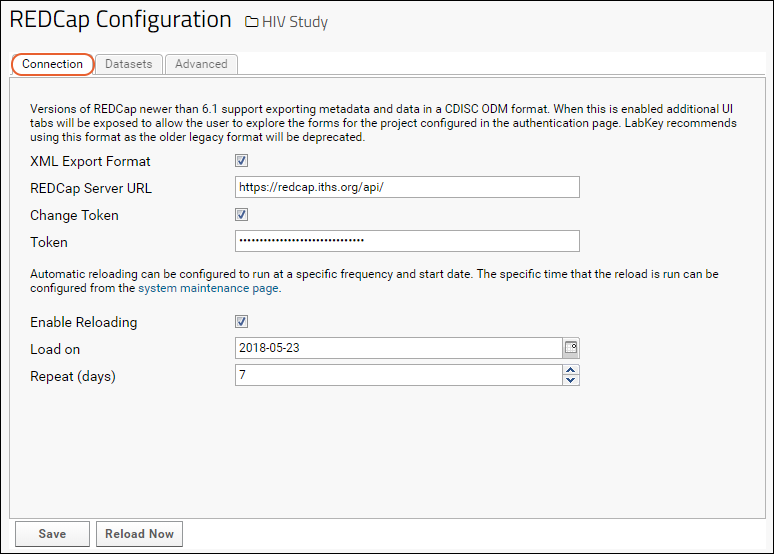

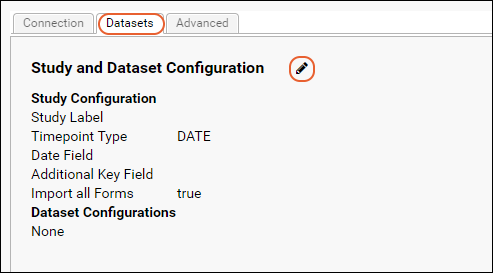

REDCap Survey Data Integration

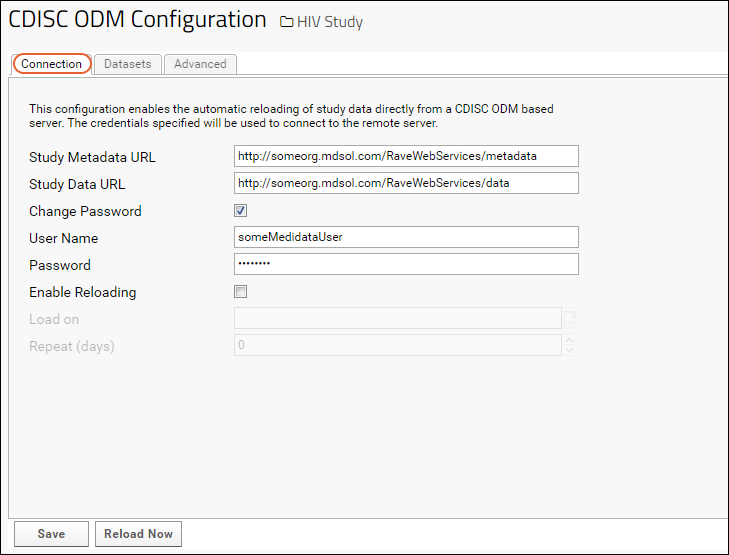

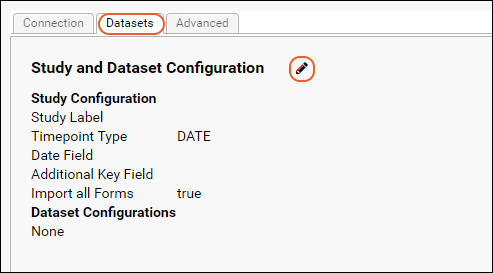

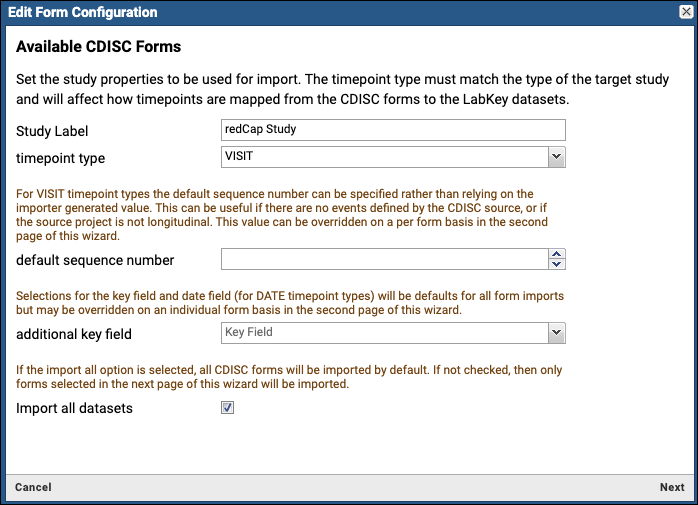

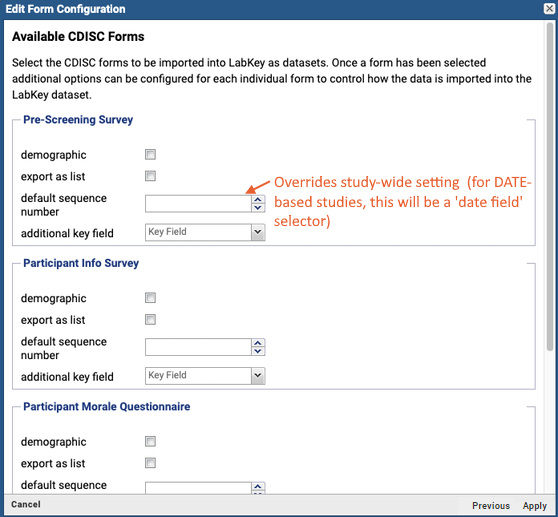

Medidata / CDISC ODM Integration

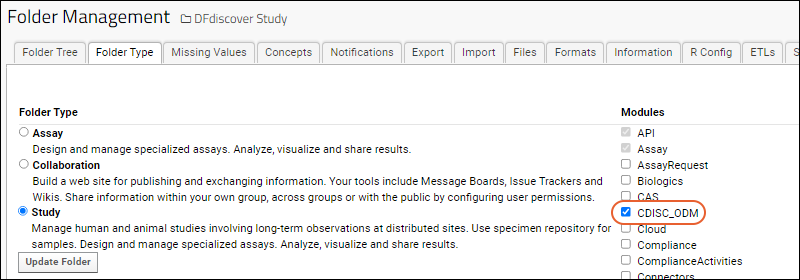

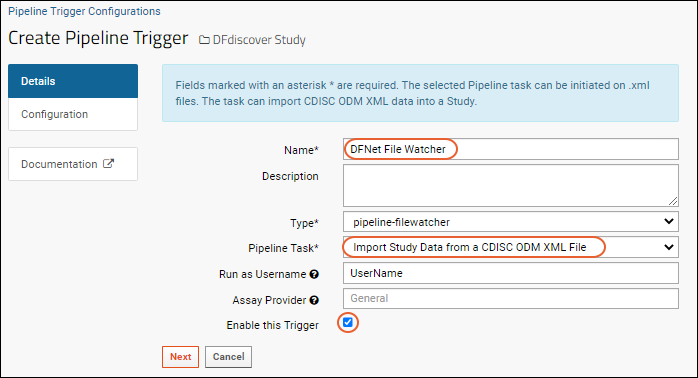

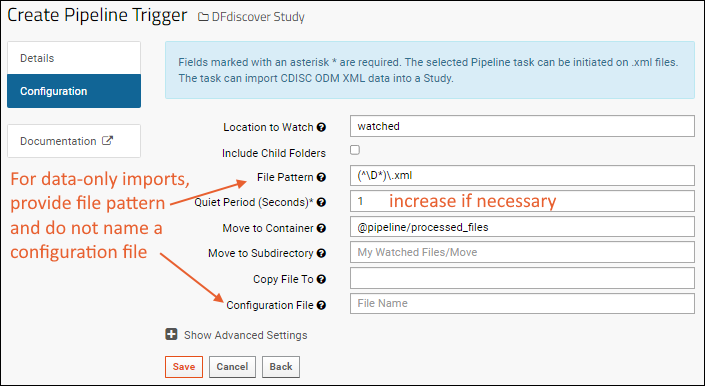

CDISC ODM XML Integration

Adjudication Module

Contact Information

How to Cite LabKey Server

Development

Set Up a Development Machine

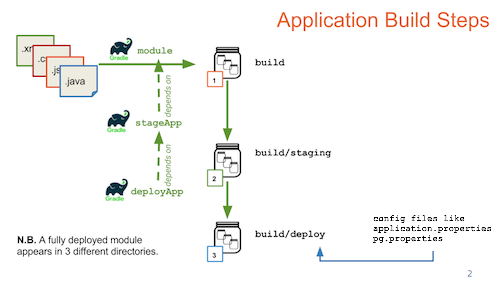

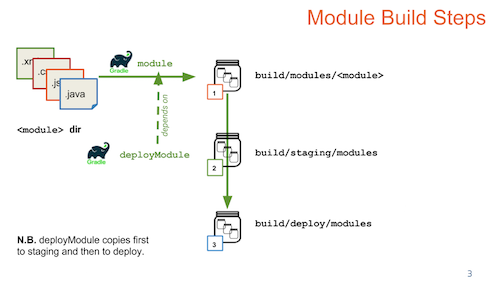

Gradle Build Overview

Build LabKey from Source

Build from Source (or Not)

Customize the Build

Node.js Build Dependency

Git Ignore Configurations

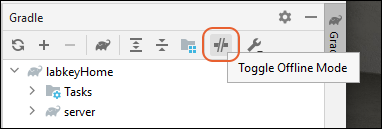

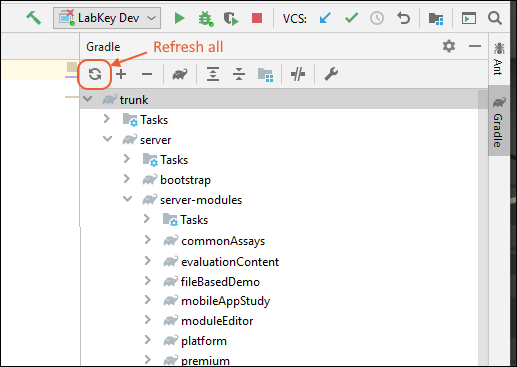

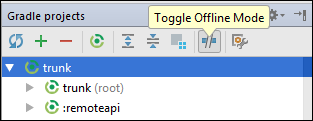

Build Offline

Gradle Cleaning

Gradle Properties

Gradle: How to Add Modules

Gradle: Declare Dependencies

Gradle Tips and Tricks

Premium Resource: Artifactory Set Up

Premium Resource: NPMRC Authentication File

Create Production Builds

Set up OSX for LabKey Development

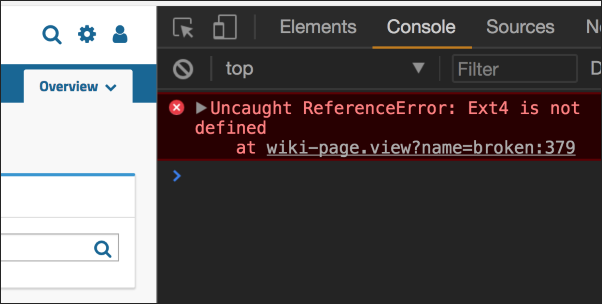

Troubleshoot Development Machines

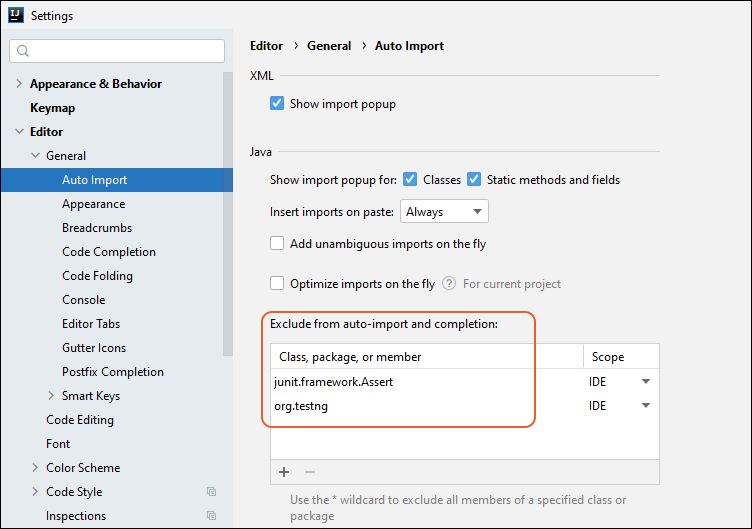

Premium Resource: IntelliJ Reference

Run in Development Mode

LabKey Client APIs

API Resources

JavaScript API

Tutorial: Create Applications with the JavaScript API

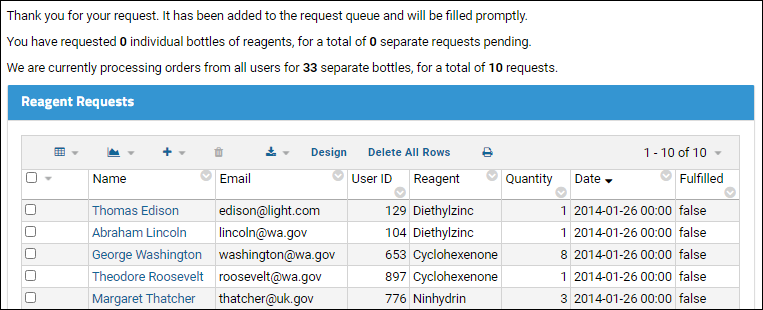

Step 1: Create Request Form

Step 2: Confirmation Page

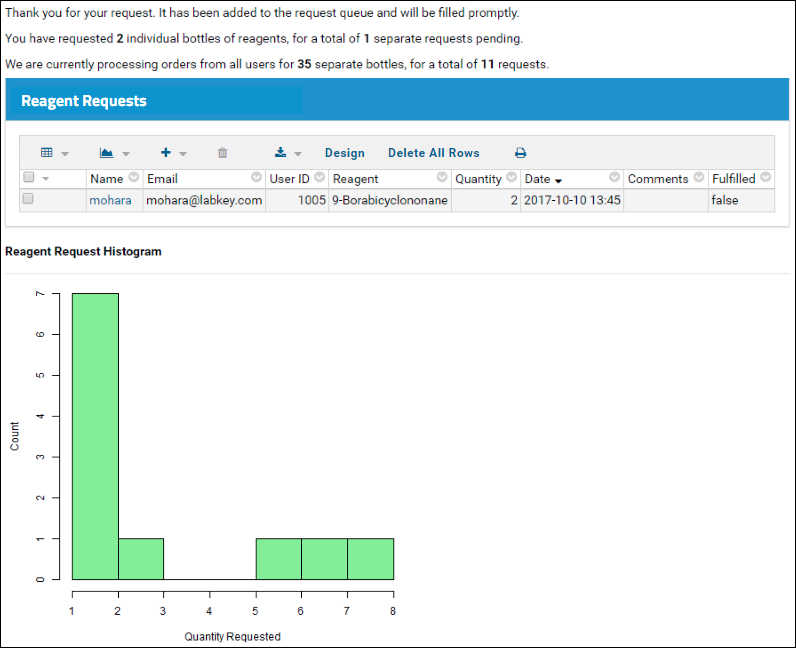

Step 3: R Histogram (Optional)

Step 4: Summary Report For Managers

Repackaging the App as a Module

Tutorial: Use URLs to Pass Data and Filter Grids

Choose Parameters

Show Filtered Grid

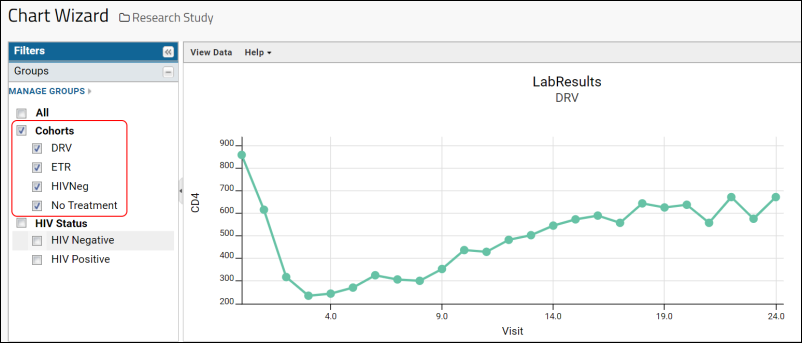

Tutorial: Visualizations in JavaScript

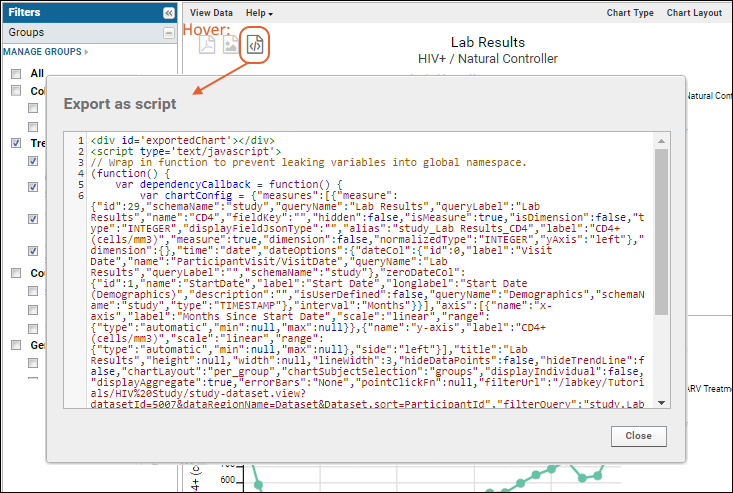

Step 1: Export Chart as JavaScript

Step 2: Embed the Script in a Wiki

Modify the Exported Chart Script

Display the Chart with Minimal UI

JavaScript API Examples

Premium Resource: JavaScript Security API Examples

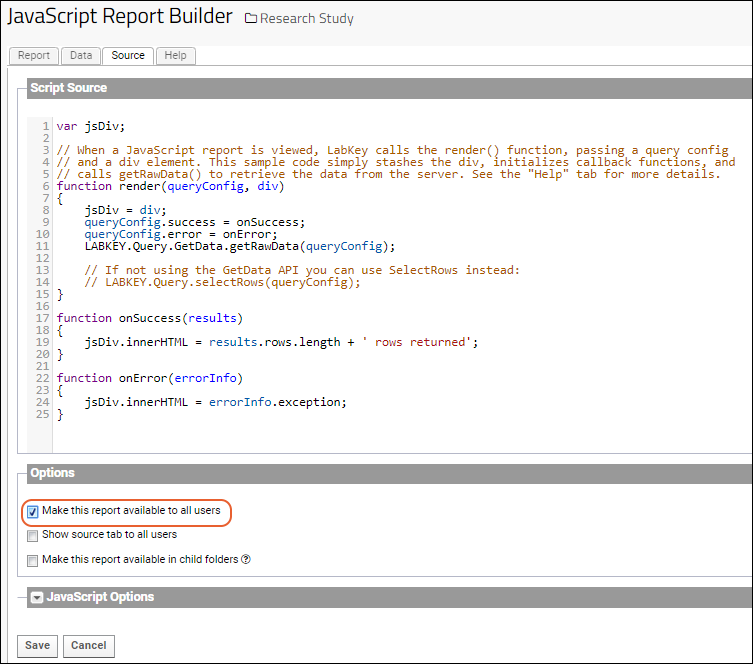

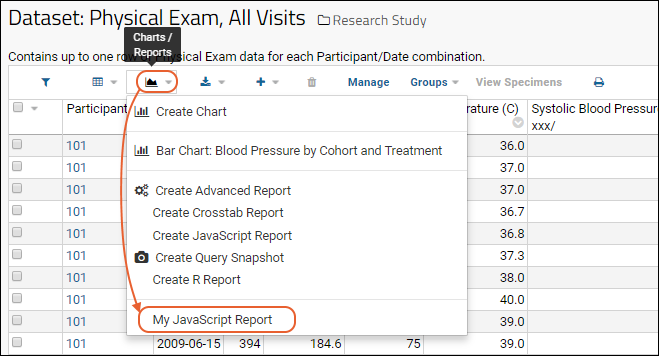

JavaScript Reports

Export Data Grid as a Script

Premium Resource: Custom Participant View

Example: Master-Detail Pages

Custom Button Bars

Premium Resource: Invoke JavaScript from Custom Buttons

Premium Resource: Custom Buttons for Large Grids

Premium Resource: Type-Ahead Entry Forms

Premium Resource: Sample Status Demo

Insert into Audit Table via API

Programming the File Repository

Vocabulary Domains

Declare Dependencies

Using ExtJS with LabKey

Naming & Documenting JavaScript APIs

How to Generate JSDoc

JsDoc Annotation Guidelines

Naming Conventions for JavaScript APIs

Java API

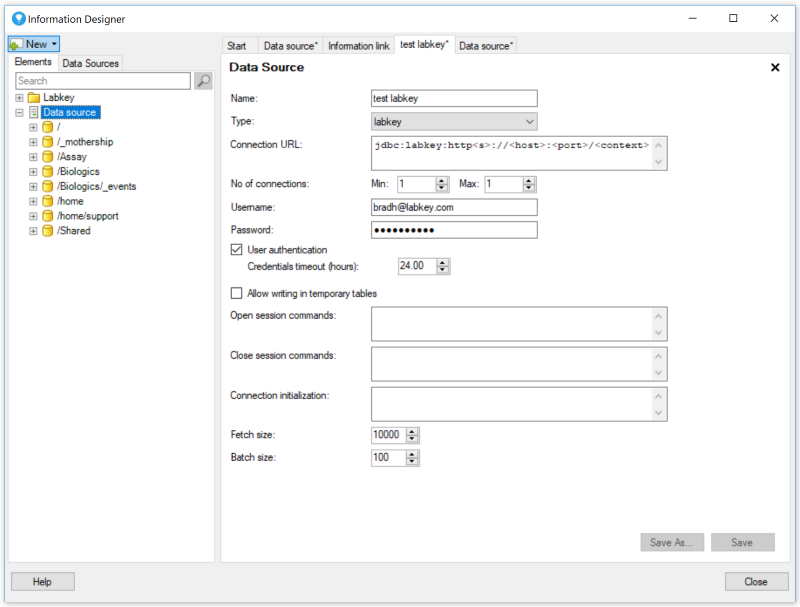

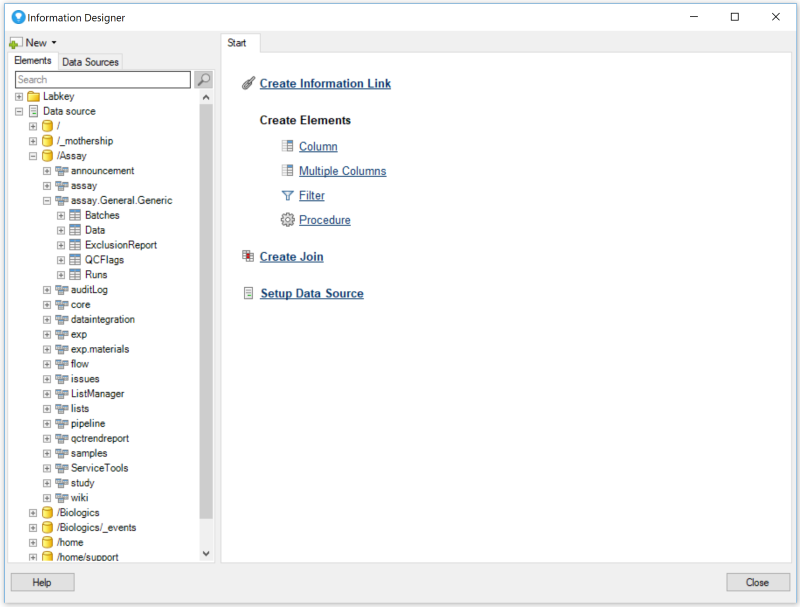

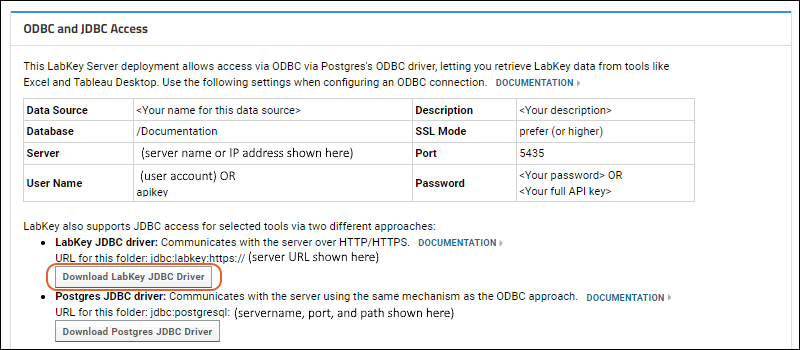

LabKey JDBC Driver

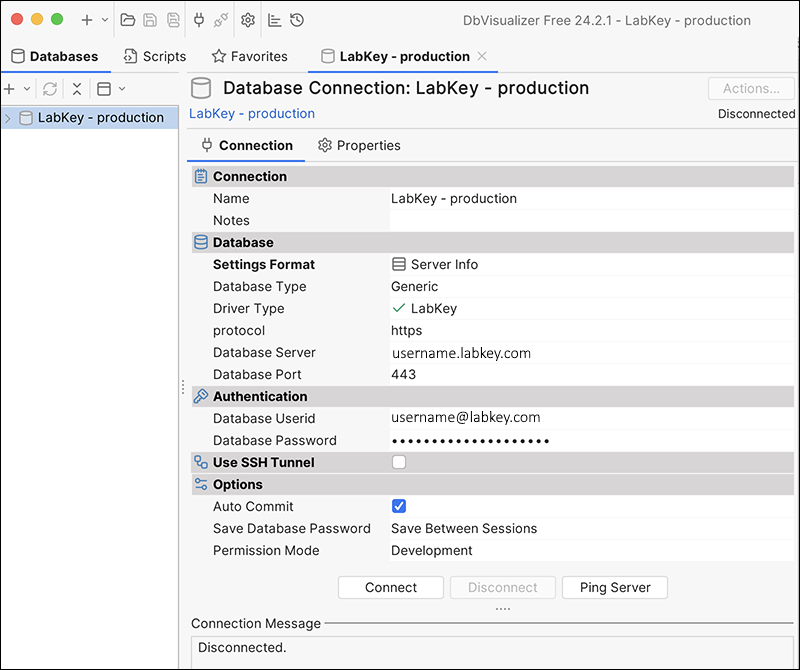

Integration with DBVisualizer

Security Bulk Update via API

Perl API

Python API

Premium Resource: Python API Demo

Premium Resource: Download a File with Python

Rlabkey Package

Troubleshoot Rlabkey

Premium Resource: Example Code for QC Reporting

SAS Client API Library

SAS Setup

SAS Macros

SAS Demos

HTTP Interface

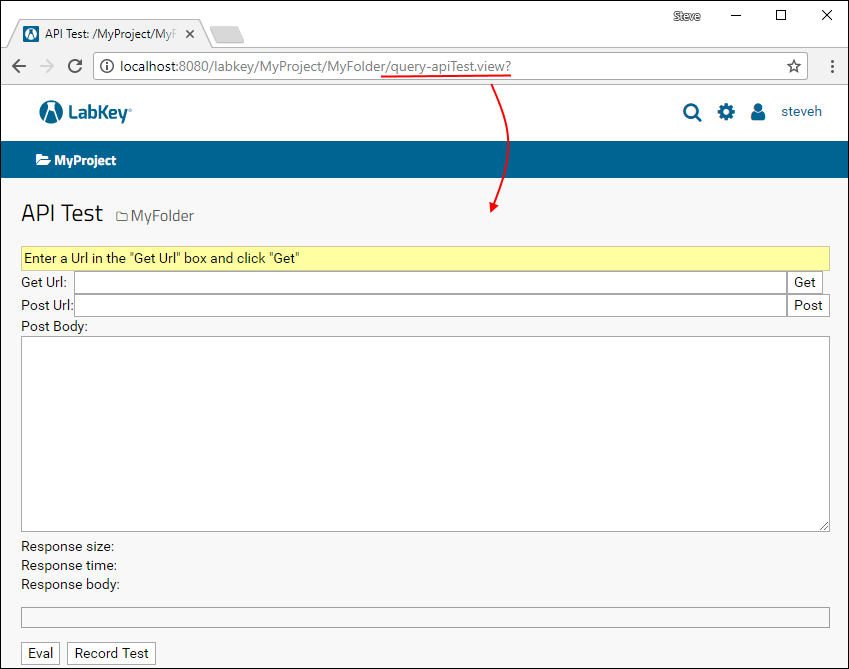

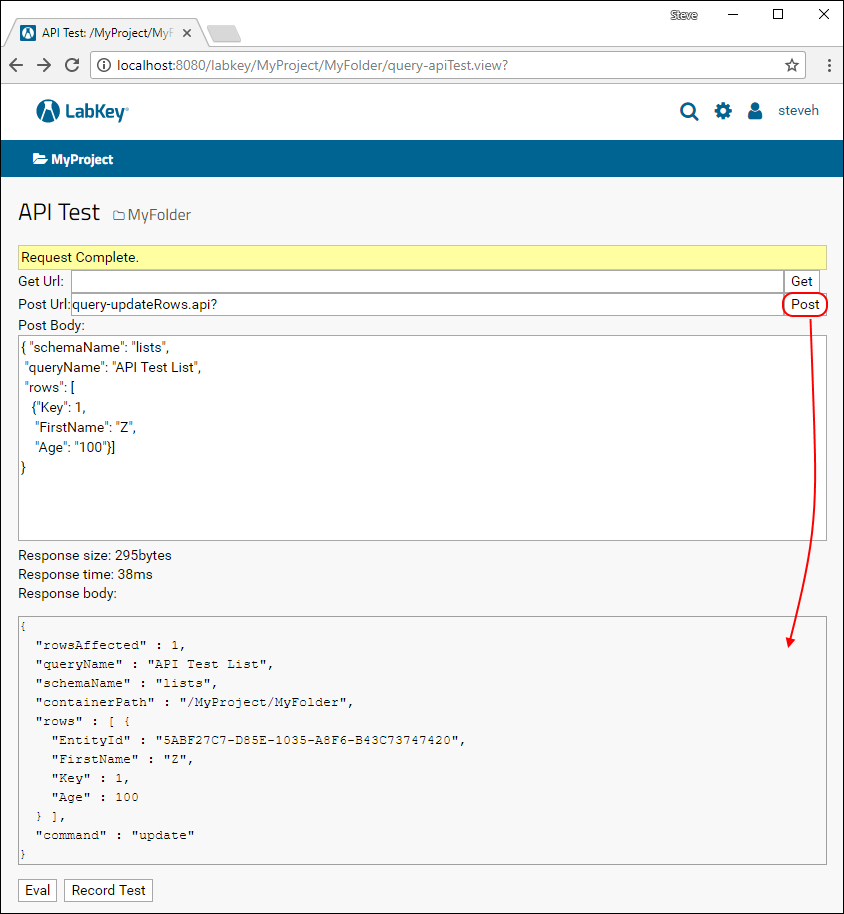

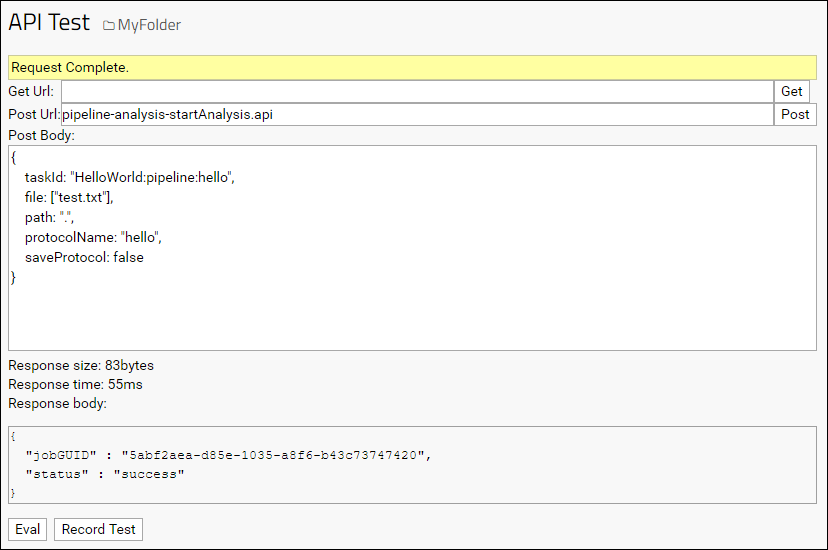

Examples: Controller Actions / API Test Page

Example: Access APIs from Perl

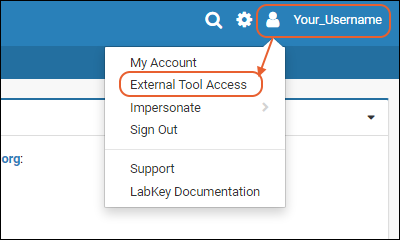

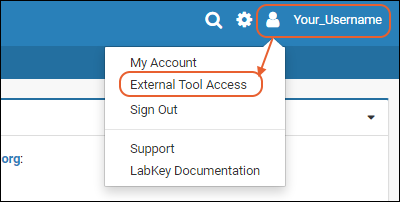

External Tool Access

API Keys

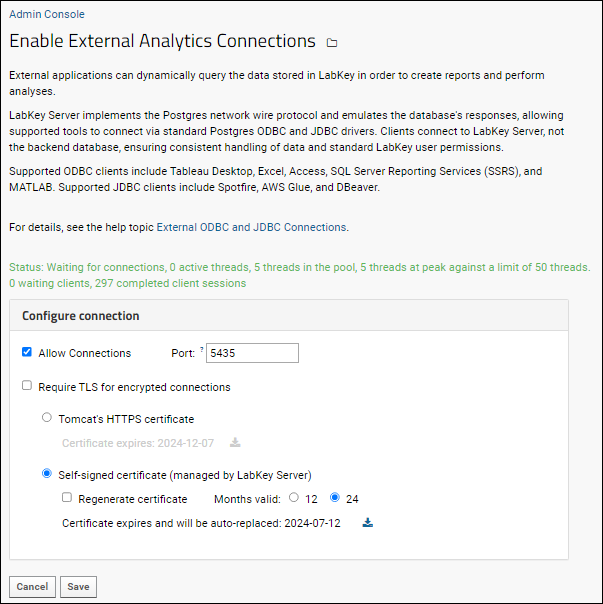

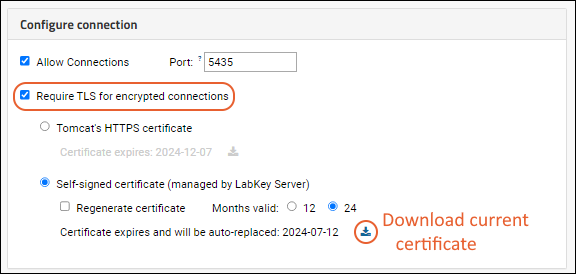

External ODBC and JDBC Connections

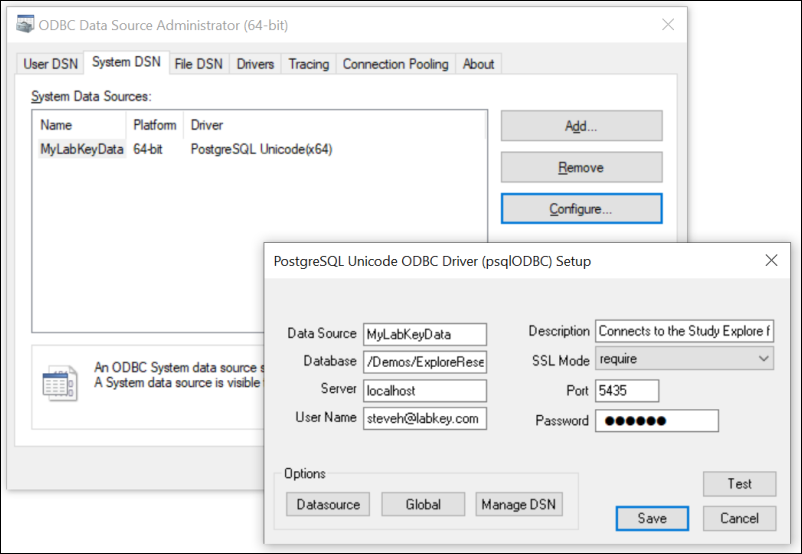

ODBC: Configure Windows Access

ODBC: Configure OSX/Mac Access

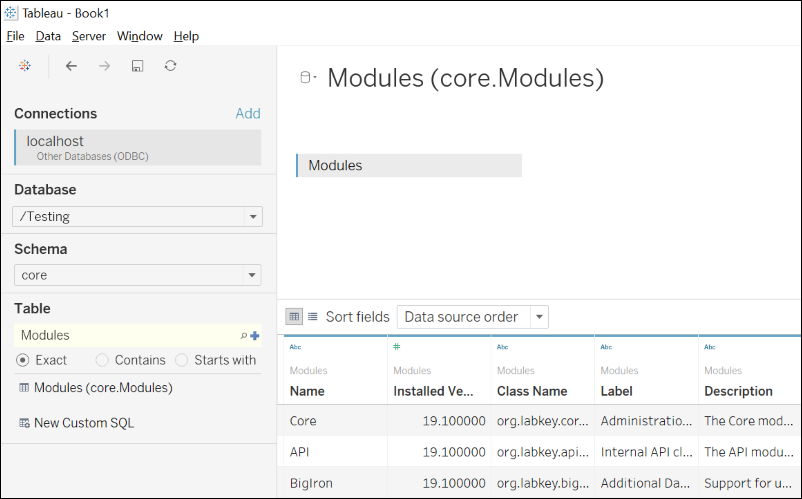

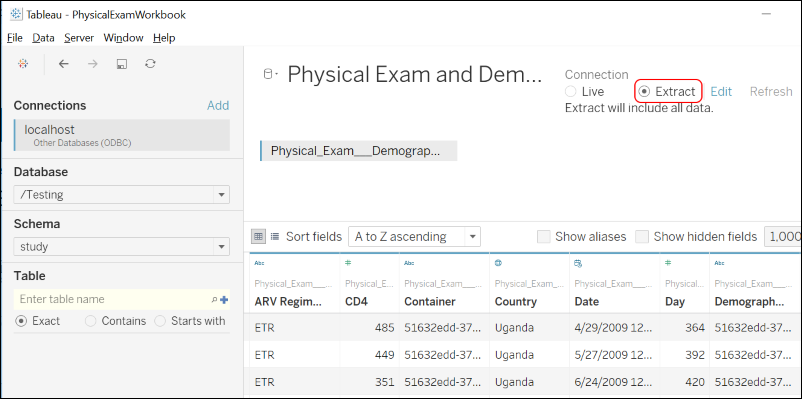

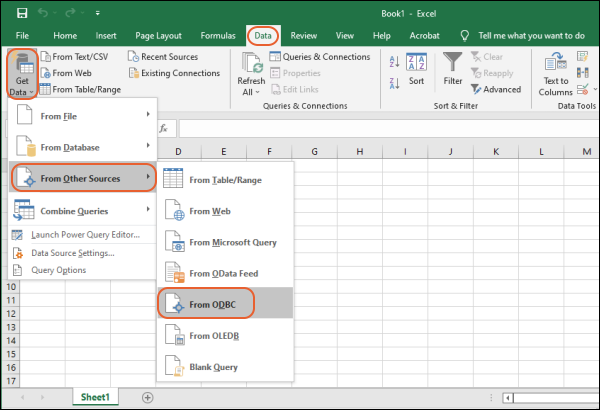

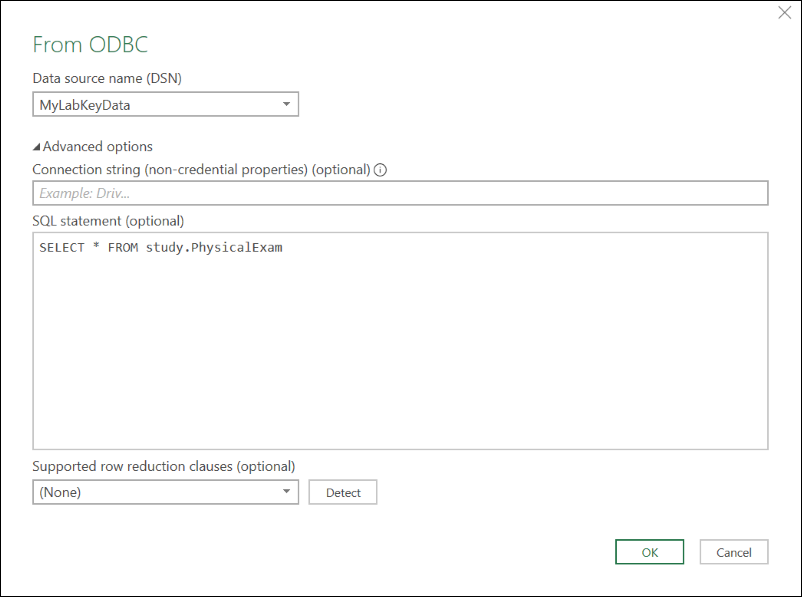

ODBC: External Tool Connections

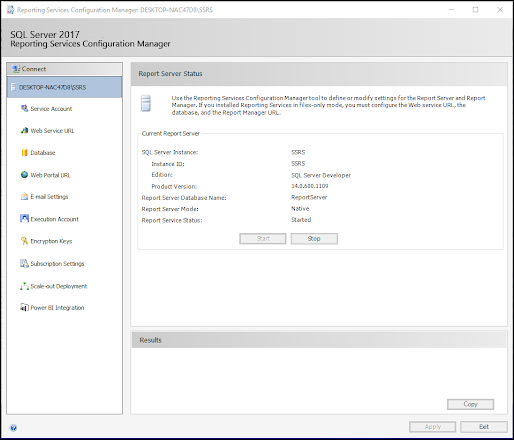

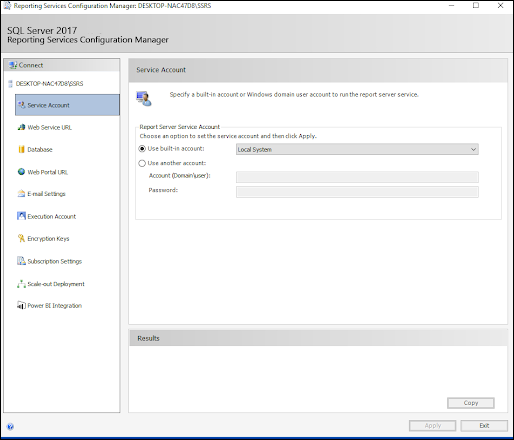

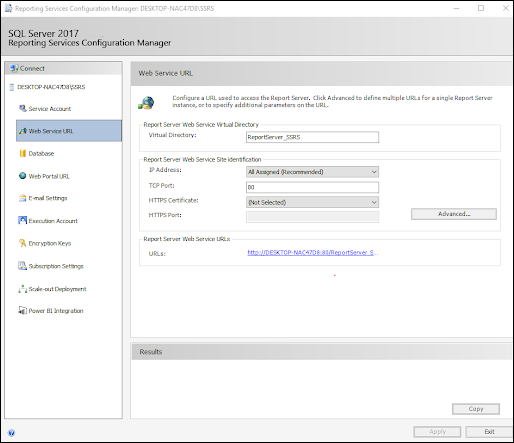

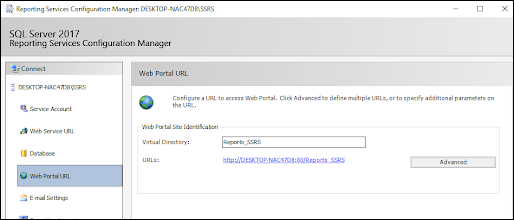

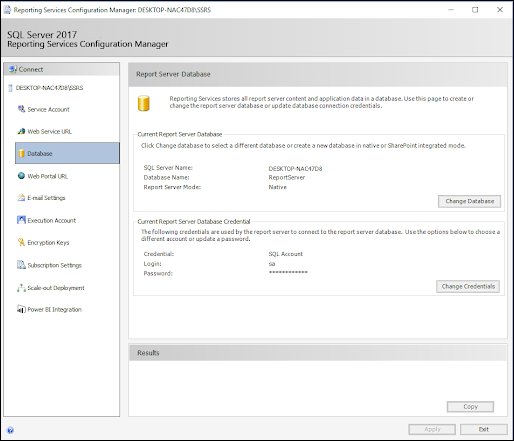

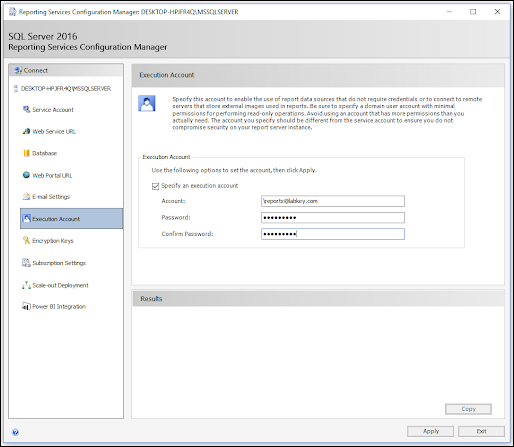

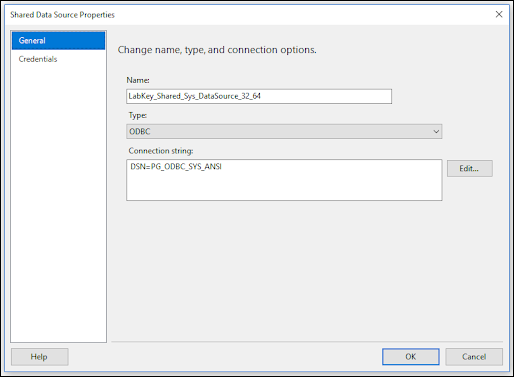

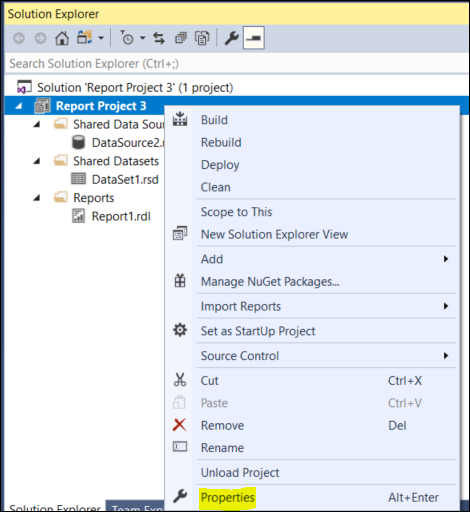

ODBC: Using SQL Server Reporting Service (SSRS)

Compliant Access via Session Key

Develop Modules

Tutorial: Hello World Module

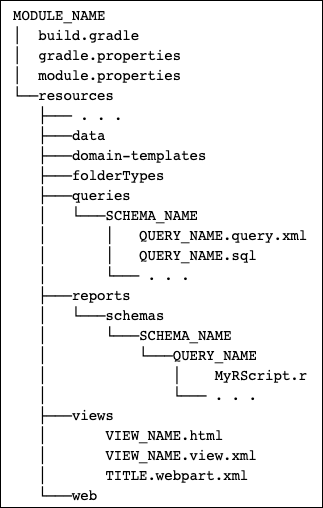

Map of Module Files

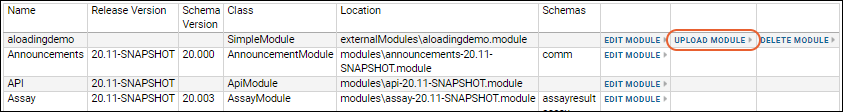

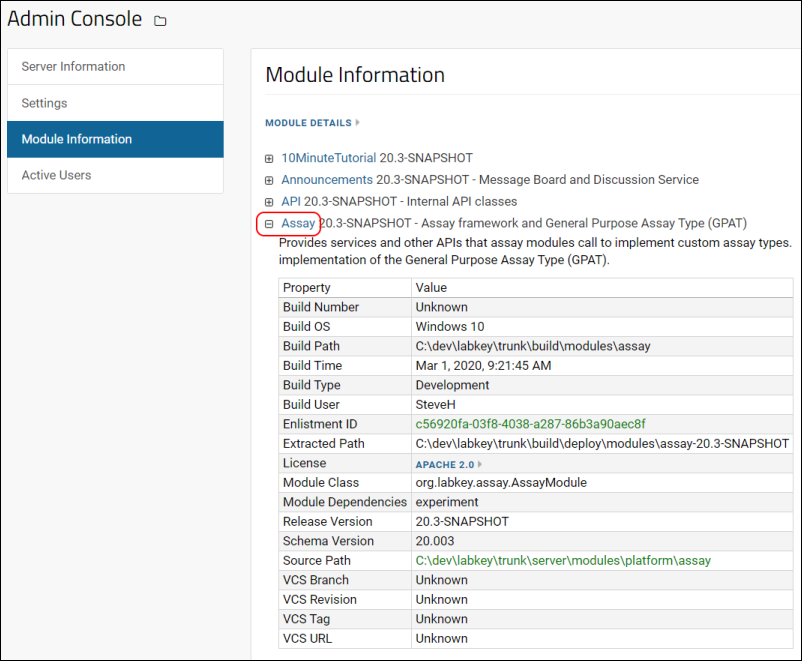

Module Loading Using the Server UI

Module Editing Using the Server UI

Example Modules

Tutorial: File Based Module Resources

Module Directories Setup

Module Query Views

Module SQL Queries

Module R Reports

Module HTML and Web Parts

Modules: JavaScript Libraries

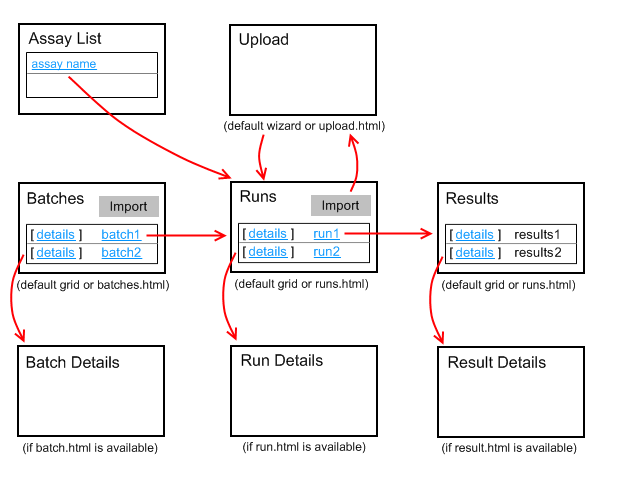

Modules: Assay Types

Assay Custom Domains

Assay Custom Details View

Loading Custom Views

Example Assay JavaScript Objects

Assay Query Metadata

Customize Batch Save Behavior

SQL Scripts for Module-Based Assays

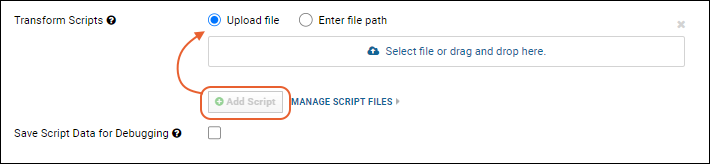

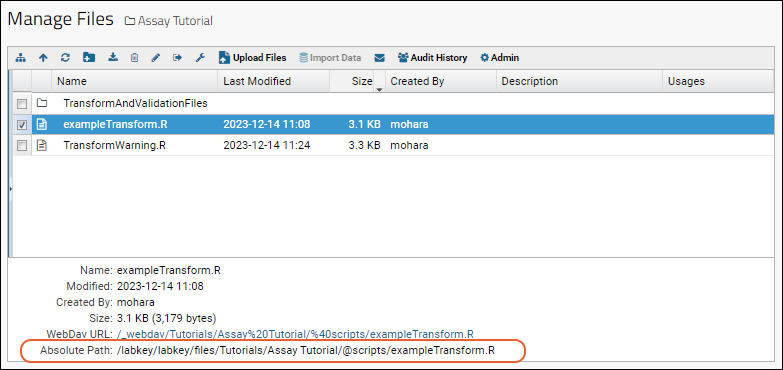

Transform Scripts

Example Workflow: Develop a Transformation Script

Example Transformation Scripts (perl)

Transformation Scripts in R

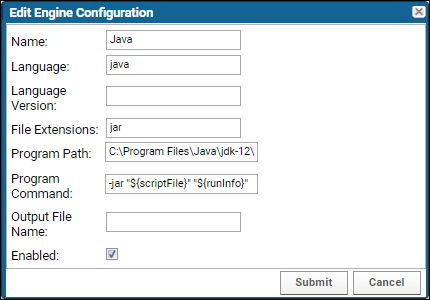

Transformation Scripts in Java

Transformation Scripts for Module-based Assays

Premium Resource: Python Transformation Script

Premium Resource: Create Samples with Transformation Script

Run Properties Reference

Transformation Script Substitution Syntax

Warnings in Transformation Scripts

Modules: Folder Types

Modules: Query Metadata

Modules: Report Metadata

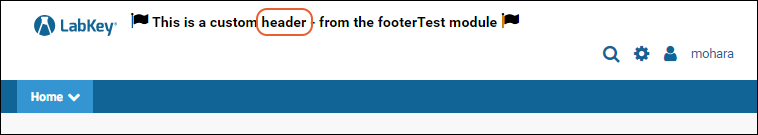

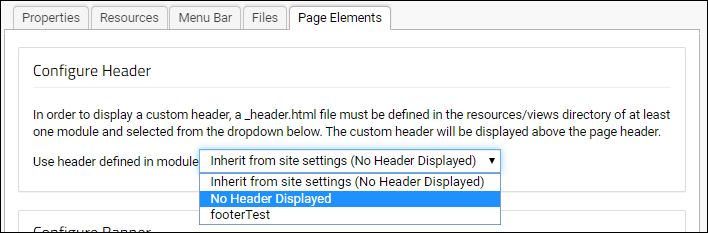

Modules: Custom Header

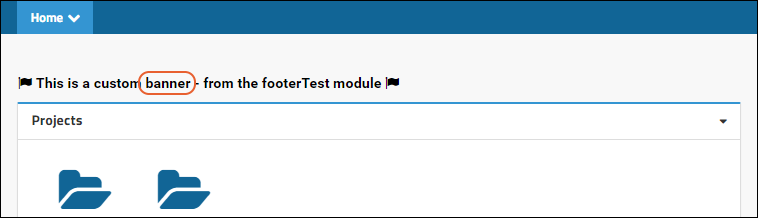

Modules: Custom Banner

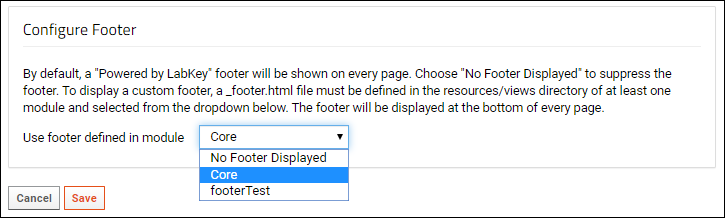

Modules: Custom Footer

Modules: SQL Scripts

Modules: SQL Script Conventions

Modules: Domain Templates

Java Modules

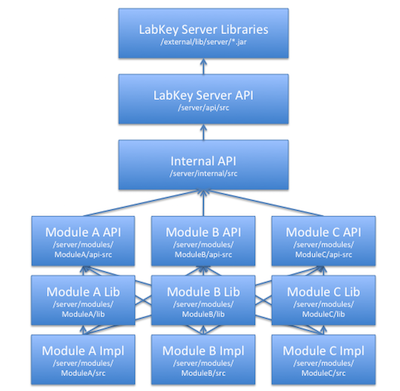

Module Architecture

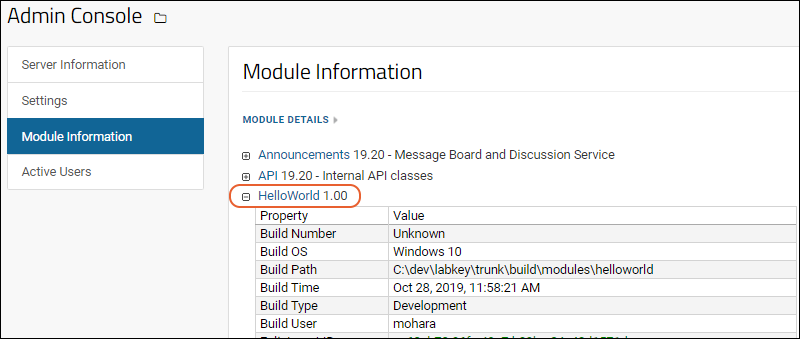

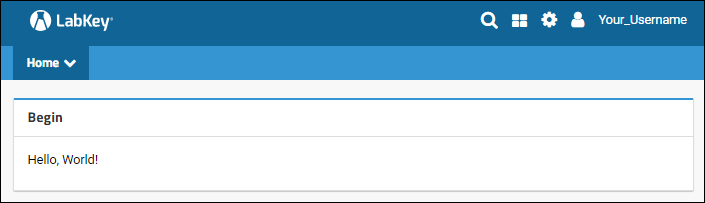

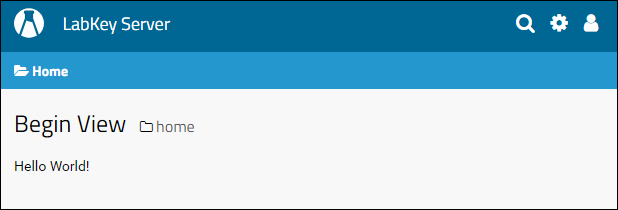

Tutorial: Hello World Java Module

LabKey Containers

Implementing Actions and Views

Implementing API Actions

Integrating with the Pipeline Module

Integrating with the Experiment API

Using SQL in Java Modules

Database Development Guide

HotSwapping Java classes

Modules: Custom Login Page

Modules: Custom Site Welcome Page

ETL: Extract Transform Load

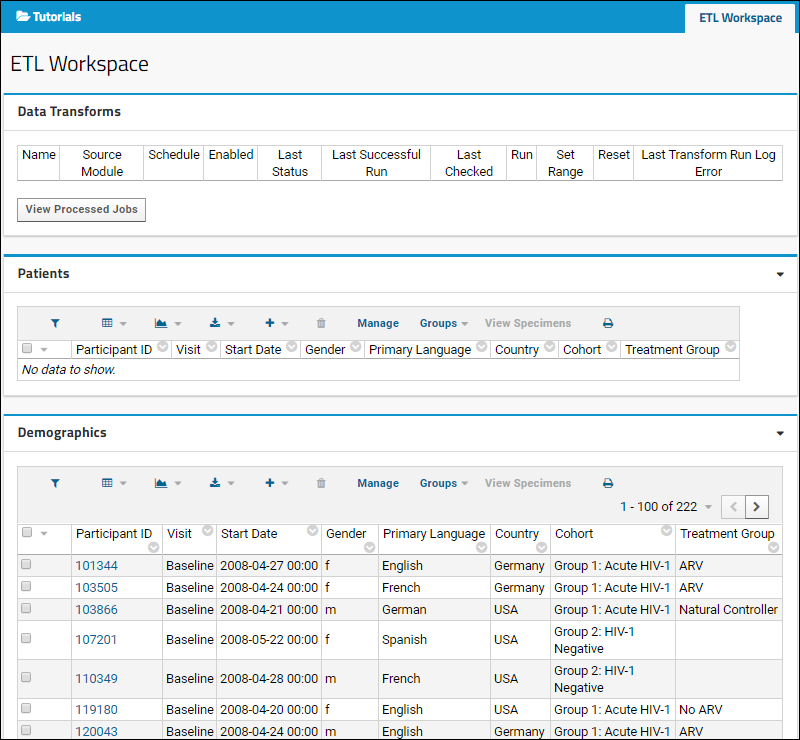

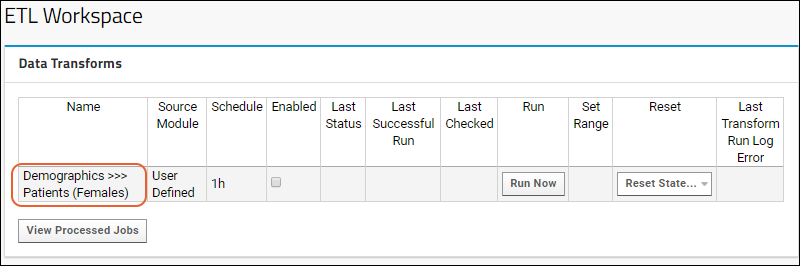

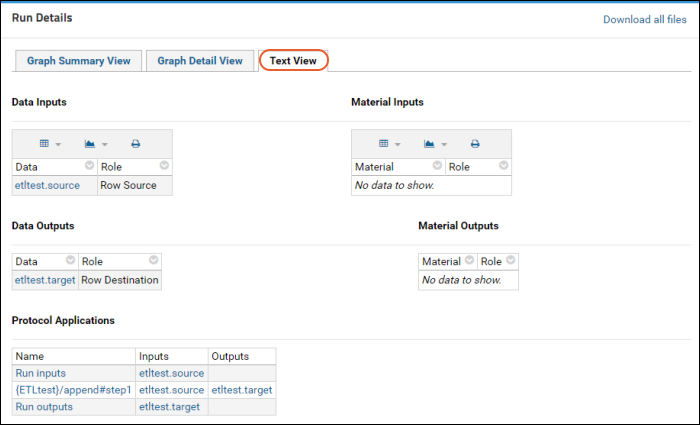

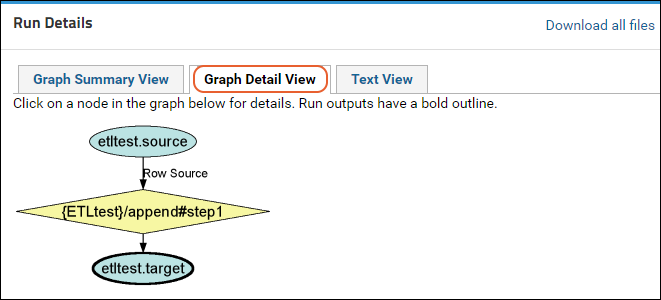

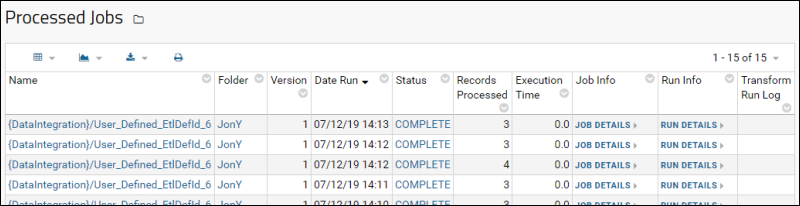

Tutorial: Extract-Transform-Load (ETL)

ETL Tutorial: Set Up

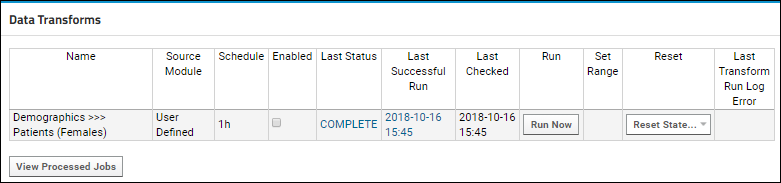

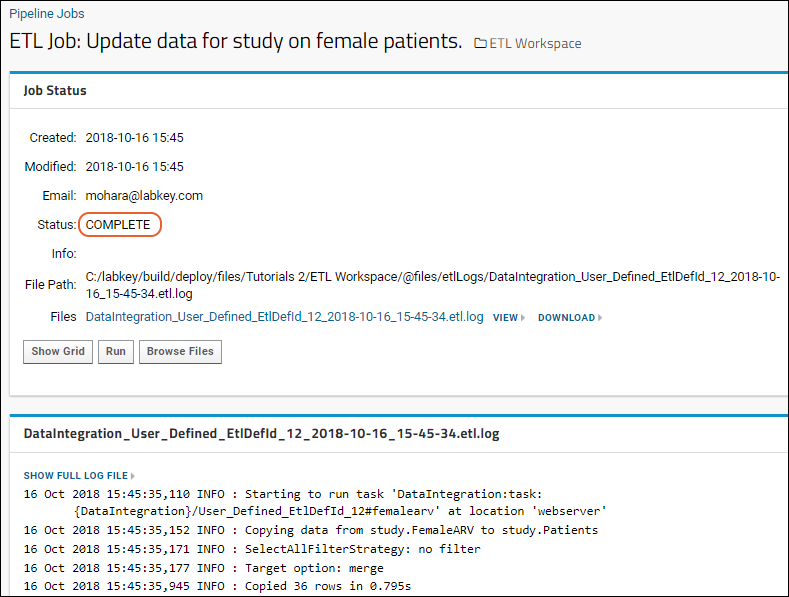

ETL Tutorial: Run an ETL Process

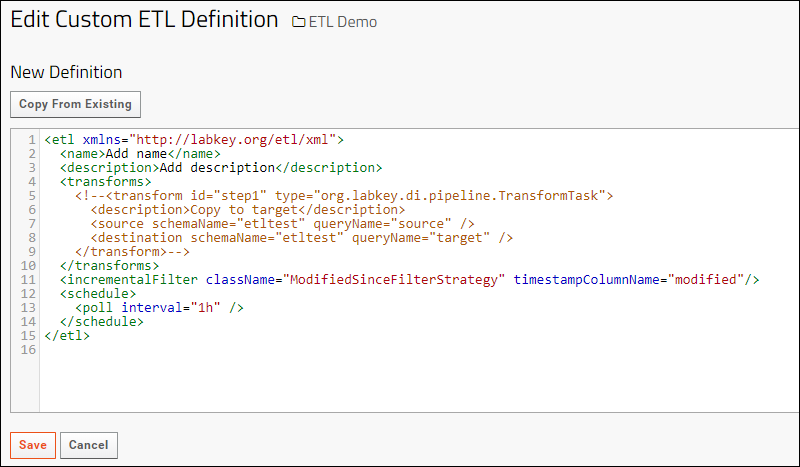

ETL Tutorial: Create a New ETL Process

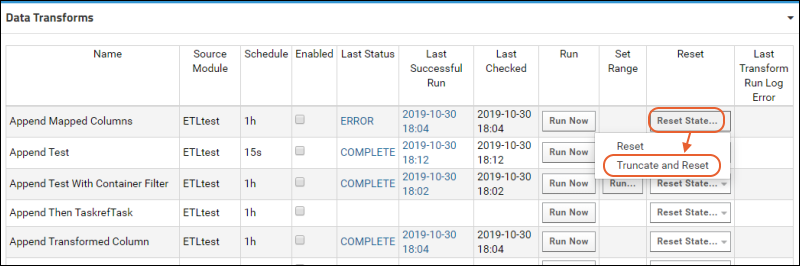

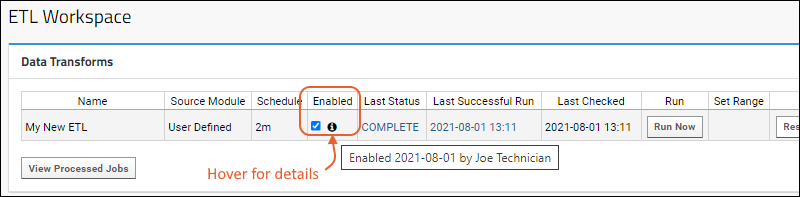

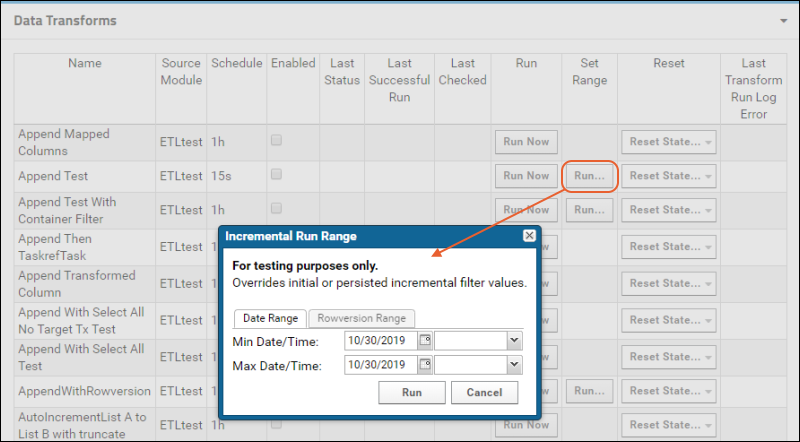

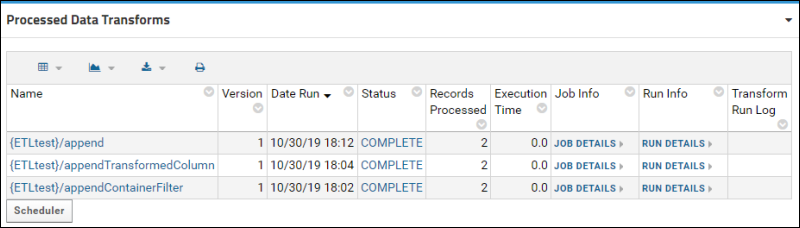

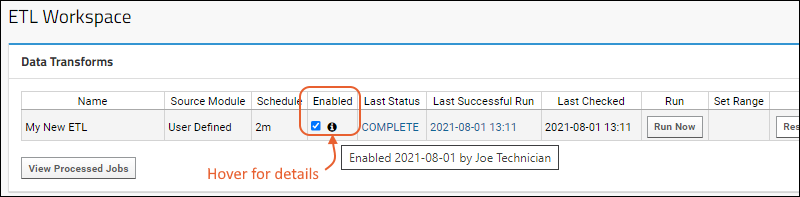

ETL: User Interface

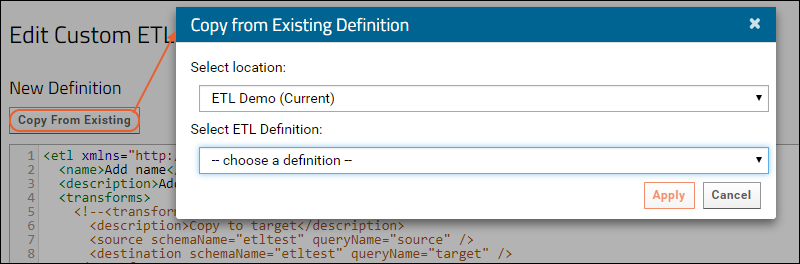

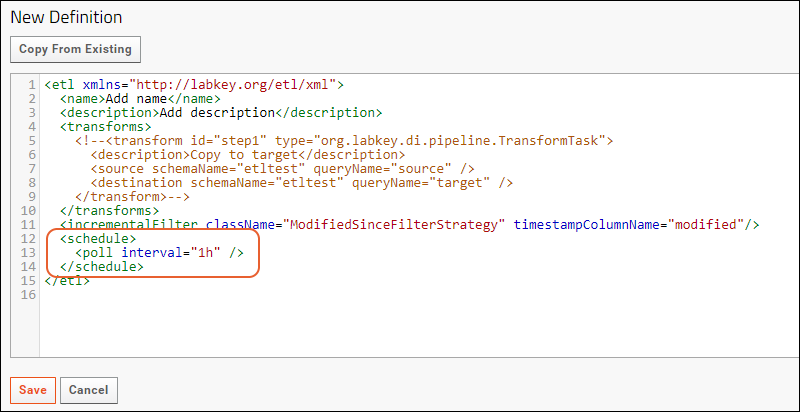

ETL: Create a New ETL

ETL: Planning

ETL: Attributes

ETL: Target Options

ETL: Column Mapping

ETL: Transform Types and Tasks

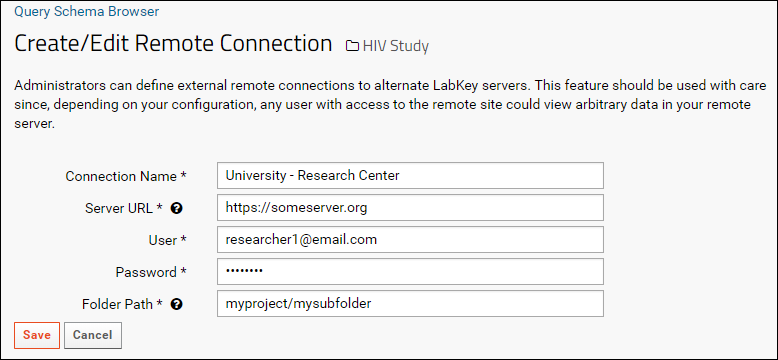

ETL: Manage Remote Connections

ETL: Filter Strategies

ETL: Schedules

ETL: Transactions

ETL: Queuing ETL Processes

ETL: Stored Procedures

ETL: Stored Procedures in PostgreSQL

ETL: Check For Work From a Stored Procedure

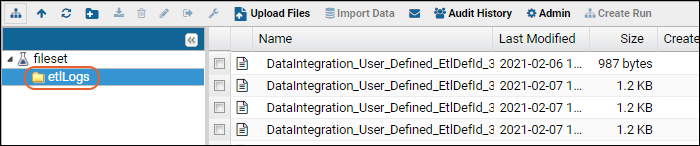

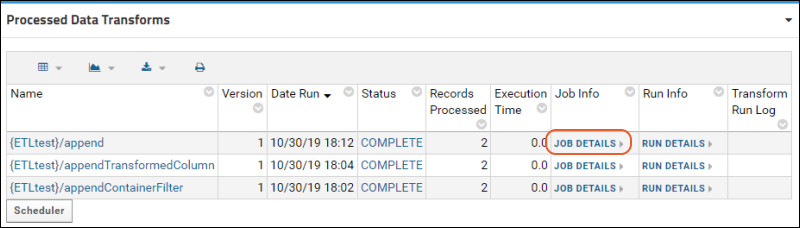

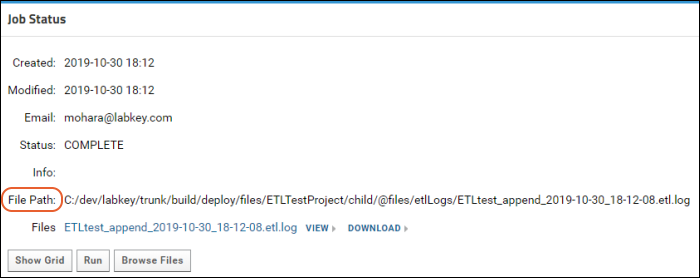

ETL: Logs and Error Handling

ETL: Examples

ETL: Module Structure

Premium Resource: ETL Best Practices

Deploy Modules to a Production Server

Main Credits Page

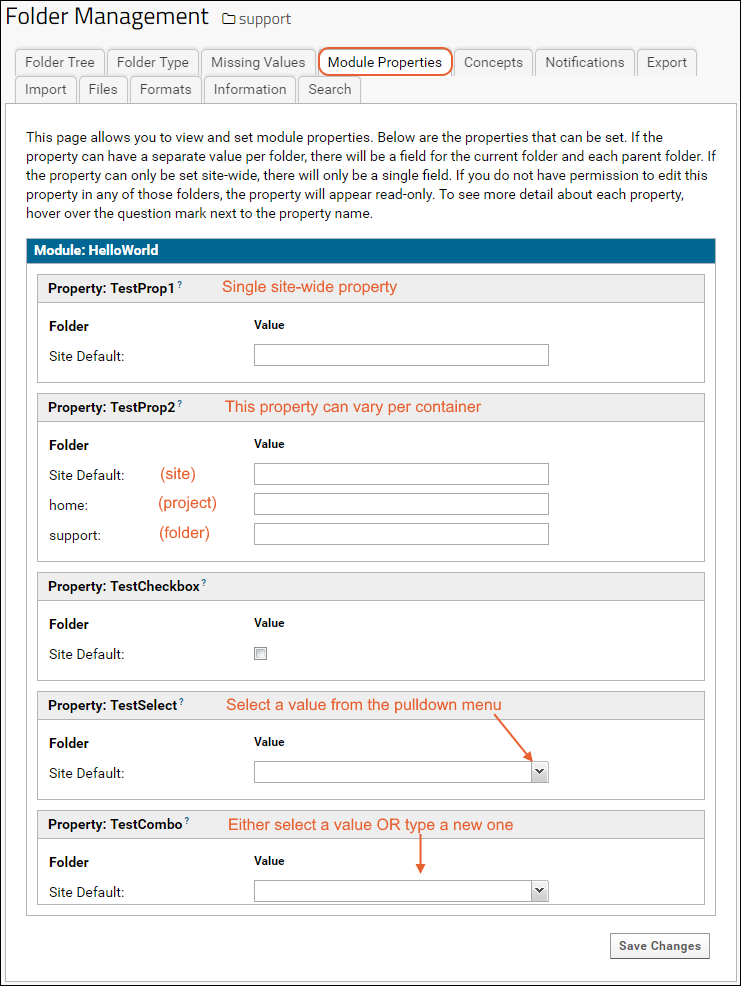

Module Properties

module.properties Reference

Common Development Tasks

Premium Resource: Content Security Policy Development Best Practices

Trigger Scripts

Script Pipeline: Running Scripts in Sequence

LabKey URLs

URL Actions

How To Find schemaName, queryName & viewName

LabKey/Rserve Setup Guide

Web Application Security

Cross-Site Request Forgery (CSRF) Protection

Premium Resource: Fetching CSRF Token

Premium Resource: Changes in JSONObject Behavior

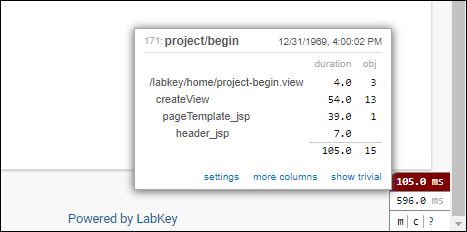

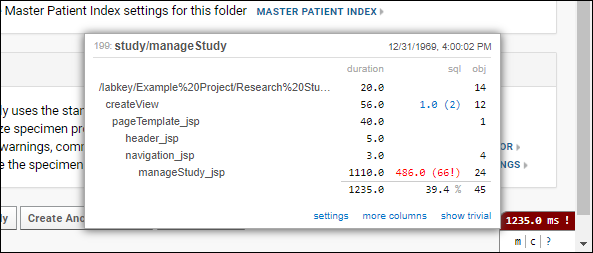

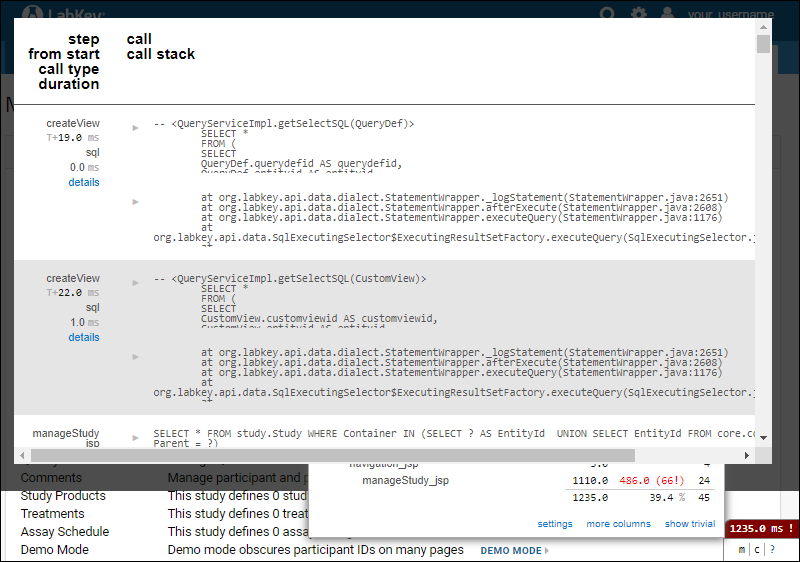

Profiler Settings

Using loginApi.api

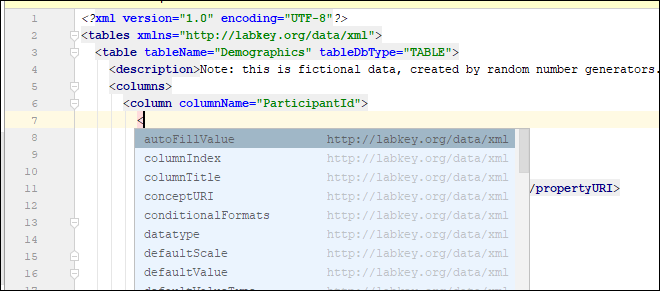

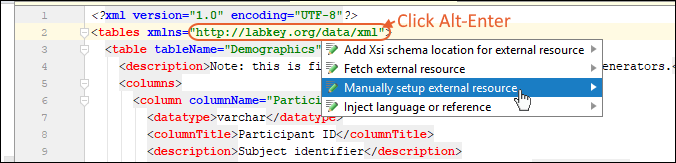

Use IntelliJ for XML File Editing

Premium Resource: Manual Index Creation

Premium Resource: LabKey Coding Standards and Practices

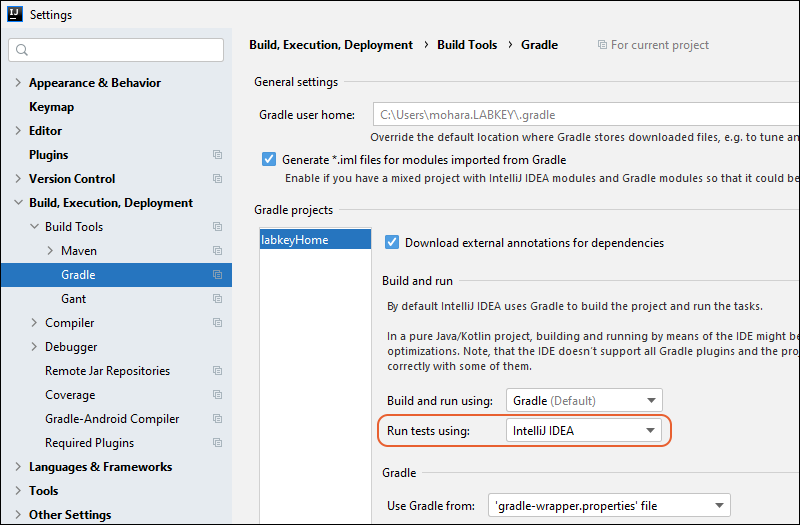

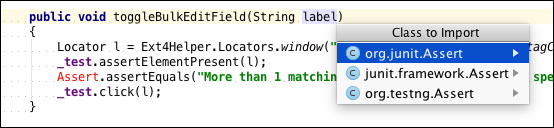

Premium Resource: Best Practices for Writing Automated Tests

Premium Resource: Server Encoding

Premium Resource: ReactJS Development Resources

Premium Resource: Feature Branch Workflow

Premium Resource: Develop with Git

Premium Resource: Git Branch Naming

Premium Resource: Issue Pull Request

LabKey Open Source Project

Release Schedule

Previous Releases

Previous Release Details

Branch Policy

Testing and Code Quality Workflow

Run Automated Tests

Tracking LabKey Issues

Security Issue Evaluation Policy

Submit Contributions

CSS Design Guidelines

Documentation Style Guide

Check In to the Source Project

Developer Reference

Administration

Tutorial: Security

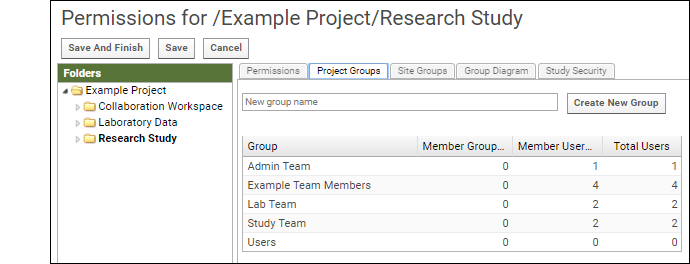

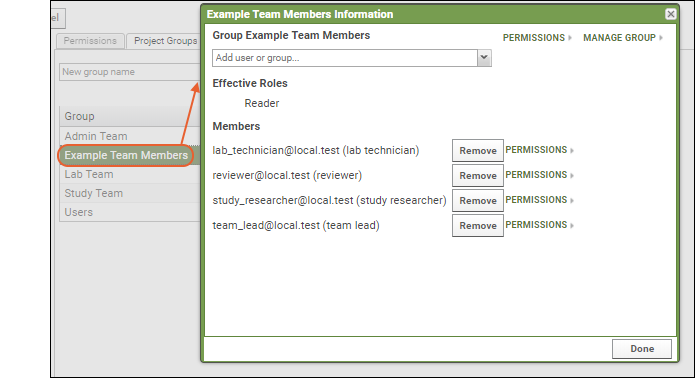

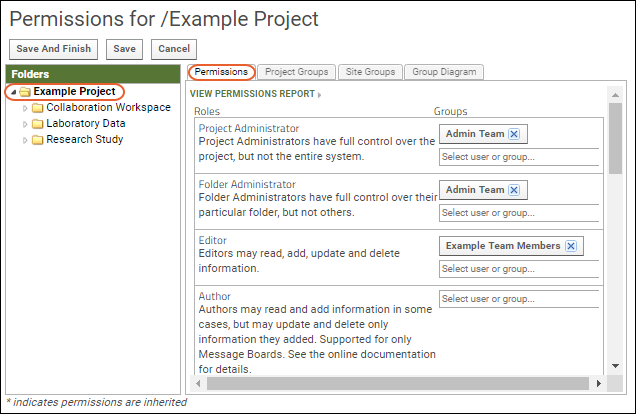

Step 1: Configure Permissions

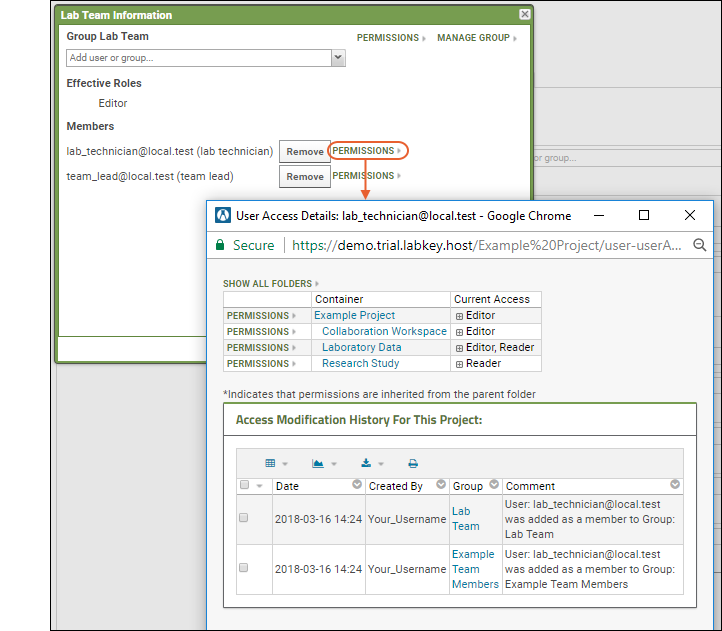

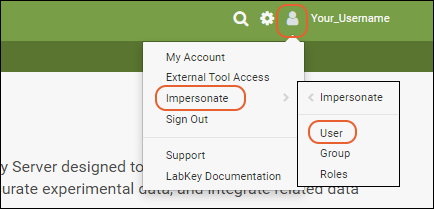

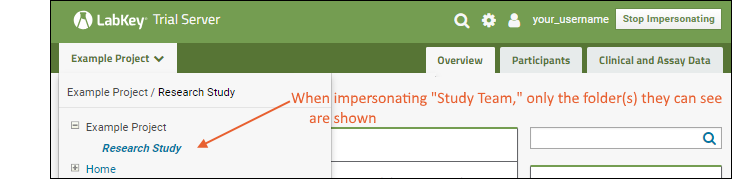

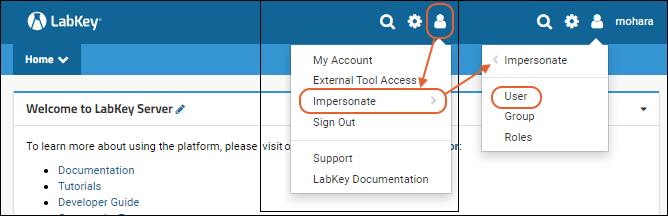

Step 2: Test Security with Impersonation

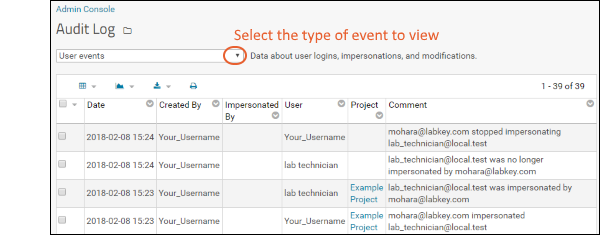

Step 3: Audit User Activity

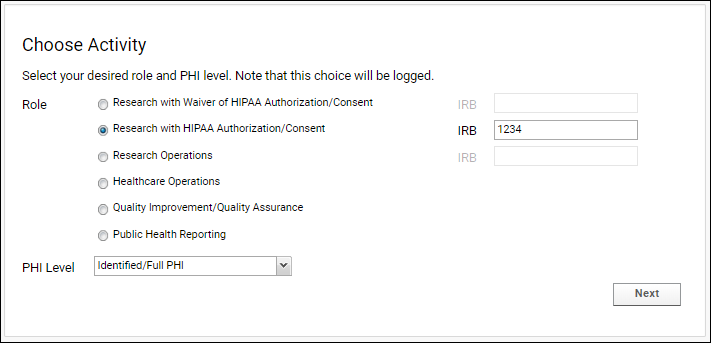

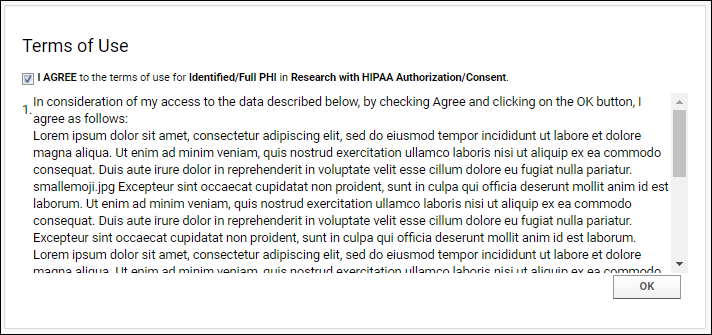

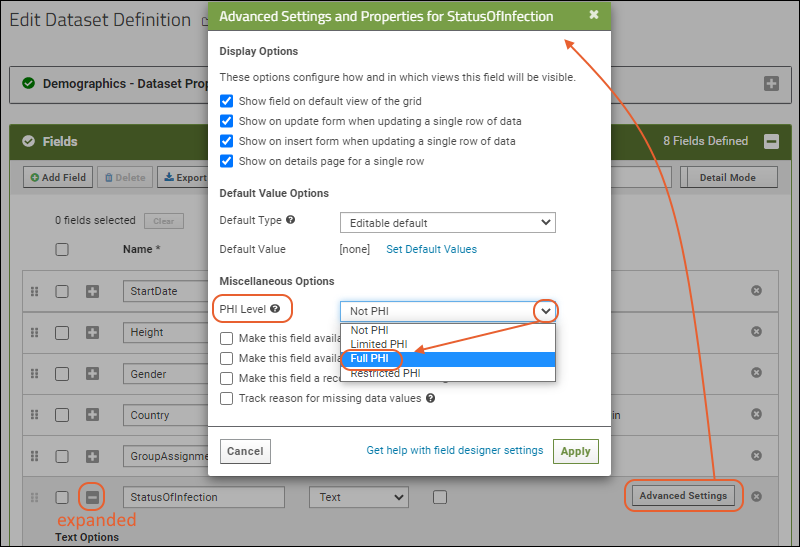

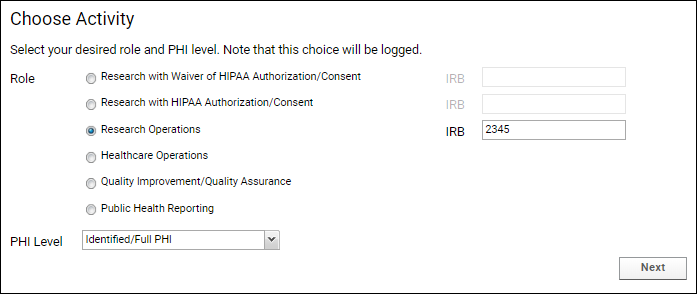

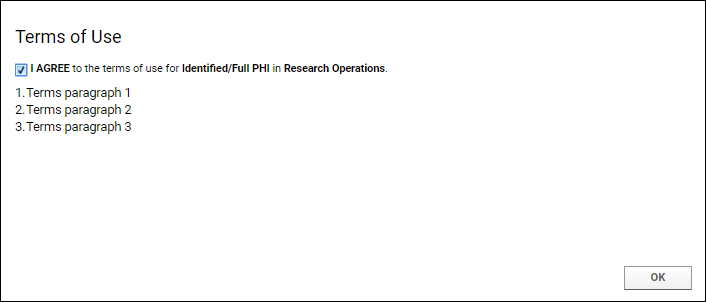

Step 4: Handle Protected Health Information (PHI)

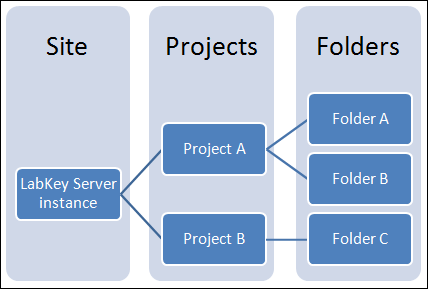

Projects and Folders

Project and Folder Basics

Site Structure: Best Practices

Folder Types

Project and Folder Settings

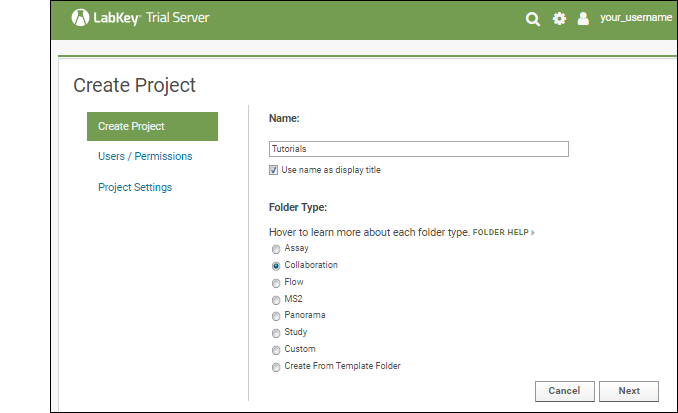

Create a Project or Folder

Manage Projects and Folders

Enable a Module in a Folder

Export / Import a Folder

Export and Import Permission Settings

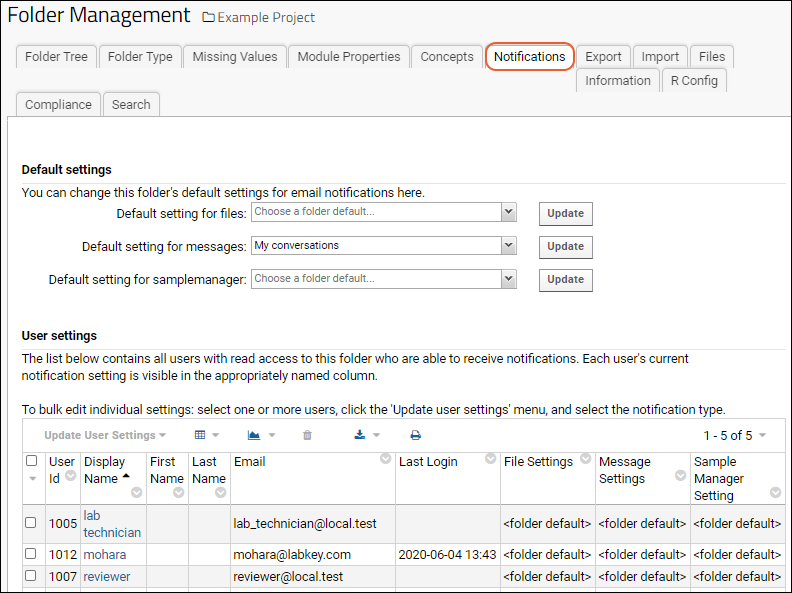

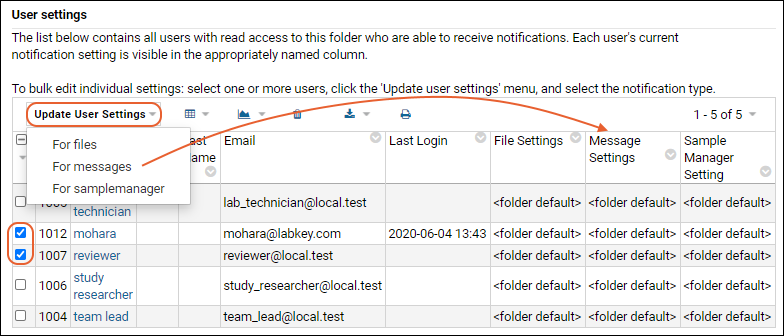

Manage Email Notifications

Establish Terms of Use

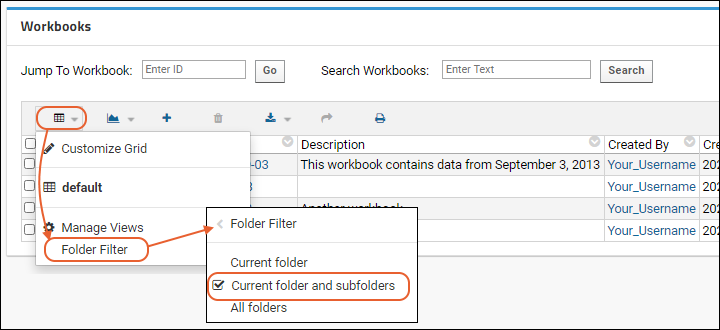

Workbooks

Shared Project

Build User Interface

Premium Resource: Custom Home Page Examples

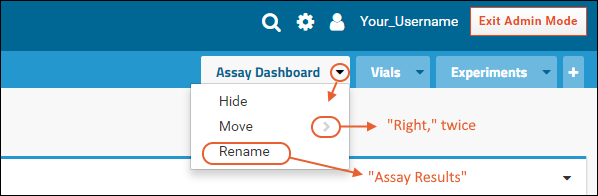

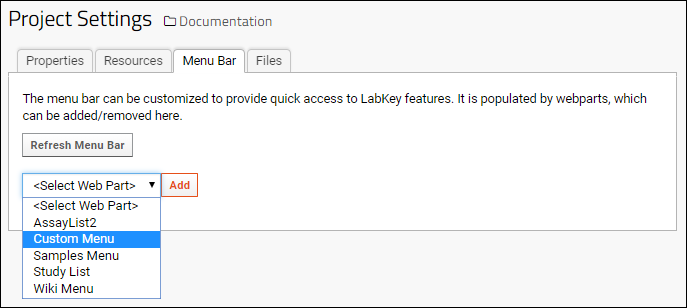

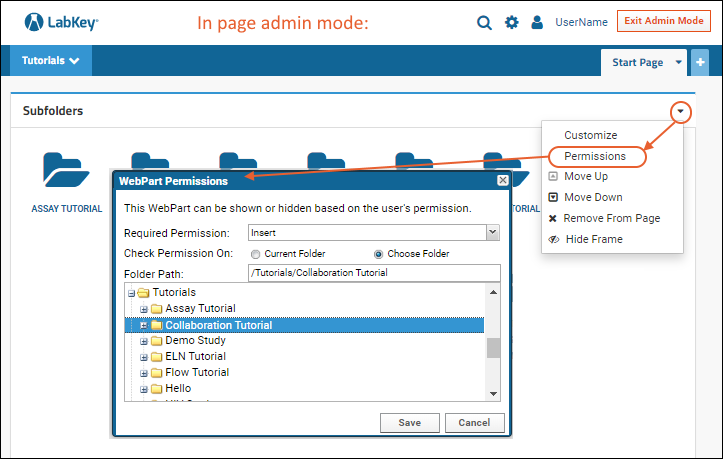

Page Admin Mode

Add Web Parts

Manage Web Parts

Web Part Inventory

Use Tabs

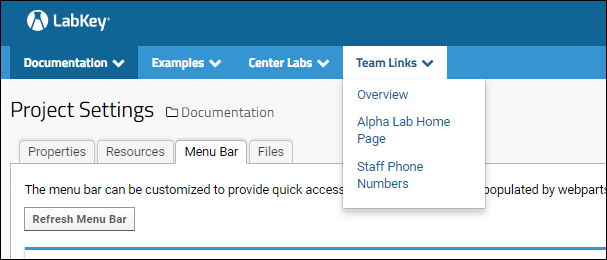

Add Custom Menus

Web Parts: Permissions Required to View

Security

Best Practices for System Security

Configure Permissions

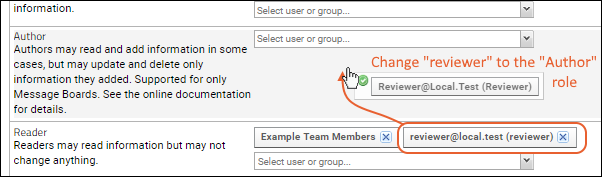

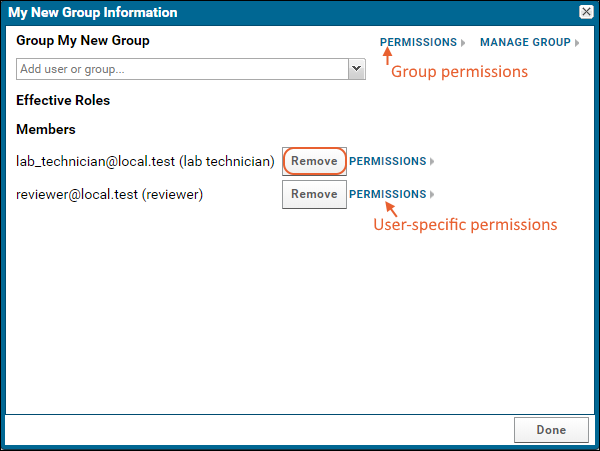

Security Groups

Global Groups

Site Groups

Project Groups

Guests / Anonymous Users

Security Roles Reference

Role/Permissions Matrix

Administrator Permissions Matrix

Matrix of Report, Chart, and Grid Permissions

Privileged Roles

Developer Roles

Storage Roles

Premium Resource: Add a Custom Security Role

User Accounts

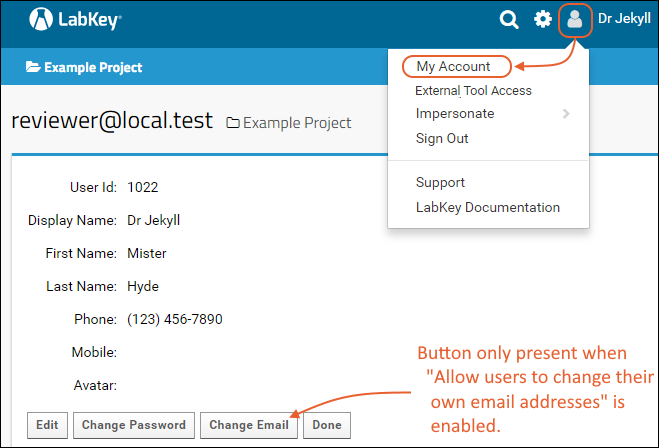

My Account

Add Users

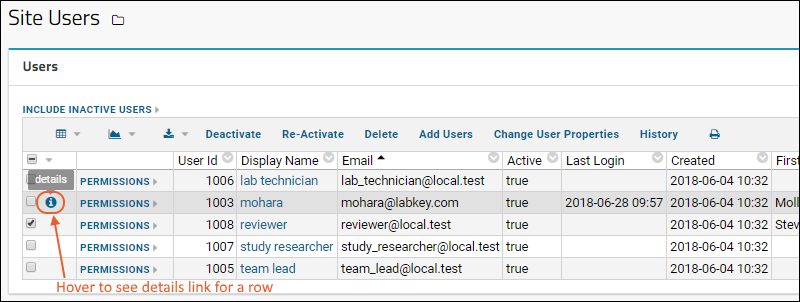

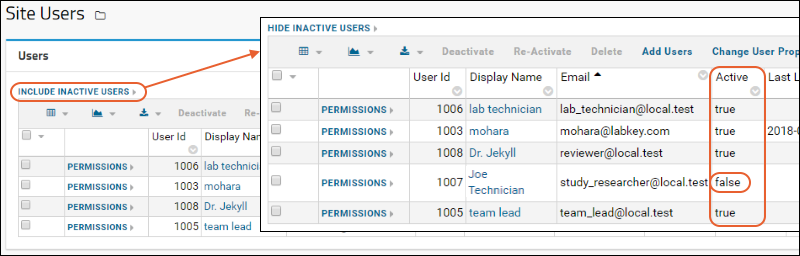

Manage Users

Manage Project Users

Premium Resource: Limit Active Users

Authentication

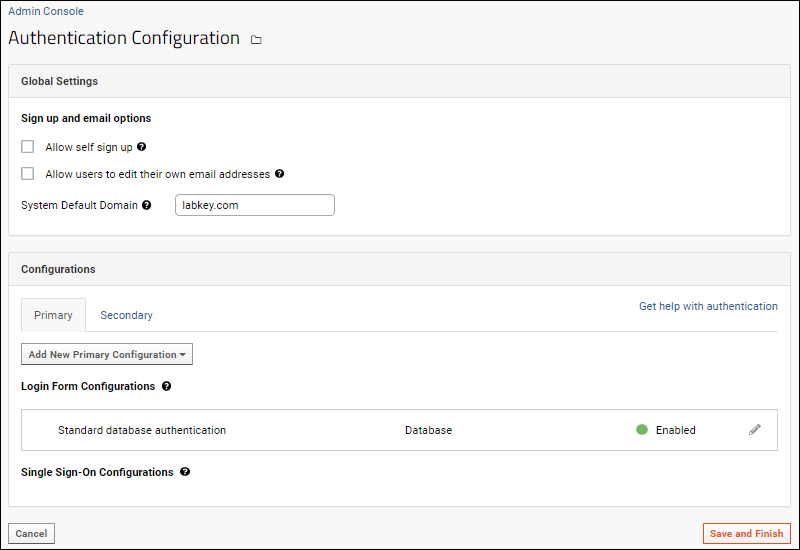

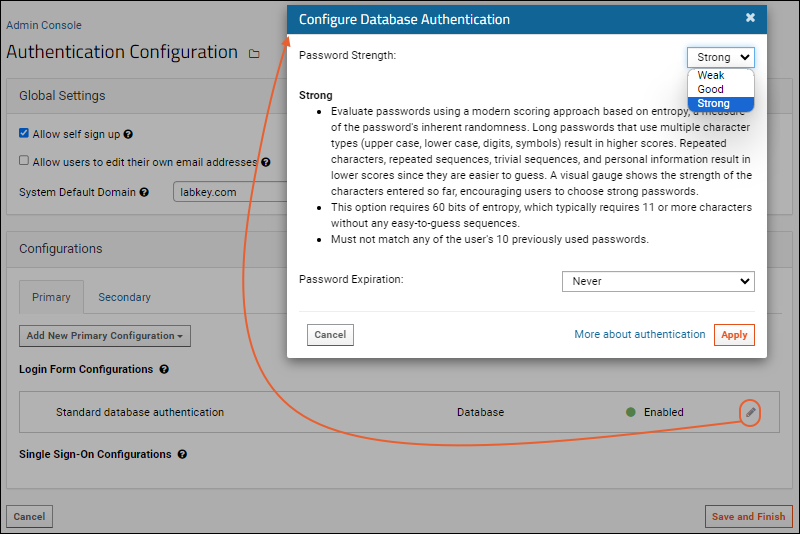

Configure Database Authentication

Passwords

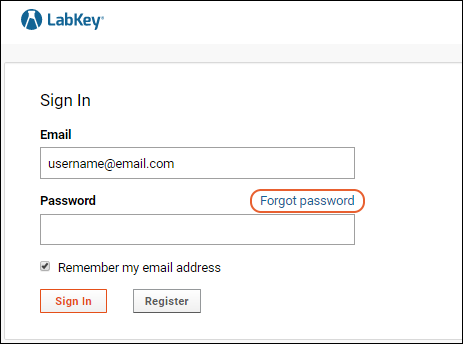

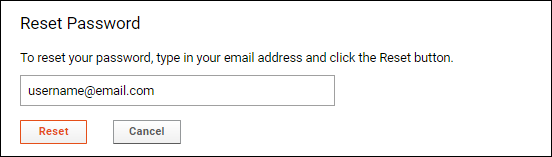

Password Reset

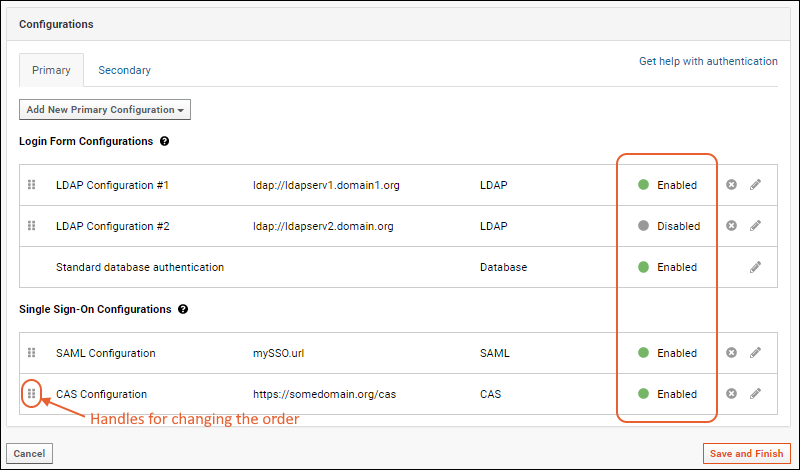

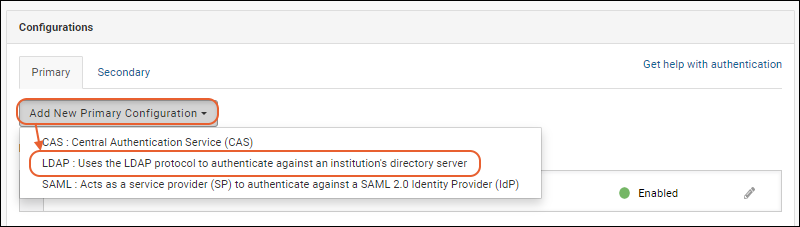

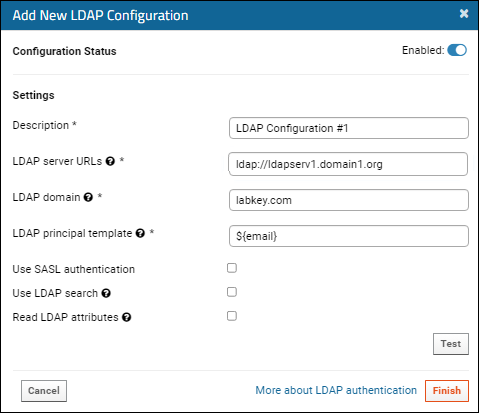

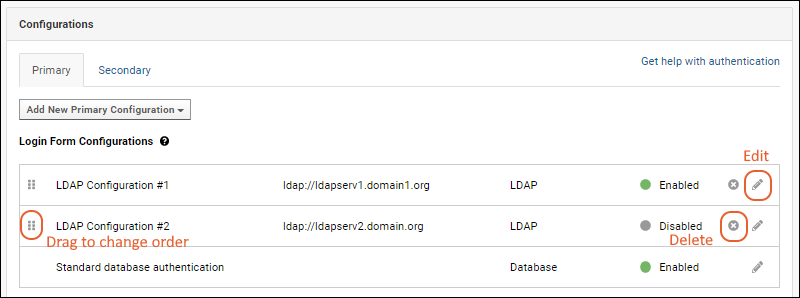

Configure LDAP Authentication

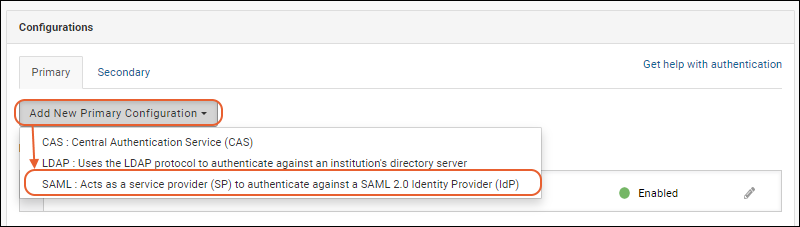

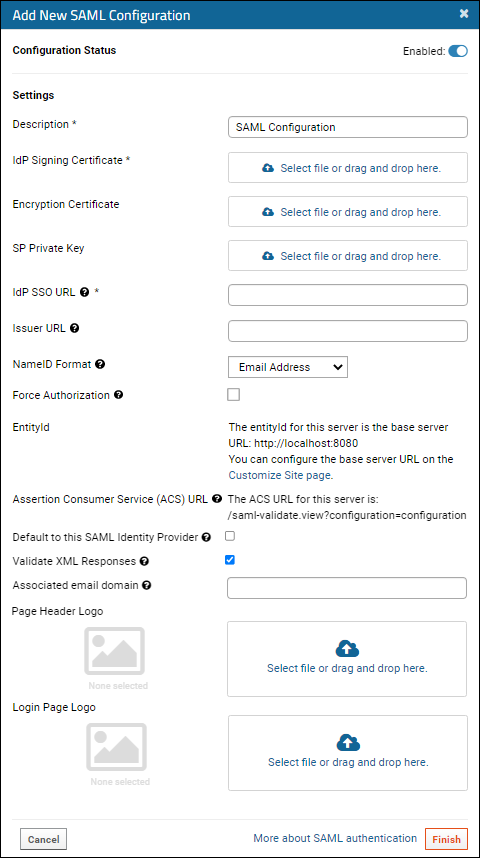

Configure SAML Authentication

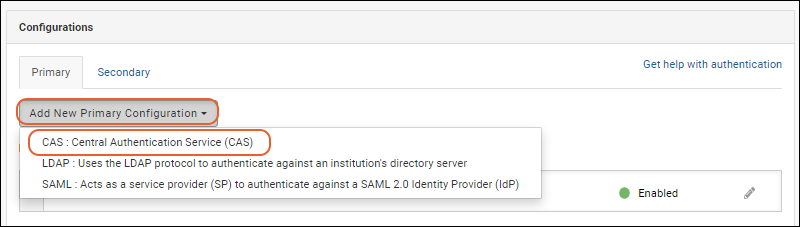

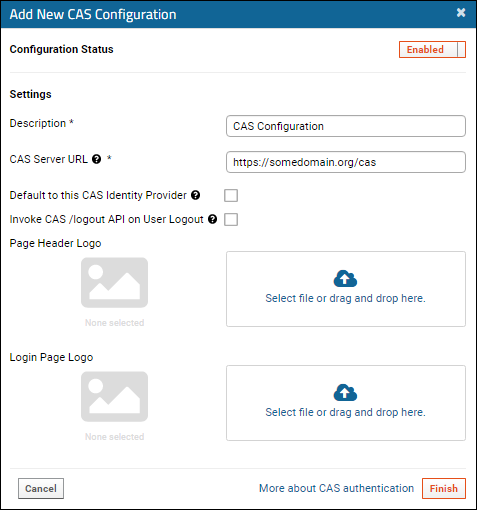

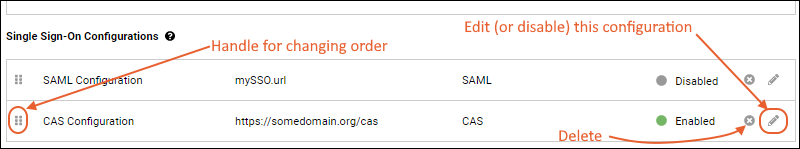

Configure CAS Authentication

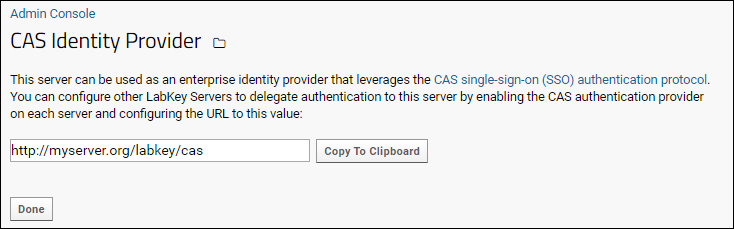

Configure CAS Identity Provider

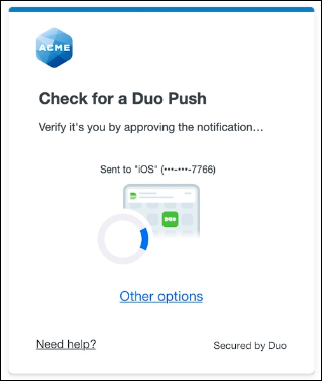

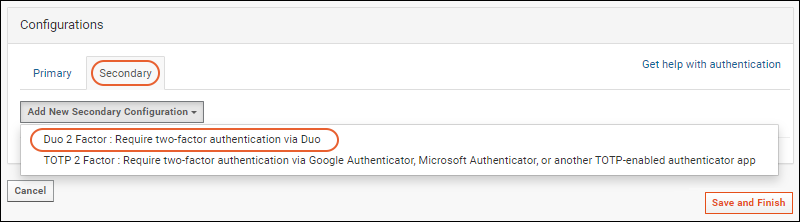

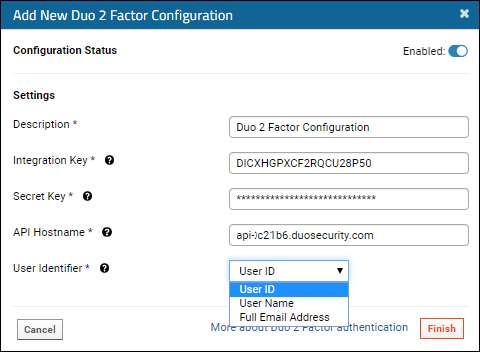

Configure Duo Two-Factor Authentication

Configure TOTP Two-Factor Authentication

Create a netrc file

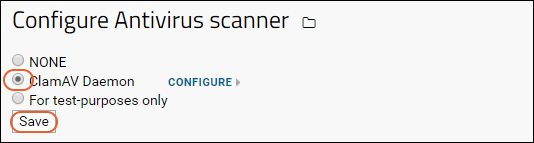

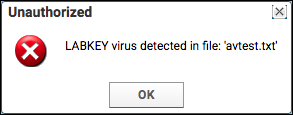

Virus Checking

Test Security Settings by Impersonation

Premium Resource: Best Practices for Security Scanning

Compliance

Compliance: Overview

Compliance: Checklist

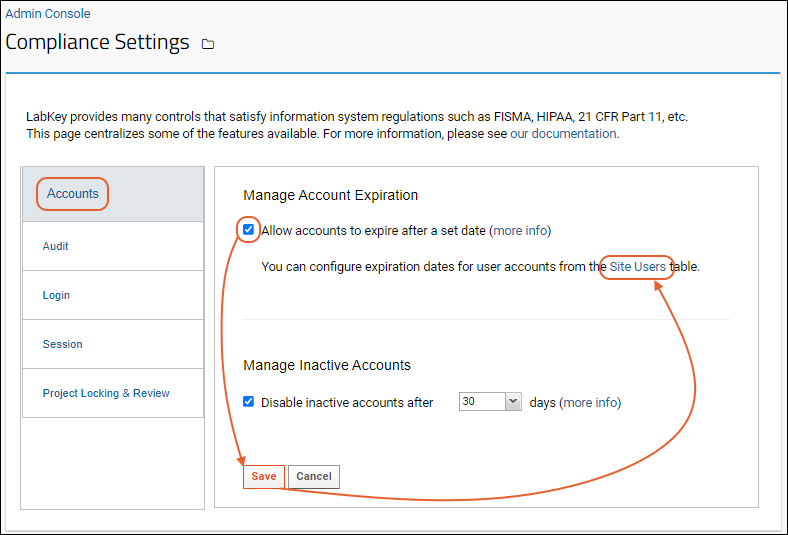

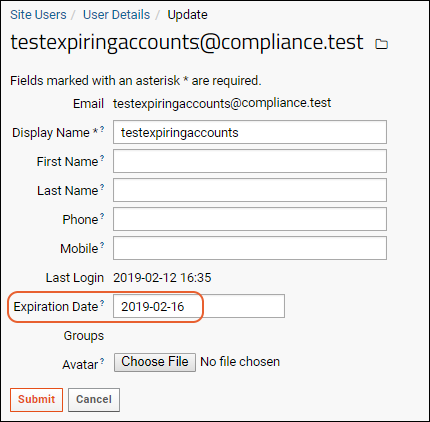

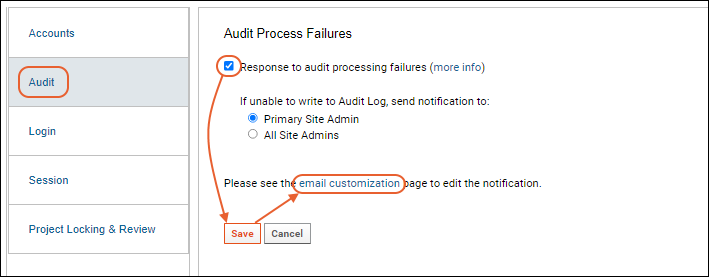

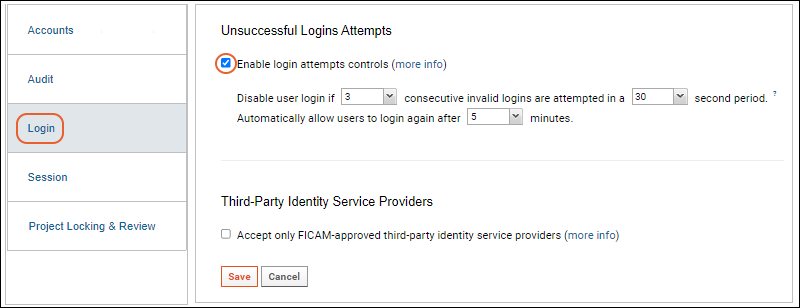

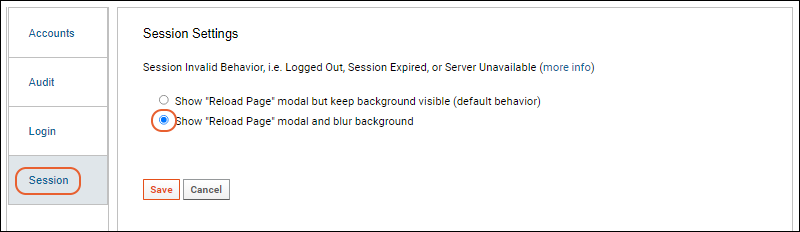

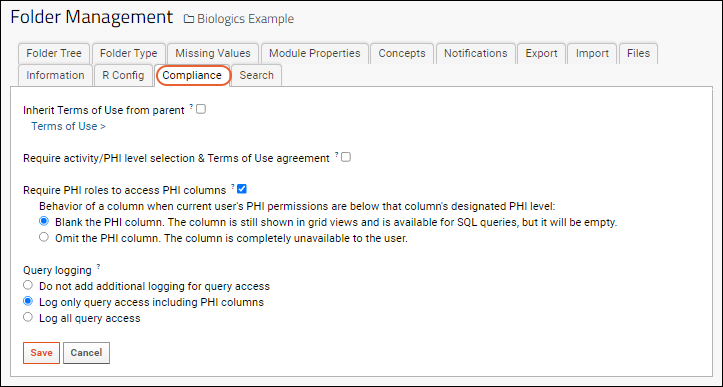

Compliance: Settings

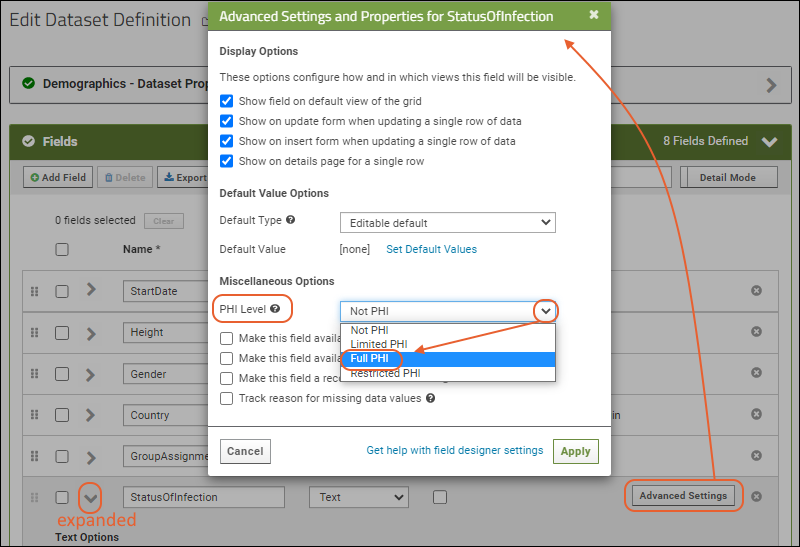

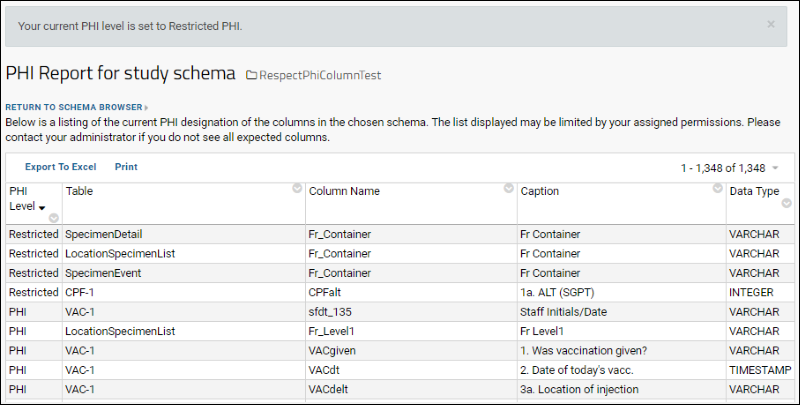

Compliance: Setting PHI Levels on Fields

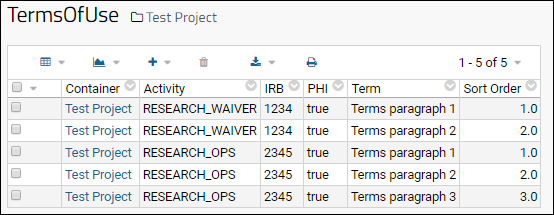

Compliance: Terms of Use

Compliance: Security Roles

Compliance: Configure PHI Data Handling

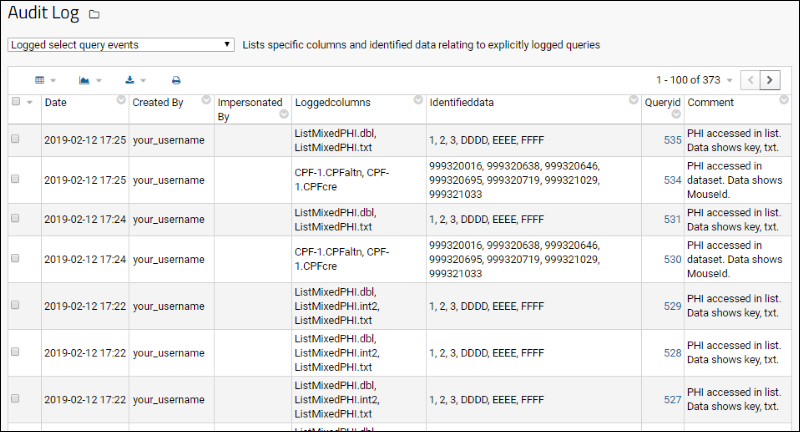

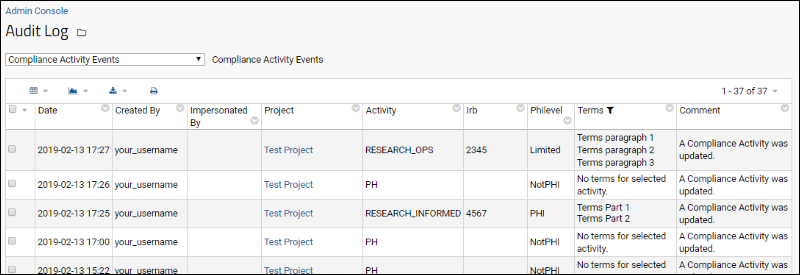

Compliance: Logging

Compliance: PHI Report

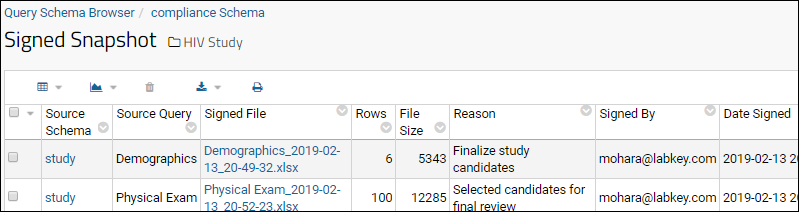

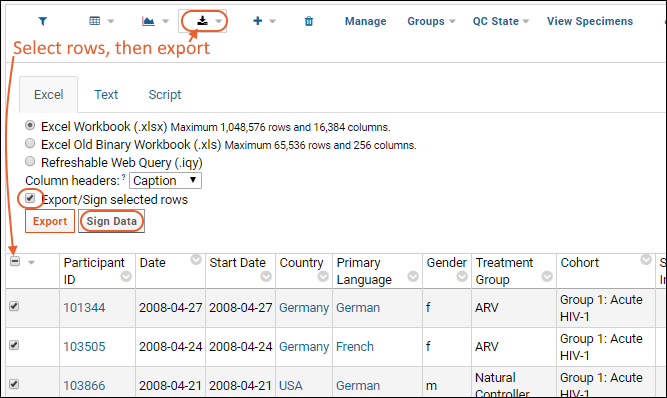

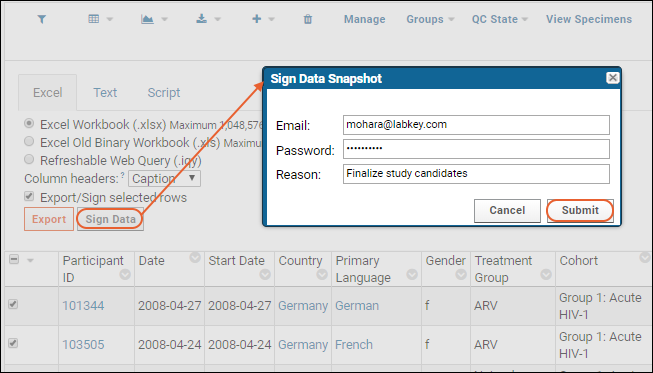

Electronic Signatures / Sign Data

GDPR Compliance

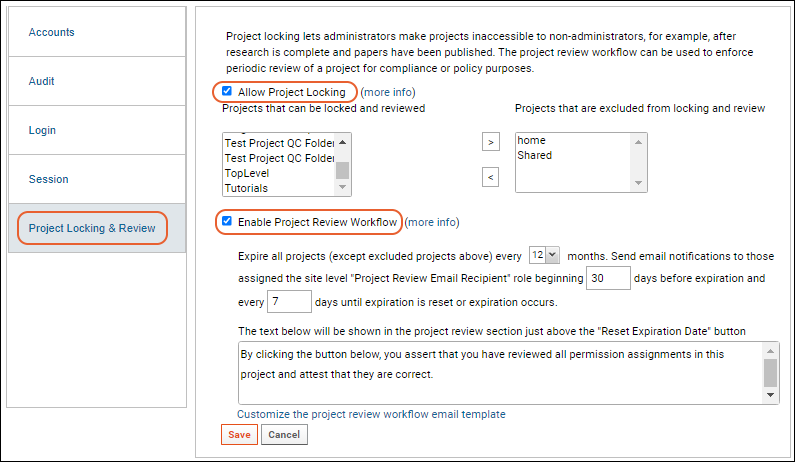

Project Locking and Review Workflow

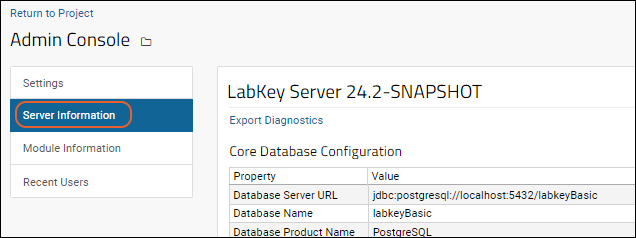

Admin Console

Site Settings

Usage/Exception Reporting

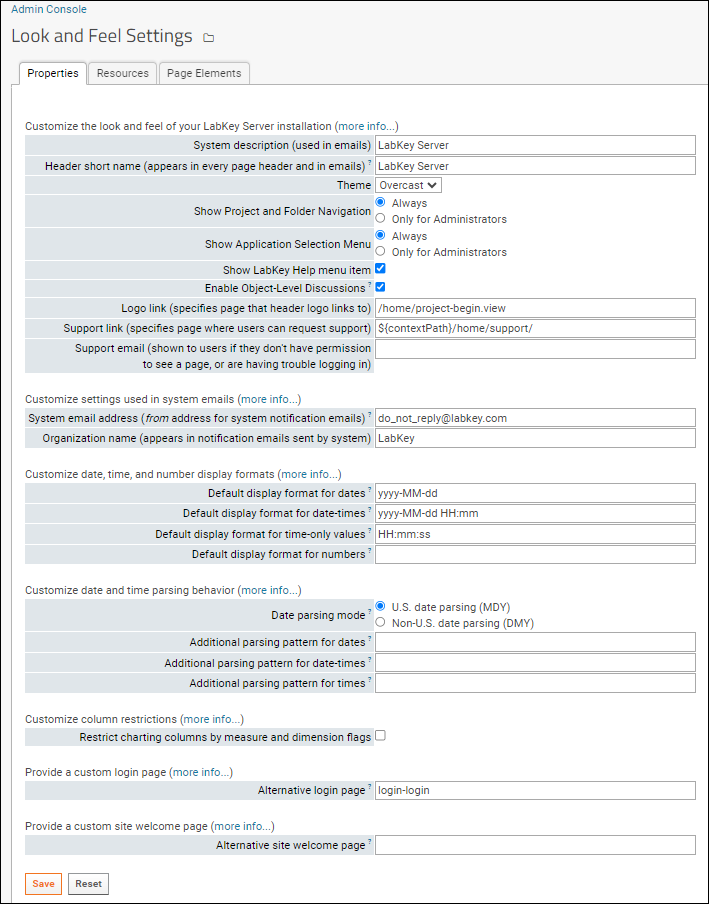

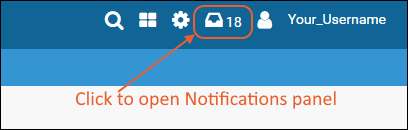

Look and Feel Settings

Page Elements

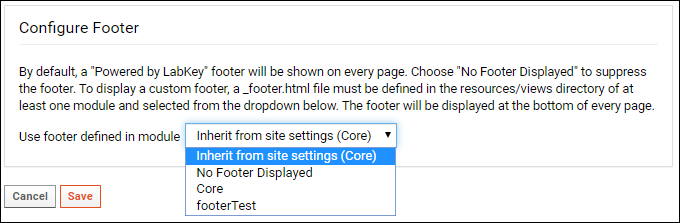

Web Site Theme

Email Template Customization

Optional, Deprecated, or Experimental Features

Manage Missing Value Indicators / Out of Range Values

Short URLs

System Maintenance

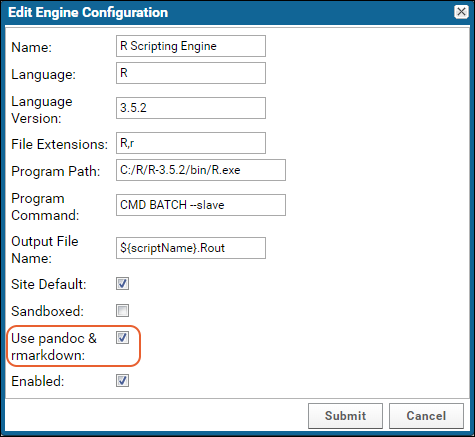

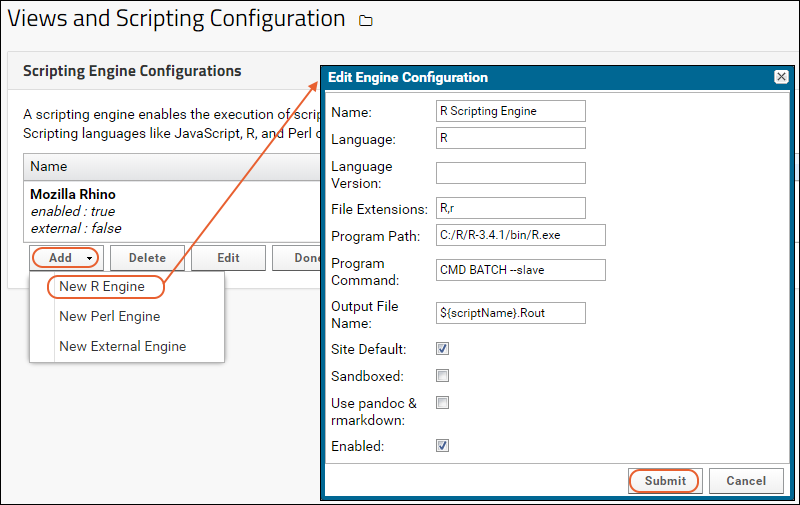

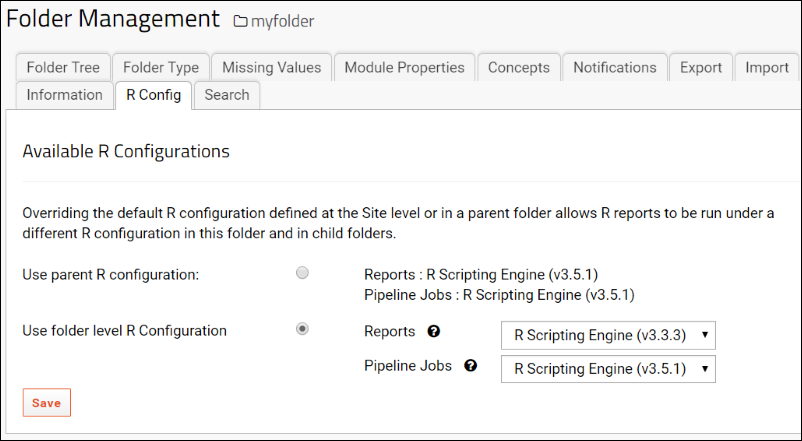

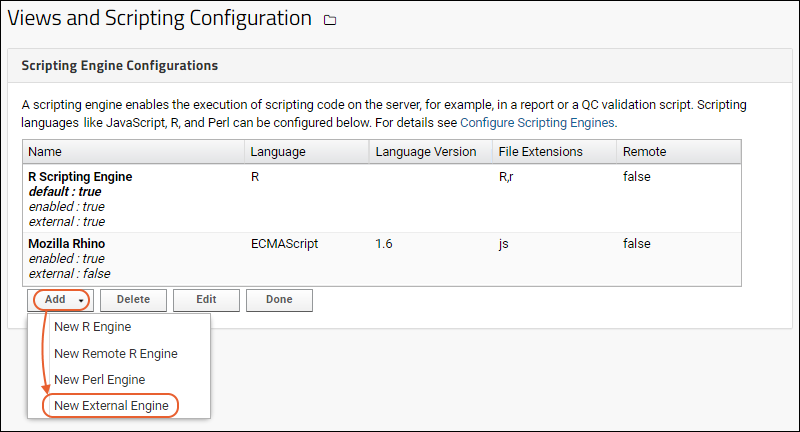

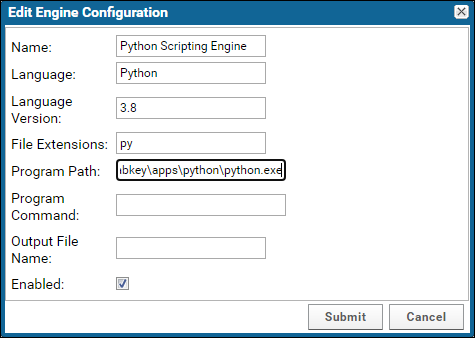

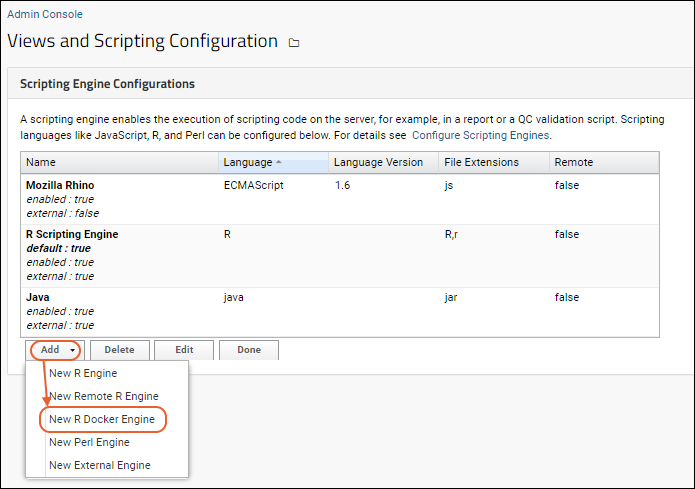

Configure Scripting Engines

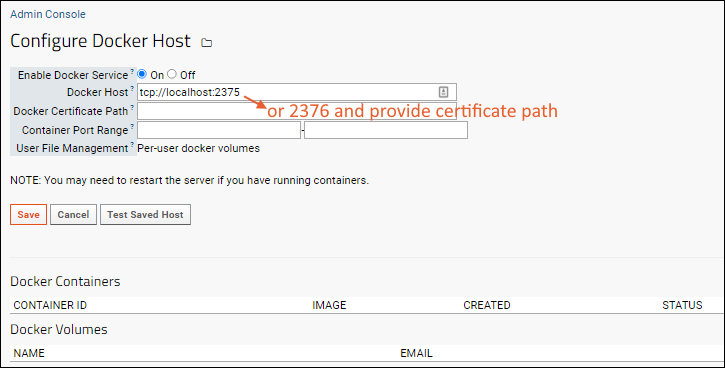

Configure Docker Host

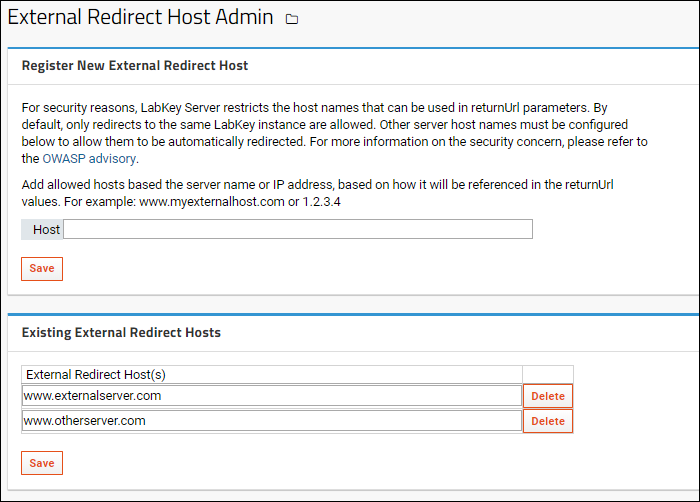

External Hosts

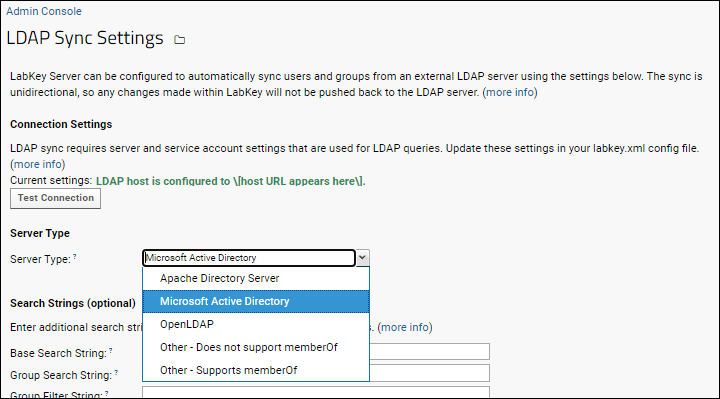

LDAP User/Group Synchronization

Proxy Servlets

Premium Resource: Plotly Dash Demo

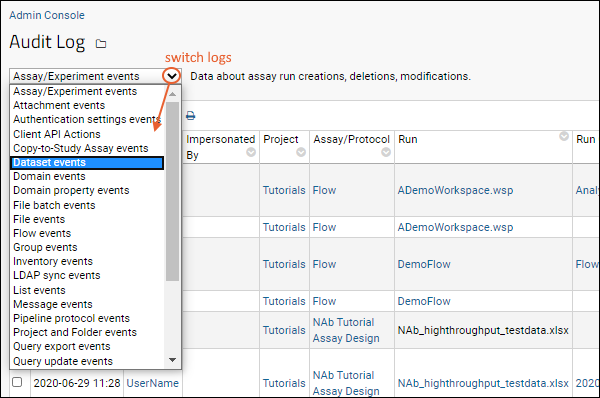

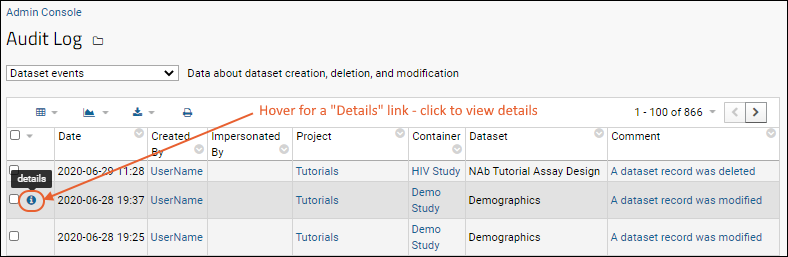

Audit Log / Audit Site Activity

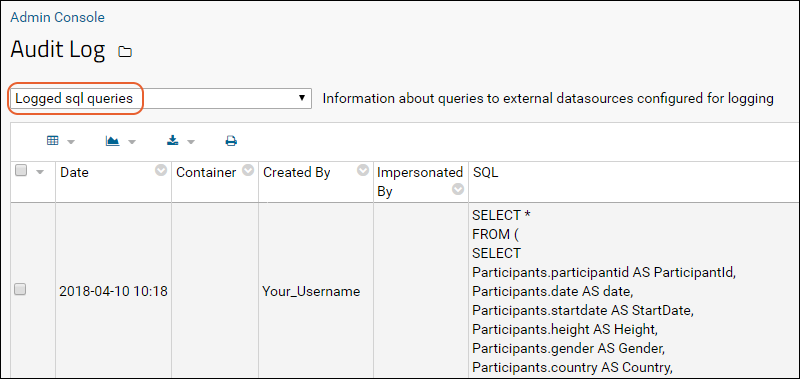

SQL Query Logging

Site HTTP Access Logs

Audit Log Maintenance

Export Diagnostic Information

Actions Diagnostics

Cache Statistics

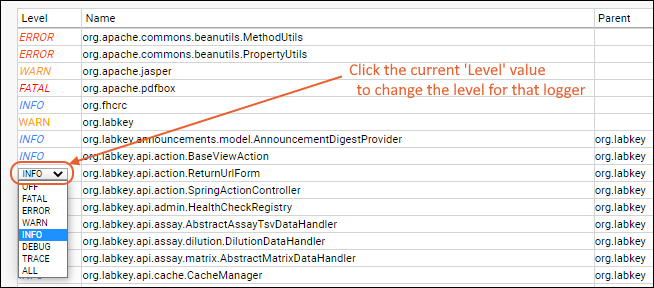

Loggers

Memory Usage

Query Performance

Site/Container Validation

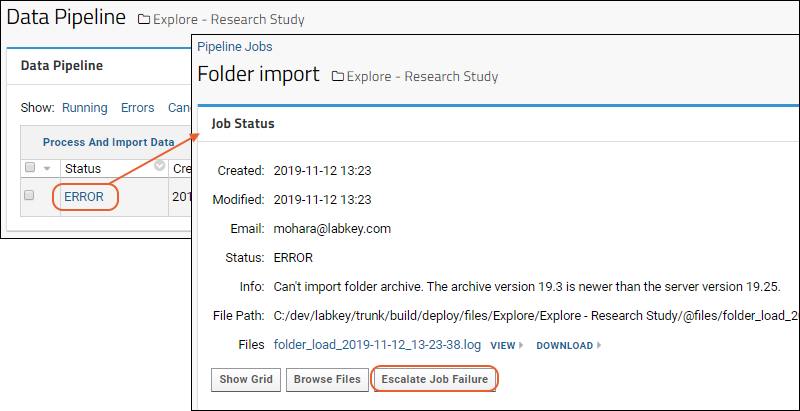

Data Processing Pipeline

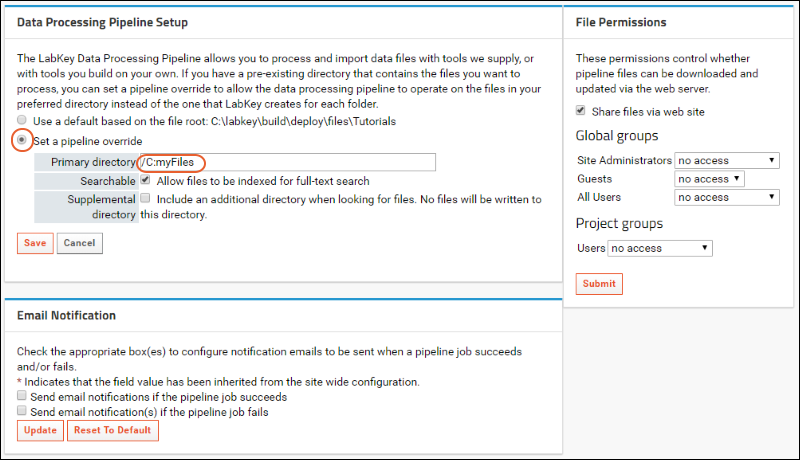

Set a Pipeline Override

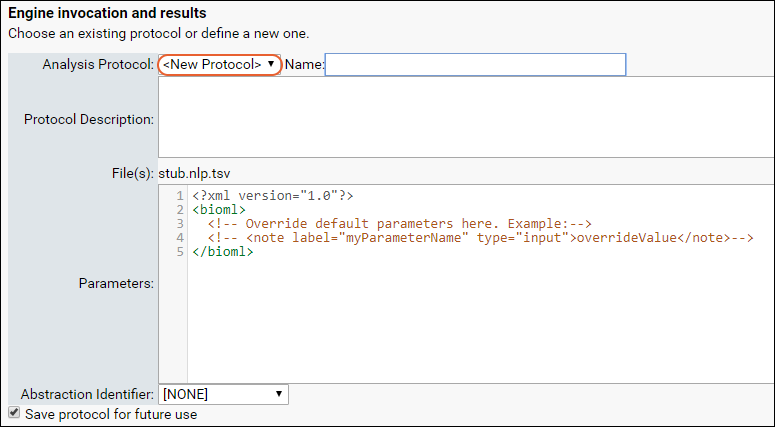

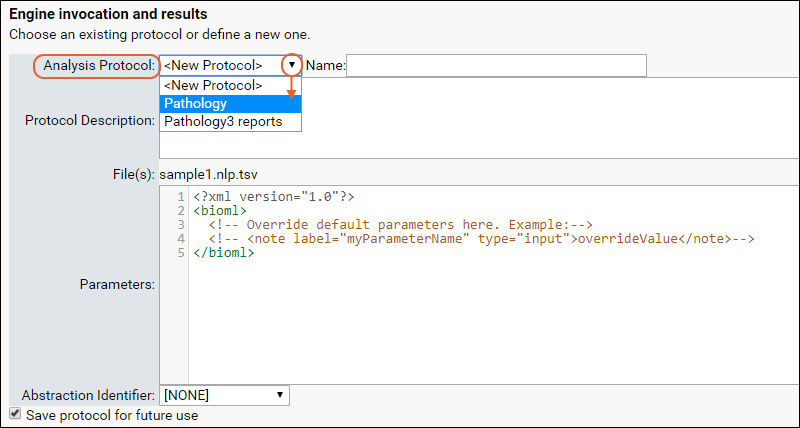

Pipeline Protocols

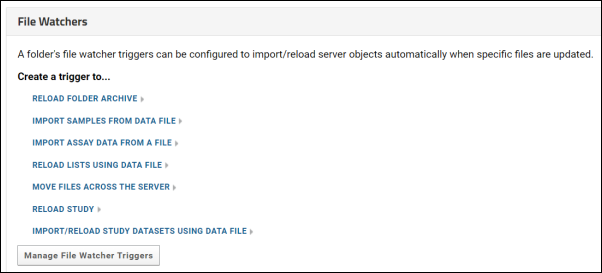

File Watchers

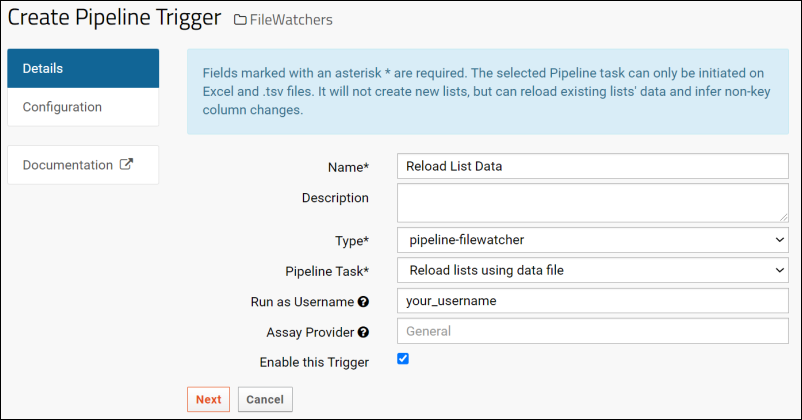

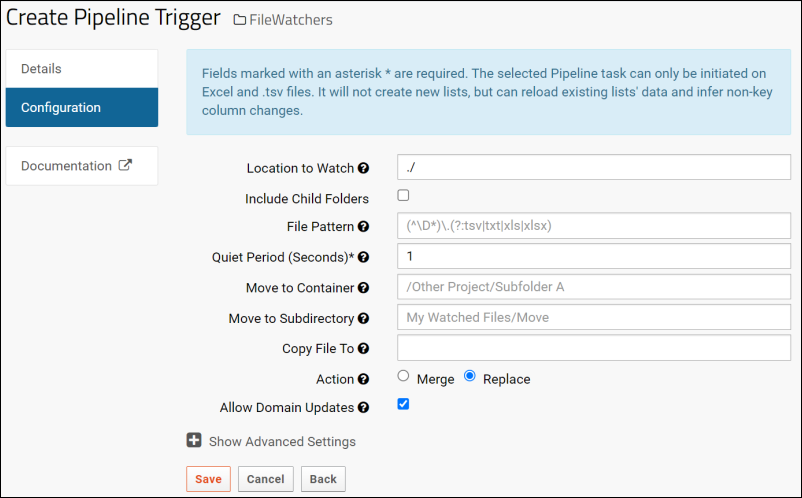

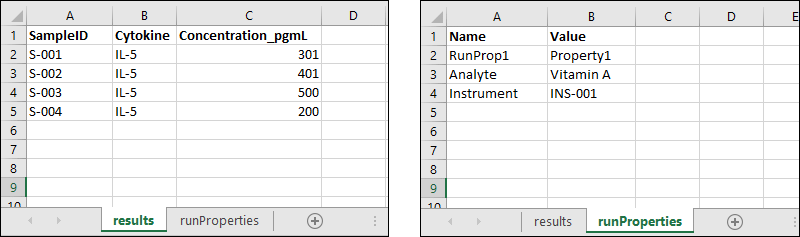

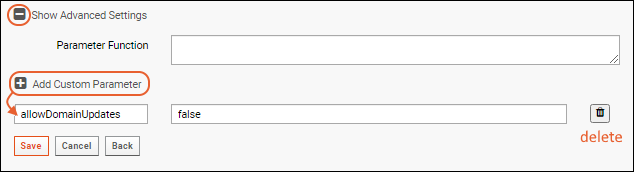

Create a File Watcher

File Watcher Tasks

File Watchers for Script Pipelines

File Watcher: File Name Patterns

File Watcher Examples

Premium Resource: Run Pipelines in Parallel

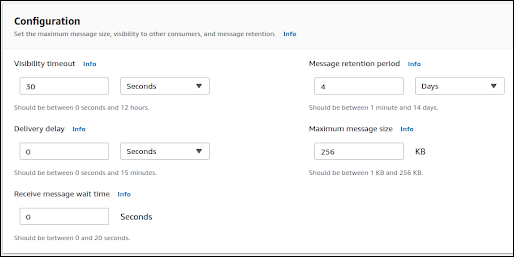

Enterprise Pipeline with ActiveMQ

ActiveMQ JMS Queue

Configure the Pipeline with ActiveMQ

Configure Remote Pipeline Server

Troubleshoot the Enterprise Pipeline

Install LabKey

Supported Technologies

Install on Linux

Set Application Properties

Premium Resource: Install on Windows

Common Install Tasks

Service File Customizations

Use HTTPS with LabKey

SMTP Configuration

Install and Set Up R

Configure an R Docker Engine

Control Startup Behavior

Server Startup Properties

ExtraWebapp Resources

Sending Email from Non-LabKey Domains

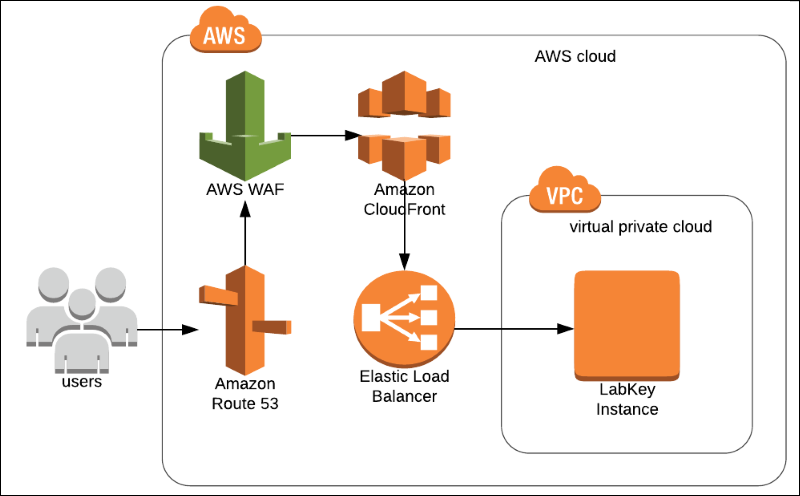

Deploying an AWS Web Application Firewall

Install Third Party Components

Troubleshoot Installation and Configuration

Troubleshoot: Error Messages

Collect Debugging Information

Example Hardware/Software Configurations

Premium Resource: Reference Architecture / System Requirements

LabKey Modules

Upgrade LabKey

Upgrade on Linux

Upgrade on Windows

Premium Resource: Upgrade JDK on AWS Ubuntu Servers

LabKey Releases and Upgrade Support Policy

External Schemas and Data Sources

External PostgreSQL Data Sources

External Microsoft SQL Server Data Sources

External MySQL Data Sources

External Oracle Data Sources

External SAS/SHARE Data Sources

External Redshift Data Sources

External Snowflake Data Sources

Premium Feature: Use Microsoft SQL Server

GROUP_CONCAT Install

PremiumStats Install

ETL: Stored Procedures in MS SQL Server

Backup and Maintenance

Backup Guidelines

An Example Backup Plan

Example Scripts for Backup Scenarios

Restore from Backup

Premium Resource: Change the Encryption Key

Use a Staging Server

Troubleshoot LabKey Server

Sample Manager

Use Sample Manager with LabKey Server

Use Sample Manager with Studies

LabKey ELN

ELN: Frequently Asked Questions

LabKey LIMS

LIMS: Downloadable Templates

LIMS: Samples

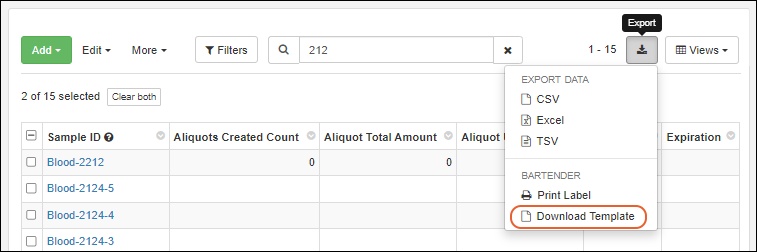

Print Labels with BarTender

LIMS: Assay Data

LIMS: Charts

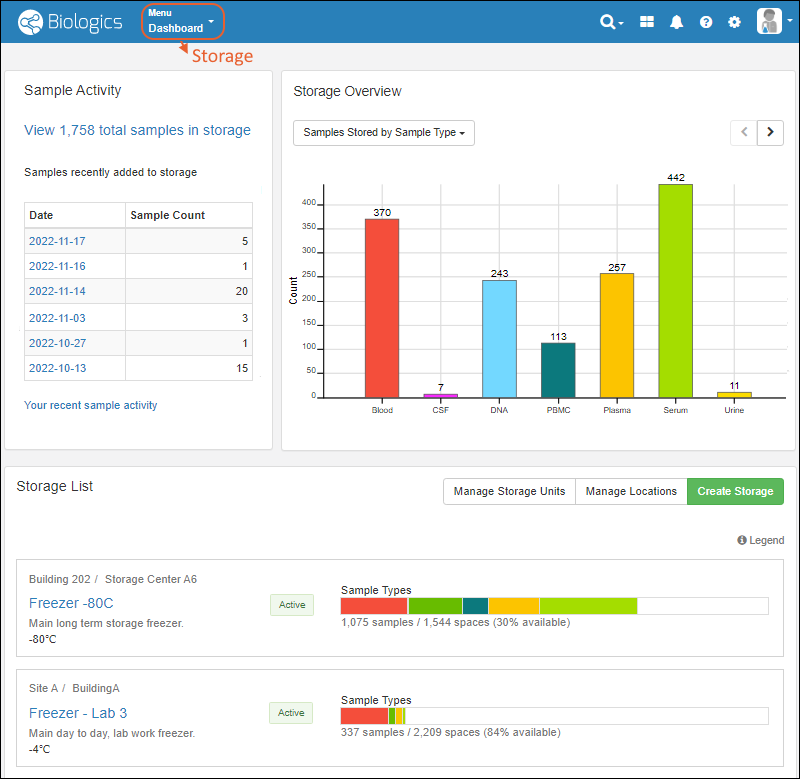

LIMS: Storage Management

LIMS: Workflow

Biologics LIMS

Introduction to LabKey Biologics

Release Notes: Biologics

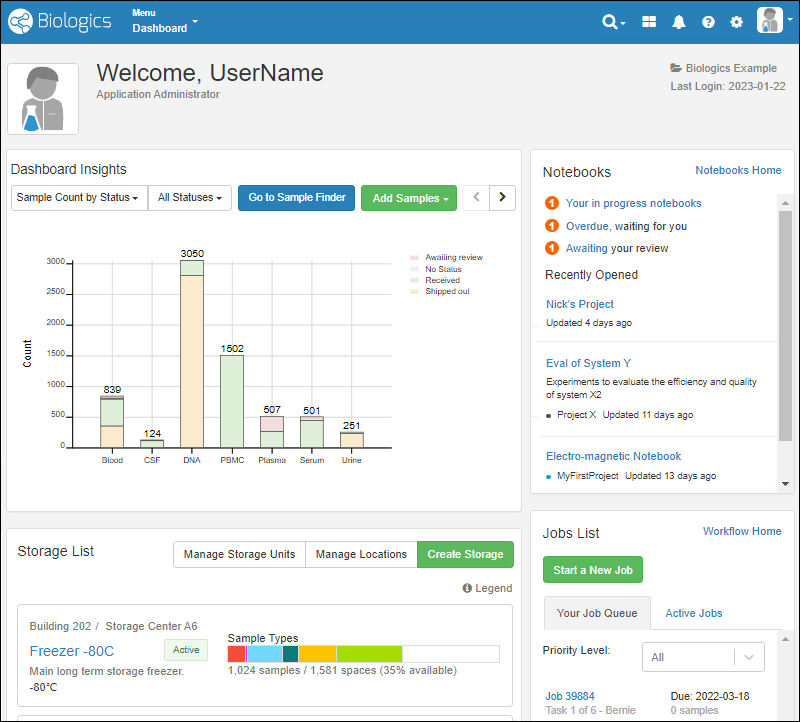

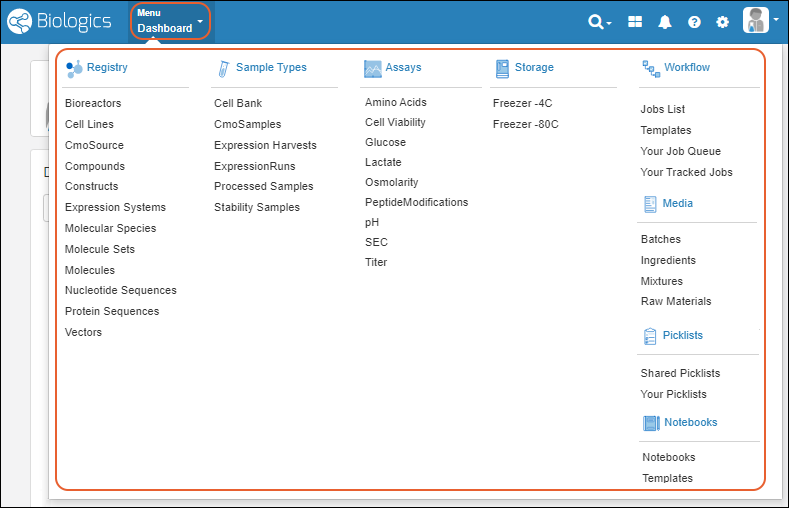

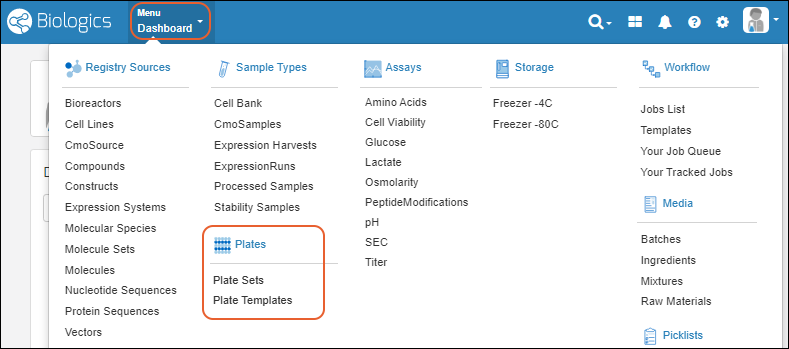

Biologics: Navigate

Biologics: Projects and Folders

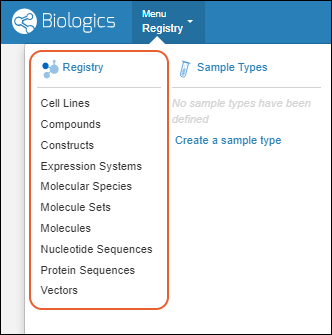

Biologics: Bioregistry

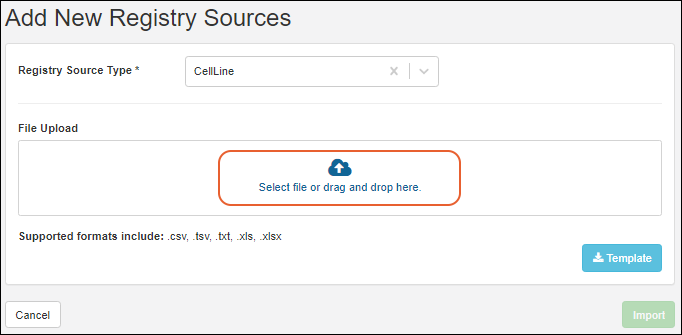

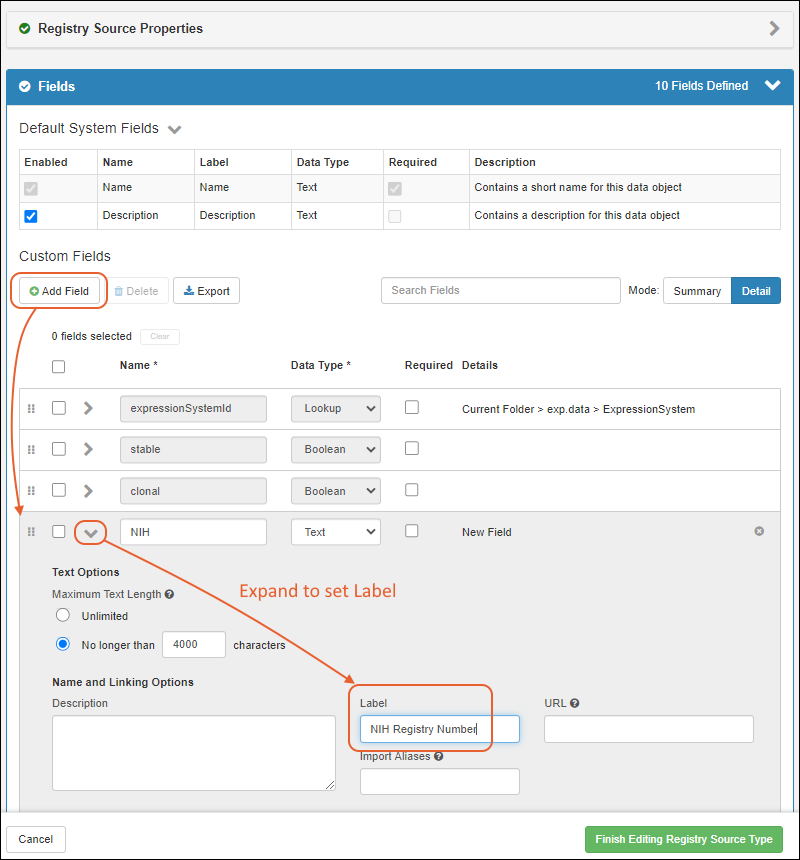

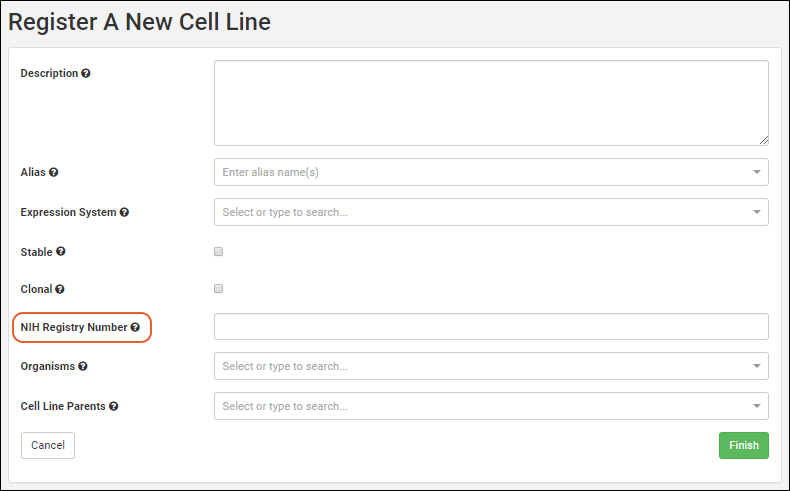

Create Registry Sources

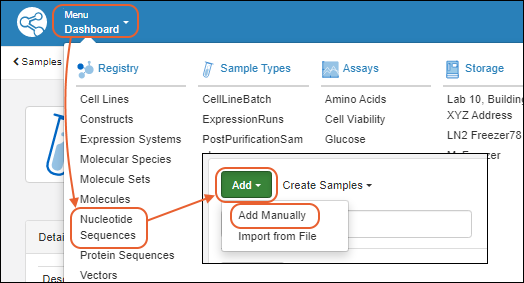

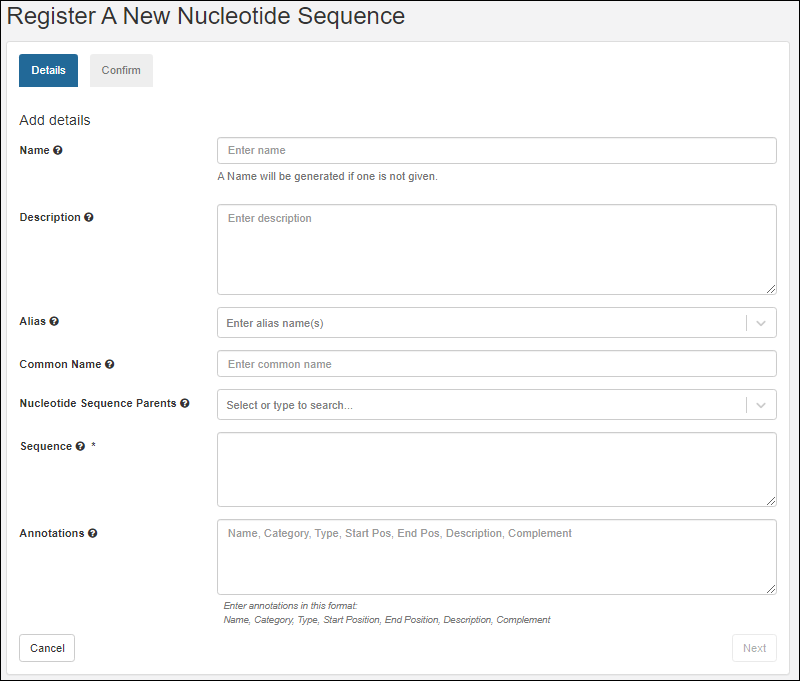

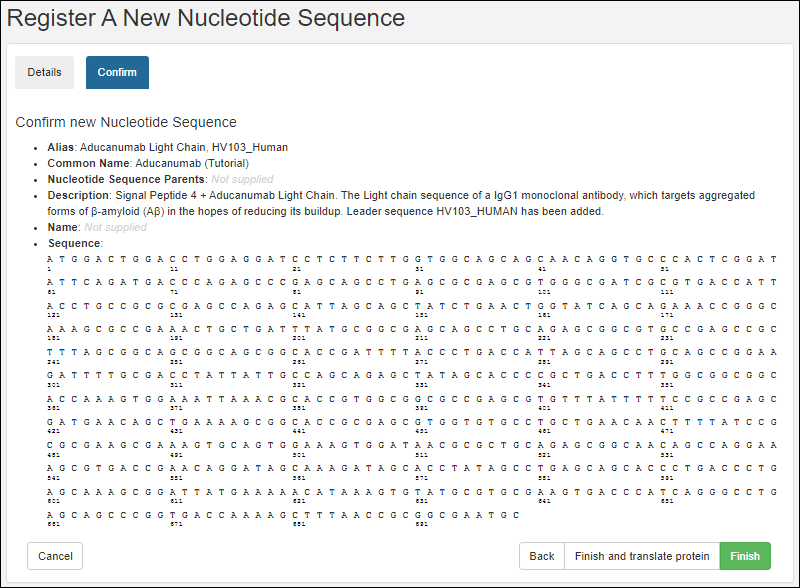

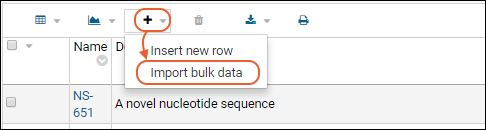

Register Nucleotide Sequences

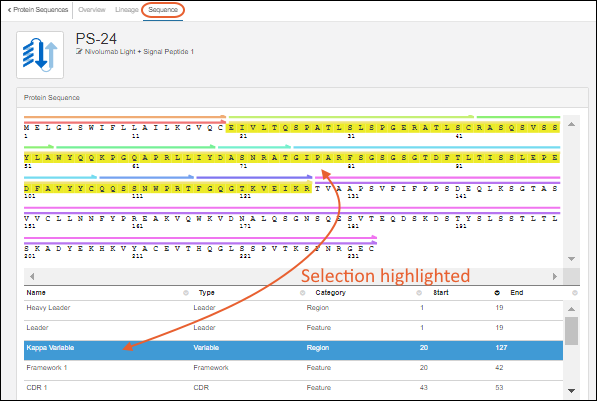

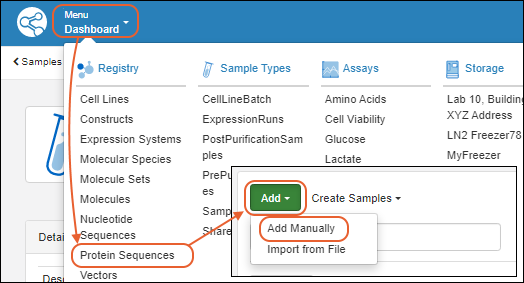

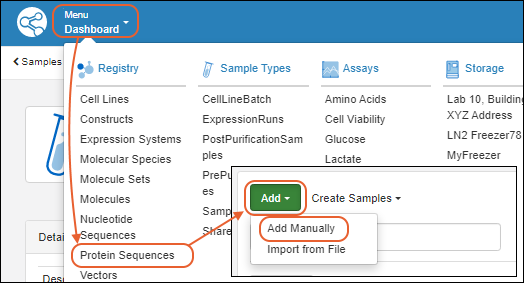

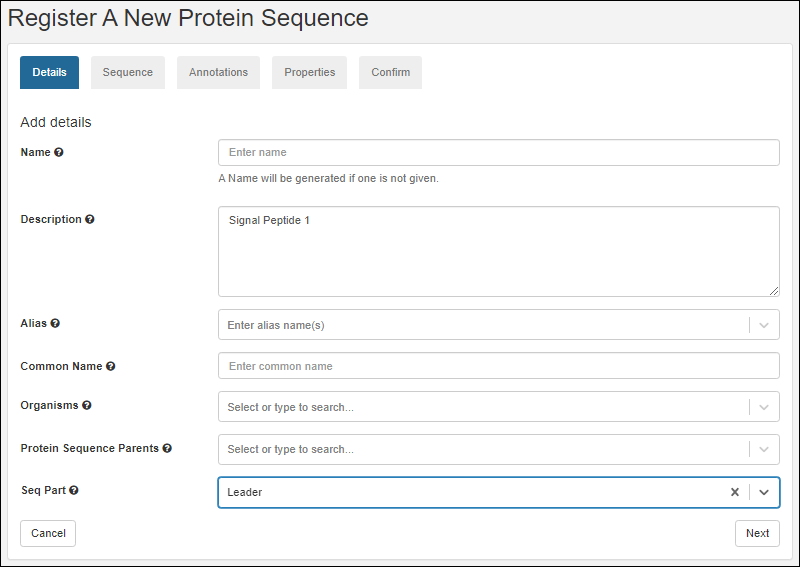

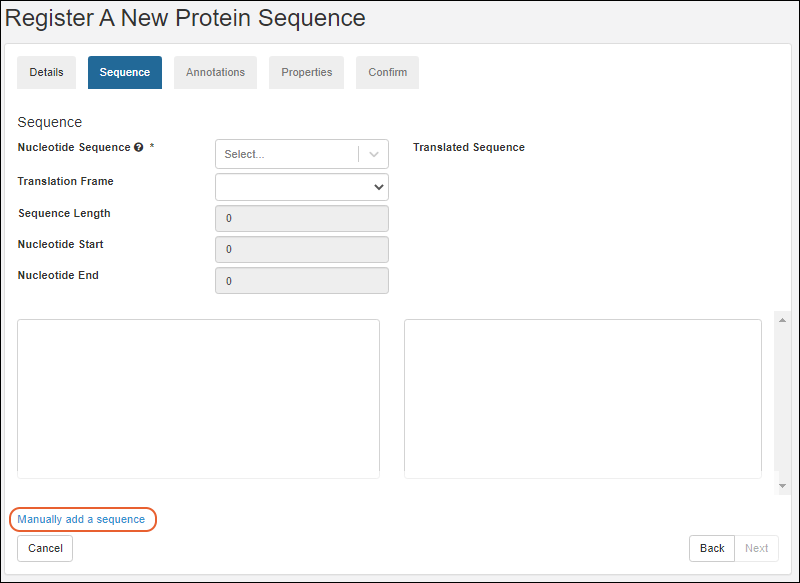

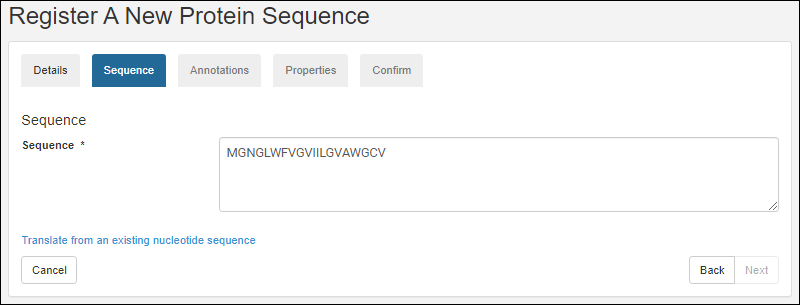

Register Protein Sequences

Register Leaders, Linkers, and Tags

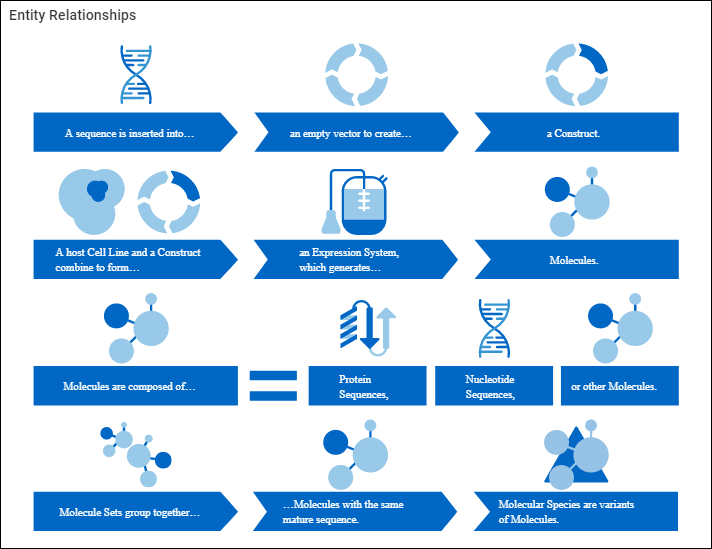

Vectors, Constructs, Cell Lines, and Expression Systems

Registry Reclassification

Biologics: Terminology

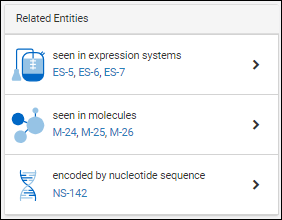

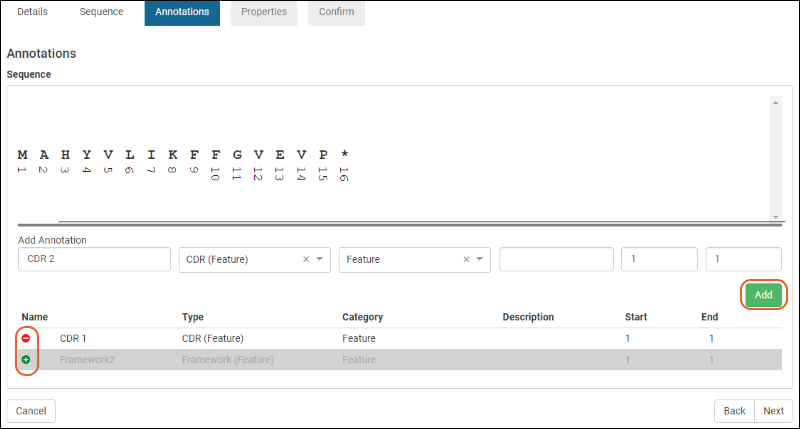

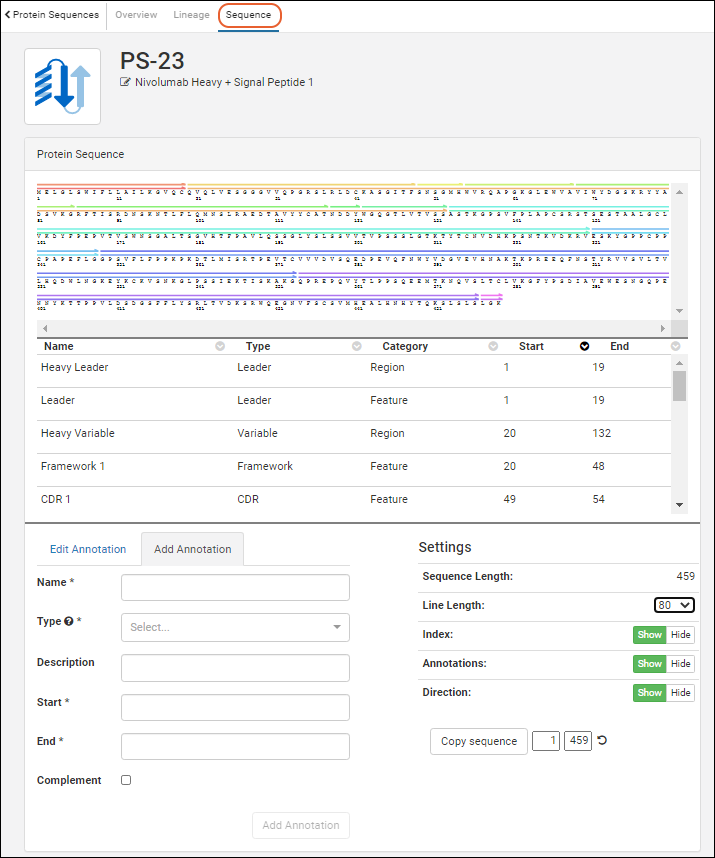

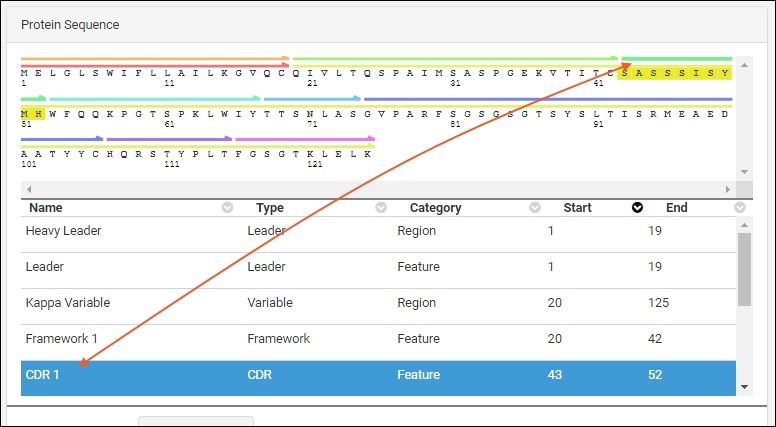

Protein Sequence Annotations

CoreAb Sequence Classification

Biologics: Chain and Structure Formats

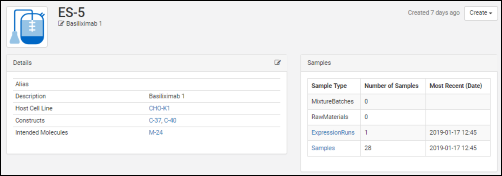

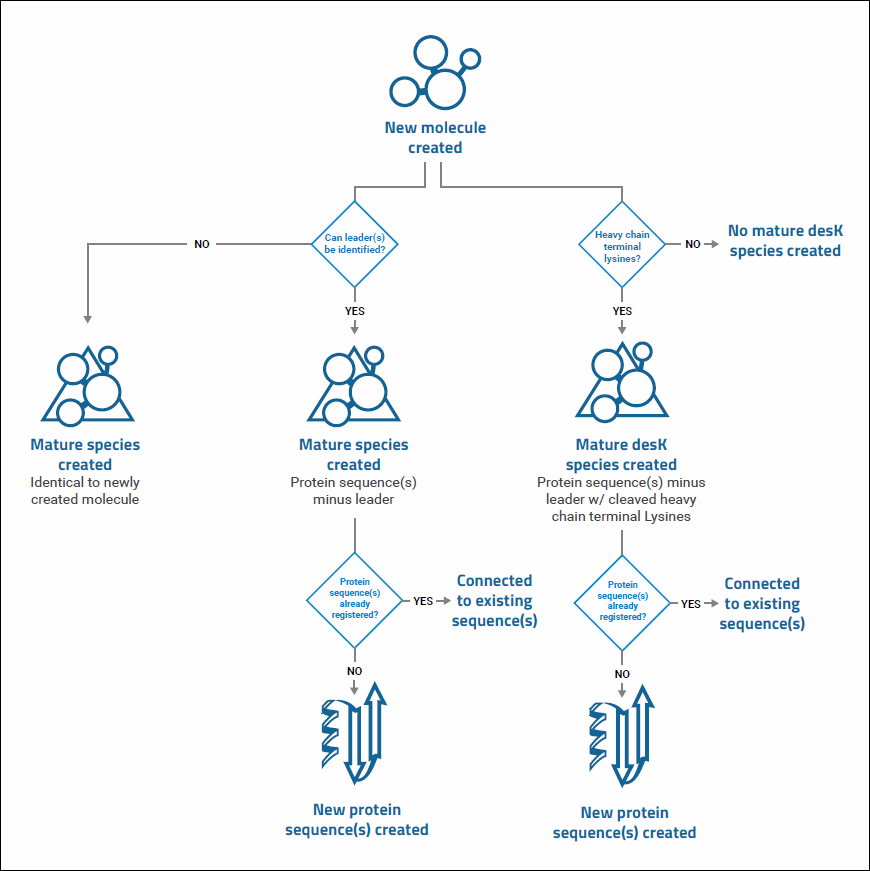

Molecules, Sets, and Molecular Species

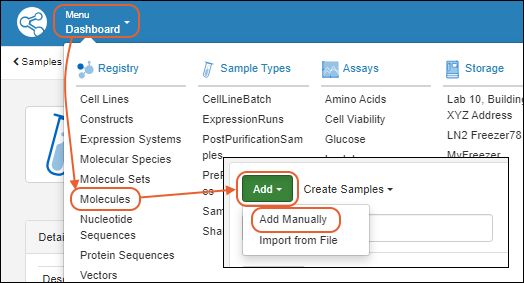

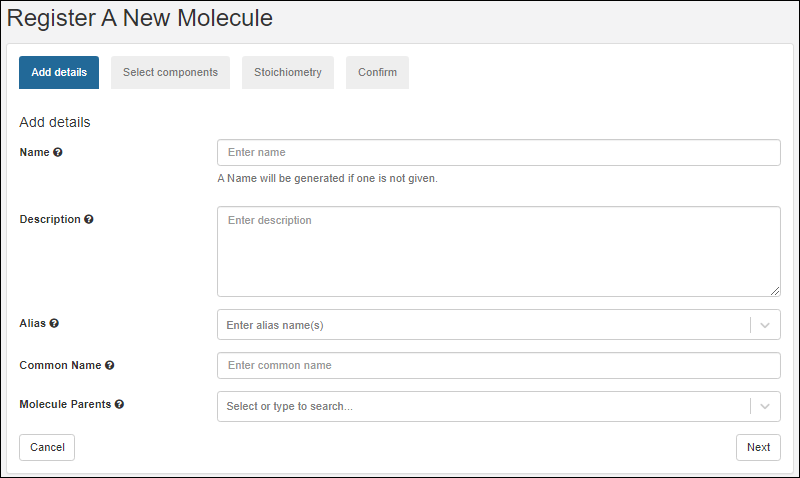

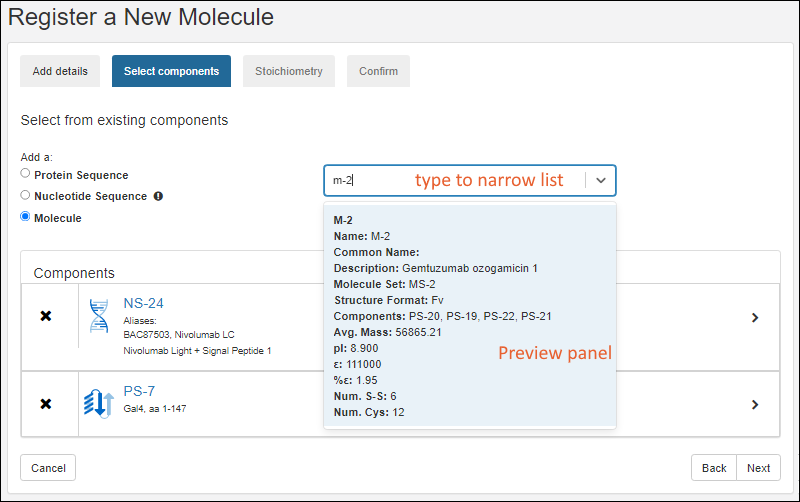

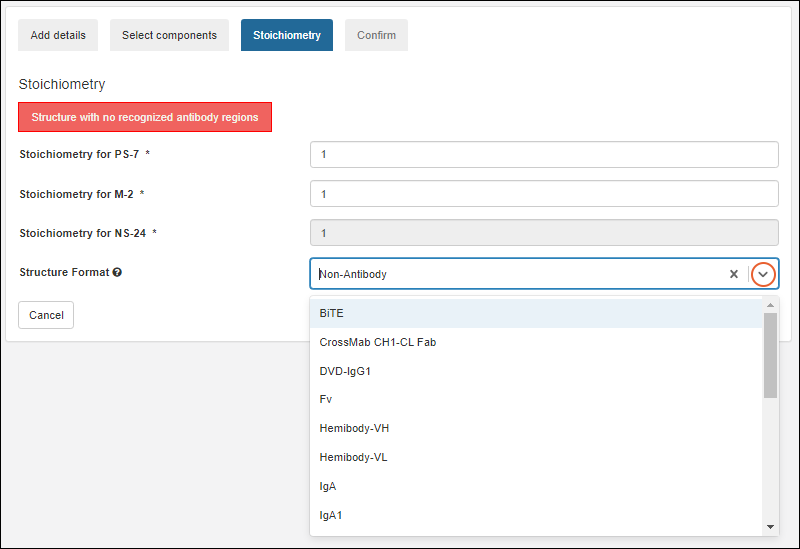

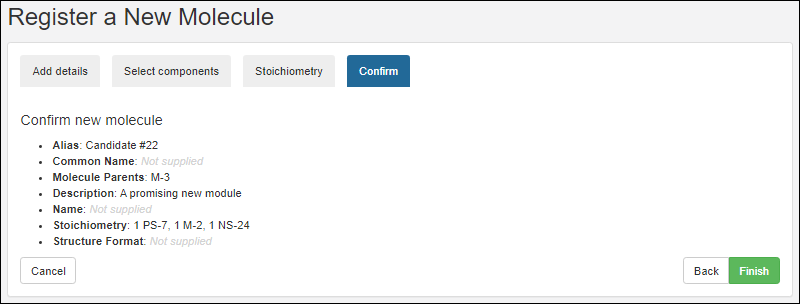

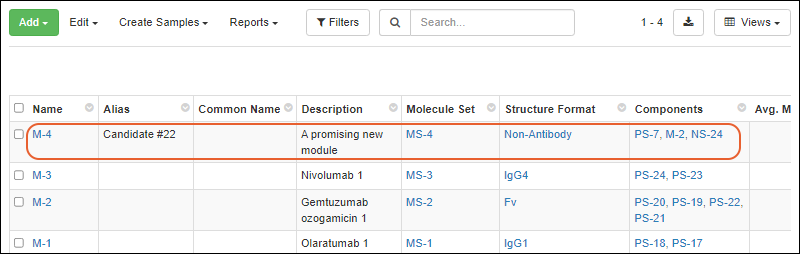

Register Molecules

Molecular Physical Property Calculator

Compounds and SMILES Lookups

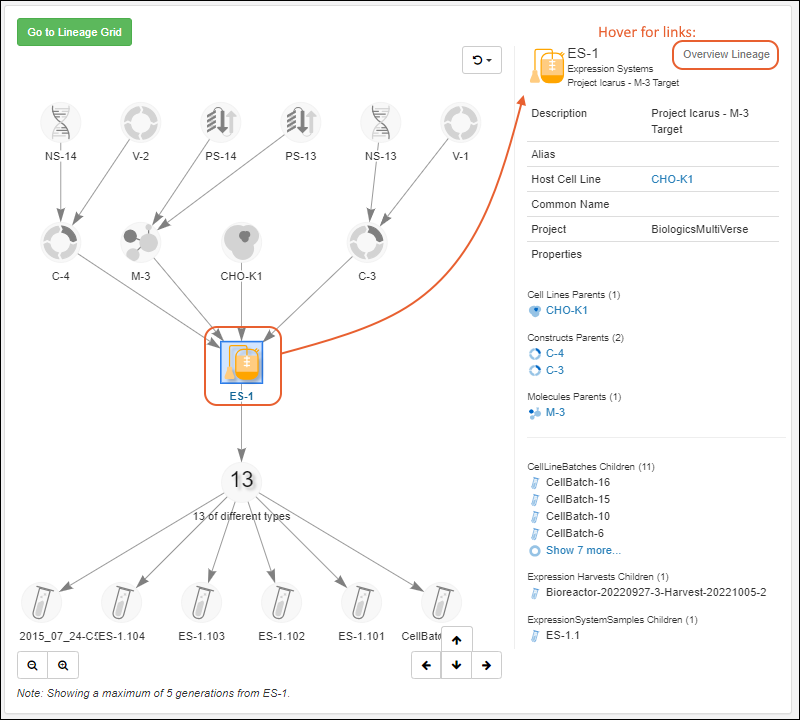

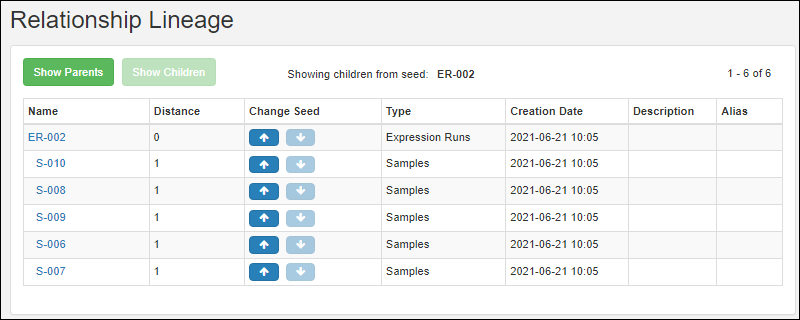

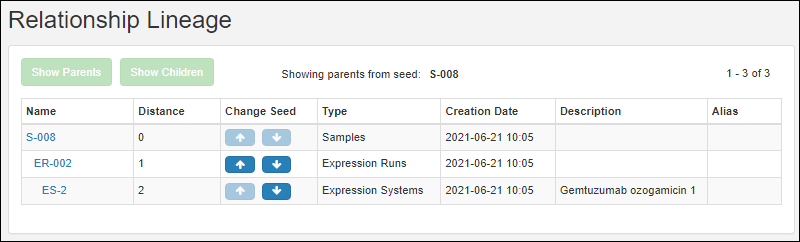

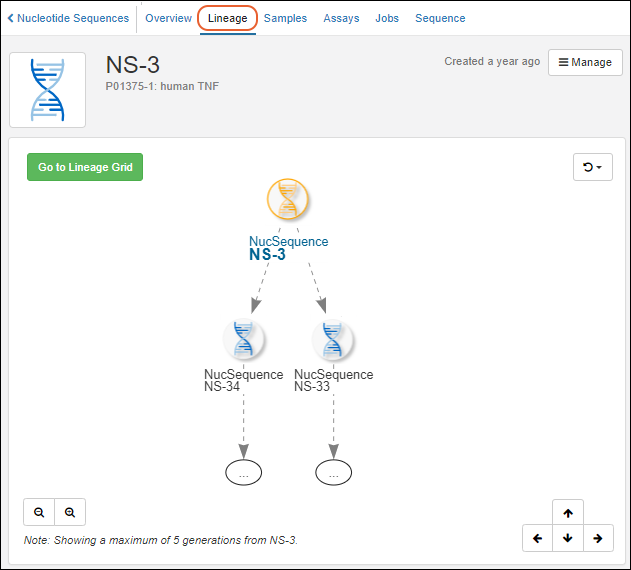

Entity Lineage

Customize the Bioregistry

Bulk Registration of Entities

Use the Registry API

Biologics: Plates

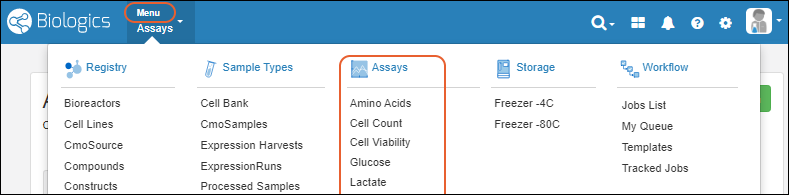

Biologics: Assay Data

Biologics: Specialty Assays

Biologics: Assay Integration

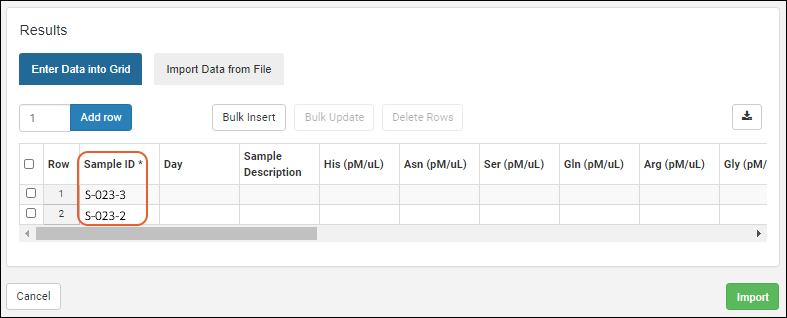

Biologics: Upload Assay Data

Biologics: Assay Batches and QC

Biologics: Media Registration

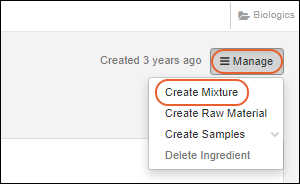

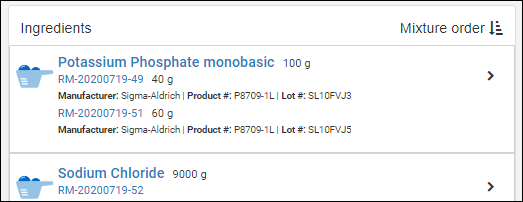

Managing Ingredients and Raw Materials

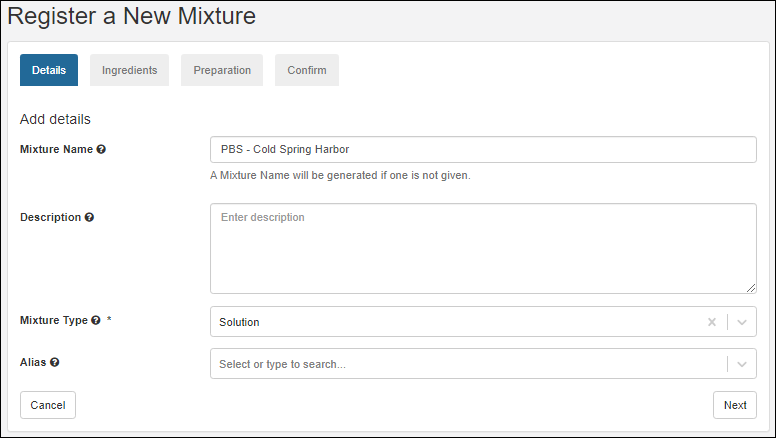

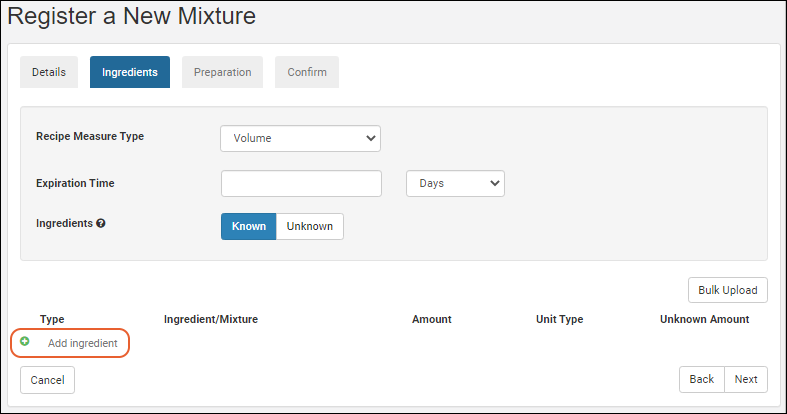

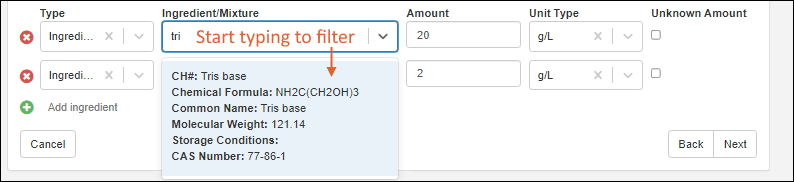

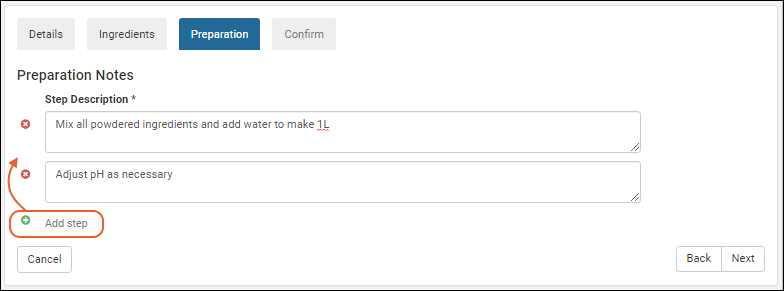

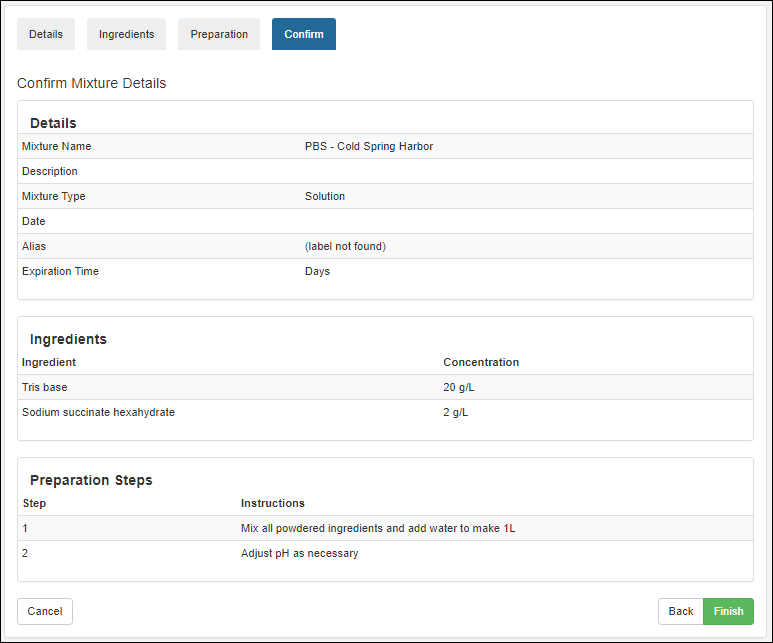

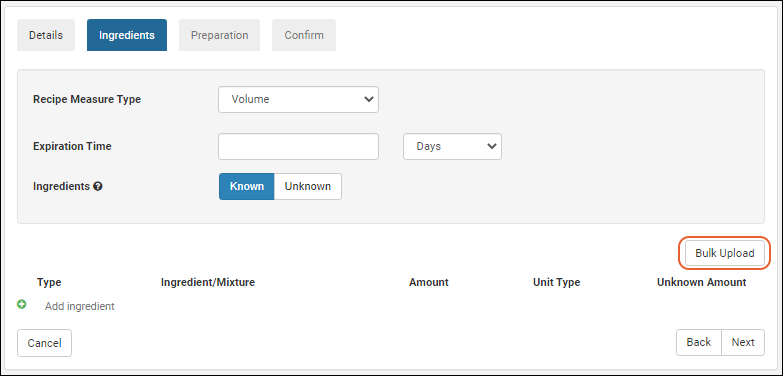

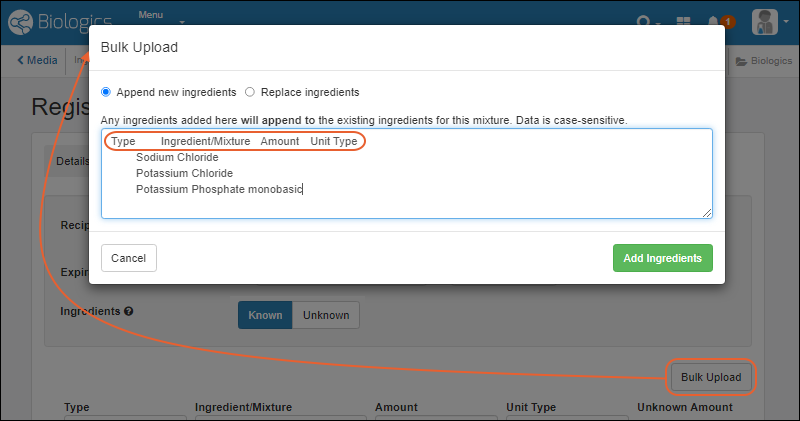

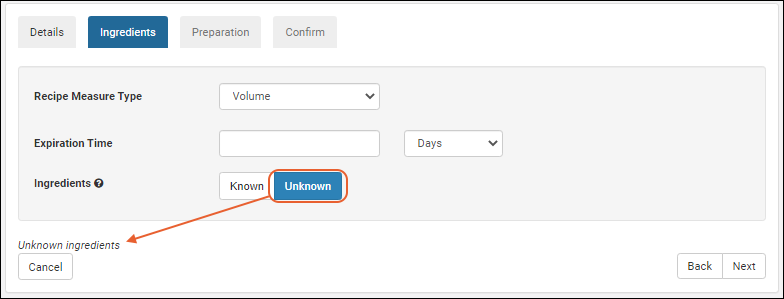

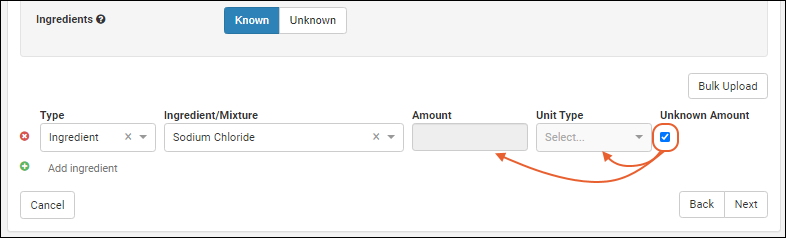

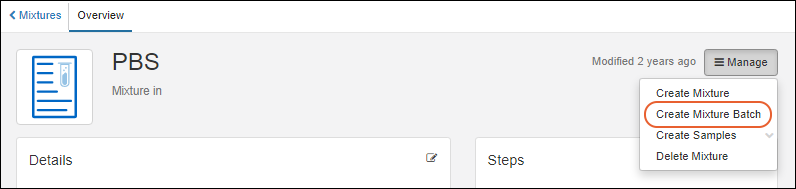

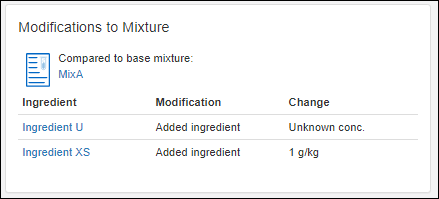

Registering Mixtures (Recipes)

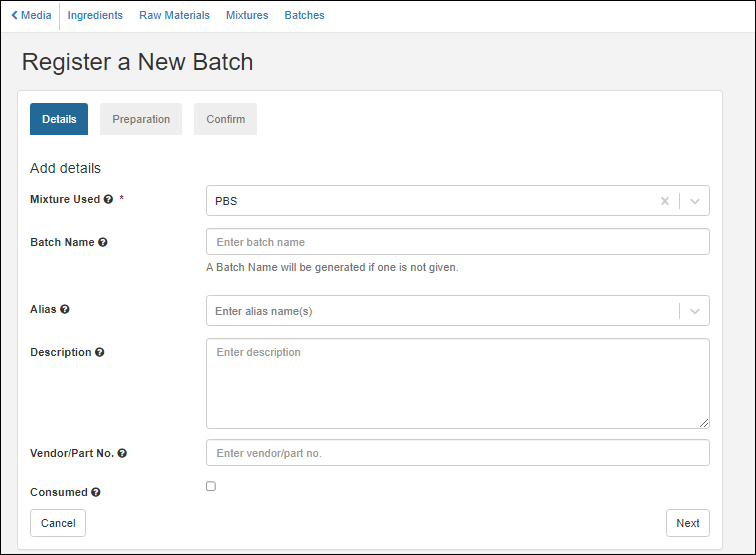

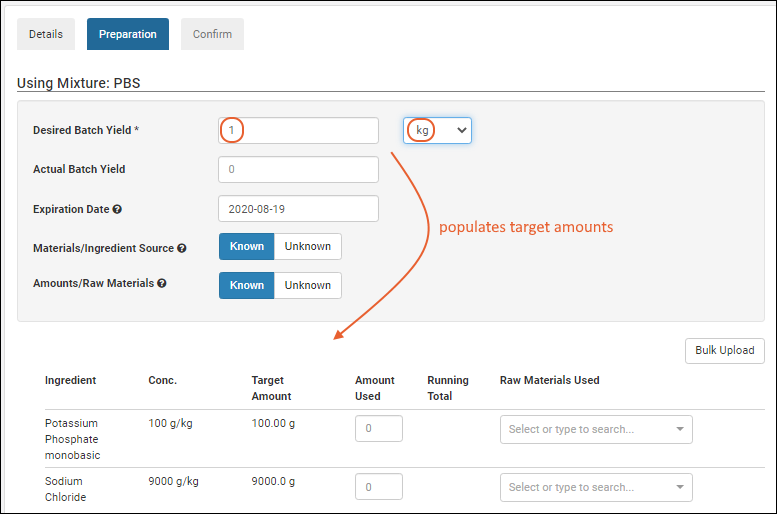

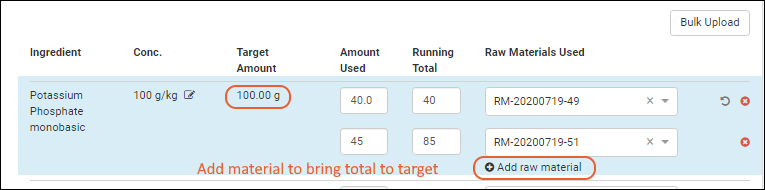

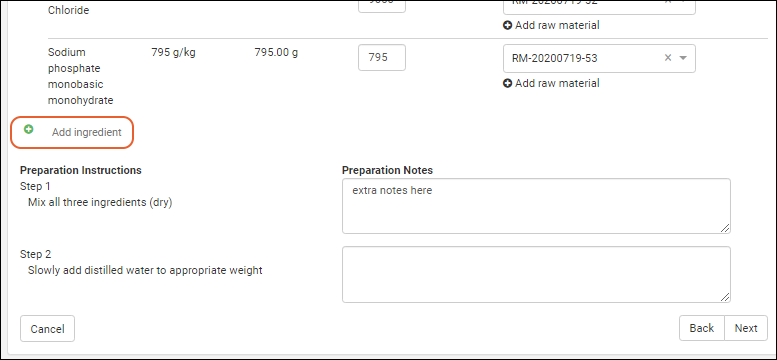

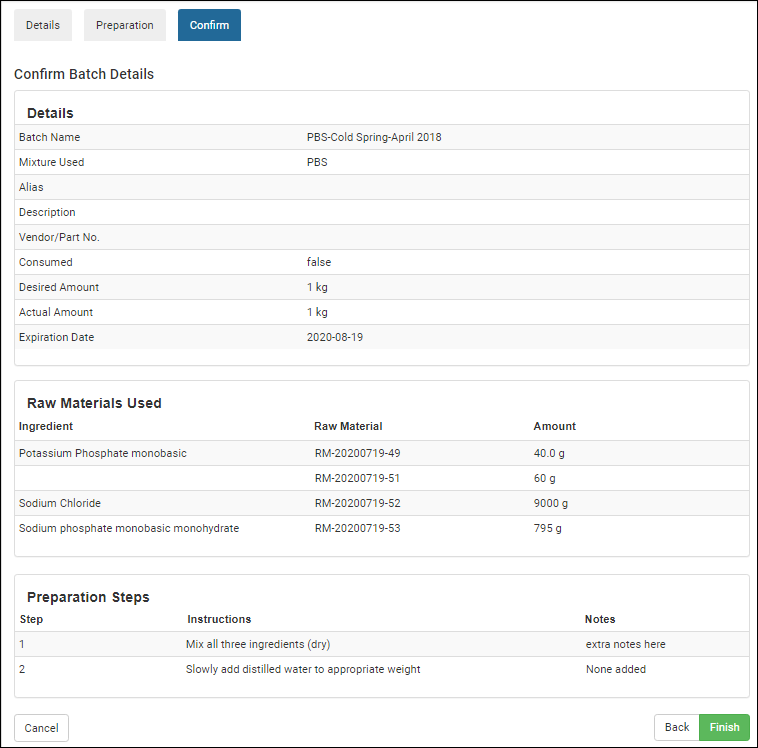

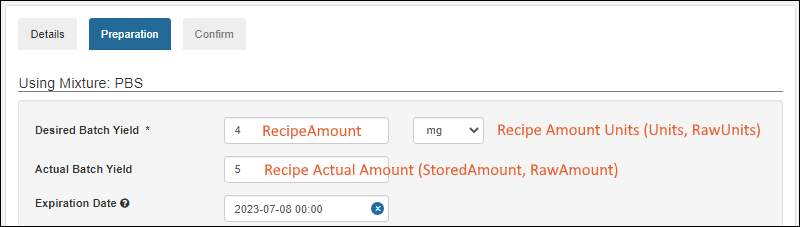

Registering Batches

Biologics Administration

Biologics: Detail Pages and Entry Forms

Biologics: Protect Sequence Fields

Manage Notebook Tags

Biologics Admin: URL Properties

Premium Resources

Product Selection Menu

LabKey Support Portals

Premium Resource: Training Materials

Premium Edition Required

Community Resources

LabKey Terminology/Glossary

FAQ: Frequently Asked Questions

System Integration: Instruments and Software

Demos

Videos

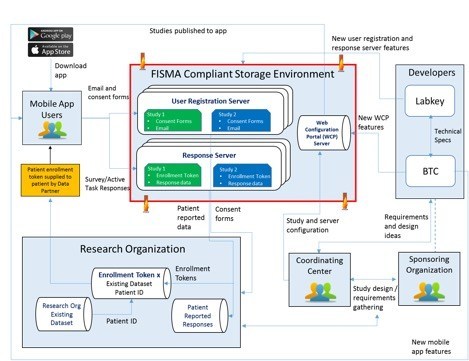

Project Highlight: FDA MyStudies Mobile App

LabKey Webinar Resources

Tech Talk: Custom File-Based Modules

Collaborative DataSpace: User Guide

DataSpace: Learn About

DataSpace: Find Subjects

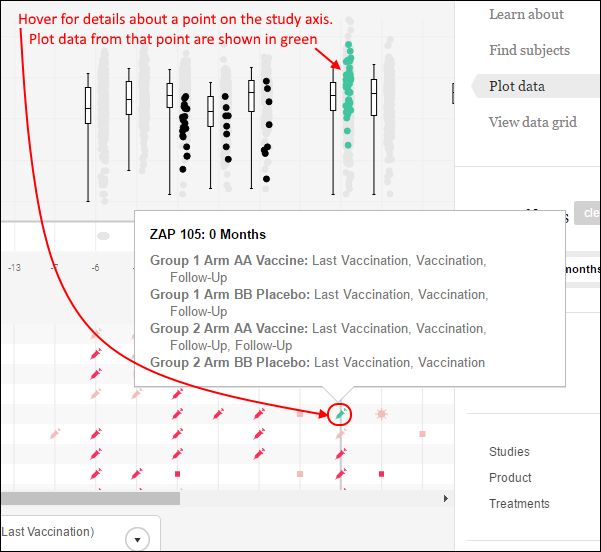

DataSpace: Plot Data

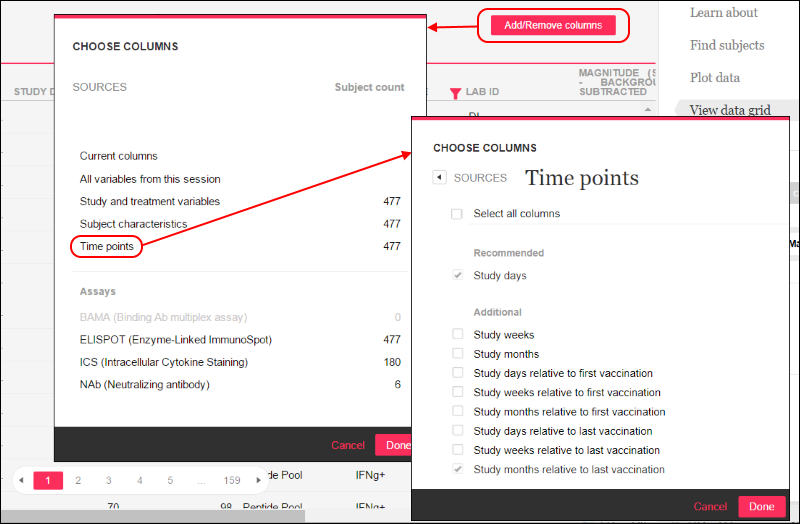

DataSpace: View Data Grid

DataSpace: Monoclonal Antibodies

Documentation Archive

Release Notes 24.7 (July 2024)

Release Notes 24.3 (March 2024)

What's New in 24.3

Release Notes: 23.11 (November 2023)

What's New in 23.11

Release Notes: 23.7 (July 2023)

What's New in 23.7

Release Notes: 23.3 (March 2023)

What's New in 23.3

Release Notes: 22.11 (November 2022)

What's New in 22.11

Release Notes 22.7 (July 2022)

What's New in 22.7

Release Notes 22.3 (March 2022)

What's New in 22.3

Release Notes 21.11 (November 2021)

What's New in 21.11

Release Notes 21.7 (July 2021)

What's New in 21.7

Release Notes 21.3 (March 2021)

What's New in 21.3

Release Notes 20.11 (November 2020)

What's New in 20.11

Release Notes 20.7

What's New in 20.7

Release Notes 20.3

What's New in 20.3

Release Notes 19.3

What's New in 19.3

Release Notes: 19.2

What's New in 19.2

Release Notes 19.1

What's New in 19.1.x

Release Notes 18.3

What's New in 18.3

Release Notes 18.2

What's New in 18.2

Release Notes 18.1

What's New in 18.1

Release Notes 17.3

Release Notes 17.2

Deprecated Docs - Admin-visible only

Documentation Home

Release Notes

Getting Started

- Try it Now: Data Grids

- Explore key feature areas:

- Explore Longitudinal Studies: HIV Disease Progression

- Explore Assay Data Analysis: T Cell Culture - Media Comparison

- Contact Us for a Custom Demo or Trial: The best way to get started is to let us know your research goals and team needs. Request a customized demo to see how we can help.

LabKey SDMS Documentation

LabKey Sample Manager Documentation

- Release Notes: Sample Manager

- Sample Manager Help and Documentation

- Sample Manager

- Electronic Lab Notebooks (ELN)

LabKey LIMS Documentation

Biologics LIMS Documentation

Premium Resources

- Premium Resources: Premium documentation, example code, and best practices.

More Resources

Release Notes 24.11 (November 2024)

- LabKey Server SDMS

- Client APIs and Development Notes

- Other LabKey Products:

LabKey Server SDMS

Premium Edition Feature Updates

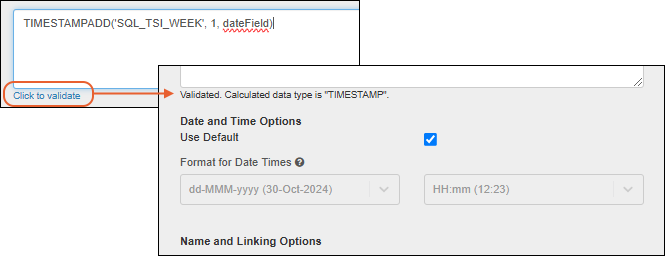

- Include "Calculation" fields in lists, datasets, sample, source, and assay definitions. (docs)

- Support for using a Snowflake database as an external data source is now available. (docs)

- The "External Tool Access" page has been improved to make it easier to use ODBC and JDBC connections to LabKey. (docs)

- Administrators can generate a report of file root sizes across the site. (docs)

- Reporting usage statistics to LabKey can be enabled without also enabling upgrade banners. (docs)

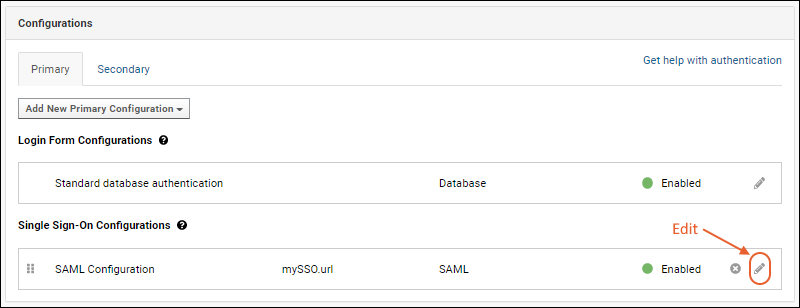

- SAML authentication uses RelayState instead of a configuration parameter, and the configuration interface has been streamlined. Also available in 24.7.7 (docs)

- Administrators can save incomplete authentication configurations if they are disabled. Also available in 24.7.4 (docs)

- Sample Manager Feature Updates

- LabKey LIMS Feature Updates

- Biologics LIMS Feature Updates

Community Edition Updates

- Beginning with maintenance release 24.11.4, when the server is configured to use only HTTPS, a Strict-Transport-Security header will be set to prevent future attempts to access the server over HTTP.

- Linking Sample data to a visit-based study can now be done using the visit label instead of requiring a sequencenum value. (docs)

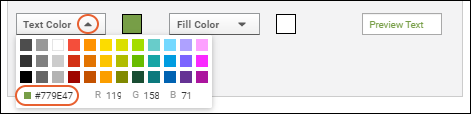

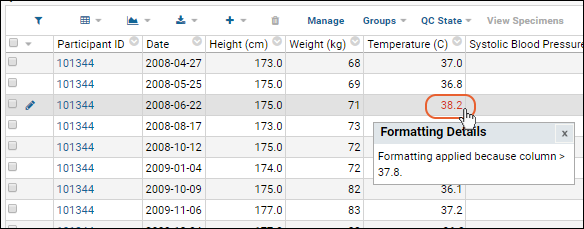

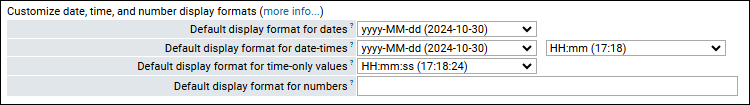

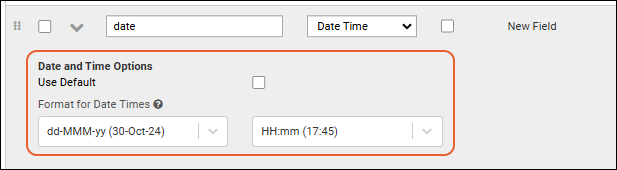

- Date, time, and datetime fields are now limited to a set of common display patterns, making it easier for users to choose the desired format. (docs)

- A new validator will find non-standard date and time display formats. (docs)

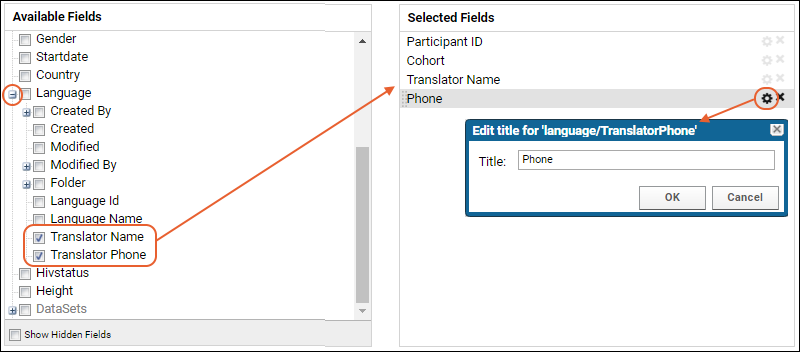

- Use shift-click in the grid view customizer to add all fields from a given node. (docs)

- When a pipeline job is cancelled, any queries it has initiated will also be cancelled. (docs)

- Users can include a description for future reference when generating an API key. (docs)

- Folder file root sizes are now calculated and shown to administrators. (docs)

- When a content security policy is configured, administrators can provide a set of external sources to allow. (docs)

- Sending of the Server HTTP header may be disabled using a site setting. (docs)

- Some "Experimental Features" have been relocated to a "Deprecated Features" page to better describe their status. (docs)

- When a user changes their database password, all current sessions associated with that login are invalidated. (docs)
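The Strict-Transport-Security header mentioned above works by telling the browser how long to refuse plain-HTTP access to the server. The exact header value LabKey Server sends is not stated in these notes, so the following is only a minimal Python sketch, assuming the general directive syntax of RFC 6797, that shows what such a header carries. The function name `parse_hsts` and the sample value are hypothetical.

```python
def parse_hsts(value: str) -> dict:
    """Parse a Strict-Transport-Security header value (RFC 6797 syntax).

    Returns 'max_age' (seconds, or None if absent), 'include_subdomains',
    and 'preload' (a widely used but non-standard directive).
    """
    result = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in value.split(";"):
        directive = directive.strip()
        if not directive:
            continue  # tolerate empty directives, e.g. a trailing ';'
        name, _, arg = directive.partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        arg = arg.strip().strip('"')  # max-age may be given as a quoted string
        if name == "max-age":
            result["max_age"] = int(arg)
        elif name == "includesubdomains":
            result["include_subdomains"] = True
        elif name == "preload":
            result["preload"] = True
    return result


if __name__ == "__main__":
    print(parse_hsts("max-age=31536000; includeSubDomains"))
```

A `max-age` of 31536000 seconds is one year (365 × 24 × 3600); until it expires, a browser that has seen the header will upgrade HTTP requests to HTTPS on its own.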

Distribution Changes and Upgrade Notes

- LabKey Server embeds a copy of Tomcat 10. It no longer uses or depends on a separately installed copy of Tomcat.

- LabKey Cloud subscribers have been upgraded automatically.

- For users with on-premise installations, the process for upgrading from previous versions using a standalone Tomcat 9 has changed significantly. Administrators should be prepared to make additional changes during the first upgrade to use embedded Tomcat. (docs)

- Users with on-premise installations who have already upgraded to use embedded Tomcat will follow a much simpler process to upgrade to 24.11. Note that the distribution name itself has changed to drop the "-embedded" string, as all distributions are now embedded. (linux | windows)

- The process for installing a new LabKey Server has also changed significantly, making it simpler than in past releases. (docs)

- All specialty assays (ELISA, ELISpot, Microarray, MS2, NAb, Luminex, etc.) are now distributed only to clients actively using them. Administrators will see a warning about unknown modules when they upgrade and can safely remove these unused modules. Please contact your Account Manager if you have questions or concerns.

- HTTP access logging is now enabled by default and the recommended pattern has been updated. (docs)

- Users of proxies or load balancers may wish to add this to their accesslog.pattern to capture the IP address of the originating client:

%{X-Forwarded-For}i

- MySQL 9.0.0 is now supported. (docs)

- LabKey Server now supports and recommends the recently released PostgreSQL 17.x. (docs)
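When `%{X-Forwarded-For}i` is added to the access log pattern as described above, the logged value may be a comma-separated chain of addresses rather than a single IP. The following minimal Python sketch shows how the originating client is conventionally read from that value: each proxy appends the address it received the request from, so the left-most entry is the original client. The function name is hypothetical, and it assumes Tomcat writes `-` when the header is absent.

```python
from typing import Optional


def originating_client(x_forwarded_for: str) -> Optional[str]:
    """Return the left-most address from a logged X-Forwarded-For value.

    Returns None when the header was absent (logged as '-') or empty.
    """
    value = x_forwarded_for.strip()
    if not value or value == "-":
        return None
    first = value.split(",")[0].strip()  # proxies append to the right
    return first or None


if __name__ == "__main__":
    print(originating_client("203.0.113.7, 10.0.0.2"))
```

The left-most entry is supplied by whoever sent the original request and can be forged unless the outermost proxy or load balancer overwrites the header, so treat it as informational rather than as an authentication signal.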

Deprecated Features

- Support for Microsoft SQL Server 2014 has been removed. (docs | docs)

- Some older wiki macros were removed; the list of supported macros is in the documentation.

- Support for "Advanced Reports" has been deprecated.

- Support for the "Remote Login API" has been deprecated.

- Support for bitmask (integer) permissions has been deprecated. Developers can learn more below.

- The "Vaccine Study Protocol" interface has been deprecated.

- Future deprecations: These features will be deprecated in the next release. Contact your Account Manager if you have questions or concerns.

- Additional date, time, and datetime parsing patterns. Selecting a date parsing mode (Month-Day-Year or Day-Month-Year) will still be supported. (docs)

- The "Advanced import options" for choosing which objects to import during folder import, and for applying a folder import to multiple folders. (docs)

- "Assay Progress Reports" in studies. (docs)
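The note above says that choosing a date parsing mode (Month-Day-Year or Day-Month-Year) remains supported even as additional parsing patterns go away. The reason the mode matters is that the same string can name two different dates. The Python sketch below illustrates this with a single slash-separated pattern per mode; the `PATTERNS` mapping and `parse_date` function are hypothetical and far narrower than the set of formats LabKey Server actually accepts.

```python
from datetime import date, datetime

# Hypothetical mode-to-pattern mapping, for illustration only.
PATTERNS = {
    "MDY": "%m/%d/%Y",  # Month-Day-Year: 03/04/2025 is March 4
    "DMY": "%d/%m/%Y",  # Day-Month-Year: 03/04/2025 is April 3
}


def parse_date(text: str, mode: str) -> date:
    """Parse a slash-separated date string using the selected parsing mode."""
    return datetime.strptime(text.strip(), PATTERNS[mode]).date()


if __name__ == "__main__":
    for mode in ("MDY", "DMY"):
        print(mode, parse_date("03/04/2025", mode).isoformat())
```

A value such as `25/12/2024` only parses in Day-Month-Year mode; in Month-Day-Year mode `strptime` raises `ValueError` because 25 is not a valid month.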

Client APIs and Development Notes

- Version 3.4.0 of Rlabkey is available. (docs)

- Note: Rlabkey version 3.4.1 has also been released, supporting the consistency improvements in WebDAV URLs made in December 2024. This means version 3.4.1 requires LabKey version 24.12 or higher; earlier versions of LabKey, including 24.11, must use Rlabkey version 3.4.0 or lower. (docs)

- Client API calls that provide credentials and fail authentication will be rejected outright and immediately return a detailed error message. Previously, a call that failed authentication proceeded as an unauthenticated user and then failed (unless guests had access to the target resource) with a less informative message. This change is particularly helpful when the credentials are correct but do not meet password complexity requirements or have expired.

- Version 3.0.0 of our gradlePlugin was released. Its earliest compatible LabKey release is 24.8, and it includes a few changes of note for developers. A few examples are here, and more are listed in the gradlePlugin release notes:

- The AntBuild plugin has been removed, so we no longer have a built-in way to detect modules that are built with Ant instead of Gradle.

- We removed support for picking up .jsp files from resources directories. Move any .jsp files in a resources directory under the src directory instead.

- Support for bitmask (integer) permissions has been removed. Class-based permissions replaced bitmask permissions many years ago.

- Developers who manage .view.xml files should replace <permissions> elements with <permissionClasses> elements. (docs)

- Code that inspects bitmask permissions returned by APIs should switch to inspecting permission class alternatives.

- Developers of React-based modules will need to make changes in the entryPoints.js file instead of directly in the .view.xml file.

- Documentation has been added to assist you in determining which packages and versions are installed for R and Python. (R info | python info)

- API Resources
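The bitmask removal described above affects two kinds of developer code: `.view.xml` files that still use `<permissions>` elements, and client code that tests bits in an integer permission value. The Python sketch below is a hypothetical migration helper for both. Only the element names `<permissions>` and `<permissionClasses>` come from these notes; the namespace, the child elements in any sample XML, the `ReadPermission` class name, and the idea that an API returns a list of permission class names are assumptions to verify against the LabKey documentation.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

# Illustrative fully qualified permission class name (verify against the API docs).
READ_PERMISSION = "org.labkey.api.security.permissions.ReadPermission"


def local_name(tag: str) -> str:
    """Drop any XML namespace prefix, e.g. '{ns}permissions' -> 'permissions'."""
    return tag.rsplit("}", 1)[-1]


def uses_legacy_permissions(view_xml: str) -> bool:
    """True if a .view.xml document still contains a <permissions> element."""
    root = ET.fromstring(view_xml)
    return any(local_name(el.tag) == "permissions" for el in root.iter())


def has_permission(granted_classes: Iterable[str], permission_class: str) -> bool:
    """Class-based check: look for the permission class name itself
    instead of testing bits in an integer mask."""
    return permission_class in set(granted_classes)


if __name__ == "__main__":
    legacy = ('<view xmlns="http://labkey.org/data/xml/view">'
              '<permissions><permission name="read"/></permissions></view>')
    print(uses_legacy_permissions(legacy))
    print(has_permission([READ_PERMISSION], READ_PERMISSION))
```

Matching on the local element name keeps the check independent of whichever namespace a given `.view.xml` declares, so it can be run across a module's views to list files that still need the `<permissionClasses>` conversion.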

Sample Manager

The Sample Manager Release Notes list features by monthly version and product edition.

LabKey LIMS

Announcing LabKey LIMS! The LabKey LIMS Release Notes list features in addition to those in the Professional Edition of LabKey Sample Manager.

Biologics LIMS

The Biologics LIMS Release Notes list features in addition to those in LabKey LIMS.

Previous Release Notes: Version 24.7

Upcoming Features


Releases we are currently working on:

Recent Documentation Updates

Release Notes 25.3 (March 2025)

- LabKey Server SDMS

- Client APIs and Development Notes

- Other LabKey Products:

LabKey Server SDMS

Premium Edition Feature Updates

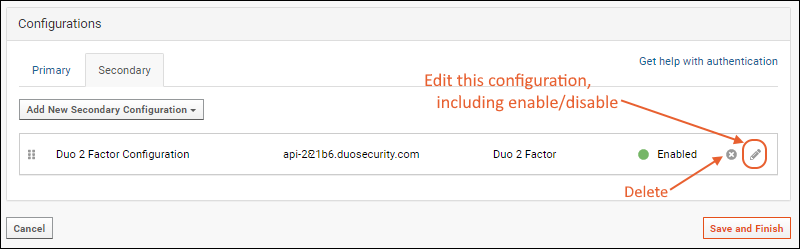

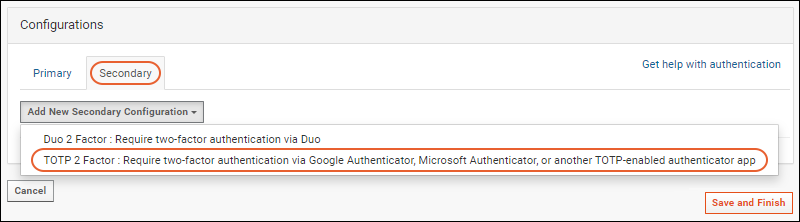

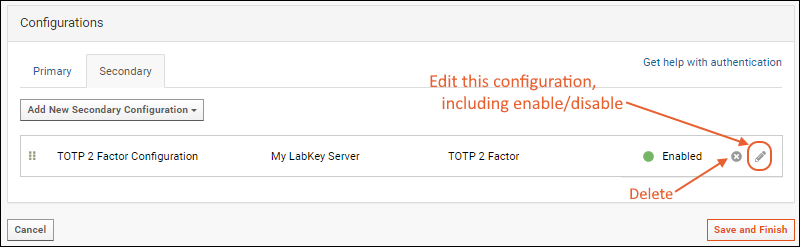

- The Duo secondary authentication provider now integrates with Duo Universal Prompt. (docs) Also available in 24.11.5.

- Sample Manager Feature Updates

- LabKey LIMS Feature Updates

- Biologics LIMS Feature Updates

Community Edition Updates

- Assay transform scripts can be configured to run when data is imported, updated, or both. (docs)

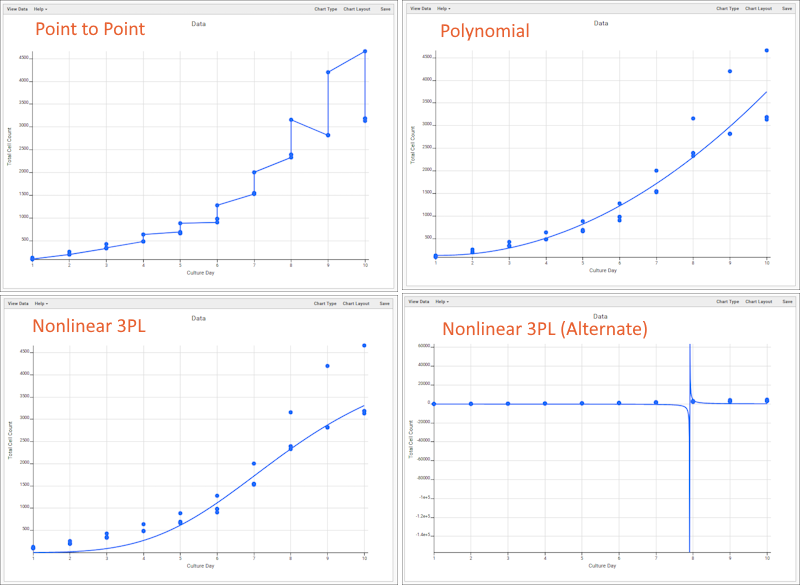

- A trendline option has been added to the chart builder for Line charts. (docs)

- When the server is configured to use only HTTPS, a Strict-Transport-Security header will be set to prevent future attempts to access the server over HTTP. (docs) Also available in 24.11.4.

- Study participants can be deleted from all datasets at once. (docs)

- Set a timeout for read-only HTTP requests, after which long-running processes will be killed. (docs)

- Administrators can register a list of acceptable file extensions for uploads; others will be denied. If no list is provided, any extension is allowed.

- Encoding of WebDav URLs has been made more consistent; users who have been relying on the previous behavior may need to make changes. (docs | details)

- Names of data structures, including Sample Types, Source Types, and Assay Designs, may not contain certain special characters or substrings used internally. (docs)

- The wiki and announcements renderer for Markdown has been replaced. The new renderer is highly compatible with the previous renderer and adds several new features.
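The upload allowlist described above follows a simple rule: with no list configured, any extension is allowed; otherwise only listed extensions are. The Python sketch below expresses that rule. The function name is hypothetical, and several details are assumptions not stated in the release notes: matching is case-insensitive, only the final suffix is checked (so `archive.tar.gz` is treated as `gz`), an empty list counts as "no list", and files without an extension are denied once a list exists.

```python
from pathlib import PurePath
from typing import Iterable, Optional


def is_upload_allowed(filename: str, allowed_extensions: Optional[Iterable[str]]) -> bool:
    """Apply an extension allowlist to an uploaded file name.

    No list (None or empty): every extension is accepted.
    Otherwise: only the listed extensions are accepted.
    """
    if not allowed_extensions:
        return True
    # Normalize entries so "CSV", ".csv", and "csv" all match the same files.
    normalized = {ext.lower().lstrip(".") for ext in allowed_extensions}
    suffix = PurePath(filename).suffix.lower().lstrip(".")
    return bool(suffix) and suffix in normalized


if __name__ == "__main__":
    allowed = ["csv", "xlsx"]
    for name in ("results.CSV", "plate.xlsx", "run.exe", "README"):
        print(name, is_upload_allowed(name, allowed))
```

A client-side check like this only improves the user experience; the server-side setting is what actually enforces the policy.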

Distribution Changes and Upgrade Notes

- LabKey Server embeds a copy of Tomcat 10. It no longer uses or depends on a separately installed copy of Tomcat. (new installation docs)

- LabKey Cloud subscribers are upgraded automatically.

- Users with on-premise installations who have already upgraded to use embedded Tomcat should follow these upgrade instructions. (upgrade on linux | upgrade on windows)

- Users with on-premise installations that have not already made the additional changes required to use embedded Tomcat will need to follow the migration process in the documentation archives. (migration docs)

Deprecated Features

- Support for PostgreSQL 12.x has been removed. (supported versions)

- Support for "Advanced Reports" has been removed.

- Support for the "Remote Login API" has been removed.

- Support for bitmask (integer) permissions has been removed. Developers can learn more in the archives.

- The "Vaccine Study Protocol" interface has been removed.

- Support for FreezerPro integration has been removed.

- Support for additional date, time, and datetime parsing patterns has been deprecated.

- The "Advanced import options" for choosing which objects to import during folder import, and for applying a folder import to multiple folders, have been deprecated.

- "Assay Progress Reports" in studies have been deprecated.

- Support for SQL Server 2016 has been deprecated.

Client APIs and Development Notes

- Rlabkey version 3.4.1 has been released, supporting the consistency improvements in WebDAV URLs. This means version 3.4.1 requires LabKey version 24.12 or higher; earlier versions of LabKey must use Rlabkey version 3.4.0 or lower. (docs)

- API Resources
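The Rlabkey note above states one compatibility rule: Rlabkey 3.4.1 and later requires LabKey 24.12 or later. Version strings compare correctly only when split into numeric parts (as text, "24.9" would sort after "24.12"). The Python sketch below encodes just that stated rule; the function names are hypothetical, and pairings the note does not address are deliberately not flagged rather than guessed at.

```python
from typing import Tuple

Version = Tuple[int, ...]


def parse_version(text: str) -> Version:
    """Turn '3.4.1' or '24.11.4' into a comparable tuple of integers."""
    return tuple(int(part) for part in text.strip().split("."))


def rlabkey_pair_ruled_out(rlabkey: str, labkey: str) -> bool:
    """True if the release note rules out this Rlabkey/LabKey pairing.

    Encodes only the stated rule: Rlabkey >= 3.4.1 needs LabKey >= 24.12.
    """
    return parse_version(rlabkey) >= (3, 4, 1) and parse_version(labkey) < (24, 12)


if __name__ == "__main__":
    for pair in (("3.4.1", "24.11.4"), ("3.4.1", "25.3"), ("3.4.0", "24.11")):
        print(pair, rlabkey_pair_ruled_out(*pair))
```

Python compares tuples element by element, so `(24, 11, 4) < (24, 12)` holds while `(25, 3) < (24, 12)` does not, which is the ordering the release cadence implies.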

Sample Manager

The Sample Manager Release Notes list features by monthly version and product edition.

LabKey LIMS

The LabKey LIMS Release Notes list features in addition to those in the Professional Edition of LabKey Sample Manager.

Biologics LIMS

The Biologics LIMS Release Notes list features in addition to those in LabKey LIMS.

Previous Release Notes: Version 24.11

Getting Started

- Try LabKey Now

- Learn more about Sample Manager

- Learn more about Biologics LIMS

- Learn even more, then contact us for a custom demo or trial

Topics

More LabKey Solutions

Try it Now: Data Grids

- Securely share your data with colleagues through interactive grid views

- Collaboratively build and explore interactive visualizations

- Drill down into de-identified data for study participants

- Combine related datasets using data integration tools

Begin the Data Grid Tutorial

Trial Servers

LabKey Server Trial

LabKey Server Trial instances contain a core subset of features, and sample content to help get you started. Upload your own data, try tutorials, and even create a custom site tailored to your research and share it with colleagues. Your trial lasts 30 days and we're ready to help you with next steps toward incorporating LabKey Server into your research projects.

Start here: Explore LabKey Server with a trial in LabKey Cloud

Sample Manager Trial

Try the core features of LabKey Sample Manager using our example data and adding your own. Your trial lasts 30 days and we're ready to help you with next steps.

Start here: Get Started with Sample Manager

Biologics LIMS Trial

Try the core features of LabKey Biologics LIMS using our example data and tutorial walkthroughs. Your trial lasts 30 days and we're ready to help you with next steps toward incorporating LabKey Biologics into your work.

Start here: Explore LabKey Biologics with a Trial

Explore LabKey Server with a trial in LabKey Cloud

To get started using LabKey Server and understanding the core functionality, contact us about your research needs and goals. Upon request we will set up a LabKey Cloud-based trial of LabKey Server for you.

You'll receive an email with details about getting started and logging in.

Trial server instances contain a subset of features, and some basic content to get you started. Upload your own data, try tutorials, and even create a custom site tailored to your research and share it with colleagues. Your trial lasts 30 days and we're ready to help you with next steps toward incorporating LabKey Server into your research projects.

Tours & Tutorials

Step-by-step introductions to key functionality of LabKey Server.

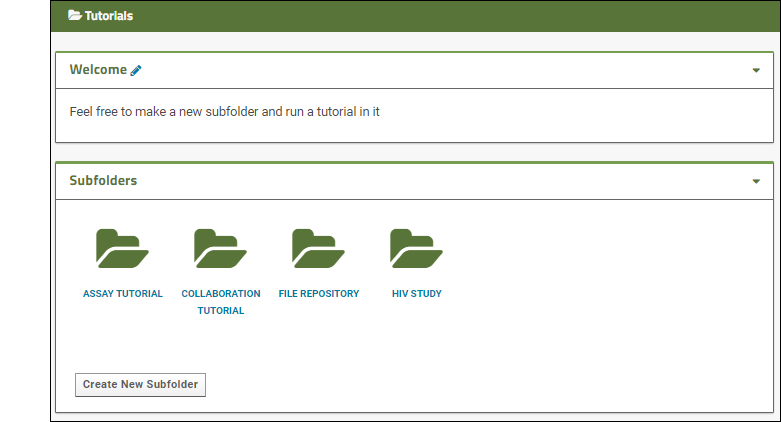

Introduction to LabKey Server: Trial

Welcome to LabKey Server!

- Navigation and User Interface

- Security Model

- Tools for Working Together

- Tools for Data Analysis

- Tools for Research Studies

- What's Next?

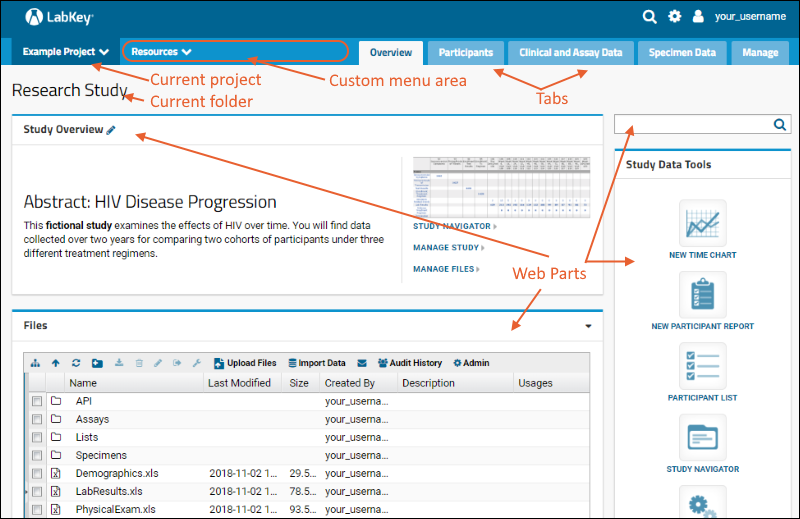

Navigation and User Interface

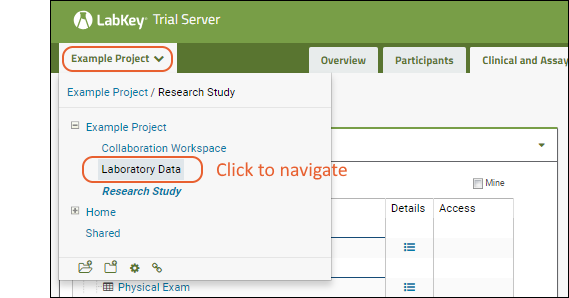

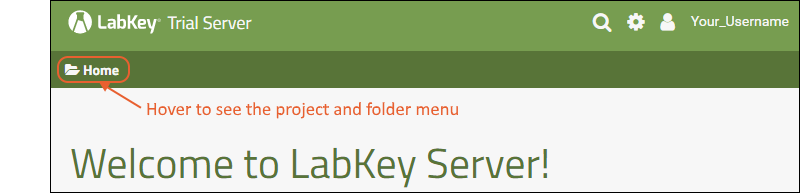

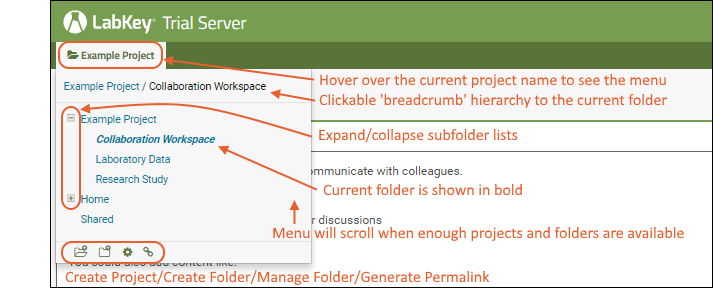

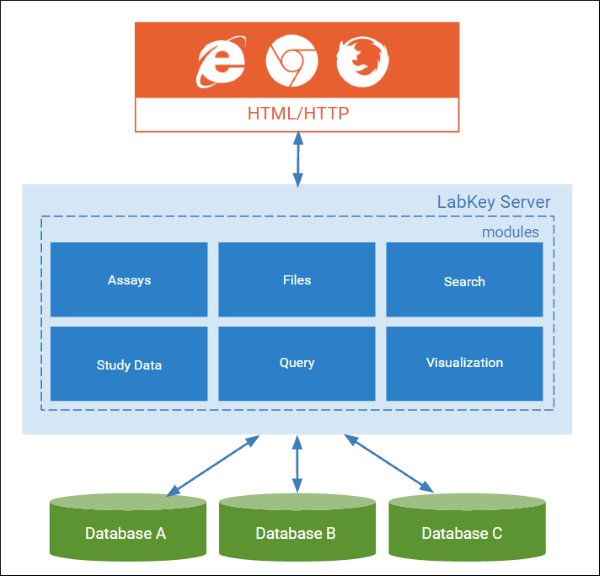

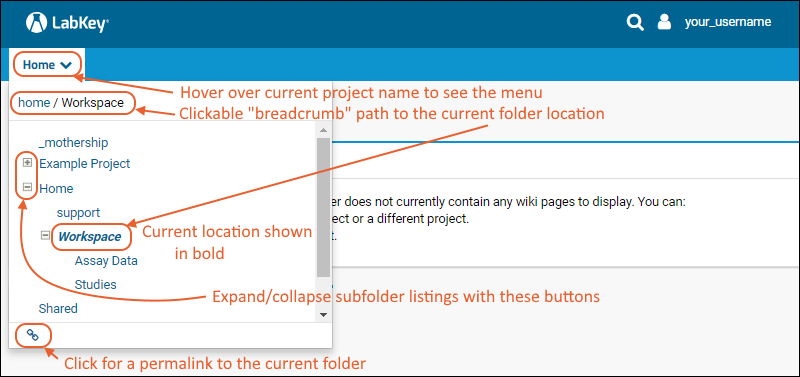

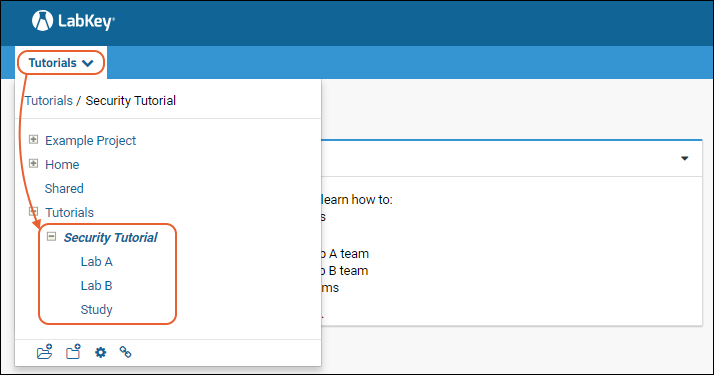

Projects and Folders

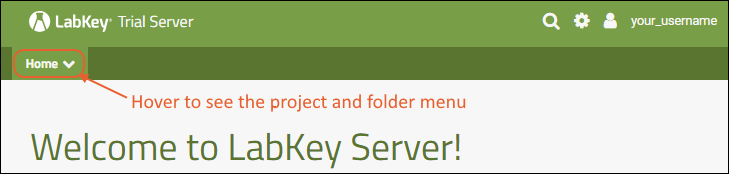

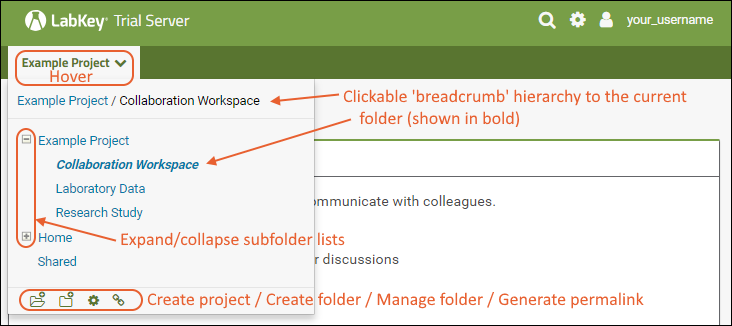

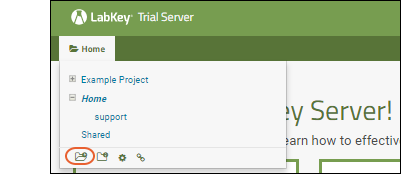

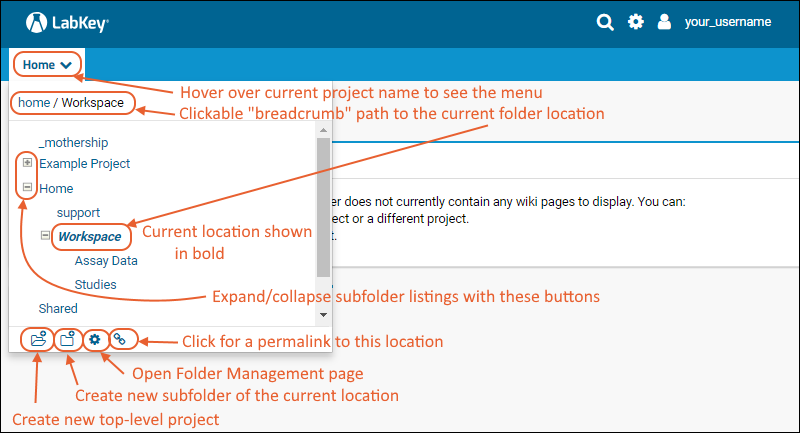

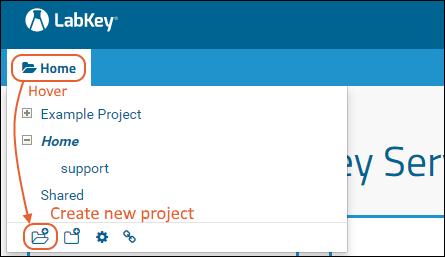

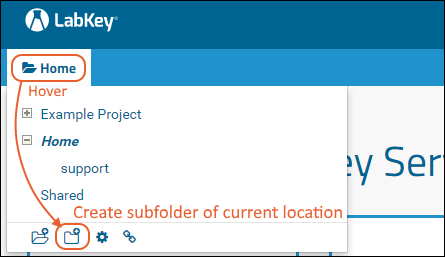

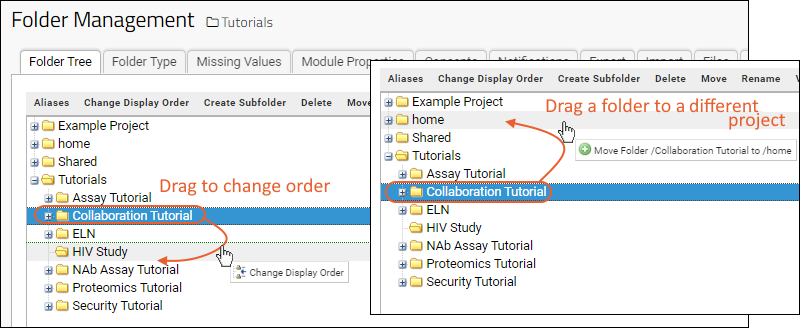

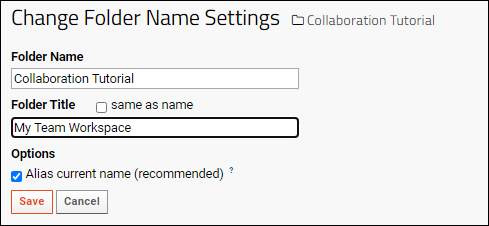

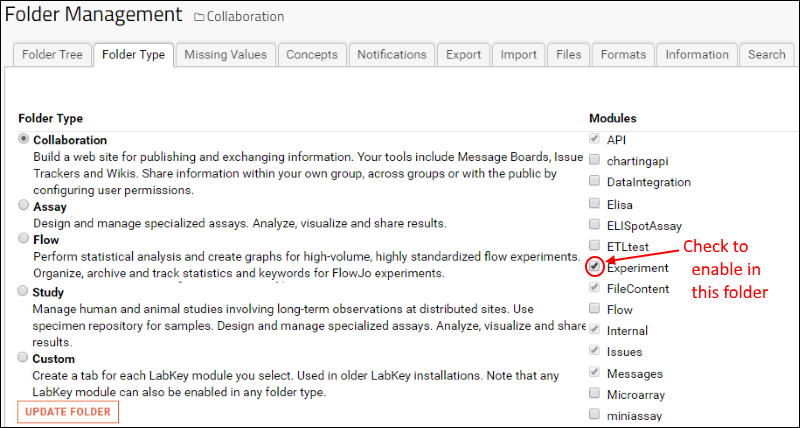

The project and folder hierarchy is like a directory tree and forms the basic organizing structure inside LabKey Server. Everything you create or configure in LabKey Server is located in some folder. Projects are the top-level folders, with all the same behavior plus some additional configuration options; they typically represent a separate team or research effort.

The Home project is a special project. On your Trial server, it contains the main welcome banner. To return to the Home project at any time, click the LabKey logo in the upper left corner.

The project menu is on the left end of the menu bar and includes the display name of the current project. Hover over the project menu to see the available projects, and folders within them. Click any project or folder name to navigate there.

Any project or folder with subfolders shows buttons for expanding and collapsing the list. If you are in a subfolder, a clickable 'breadcrumb' trail at the top of the menu lets you quickly move up the hierarchy. The menu scrolls when there are enough items, with the current location visible and expanded by default.

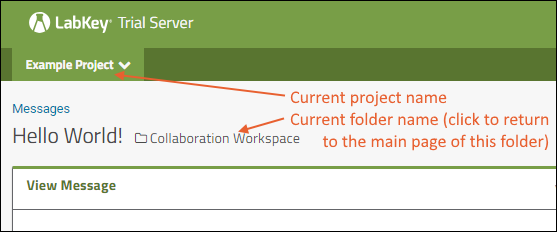

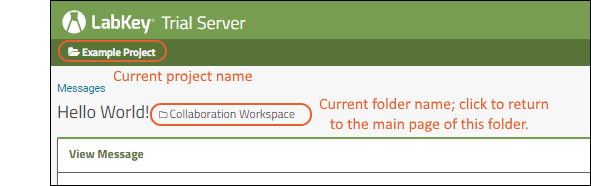

The project menu always displays the name of the current project, even when you are in a folder or subfolder. A link with the folder name is shown near the top of page views like the following, offering easy one-click return to the main page of the folder.


For more about projects, folders, and navigation, see Project and Folder Basics.
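Because the hierarchy described above behaves like a directory tree, a folder's location can be pictured as a slash-separated path whose first segment is its project, and the breadcrumb trail is simply the list of ancestor paths. The Python sketch below illustrates that model using folder names from this trial. The path format and all function names are assumptions for illustration, not a description of LabKey Server's internal API.

```python
from typing import List


def path_segments(container_path: str) -> List[str]:
    """Split a path such as '/Example Project/Research Study' into names."""
    return [part for part in container_path.split("/") if part]


def project_of(container_path: str) -> str:
    """The project is the top-level folder, i.e. the first path segment."""
    segments = path_segments(container_path)
    if not segments:
        raise ValueError("the root path has no project")
    return segments[0]


def breadcrumbs(container_path: str) -> List[str]:
    """Ancestor paths from the project down to the current folder."""
    segments = path_segments(container_path)
    return ["/" + "/".join(segments[: i + 1]) for i in range(len(segments))]


if __name__ == "__main__":
    here = "/Example Project/Collaboration Workspace"
    print(project_of(here))
    print(breadcrumbs(here))
```

This mirrors why the project menu can always show the current project's name: however deep the current folder is, the project is recoverable from the first level of its path.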

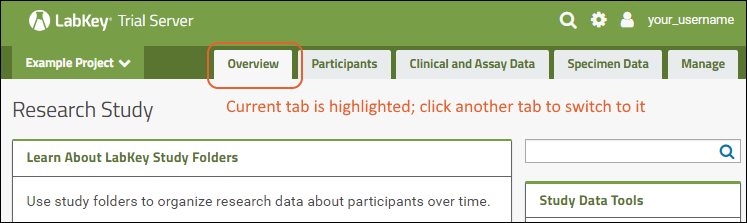

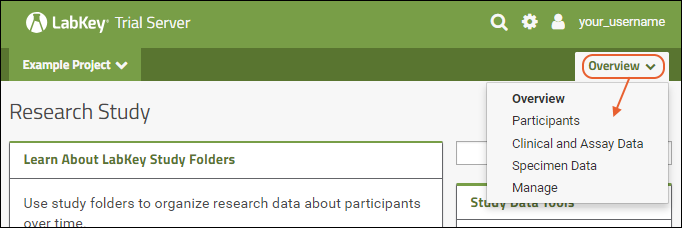

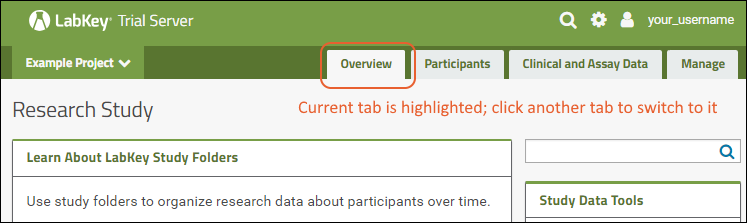

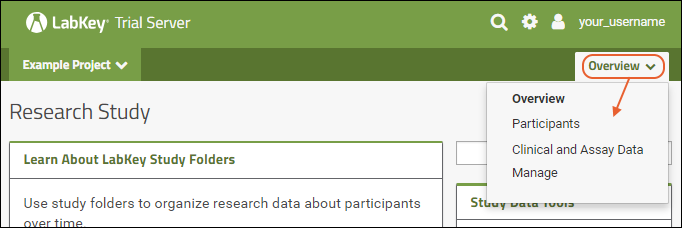

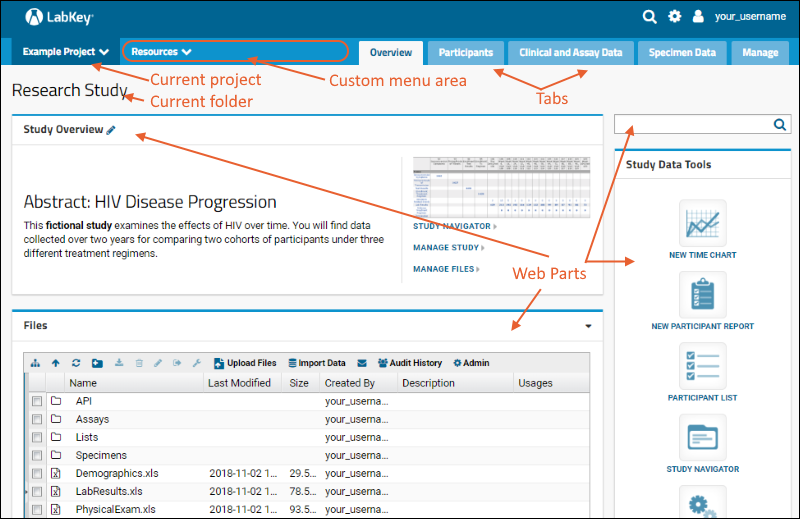

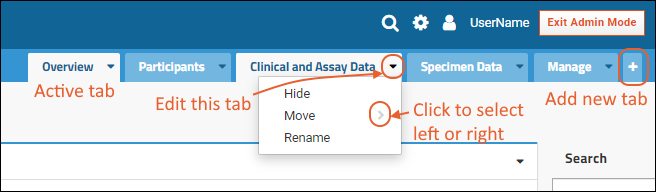

Tabs

Using tabs within a folder can give you new "pages" of user interface to help organize content. For an example of tabs in action, see the Research Study within the Example Project.

When your browser window is too narrow to display tabs arrayed across the screen, they are collapsed into a pulldown menu showing the current tab name and a chevron icon. Click the name of a tab on this menu to navigate to it.


For more about adding and customizing tabs, see Use Tabs.

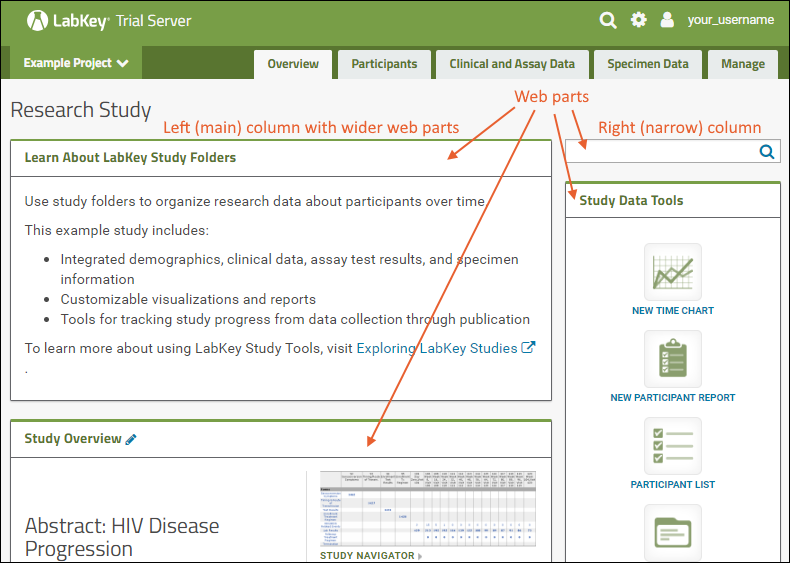

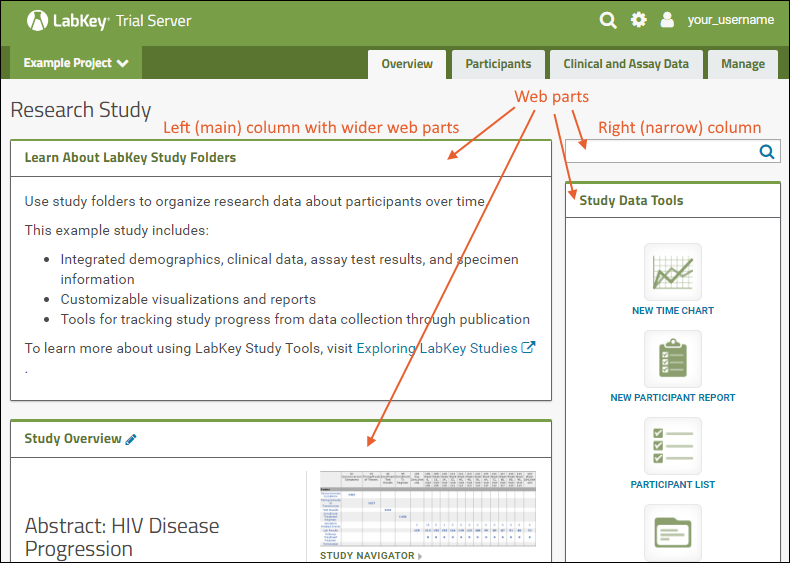

Web Parts

Web parts are user interface panels that can be shown on any folder page or tab. Each web part provides some type of interaction for users with underlying data or other content.

There is a main "wide" column on the left and a narrower column on the right. Each column supports a different set of web parts. By combining and reordering these web parts, an administrator can tailor the layout to the needs of the users.

For a hands-on example to try right now, explore the Collaboration Workspace folder of the Example Project on your Trial Server.

To learn more, see Add Web Parts and Manage Web Parts. For a list of the types of web parts available in a full installation of LabKey Server, see the Web Part Inventory.


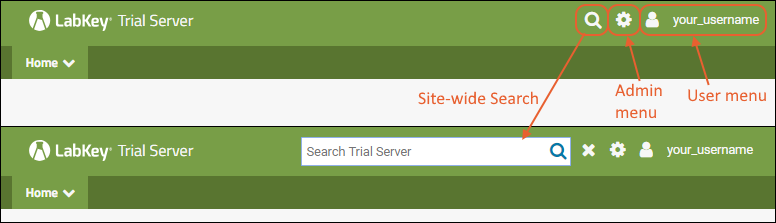

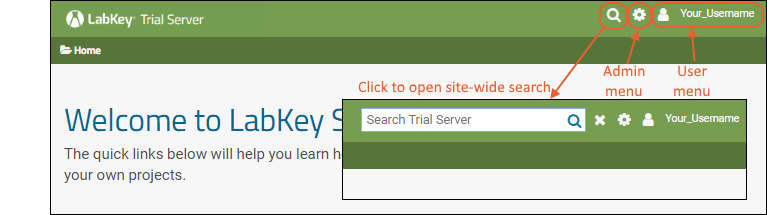

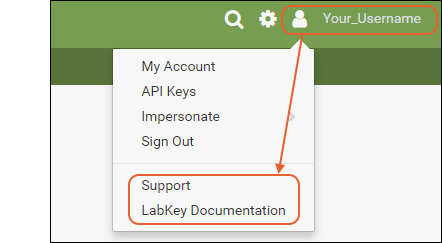

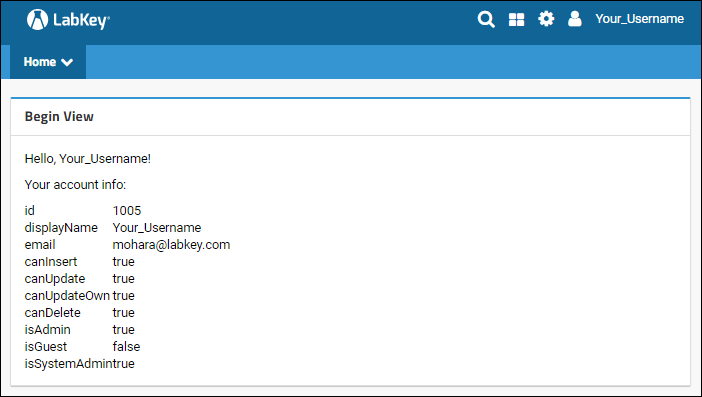

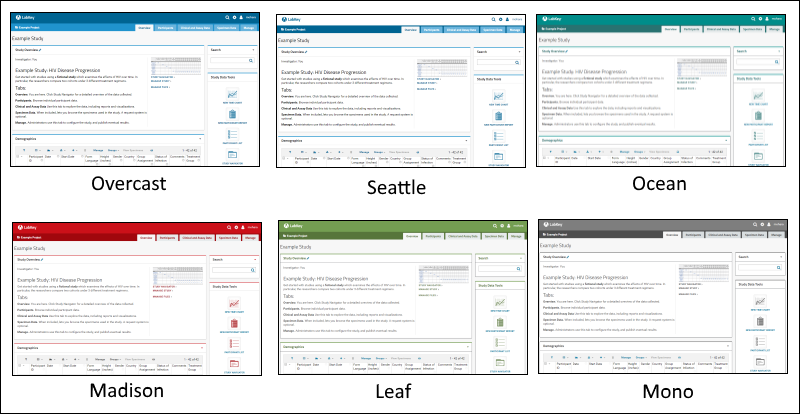

Header Menus

In the upper right, icon menus offer:


- Search: Click to open a site-wide search box.

- Admin: Shown only to administrators; offers administrative options to users granted such access. See below.

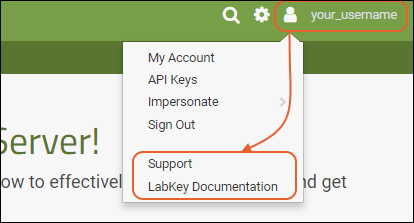

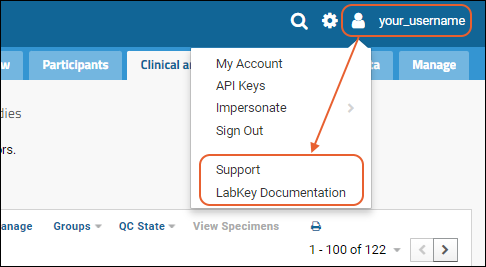

- username: Login and security options; help links to documentation.

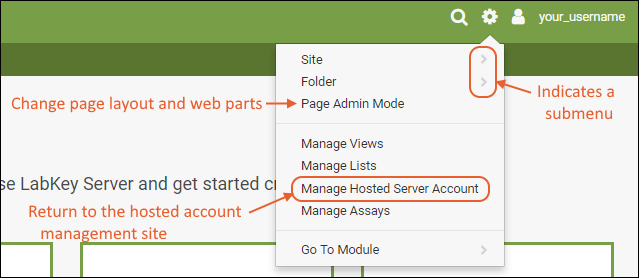

Admin Menu

The "first user" of this trial site will always be an administrator and have access to the Admin menu. If that user adds others, they may or may not have the same menu of options available, depending on the permissions granted to them.


- Site >: Settings that pertain to the entire site.

- Admin Console: In this Trial edition of LabKey Server, some fields are not configurable and may be shown as read-only. See Admin Console for details about options available in the full installation of LabKey.

- Site Users, Groups, Permissions: Site-level security settings.

- Create Project: Creates a new project (top-level folder) on the server.

- Folder >: Settings for the current folder.

- Permissions: Security configuration for the current folder.

- Management: General configuration for the current folder.

- Project Users and Settings: General configuration for the current project.

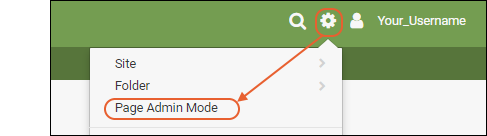

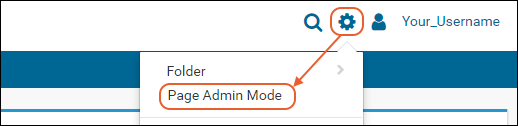

- Page Admin Mode: Used to change page layout and add or remove UI elements.

- Manage Views, Lists, Assays: Configuration for common data containers.

- Manage Hosted Server Account: Return to the site from which you launched this trial server.

- Go To Module >: Home pages for the currently enabled modules.

Security Model

LabKey Server has a group- and role-based security model. Whether an individual is authorized to see a resource or perform an action is checked dynamically based on the groups they belong to and the roles (permissions) granted to them. Learn more here: Security. Try a walkthrough using your Trial Server here: Exploring LabKey Security.

Tools for Working Together

Collaborating with teams within a single lab or around the world is made easier when you share resources and information in an online workspace.

- Message Boards: Post announcements and carry on threaded discussions. LabKey uses message boards for the Support Forums. Learn more here.

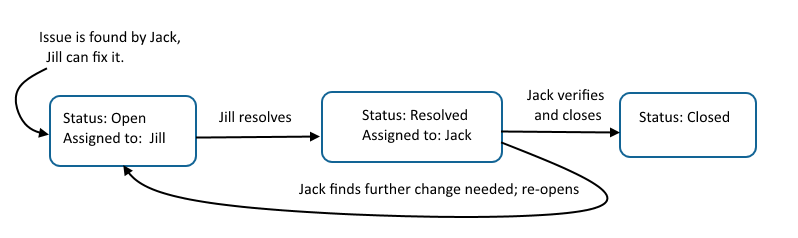

- Issue Trackers: Track issues, bugs, or other workflow tasks (like assay requests) by customizing an issue tracker. LabKey uses an issue tracker to manage development issues. Learn more here.

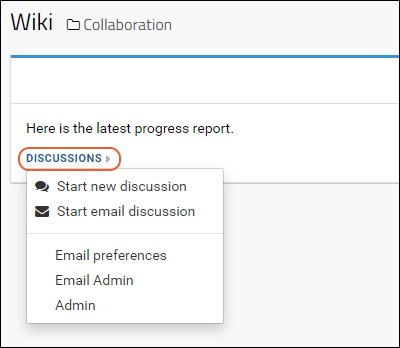

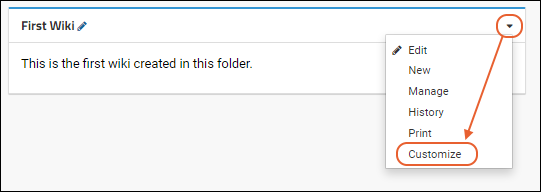

- Wikis: Documents written in HTML, Markdown, Wiki syntax, or plain text; they can include images, links, and live content from data tables. You're reading a Wiki page right now. Learn more here.

- File Repositories: Upload and selectively share files and spreadsheets of data; connect with custom import methods. You can see an example here. Learn more here.

To learn more and try these tools for yourself, navigate to the Example Project > Collaboration Workspace folder of your Trial Server in one browser window, and open the topic Exploring LabKey Collaboration in another.

Tools for Data Analysis

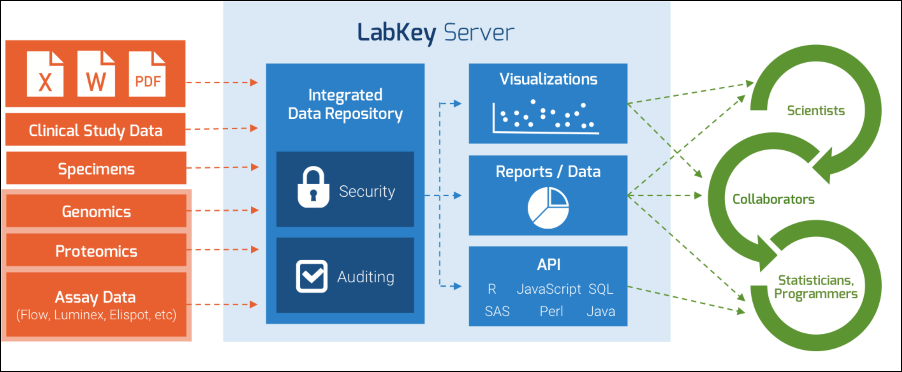

Biomedical research data comes in many forms, shapes, and sizes. LabKey integrates directly with many types of instruments and software systems and, with customization, can support any type of tabular data.

- Uploading Data: From dragging and dropping single spreadsheets to connecting a data pipeline to an outside location for incoming data, your options are as varied as your data. Learn about the options here: Import Data.

- LabKey Data Grids: LabKey data grids show a configurable view of the underlying database table. To learn more, try a quick online tour of data grids.

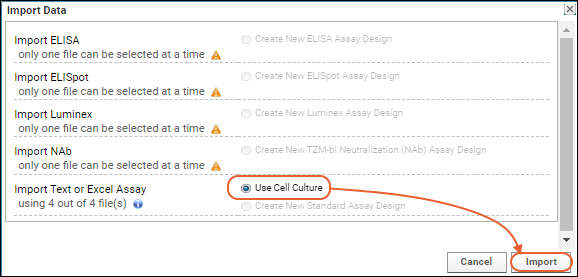

- Interpreting Instrument Data: Using assay designs and pipeline protocols, you can direct LabKey Server to correctly interpret complex instrument data during import. Learn more here: Assay Data.

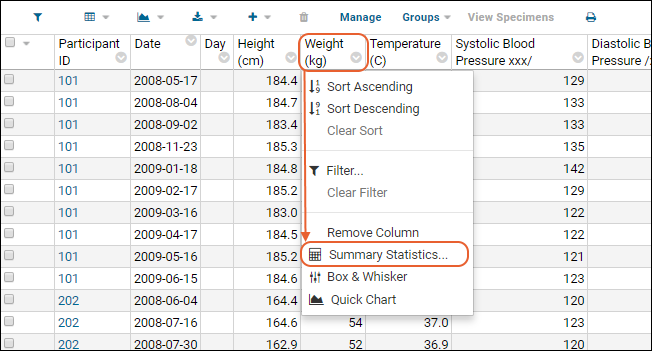

- Statistics and Queries: Add summary statistics to your data grids and write SQL queries within the UI. Learn more here: Column Summary Statistics and SQL Queries.

- Visualizations: Create easy charts and plots backed by live data. Learn more here: Visualizations.

- Reporting: Generate reports and query snapshots of your data. Use R, JavaScript, and LabKey plotting APIs to present your data in many ways. Learn more here: Reports and Charts.

To tour some example content and try these tools, navigate to the Example Project > Laboratory Data folder of your Trial Server in one browser window, and open the topic Exploring Laboratory Data in another.

Tools for Research Studies

Study folders organize research data about participants over time. There are many different ways to configure and use LabKey studies. Learn more here: Studies.

- Study Schedules and Navigation: Dashboards that show at a glance what work is completed and what is scheduled help coordinators manage research data collection. Learn more here: Study Navigation.

- Participant and Date Alignment: By aligning all of your data based on the participant and date information, you can integrate and compare otherwise disparate test results. Explore the breadth of data for a single study subject, or view trends across cohorts of similar subjects. Learn more here: Study User Guide.

- Publishing and Sharing: Select who should see which parts of your study data and analysis. Choose how to publish results, including many options for obscuring and protecting PHI (protected health information) and aggregating results. Learn more here: Study Administrator Guide and Publish a Study: Protected Health Information / PHI.
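As a rough illustration of participant and date alignment, the Python sketch below joins two hypothetical datasets on the pair (participant ID, date) so that otherwise separate results line up in one row. The field names and values are made up for the example, and this simplified left join is not how LabKey implements study alignment.

```python
from datetime import date

# Hypothetical example datasets; field names and values are illustrative only.
blood_tests = [
    {"participant": "101", "date": date(2024, 1, 5), "wbc": 6.1},
    {"participant": "102", "date": date(2024, 1, 5), "wbc": 7.4},
]
vitals = [
    {"participant": "101", "date": date(2024, 1, 5), "weight_kg": 70.2},
    {"participant": "102", "date": date(2024, 2, 1), "weight_kg": 81.0},
]


def align(left, right):
    """Left-join `right` onto `left` using (participant, date) as the key.

    Assumes at most one `right` row per key; a later duplicate would
    overwrite an earlier one in the index.
    """
    index = {(r["participant"], r["date"]): r for r in right}
    aligned = []
    for row in left:
        merged = dict(row)  # copy so the source rows are not modified
        match = index.get((row["participant"], row["date"]))
        if match is not None:
            merged.update(match)
        aligned.append(merged)
    return aligned


for row in align(blood_tests, vitals):
    print(row)
```

Participant 102's blood test has no vitals record on the same date, so its aligned row keeps only the blood test fields.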

To learn more and try these tools, navigate to the Example Project > Research Study folder of your Trial Server in one browser window, and open the topic Exploring LabKey Studies in another.

What's Next?

Explore the example content on your Trial Server using one of these walkthroughs. Find out more about what a full installation of LabKey Server can do by reading the documentation.

Exploring LabKey Collaboration

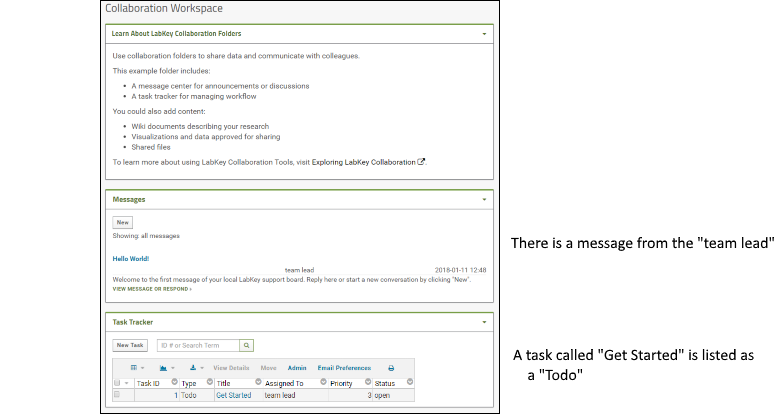

Tour

The "Collaboration Workspace" folder in the "Example Project" shows three web parts on its main dashboard. Web parts are user interface panels and can be customized in many ways.

- 1. Learn About LabKey Collaboration Folders: a panel of descriptive information (not part of a default Collaboration folder).

- 2. Messages: shows conversations or announcements in a messages web part.

- 3. Task Tracker: LabKey's issue tracker tools can be tailored to a variety of uses.

To help show some of the collaborative options, this project also includes a few sample users with different roles. You can see a message from the "team lead" and there is also a task called "Get Started" listed as a "Todo".


Try It Now

Messages

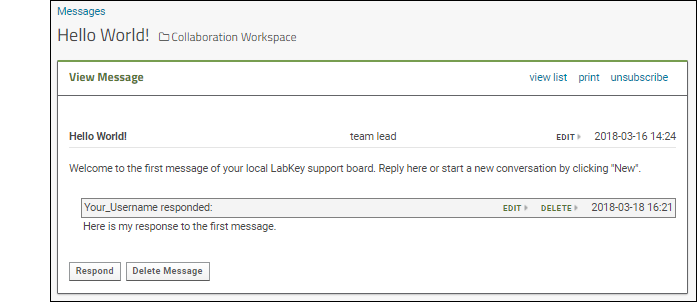

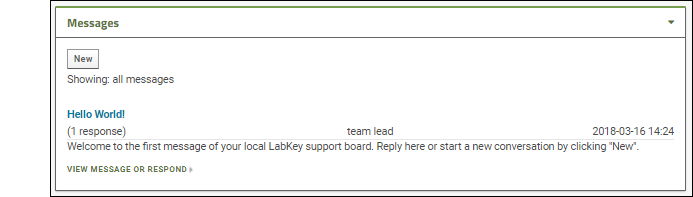

A message board is a basic tool for communication; LabKey Message Boards can be customized for many use cases, from announcements to developer support and discussion.

- Notice the first message, "Hello World".

- Click View Message or Respond below it.

- Click Respond.

- Enter any text you like in the Body field. If you also change the Title, your response will have a new title but the main message thread will retain the existing title.

- Notice that you can select other options for how your body text is rendered. Options: Plain Text, HTML, Markdown, or Wiki syntax.

- Notice you could attach a file if you like.

- Click Submit.

- You are now viewing the message thread. Notice the links to edit or delete. Since you are an administrator on this server, you can edit messages others wrote, which would not be true for most users.

- You may have also received an email when you posted your response. By default, you are subscribed to any messages you create or comment on. Click unsubscribe to see how you would reset your preferences if you don't want to receive these emails.

- Click the Collaboration Workspace link near the top of the page to return to the main folder dashboard. These links are shown any time you are viewing a page within a folder.

- Notice that on the main folder dashboard, the message board does not show the text of your reply, but just the note "(1 response)".

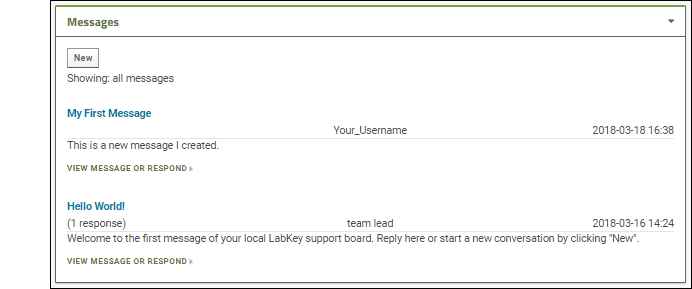

- Click New to create a new message.

- You will see the same input fields as when replying; enter some text and click Submit.

- When you return to the main folder dashboard, you will see your new message.

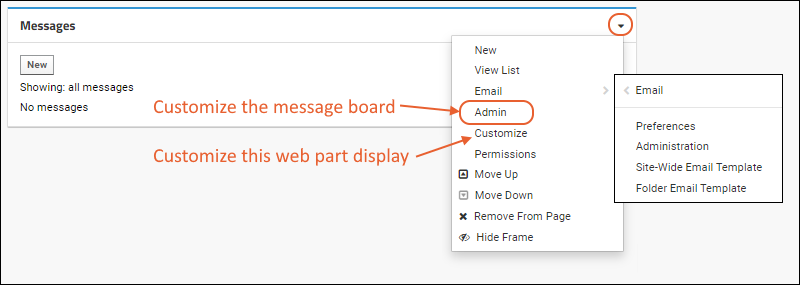

- An administrator can control many display aspects of message boards, including the level of detail, order of messages, and even what "Messages" are called.

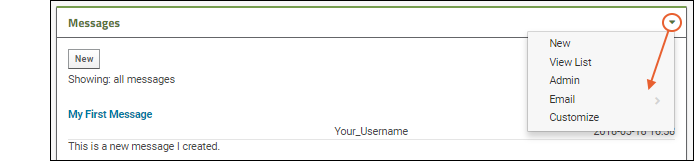

- The (triangle) menu in the upper right corner includes several options for customizing.

- New: Create a new message.

- View List: See the message board in list format.

- Admin: Change things like display order, what messages are called, security, and what options users have when adding messages.

- Email: Control when and how email notifications are sent about messages on this board.

- Customize: Select whether this web part shows the "full" information about the message, as shown in our example, or just a simple preview.
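The thread behavior described in these steps (a response can carry its own title while the thread keeps the original one, the dashboard shows only a response count, and posting subscribes you to email) can be modeled in a few lines. This Python sketch is a hypothetical model for illustration only; the class and method names are not part of any LabKey API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Post:
    author: str
    body: str
    title: Optional[str] = None  # a response may use its own title


@dataclass
class Thread:
    title: str  # fixed when the thread is started
    posts: List[Post] = field(default_factory=list)
    subscribers: Set[str] = field(default_factory=set)

    def add(self, post: Post) -> None:
        """Append a post; the thread title is left unchanged."""
        self.posts.append(post)
        # By default, anyone who posts or responds is subscribed.
        self.subscribers.add(post.author)

    def unsubscribe(self, user: str) -> None:
        self.subscribers.discard(user)

    def summary(self) -> str:
        """Dashboard-style line: the title and a response count, no reply text."""
        responses = len(self.posts) - 1
        noun = "response" if responses == 1 else "responses"
        return f"{self.title} ({responses} {noun})"


def start_thread(author: str, title: str, body: str) -> Thread:
    thread = Thread(title)
    thread.add(Post(author, body, title))
    return thread


thread = start_thread("team_lead", "Hello World", "Welcome to the workspace.")
thread.add(Post("you", "Thanks!", title="A different title"))
print(thread.summary())  # Hello World (1 response)
```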

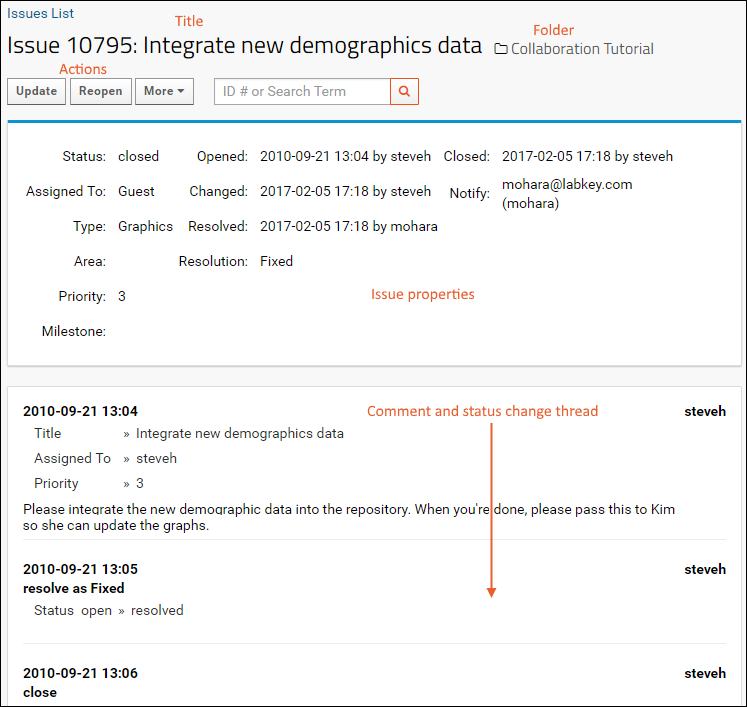

Task Tracker

LabKey provides flexible tools for tracking tasks with multiple steps done by various team members. These tools are generically referred to as "issue trackers"; the example project includes a simple "Task Tracker".

The basic life cycle of any task or issue moves through three states:

- Open: someone decides something needs to be done; various steps can happen while it is open, including prioritization and reassignment.

- Resolved: someone completes the work.

- Closed: the original requestor confirms the solution is correct.
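The life cycle above behaves like a small state machine. The following Python sketch is a hypothetical model, not LabKey's issue tracker code: the `Task` class and its method names are illustrative, and it folds in two defaults described in the steps below (a resolved task is reassigned to whoever opened it, and the resolution defaults to "Fixed").

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Allowed status changes in the simple Open -> Resolved -> Closed life cycle.
TRANSITIONS = {
    "Open": {"Resolved"},
    "Resolved": {"Closed"},
    "Closed": set(),
}


@dataclass
class Task:
    title: str
    opened_by: str
    assigned_to: str
    status: str = "Open"  # every new task starts as Open
    resolution: Optional[str] = None
    history: List[Tuple[str, str, str]] = field(default_factory=list)

    def _move(self, new_status: str, actor: str) -> None:
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        self.history.append((self.status, new_status, actor))
        self.status = new_status

    def update(self, actor: str, assigned_to: str) -> None:
        """Reassign without changing status (the Update action)."""
        self.history.append((self.status, self.status, actor))
        self.assigned_to = assigned_to

    def resolve(self, actor: str, resolution: str = "Fixed") -> None:
        self._move("Resolved", actor)
        self.resolution = resolution
        # Hand the task back to whoever opened it so they can confirm.
        self.assigned_to = self.opened_by

    def close(self, actor: str) -> None:
        self._move("Closed", actor)


task = Task("Order reagents", opened_by="you", assigned_to="lab_technician")
task.resolve("lab_technician")
print(task.status, task.assigned_to, task.resolution)  # Resolved you Fixed
task.close("you")
print(task.status)  # Closed
```

Keeping the allowed transitions in one table makes an invalid jump, such as closing a task that was never resolved, fail loudly instead of silently changing the status.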

- Navigate to the Collaboration Workspace.

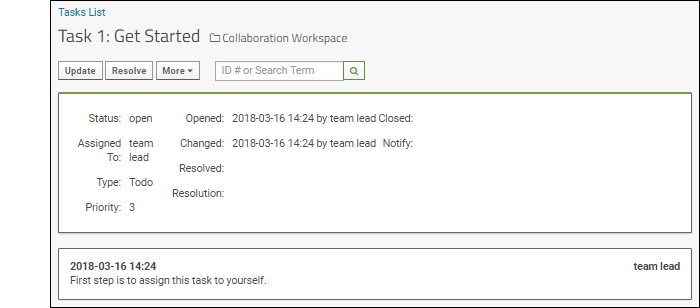

- Scroll down to the Task Tracker and click the title of the task, Get Started, to open the detail view.

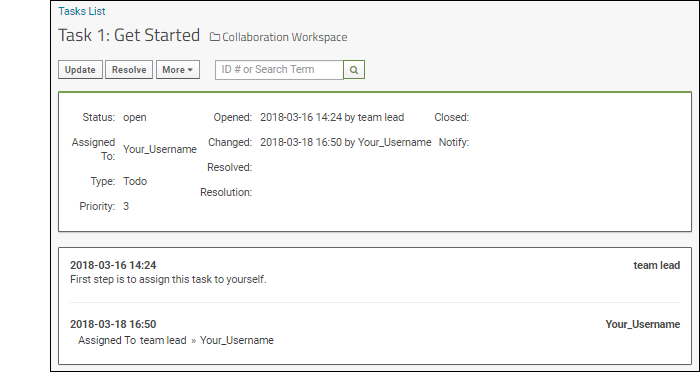

- The status, assignment, priority, and other information are shown here. You can see that the team leader opened this task and added the first step: assign this task to yourself.

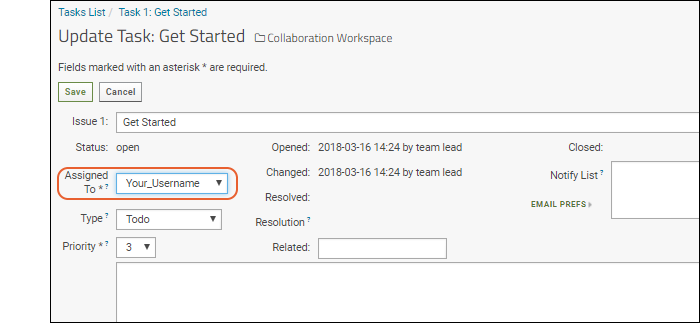

- Click Update. Use Update when you are not resolving the issue, merely changing the assignment or adding extra information.

- Select your username from the Assigned To pulldown.

- You could also change other information and provide an optional comment about your update.

- Click Save.

- Note: You may receive an email when you do this. Email preferences are configurable. Learn more here.

- The task is now assigned to you. You can see the sequence of changes growing below the current issue properties.

- Click Collaboration Workspace to return to the main page.

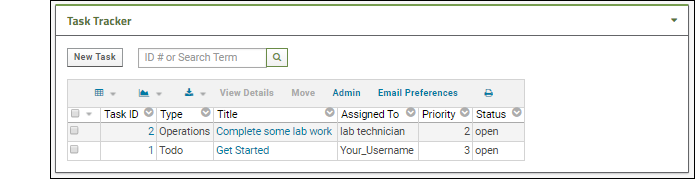

- Notice the task is assigned to you now.

- Click New Task to create a new task.

- Enter your choice of title. Notice that the default status "Open" cannot be changed, but you can change the priority and enter other information. Note that the priority field is required.

- Assign the task to the "lab technician" user.

- Click Save to open the new issue.

- When you return to the task list on the Collaboration Workspace page, you will see it listed as issue 2.

To see how the resolution process works, you can use impersonation, an administrator feature that lets you take on another user's identity. Learn more here.
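Impersonation is possible because, in LabKey's group- and role-based security model, permissions are checked dynamically against the identity currently in use. The following Python sketch is a simplified, hypothetical model of that idea: the role names, permission sets, group names, and `Session` class are illustrative assumptions and much simpler than LabKey's actual role definitions.

```python
# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "Reader": {"read"},
    "Editor": {"read", "insert", "update"},
    "FolderAdmin": {"read", "insert", "update", "admin"},
}

# Hypothetical group membership and role assignments for one folder.
GROUPS = {"admins": {"you"}, "lab": {"lab_technician"}}
FOLDER_ROLES = {
    ("group", "admins"): {"FolderAdmin"},
    ("group", "lab"): {"Editor"},
}


def roles_for(user: str) -> set:
    """Roles granted to the user directly or through any group they belong to."""
    roles = set(FOLDER_ROLES.get(("user", user), set()))
    for group, members in GROUPS.items():
        if user in members:
            roles |= FOLDER_ROLES.get(("group", group), set())
    return roles


def can(user: str, permission: str) -> bool:
    # Recomputed from current group membership on every call (nothing cached).
    return any(permission in ROLE_PERMISSIONS[role] for role in roles_for(user))


class Session:
    def __init__(self, user: str) -> None:
        self.user = user
        self.impersonating = None  # name of the impersonated user, if any

    @property
    def effective_user(self) -> str:
        """The identity that permission checks are run against."""
        return self.impersonating or self.user

    def impersonate(self, other: str) -> None:
        if not can(self.user, "admin"):
            raise PermissionError("only administrators can impersonate")
        self.impersonating = other

    def stop_impersonating(self) -> None:
        self.impersonating = None


session = Session("you")
print(can(session.effective_user, "admin"))  # True
session.impersonate("lab_technician")
print(can(session.effective_user, "admin"))  # False
session.stop_impersonating()
```

This mirrors what you see in the walkthrough: while impersonating the lab technician, administrator options disappear because every check runs against the technician's roles.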


- Select (Your Username) > Impersonate > User.

- Choose "lab_technician" and click Impersonate. You are now seeing the page as the lab technician would. For example, you no longer have access to administrator options on the messages web part.

- Click the title of the new task you assigned to the lab technician to open it.

- Click Resolve.

- Notice that by default, the resolved task will be assigned to the original user who opened it - in this case you!

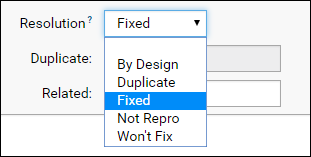

- The default value for the Resolution field is "Fixed", but you can select other options if appropriate.

- Enter a comment saying you've completed the task, then click Save.

- Click Stop Impersonating.

- Open the task again and close it as yourself.

- Enter a few more tasks to have more data for viewing.

- When finished, return to the Collaboration Workspace main page.

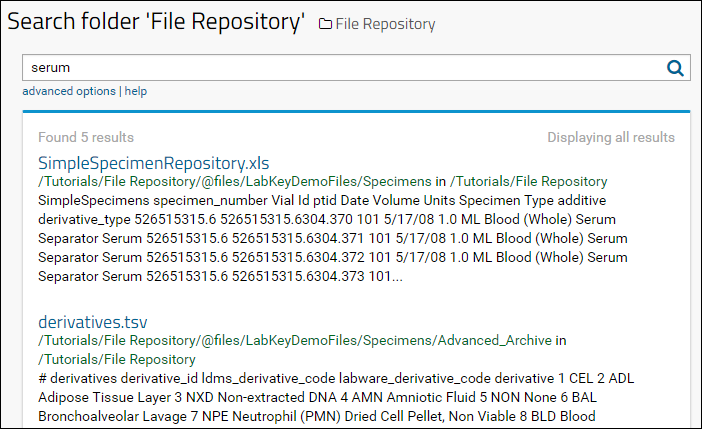

- Search the contents by ID number or search term using the search box.

- Sort and filter the list of tasks using the header menu for any column. Learn more here.

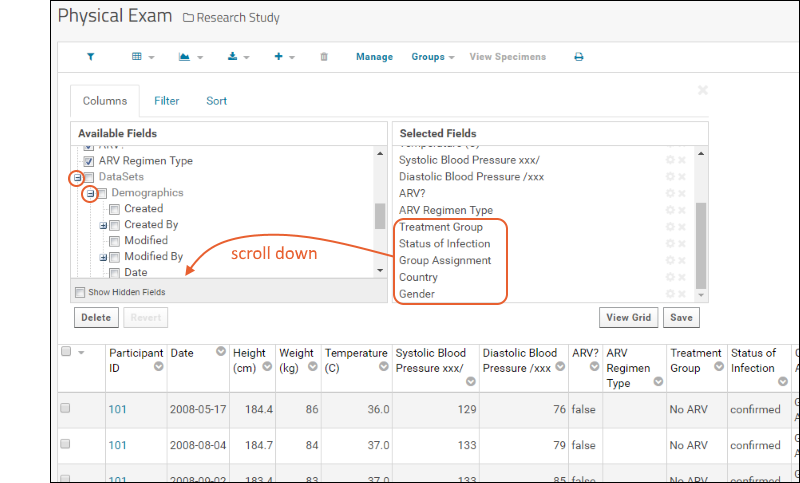

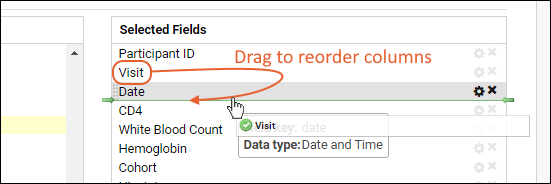

- Create custom grid views (ways to view tasks), such as "All assigned to me" or "All priority 1 issues for the current milestone". Use (Grid Views) > Customize Grid and add filters and sorts here. Learn more here.

- You could also customize the grid to expose other columns if helpful, such as the name of the user who originally opened the issue. Learn more here.

What Else Can I Do?

Add New Web Parts

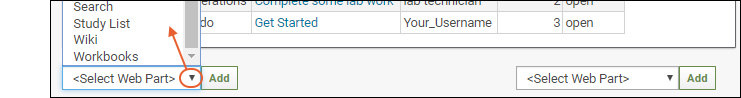

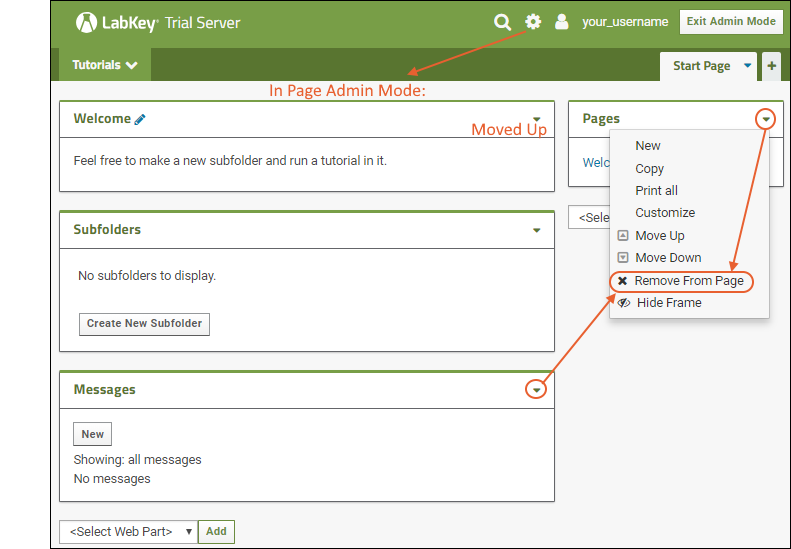

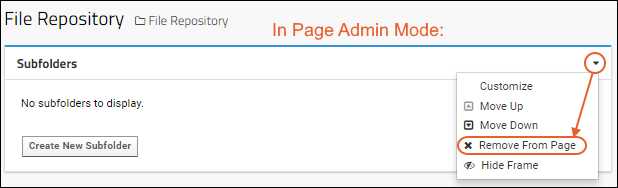

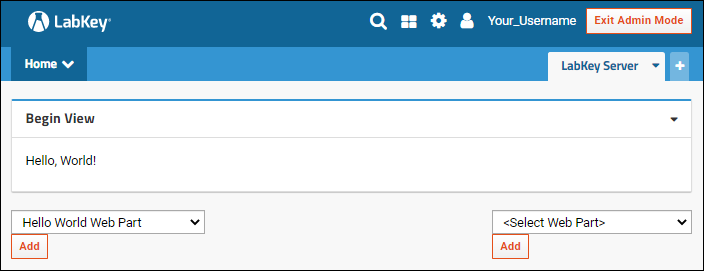

Web parts are panels of user interface that display content to your users. As an administrator, you can customize what is shown on the page by using page admin mode. To add a new web part to any folder page:

- Select (Admin) > Page Admin Mode. Note that if you do not see this option, make sure you are logged in as an administrator and not impersonating another user.

- Notice that <Select Web Part> pulldown menus appear at the bottom of the page. There is a wider "main" column on the left and a narrow column on the right; each column supports a different set of web parts.

- Note: If both pulldown menus are stacked on the right, make your browser slightly wider to show them on separate sides.

- Select the type of web part you want to create on the desired side. For example, to create a main panel wiki like the welcome panel shown in the Collaboration Workspace folder, select Wiki on the left.

- Click Add.

- The new web part will be added at the bottom of the column. While you are in Page Admin Mode, you can reposition it on the page using the (triangle) menu in the web part header. Move up or down as desired.

- Click Exit Admin Mode in the upper right.

Add a Wiki

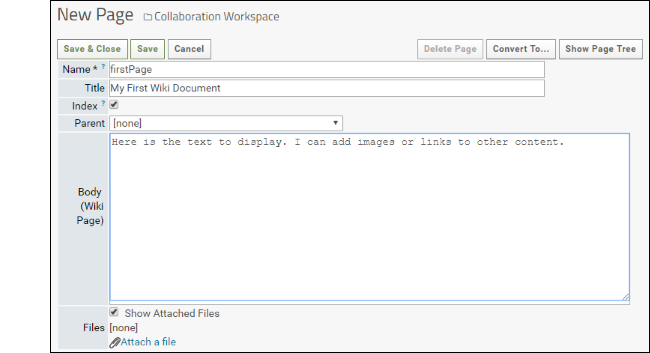

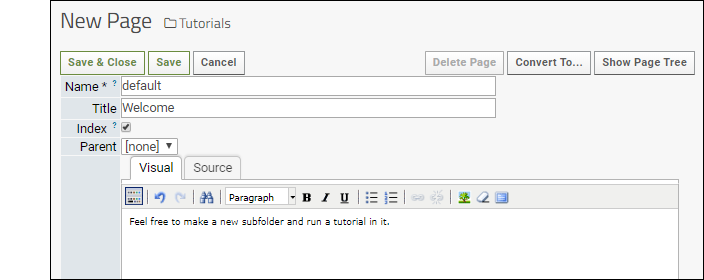

Wiki documents provide an easy way to display content. They can contain any text or visual information and can be formatted in HTML, Markdown, or Wiki syntax; for plain text, use the "Wiki" format without any formatting syntax. To create our first wiki, we use a wiki web part.

- Add a Wiki web part on the left side of the page. If you followed the instructions above, you already did so.

- Click Create a new wiki page. Note that if a page named "default" already exists in the folder, the new web part will display it. In this case, create a new one by selecting New from the (triangle) menu in the header of the web part.

- The Name must be unique in the folder.

- The Title is displayed at the top of the page.

- Choosing a Parent page lets you organize many wikis into a hierarchy. The table of contents on the right of the page you are reading now shows many examples.

- Enter the Body of the wiki. To change what formatting is used, use the Convert to... button in the upper right. A short guide to the formatting you select is shown at the bottom of the edit page.

- Notice that you can attach files and elect whether to show them listed at the bottom of the wiki page.

- Click Save & Close.

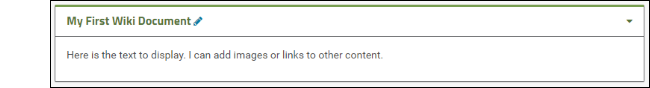

- Scroll down to see your new wiki web part.

- To reopen for editing, click the (pencil) icon.
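Because each page name must be unique in the folder and each page can name a parent, the pages form a tree from which a table of contents can be generated. The Python sketch below illustrates that structure with a hypothetical `Wiki` class and made-up page names; it is not LabKey's wiki implementation.

```python
from typing import Dict, List, Optional


class Wiki:
    """Wiki pages in one folder, keyed by their unique names."""

    def __init__(self) -> None:
        self.pages: Dict[str, dict] = {}

    def create(self, name: str, title: str, body: str = "",
               parent: Optional[str] = None) -> None:
        if name in self.pages:
            raise ValueError(f"a page named {name!r} already exists in this folder")
        if parent is not None and parent not in self.pages:
            raise KeyError(f"unknown parent page {parent!r}")
        self.pages[name] = {"title": title, "body": body, "parent": parent}

    def toc(self, parent: Optional[str] = None, depth: int = 0) -> List[str]:
        """Titles in creation order, each child indented under its parent."""
        lines = []
        for name, page in self.pages.items():
            if page["parent"] == parent:
                lines.append("  " * depth + page["title"])
                lines.extend(self.toc(name, depth + 1))
        return lines


wiki = Wiki()
wiki.create("default", "Welcome")
wiki.create("protocols", "Lab Protocols", parent="default")
wiki.create("elisa", "ELISA Protocol", parent="protocols")
print("\n".join(wiki.toc()))
```

Requiring the parent to exist before a child is created keeps the hierarchy free of cycles, so the recursive table-of-contents walk always terminates.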

Add a List

A list is a simple table of data. For example, you might store a list of labs you work with.

- Select (Admin) > Manage Lists.

- You will see a number of preexisting lists related to the task tracker.

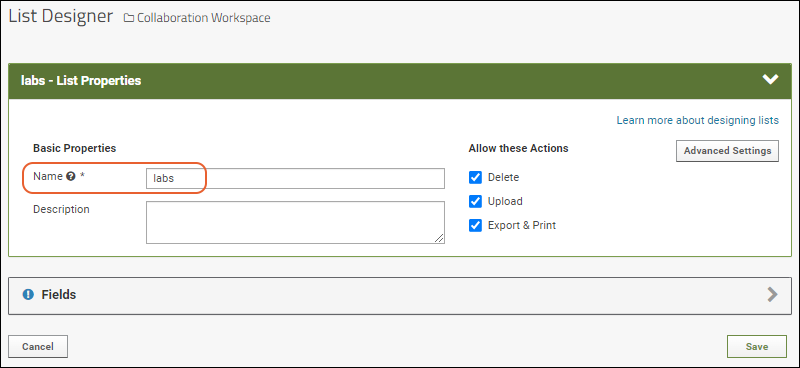

- Click Create New List.

- Name: Enter a short name (such as "labs"). It must be unique in the folder.

- Review the list properties available; for this first example, leave them unchanged.

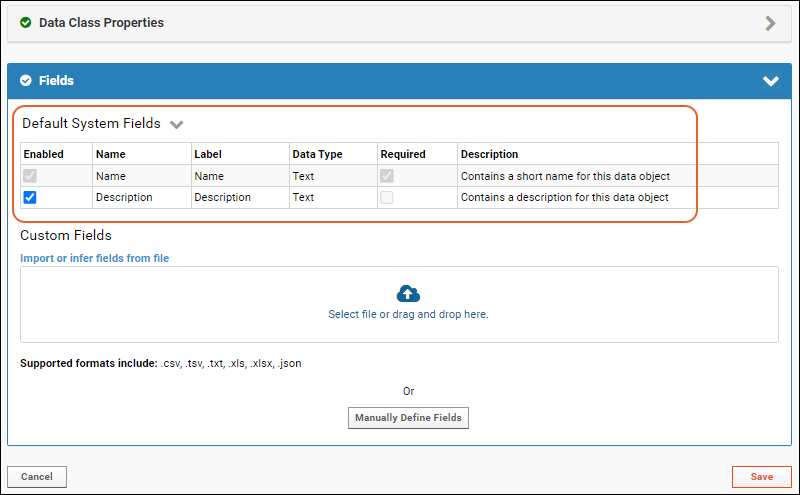

- Click the Fields section header to open it.

- Click Manually Define Fields.

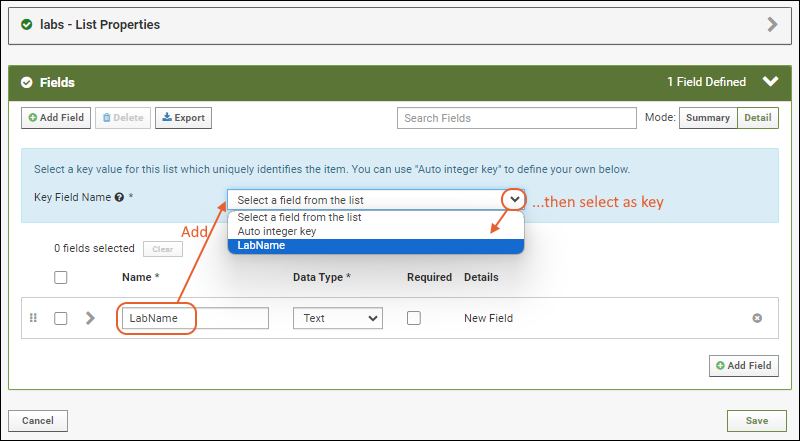

- In the Name box of the first field, enter "LabName" (no spaces) to create a key column for our list. Leave the data type "Text".

- After doing this, you can set the Key Field Name in the blue panel. Select LabName from the dropdown (the field you just added).

- Use the icon to expand the field and see the options available. Even more can be found by clicking Advanced Settings.

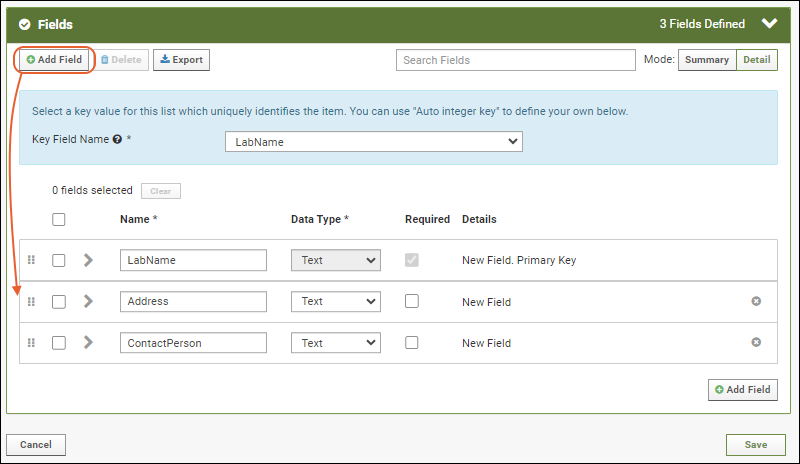

- Click Add Field and enter the name of each additional column you want in your list. In this example, we have added an address and a contact person, both text fields. Notice that "Primary Key" is specified in the field details for LabName; this field cannot be deleted and you cannot change its data type.

- Click Save to create the "labs" list. You will see it in the grid of "Available Lists".

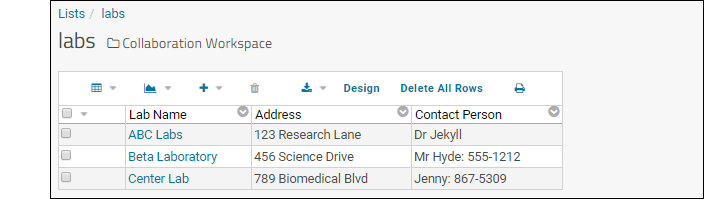

- Click the list name ("labs") in the grid. There is no data to show.

- Select (Insert data) > Insert new row.

- Enter any values you like.

- Click Submit.

- Repeat the process to add a few more rows.

- Click the Collaboration Workspace link to return to the main folder page.

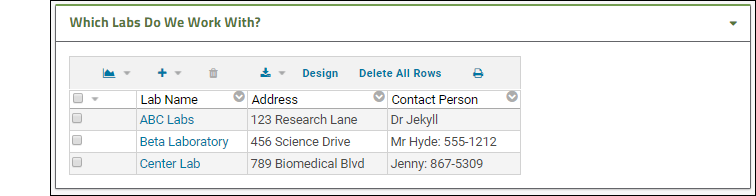

- Add a List - Single web part on the left side of the page.

- Give it any title you like, and select the new list you just created.

- Click Submit.

Now your collaboration workspace shows quick contact information on the front page.
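The key field gives each list row a unique identity, which is why it cannot be deleted. A minimal Python sketch of a keyed list follows; the `KeyedList` class is hypothetical (not part of any LabKey API), the "Address" and "ContactPerson" field names are assumed, and the lab names are made up. It shows that a second row with an existing LabName is rejected.

```python
from typing import Dict, List, Optional


class KeyedList:
    """A simple table whose rows are identified by a unique key field."""

    def __init__(self, name: str, key_field: str, other_fields: List[str]) -> None:
        self.name = name
        self.key_field = key_field
        self.fields = [key_field, *other_fields]
        self.rows: Dict[str, Dict[str, Optional[str]]] = {}  # key value -> row

    def insert(self, **values: str) -> None:
        unknown = set(values) - set(self.fields)
        if unknown:
            raise KeyError(f"unknown fields: {sorted(unknown)}")
        key = values.get(self.key_field)
        if not key:
            raise ValueError(f"{self.key_field} is required")
        if key in self.rows:
            raise ValueError(f"duplicate {self.key_field}: {key!r}")
        # Fields left blank are stored as None.
        self.rows[key] = {f: values.get(f) for f in self.fields}


labs = KeyedList("labs", "LabName", ["Address", "ContactPerson"])
labs.insert(LabName="Hill Lab", Address="12 Main St", ContactPerson="A. Hill")
labs.insert(LabName="Ruiz Lab", ContactPerson="B. Ruiz")
print(sorted(labs.rows))  # ['Hill Lab', 'Ruiz Lab']
```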

Add a File Repository

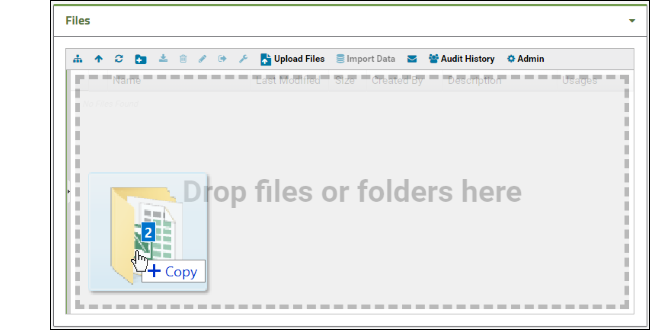

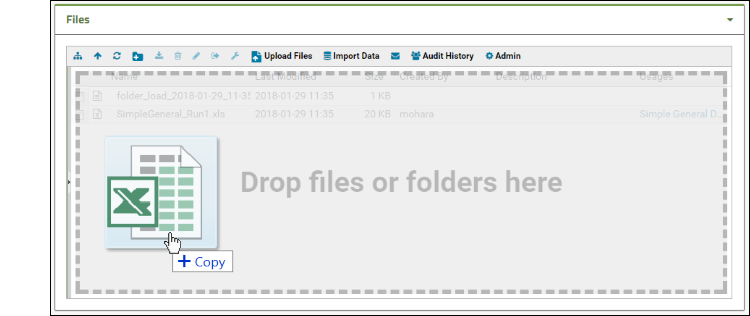

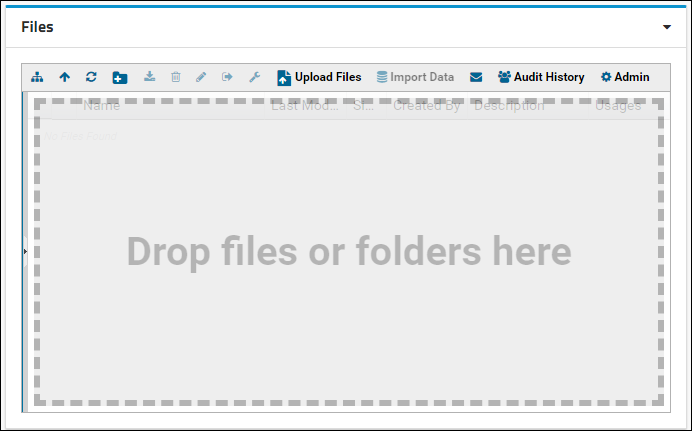

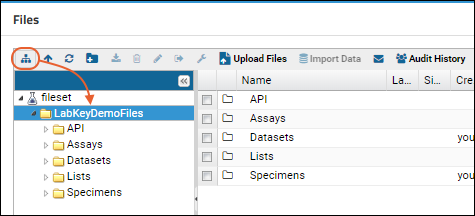

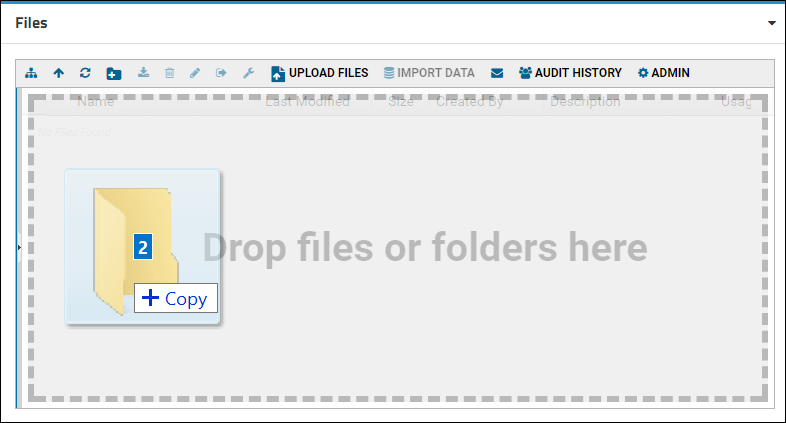

- Add a Files web part on the left side of the page.

- Drag and drop files and folders into the window to upload them.

- Each file can then be downloaded by other users of the collaboration workspace.

More Tutorials

Other tutorials using "Collaboration" folders can also be run on your LabKey Trial Server. To avoid overwriting this Example Project content with new tutorial content, you could create a new "Tutorials" project to work in. See Exploring Project Creation for a walkthrough.

Explore More on your LabKey Trial Server

Exploring Laboratory Data

Tour

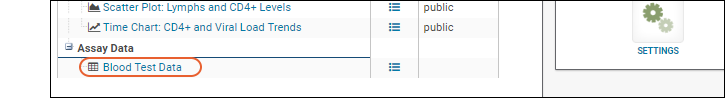

The "Laboratory Data" folder is an Assay folder. You'll see four web parts in our example:

- 1. Learn About LabKey Assay Folders: a panel of descriptive information (not part of a default Assay folder).

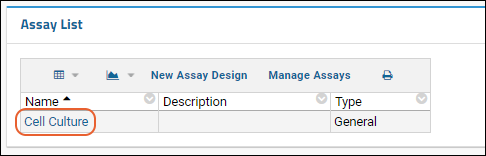

- 2. Assay List: a list of assays defined in the folder. Here we have "Blood Test Data."

- 3. Blood Test Data Results: A query web part showing a grid of data.

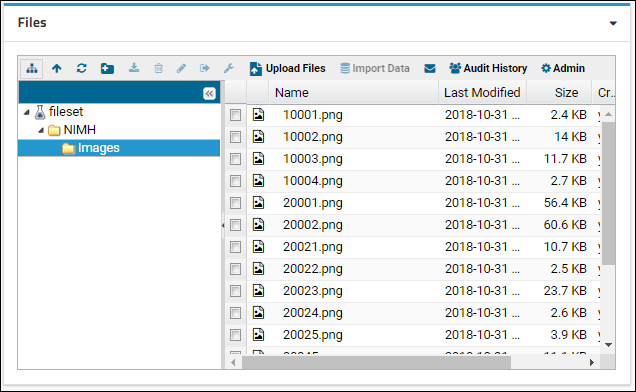

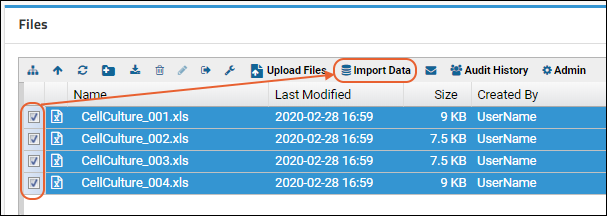

- 4. Files: a file repository where you can browse files in this container or upload new ones.

Try It Now

This section helps you try some key features of working with laboratory data.

- Assay List: Browse and use assay designs for data and metadata

- Understand Assay Designs: Review how assay designs are defined and customized

- Blood Test Data Results: Reviewing and analyzing data in grids

- Files: Uploading and importing files

Assay List

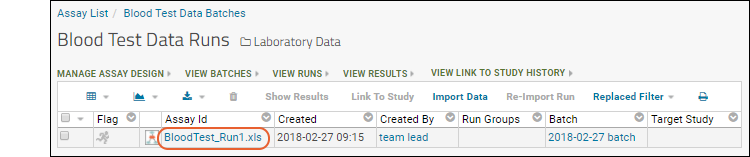

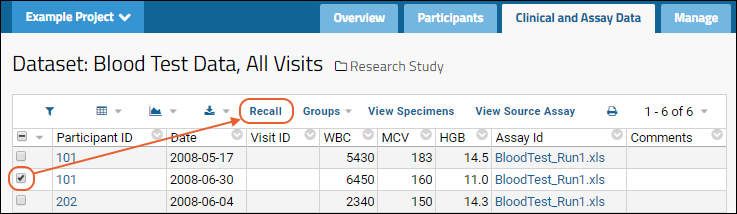

Using an Assay List web part, you can browse existing assay designs and, as an administrator, add new ones. An assay design tells the server how to interpret uploaded data. Click the name of the design in this web part to see the run(s) that have been uploaded.

- Click the name Blood Test Data in the Assay List to see the "Runs" imported using it.

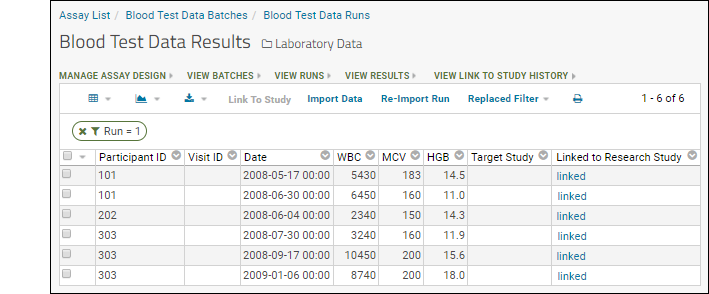

- In this case, a single run (spreadsheet) "BloodTest_Run1.xls" has been imported. Click the Assay ID (in this case the file name) to see the results.

To see the upload process, we can simulate importing the existing run. We will cancel the import before any reimporting actually happens.

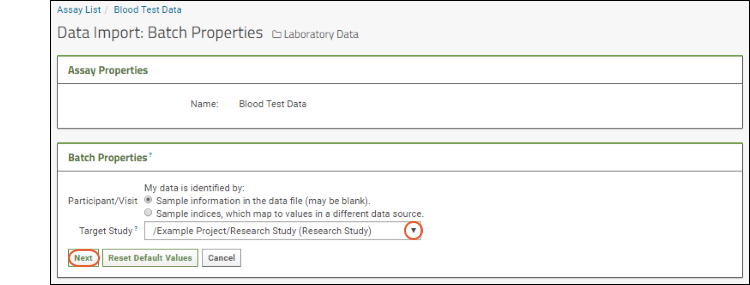

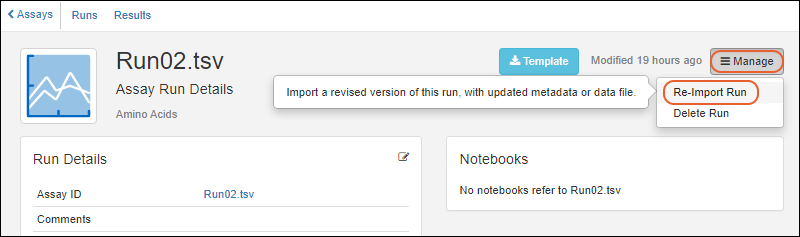

- Click Re-Import Run above the grid.

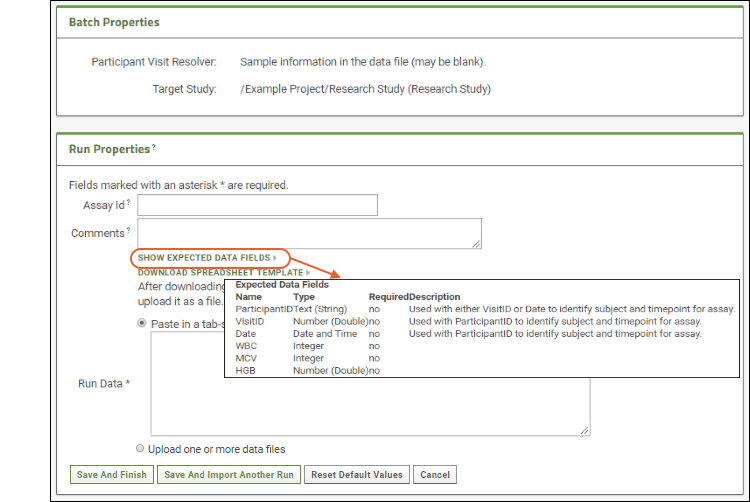

- The first page shows:

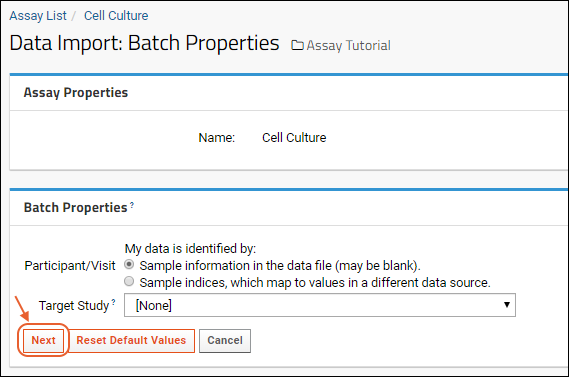

- Assay Properties: These are fixed properties, like the assay design name, that are read only for all imports using this design.

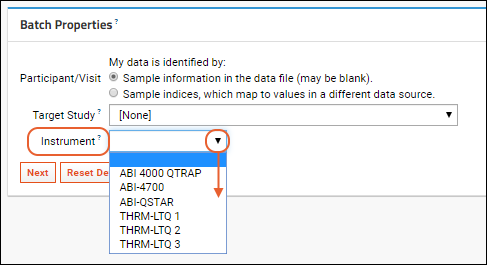

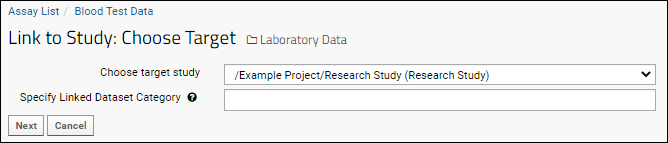

- Batch Properties: These properties will apply to a set of files uploaded together. For example, here they include specifying how the data is identified (the Participant/Visit setting) and selecting a target study to use if any data is to be copied. Select /Example Project/Research Study (Research Study).

- Click Next.

- On the next page, notice the batch properties are now read only.

- Below them are Run Properties to specify; these could be entered differently for each run in the assay, such as the "Assay ID". If not provided, the name of the file is used, as in our example.

- Here you also provide the data from the assay run, either by uploading a data file (or reusing the one we already uploaded) or entering your own new data.

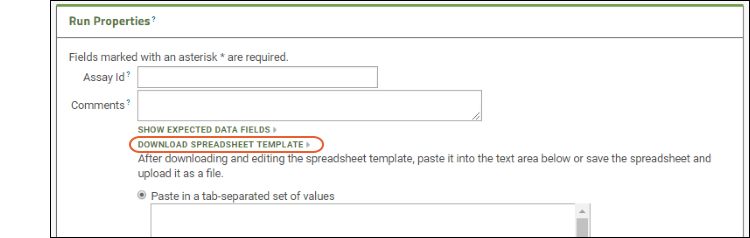

- Click Show Expected Data Fields. You will see the list of fields expected to be in the spreadsheet. When you design the assay, you specify the name, type, and other properties of expected columns.

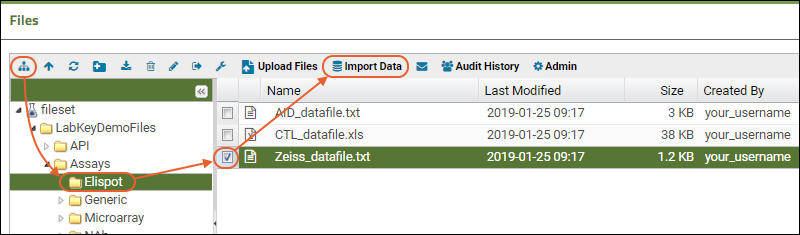

- Click Download Spreadsheet Template to generate a blank template spreadsheet. It will be named "data_<datetimestamp>.xls". You could open and populate it in Excel, then upload it to this server as a new run. Save this template file and use it when you get to the Files section below.

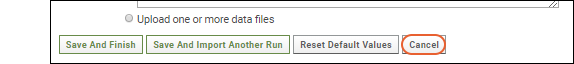

- Since we are just reviewing the process right now, click Cancel after reviewing this page.

Understand Assay Designs

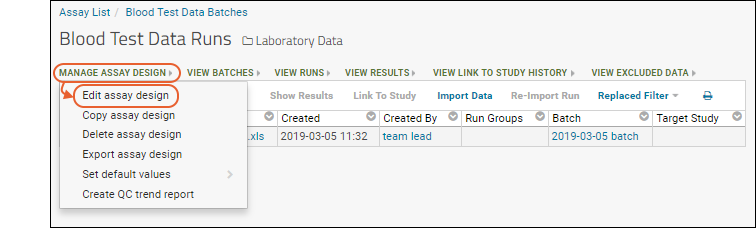

Next, review what the assay design itself looks like. You need to be an administrator to perform this step.

- From the Blood Test Data Runs page, select Manage Assay Design > Edit assay design to open it.

The sections correspond to how a user will set properties as they import a run. Click each section heading to open it.


- Assay Properties: Values that do not vary per run or batch.

- Batch Fields: Values set once for each batch of runs.

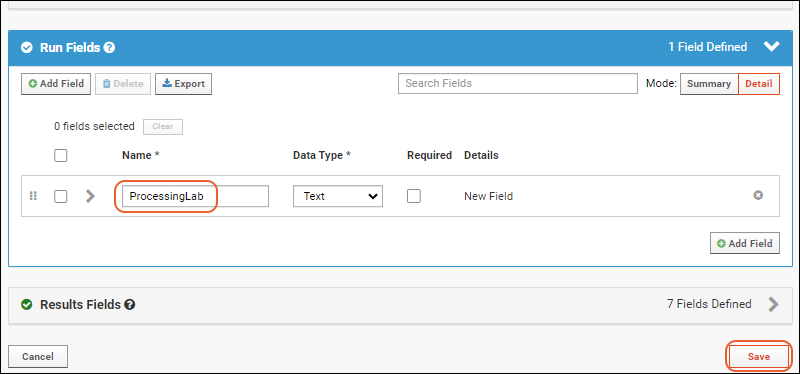

- Run Fields: Values set per run; in this case none are defined, but as you saw when importing the file, some built-in properties like "Assay ID" are defined per run.

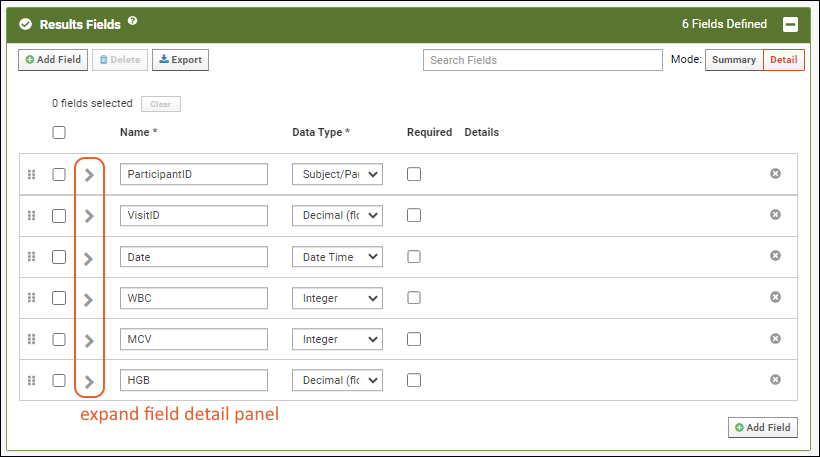

- Results Fields: This is the heart of the assay design and where you would identify what columns (fields) are expected in the spreadsheet, what their data type is and whether they are required.

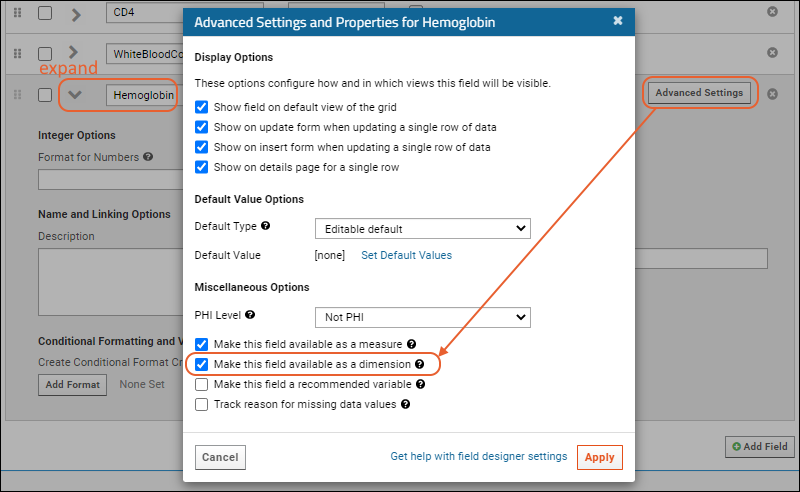

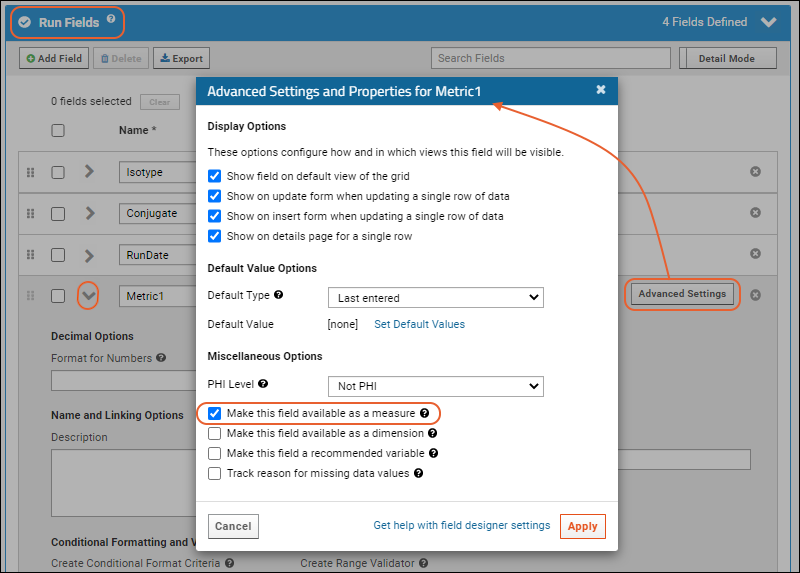

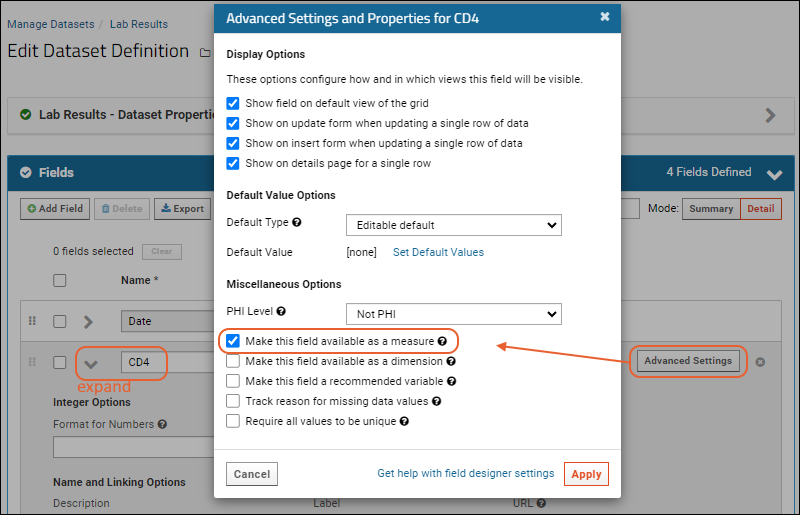

- Click the expand icon for a row to see or set other properties of each column. With this panel open, click Advanced Settings for even more settings and properties, including whether a value can be used as a "measure" in reporting, as shown here for the WBC field. Learn more about using the field editor in this topic: Field Editor.

- Review the contents of this page, but make no changes and click Cancel.

- Return to the main folder page by clicking the Laboratory Data link near the top of the page.
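The Results Fields act as a schema that each uploaded row is checked against. The Python sketch below is a simplified, hypothetical illustration of that idea, not LabKey's import code: it defines expected fields with a name, type, and required flag, produces the header row a blank template would start with, and validates rows. The field list (ParticipantId, WBC, MCV) is an assumption loosely based on columns mentioned in this walkthrough; falling back to the file name for the Assay ID follows the Run Properties behavior described earlier.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class FieldDef:
    """One expected column in the Results Fields section."""
    name: str
    py_type: type
    required: bool = False


# Hypothetical results fields loosely based on the columns in this walkthrough.
RESULTS_FIELDS = [
    FieldDef("ParticipantId", str, required=True),
    FieldDef("WBC", float),
    FieldDef("MCV", float),
]


def template_header(fields: List[FieldDef]) -> List[str]:
    """Column names a blank spreadsheet template would start with."""
    return [f.name for f in fields]


def validate_row(row: Dict[str, str], fields: List[FieldDef]) -> List[str]:
    """Return the problems found in one data row (an empty list means valid)."""
    problems = []
    for f in fields:
        value = row.get(f.name)
        if value in (None, ""):
            if f.required:
                problems.append(f"{f.name} is required")
            continue
        try:
            f.py_type(value)  # e.g. float("6.1"); raises ValueError for "high"
        except (TypeError, ValueError):
            problems.append(f"{f.name}: {value!r} is not {f.py_type.__name__}")
    return problems


def import_run(file_name: str, rows: List[Dict[str, str]],
               assay_id: Optional[str] = None) -> Tuple[str, Dict[int, List[str]]]:
    """Validate every row; the run's Assay ID defaults to the file name."""
    run_id = assay_id or Path(file_name).name
    errors = {}
    for i, row in enumerate(rows):
        problems = validate_row(row, RESULTS_FIELDS)
        if problems:
            errors[i] = problems
    return run_id, errors


run_id, errors = import_run(
    "BloodTest_Run1.xls",
    [
        {"ParticipantId": "101", "WBC": "6.1", "MCV": "88"},
        {"ParticipantId": "", "WBC": "high", "MCV": "90"},
    ],
)
print(run_id)  # BloodTest_Run1.xls
print(errors)  # {1: ['ParticipantId is required', "WBC: 'high' is not float"]}
```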

Blood Test Data Results

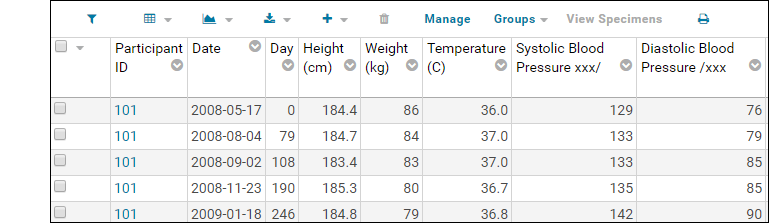

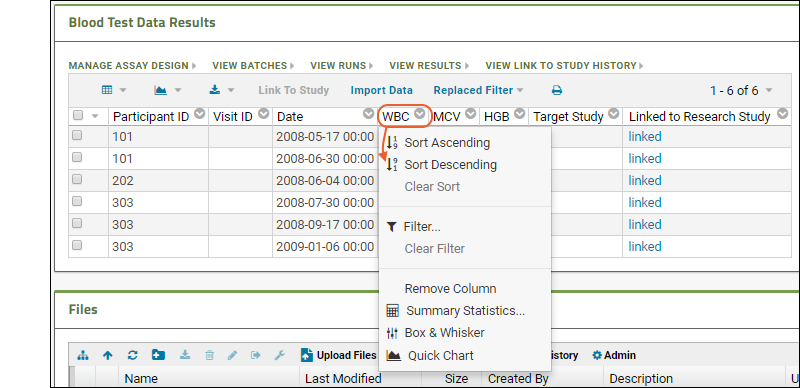

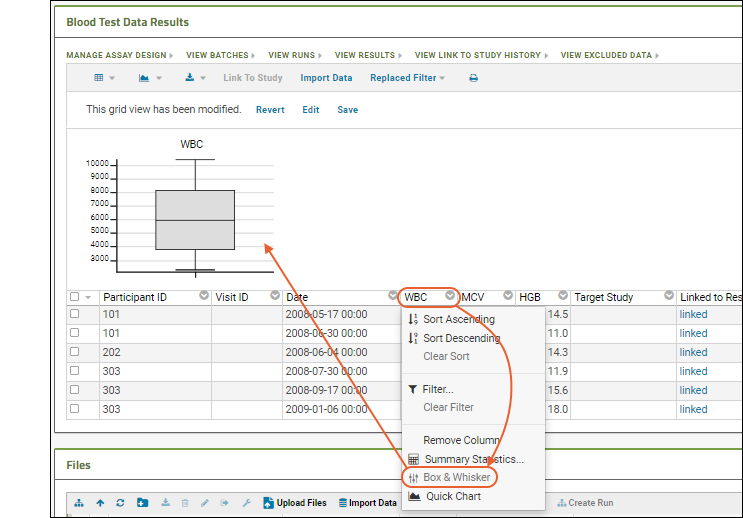

The assay results are displayed in a web part on the main page. Click the Laboratory Data link if you navigated away, then scroll down. Explore some general features of LabKey data grids using this web part:

Column Header Menus: Each column header has a menu of options to apply to the grid based on the values in that column.

Sort:

- Click the header of the WBC column and select Sort Descending.

- Notice the (sort) icon that appears in the column header.

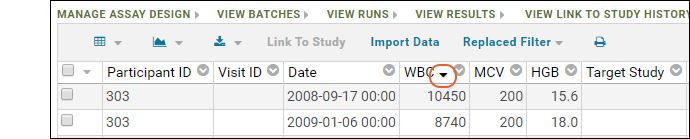

Filter:

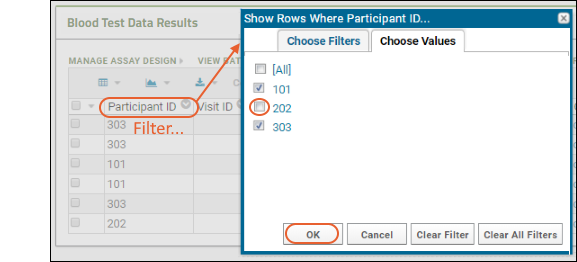

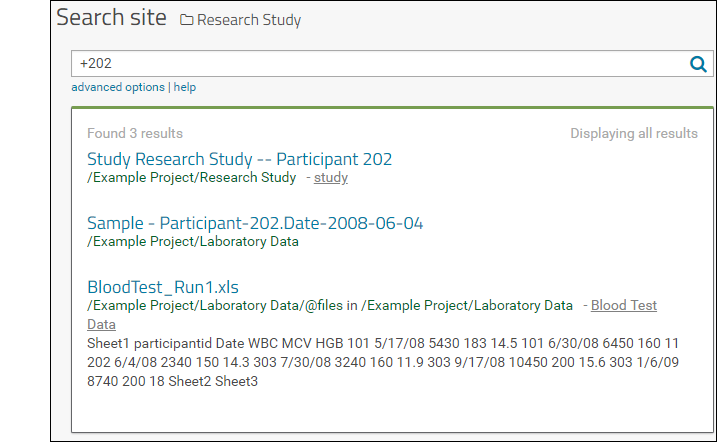

- Click the header of the Participant ID column and select Filter... to open a popup.

- Use the checkboxes to "Choose Values". For this example, uncheck the box for "202".

- The "Choose Values" option is only available when there are a limited number of distinct values.

- Switching to the "Choose Filters" tab in this popup would let you use filter expressions instead.

- Click OK to apply the filter.

- Notice the (filter) icon shown in the column header. There is also a new entry in the filter panel above the grid; you can clear this filter by clicking the remove icon on the "Participant <> 202" filter entry.

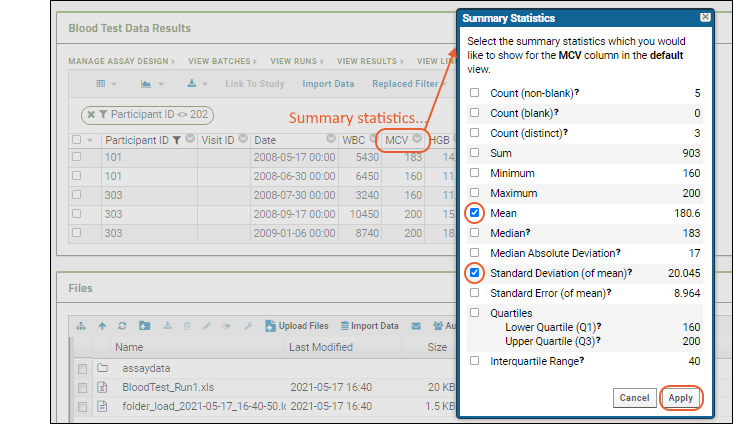

Summary Statistics:

- Click the header of the MCV column and select Summary Statistics... to open a popup.

- Select the statistics you would like to show (the values are previewed in the popup).

- Premium Feature: Many summary statistics shown are only available with premium editions of LabKey Server. Learn more here.

- In this case, Mean and Standard Deviation might be the most interesting. Click Apply.

- Notice the new row at the bottom of the grid showing the statistics we selected for this column.

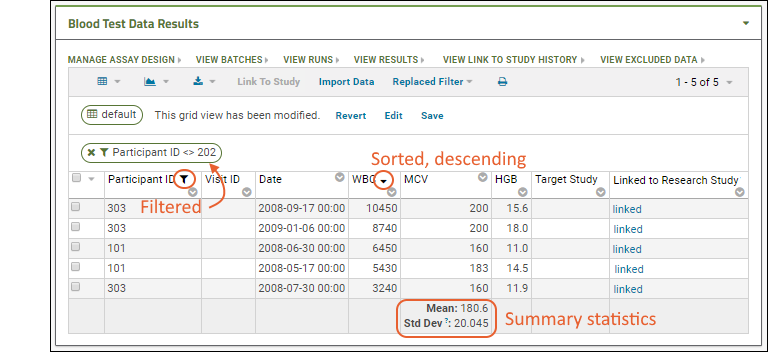

The view of the grid now includes a sort, a filter, and a summary statistic.

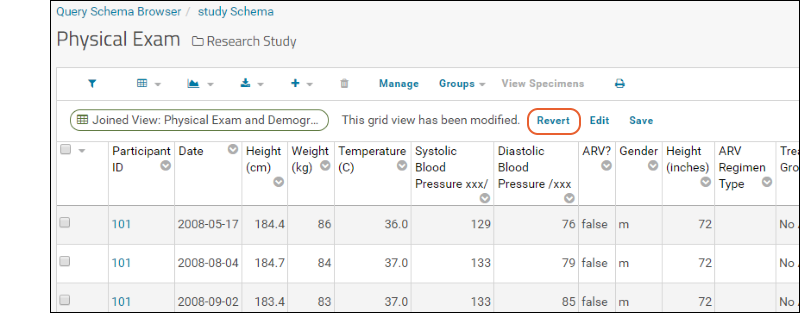

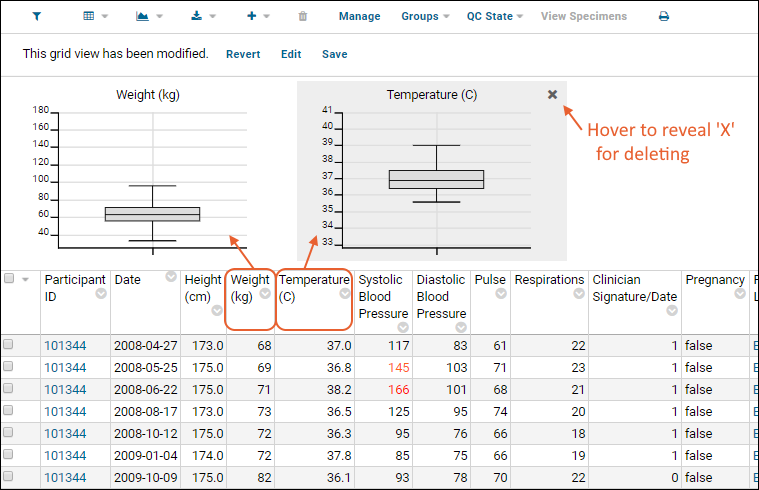

Notice the message "This grid view has been modified" was added when you added the summary statistic. The grid you are now seeing is not the original default. You have not changed anything about the underlying imported data table, merely how you see it in this grid.

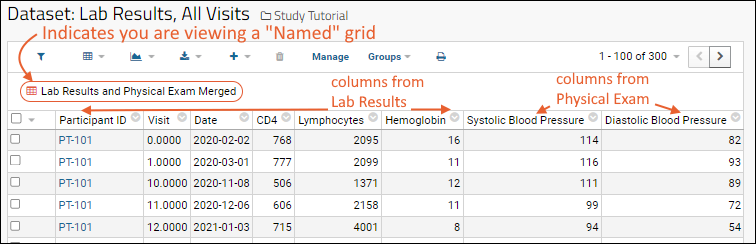

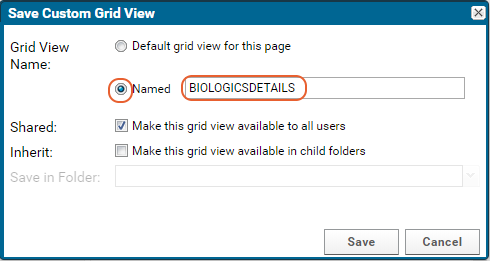

Saving: To save this changed grid view, making it persistent and sharable with others, follow these steps:

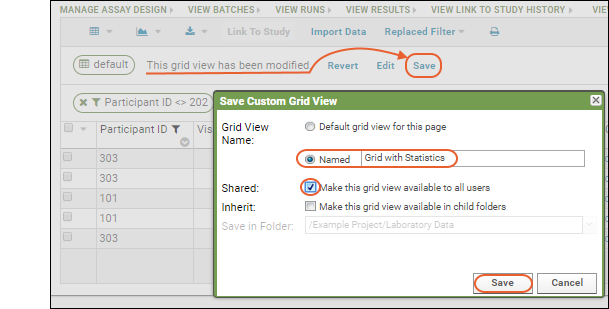

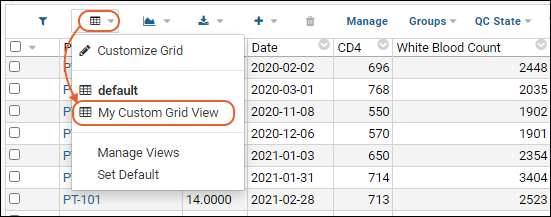

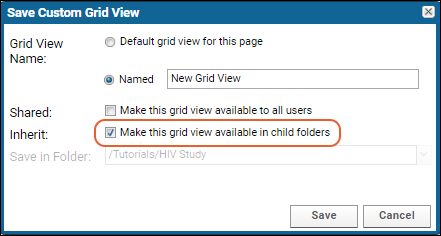

- Click Save next to the grid modification message.

- Select Named and give the new grid a name (such as "Grid With Statistics").

- Check the box for "Make this grid view available to all users."

- Click Save.

- Notice that the grid now has Grid With Statistics shown above it.

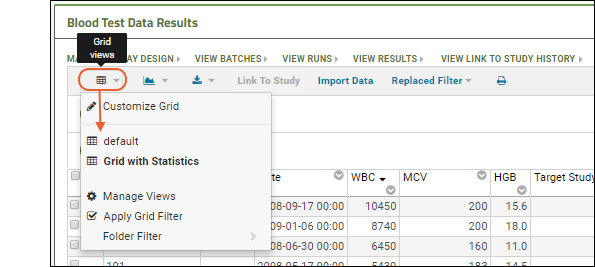

- To switch between grid views, use the (Grid Views) menu in the grid header. Switch between "default" and "Grid With Statistics" to see the difference.

- Return to the "default" view before proceeding with this walkthrough.
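A grid view stores instructions (a sort, filters, summary statistics), not a modified copy of the data, which is why the underlying table is unchanged. The following Python sketch models that idea with a hypothetical `GridView` class and made-up rows; it is not how LabKey stores views. Computing the sample standard deviation is an assumption, since this walkthrough does not say which formula LabKey uses.

```python
import statistics
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

# Summary statistics this sketch supports (sample standard deviation assumed).
STATS = {"Mean": statistics.mean, "Standard Deviation": statistics.stdev}


@dataclass
class GridView:
    """A saved set of display instructions; it never stores or edits data."""
    name: str
    sort: Optional[Tuple[str, bool]] = None  # (column, descending?)
    exclude: Dict[str, Set[str]] = field(default_factory=dict)  # column -> hidden values
    stats: Dict[str, List[str]] = field(default_factory=dict)   # column -> stat names
    shared: bool = False  # "Make this grid view available to all users"

    def apply(self, rows):
        """Return (visible rows, summary) computed from `rows`, which is left unchanged."""
        visible = [r for r in rows
                   if all(r[col] not in hidden for col, hidden in self.exclude.items())]
        if self.sort is not None:
            column, descending = self.sort
            visible = sorted(visible, key=lambda r: r[column], reverse=descending)
        summary = {}
        for col, names in self.stats.items():
            values = [r[col] for r in visible]
            summary[col] = {name: STATS[name](values) for name in names}
        return visible, summary


# Hypothetical rows; not the actual Blood Test Data values.
rows = [
    {"ParticipantId": "201", "WBC": 5.5, "MCV": 88.0},
    {"ParticipantId": "202", "WBC": 7.0, "MCV": 92.0},
    {"ParticipantId": "203", "WBC": 6.2, "MCV": 84.0},
]

default_view = GridView("default")
with_stats = GridView(
    "Grid With Statistics",
    sort=("WBC", True),                  # Sort Descending on WBC
    exclude={"ParticipantId": {"202"}},  # uncheck "202" in Choose Values
    stats={"MCV": ["Mean", "Standard Deviation"]},
    shared=True,
)

visible, summary = with_stats.apply(rows)
print([r["ParticipantId"] for r in visible])  # ['203', '201']
print(summary["MCV"]["Mean"])                 # 86.0
print(len(default_view.apply(rows)[0]))       # 3
```

Switching between "default" and "Grid With Statistics" in this model is just calling `apply` with a different view object on the same rows.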

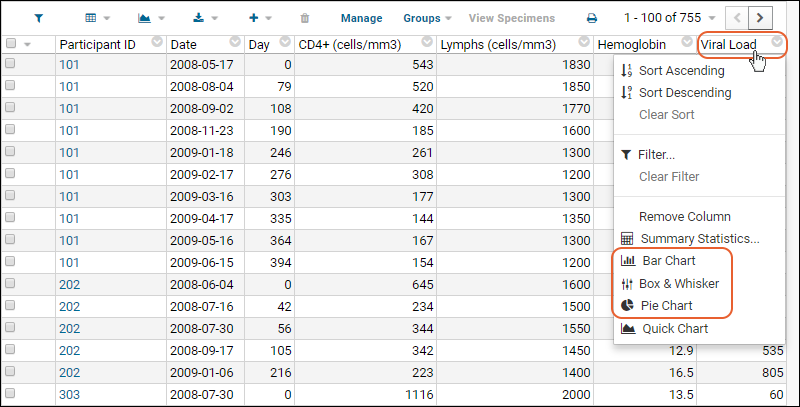

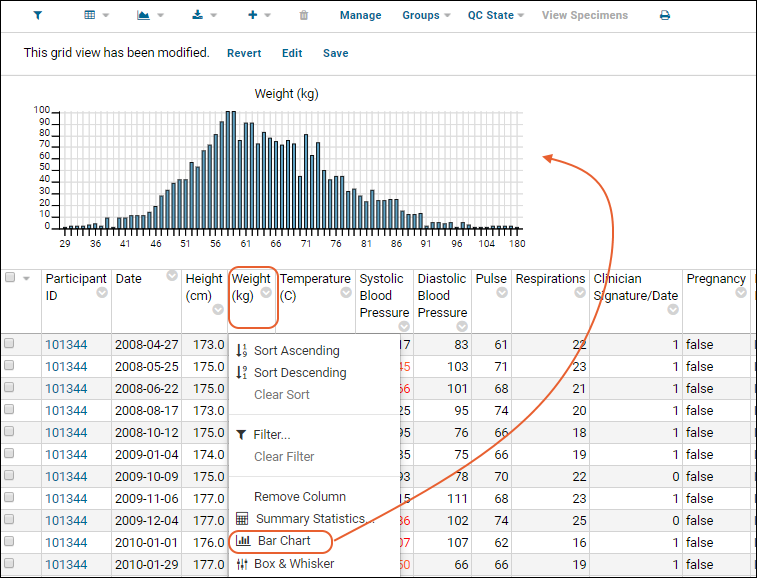

- Column Visualizations: Each column header menu has a set of visualizations available that can be displayed directly in the grid view. Options vary based on the data type. For example, from the "WBC" column header menu, choose Box and Whisker.

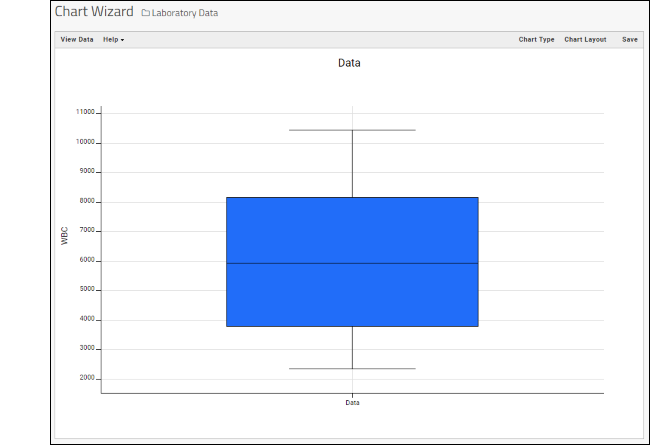

- Quick Charts: Choose Quick Chart from the header menu of any column to see a quick, best-guess visualization of the data from that column. The default is typically a box and whisker plot.

- Unlike the column visualization above, a quick chart is a standalone chart that is named and saved separately from the grid view.

- It also opens in the "Chart Wizard", described next, which offers many customization options.

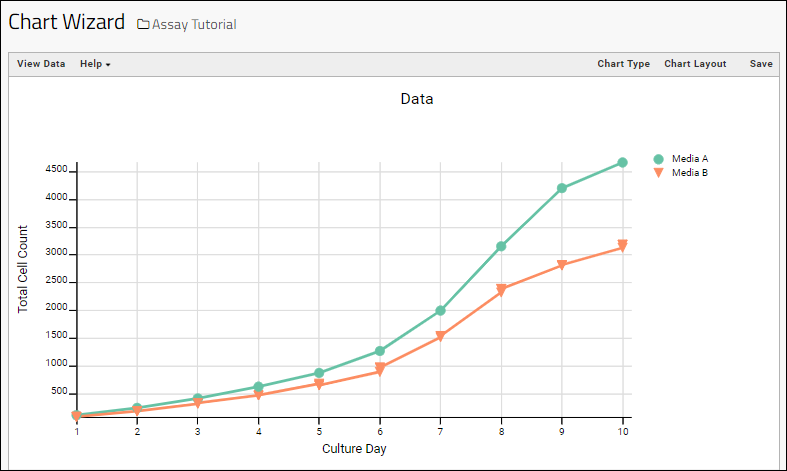

- Note that a chart like this is backed by the "live" data in the source table. If you change the underlying data (either by editing or by adding additional rows to the table) the chart will also update automatically.

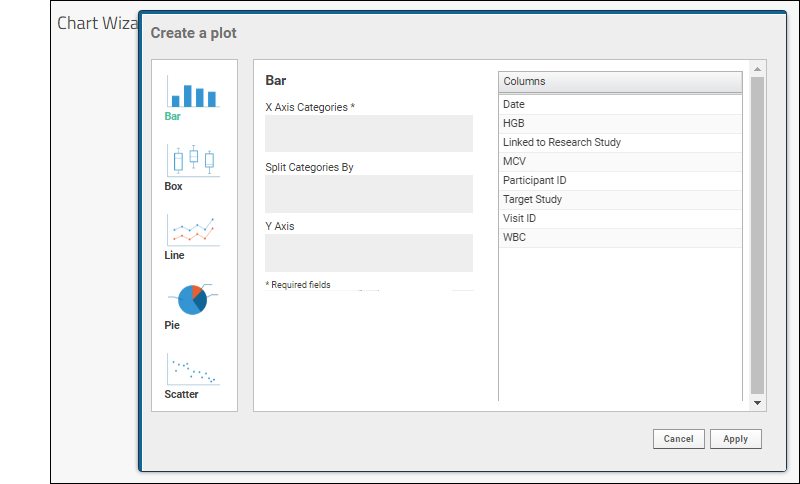

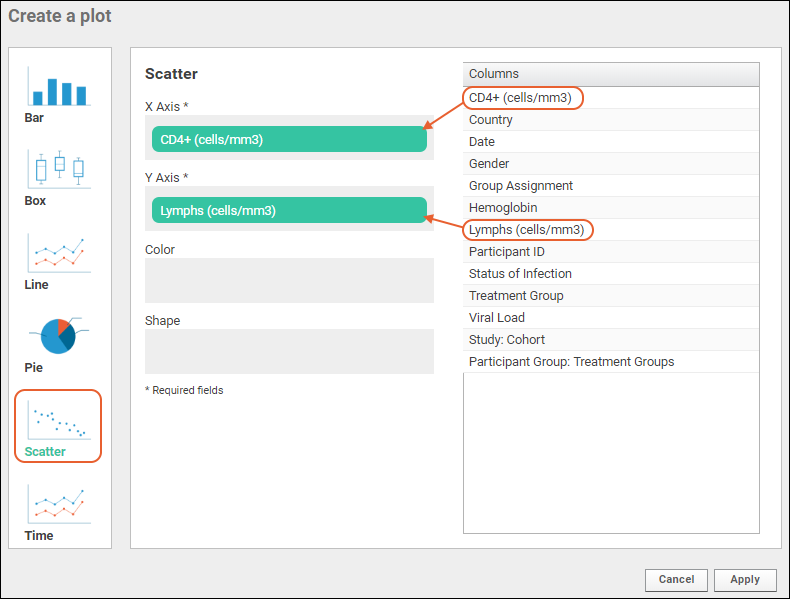

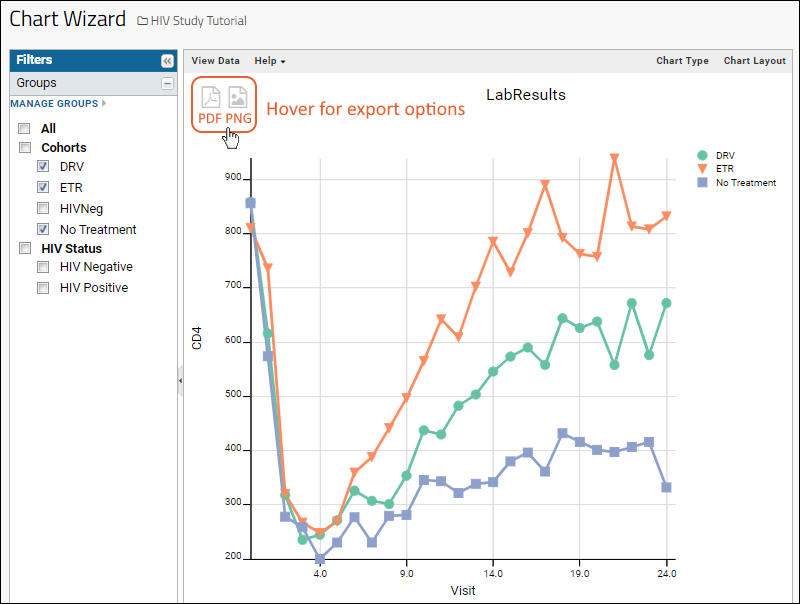

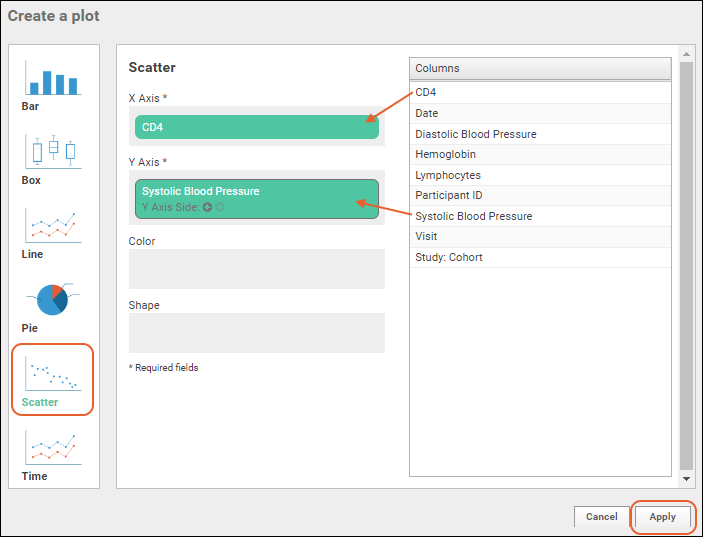

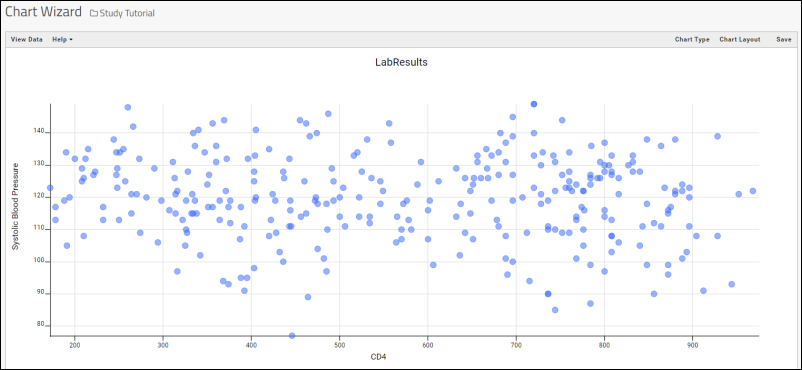

- Plot Editor: More types of charts and many more configuration options are available using the common plot editor, the "Chart Wizard".

- If you are already viewing a plot, you can change the plot type immediately by clicking Edit and then Chart Type.

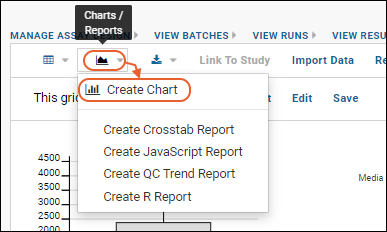

- If you navigated elsewhere, you can open the plot editor by returning to the grid view and selecting (Charts) > Create Chart above the grid.

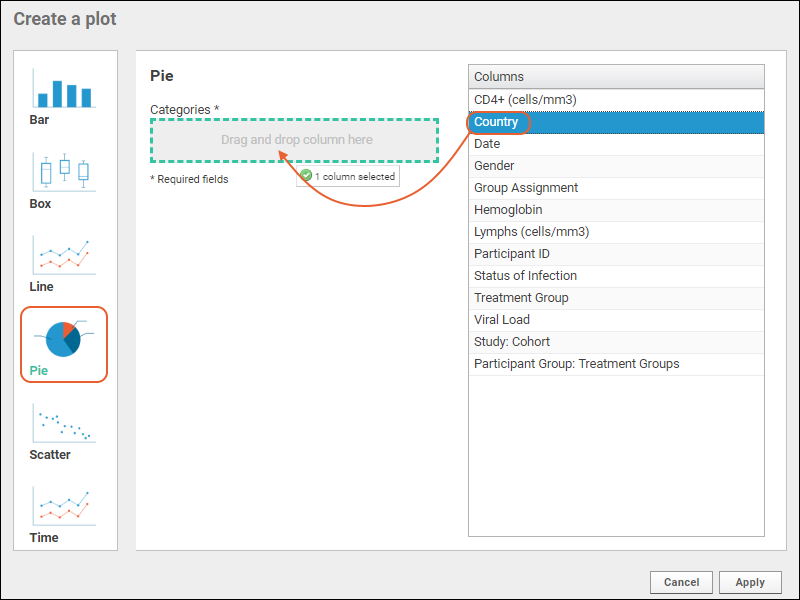

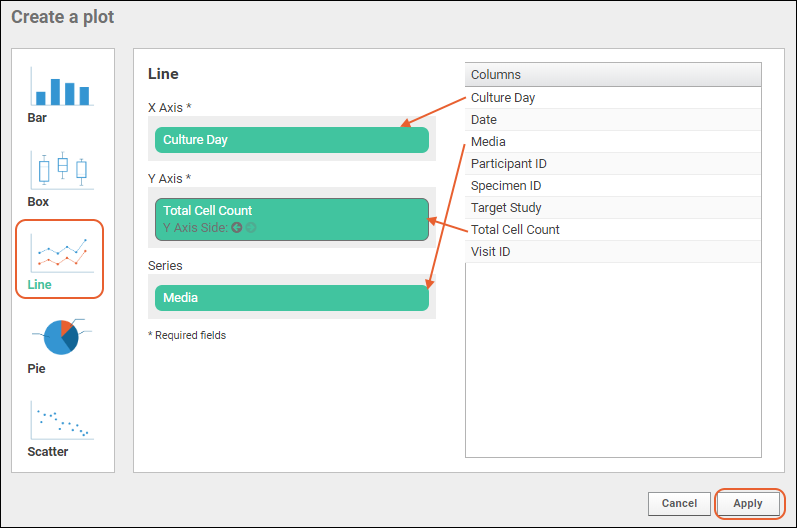

- Select the type of chart you want using the options along the left edge.

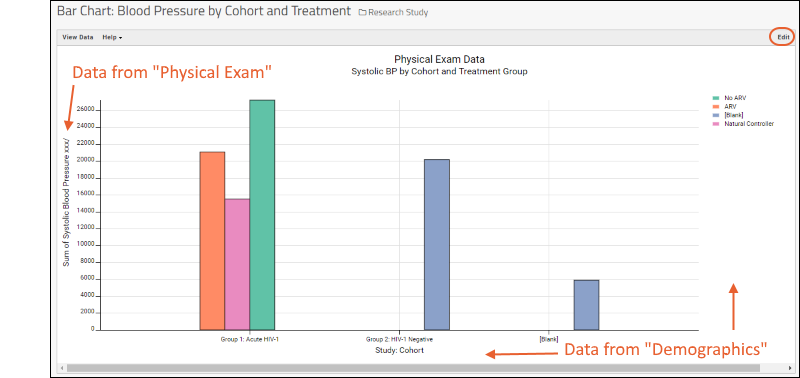

Learn more about creating and customizing each chart type in the documentation. You can experiment with this small data set or use the more complex data when Exploring LabKey Studies.


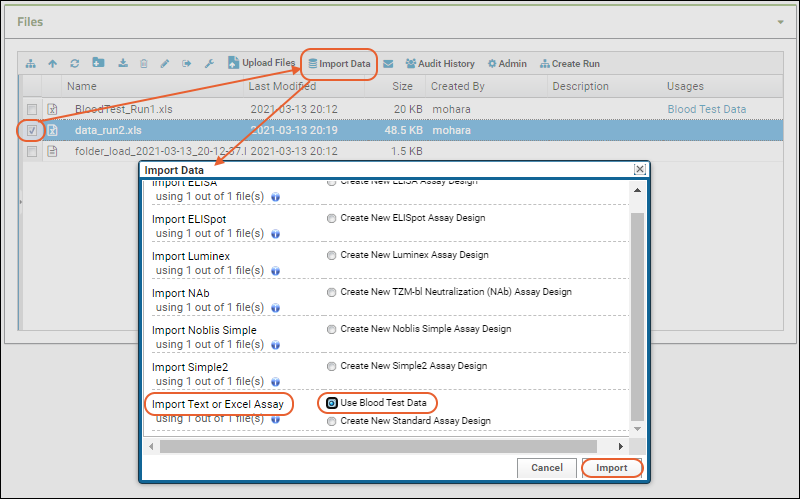

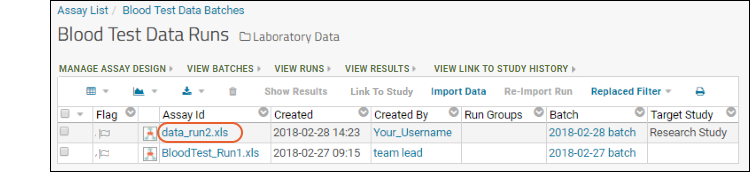

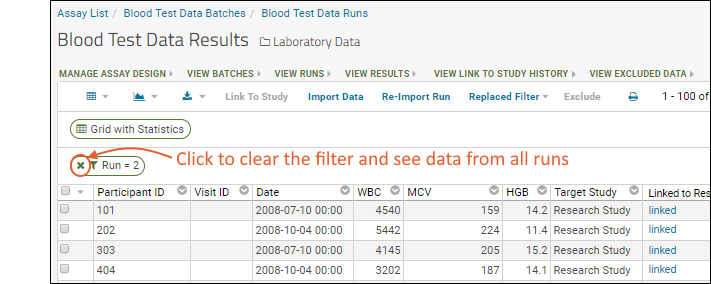

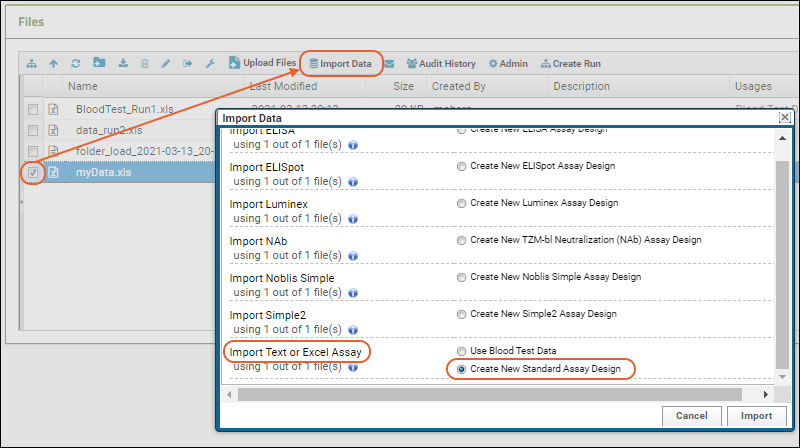

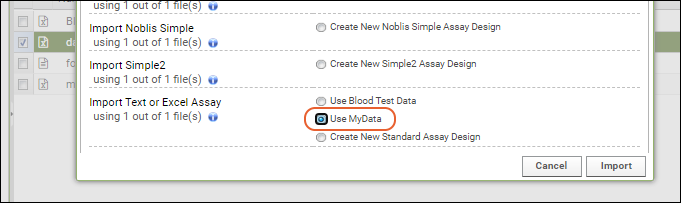

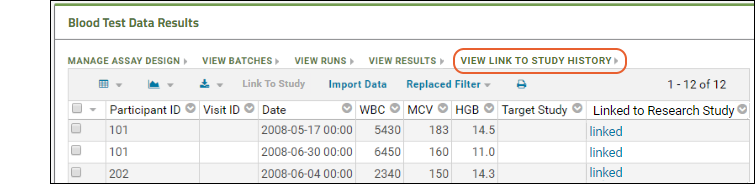

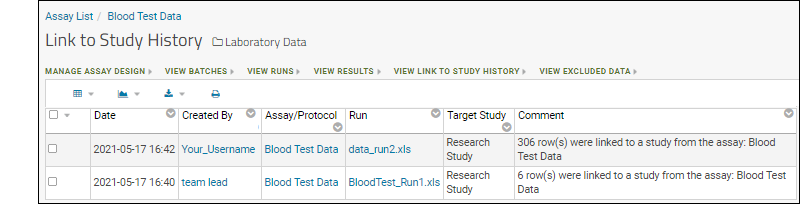

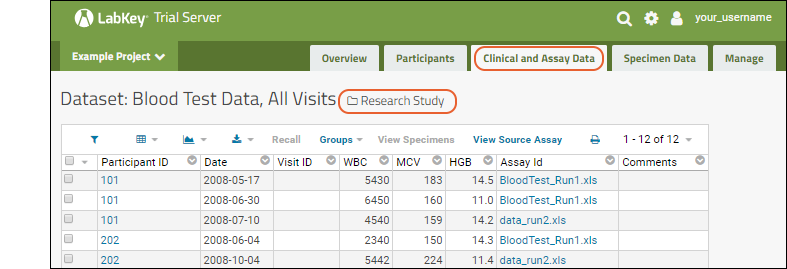

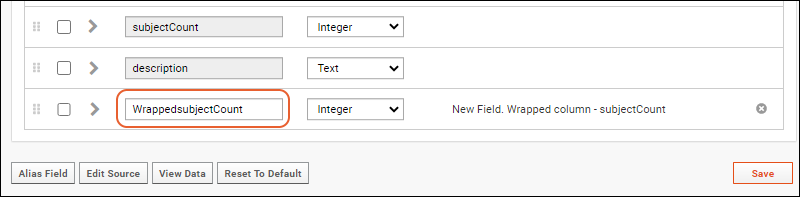

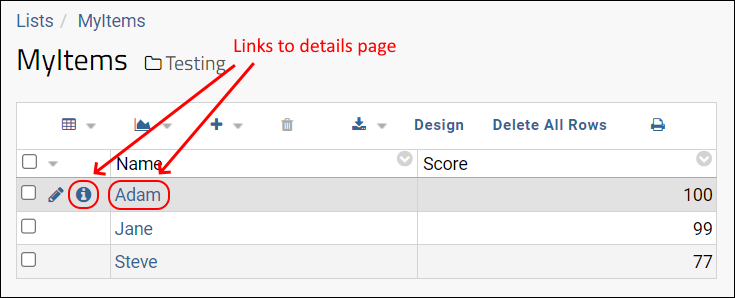

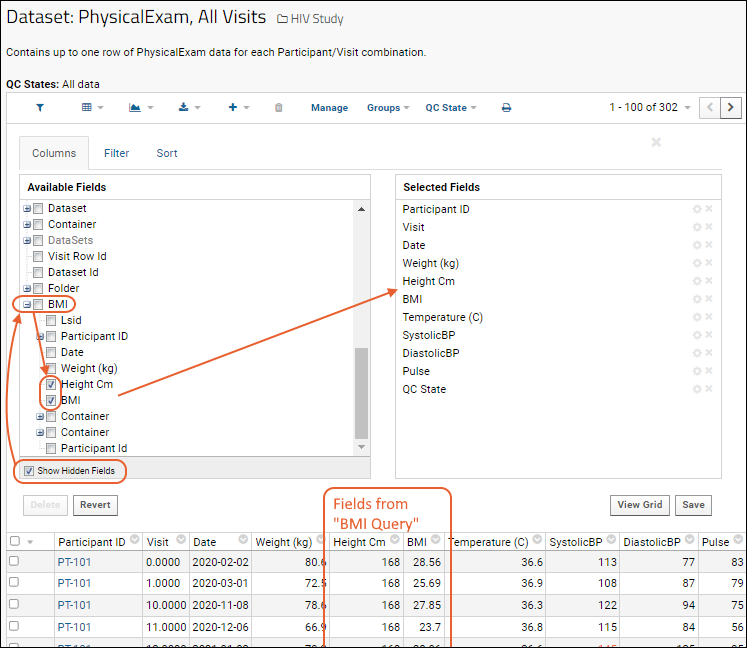

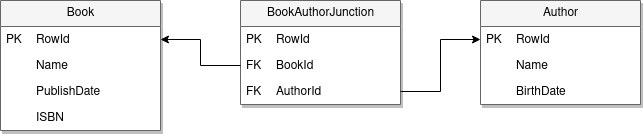

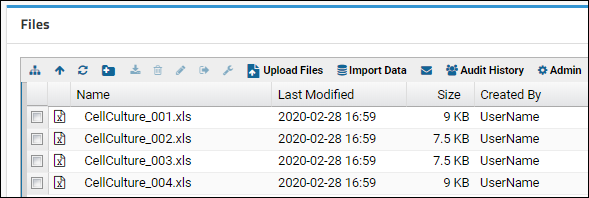

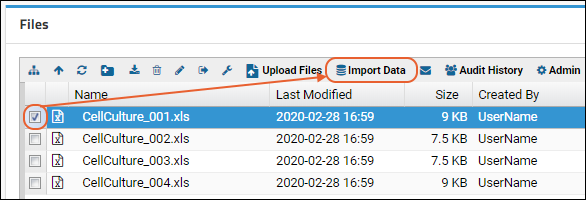

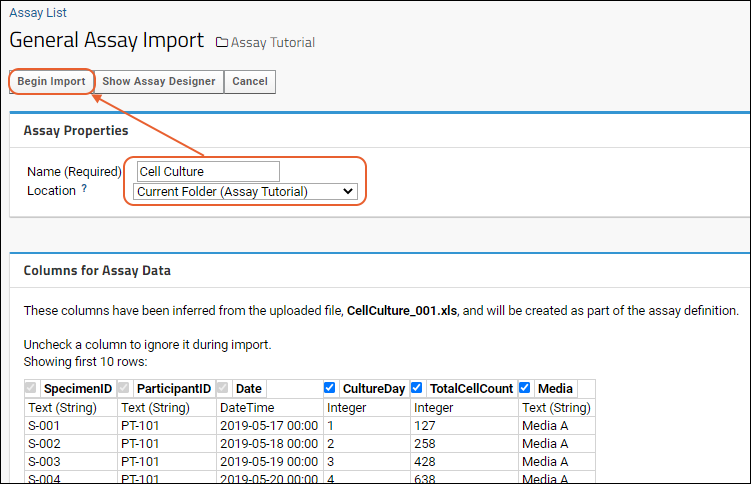

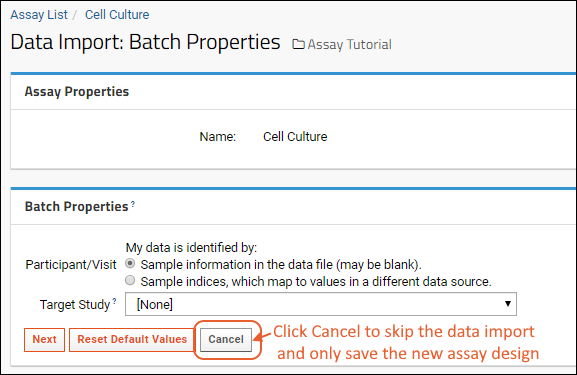

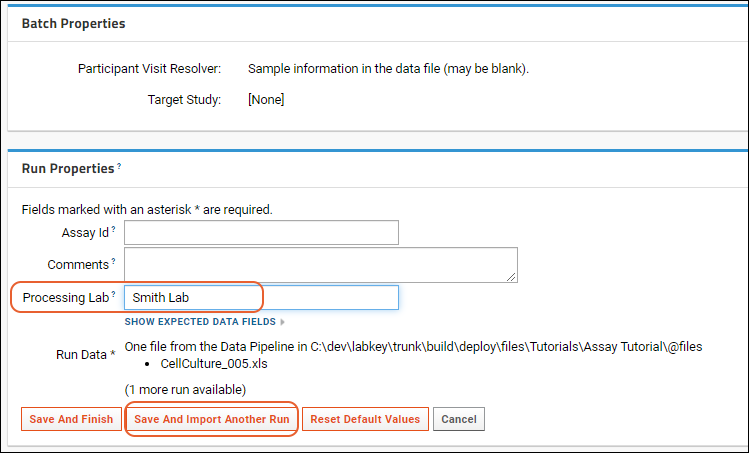

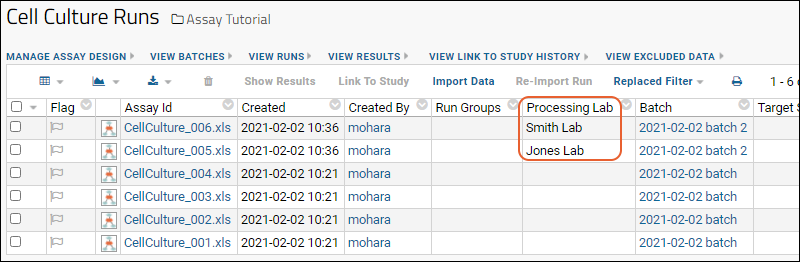

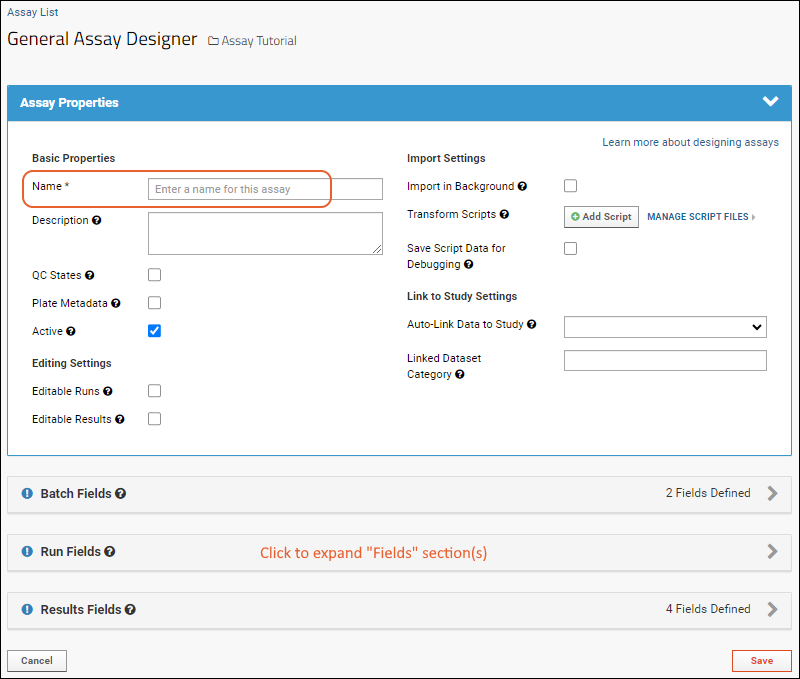

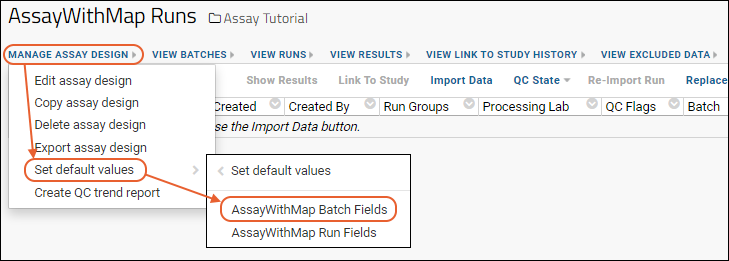

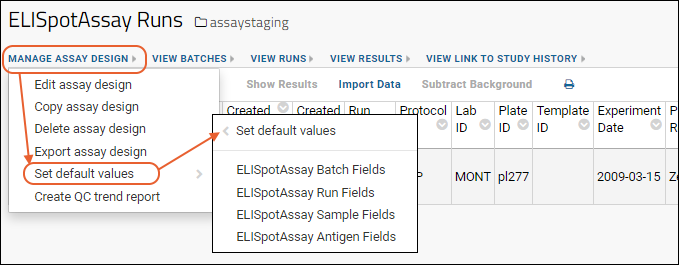

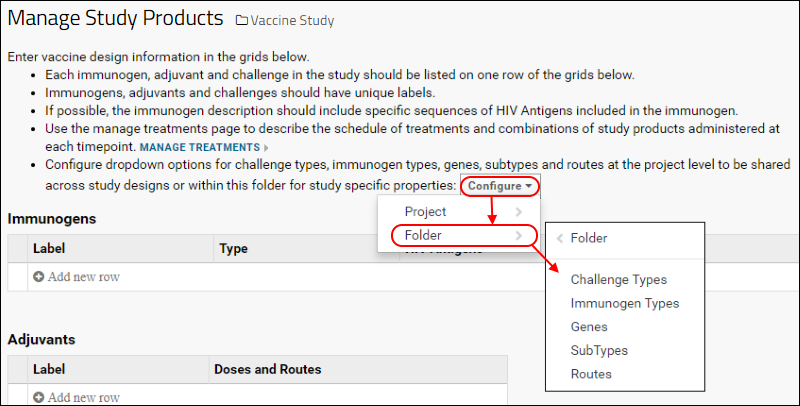

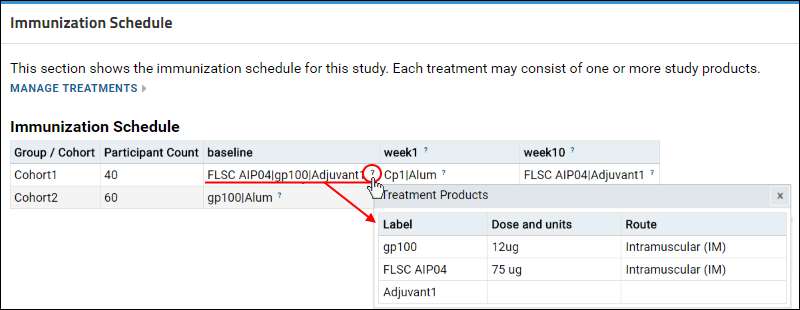

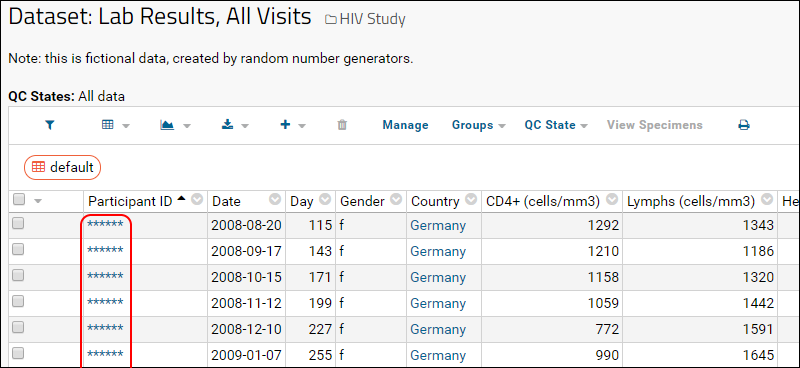

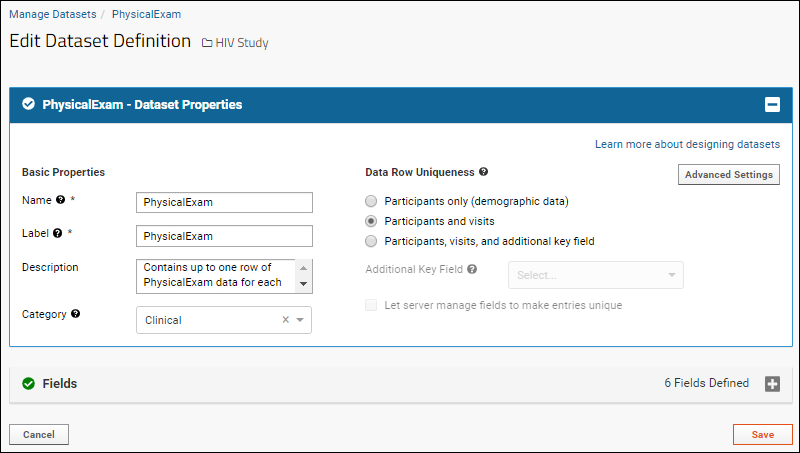

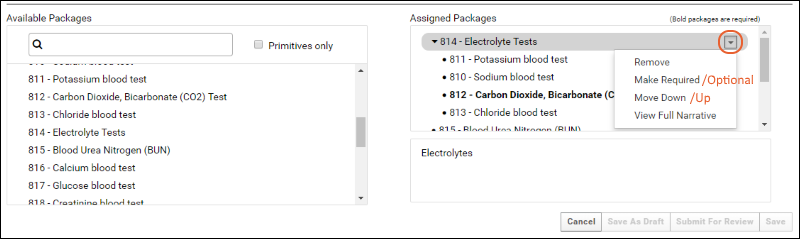

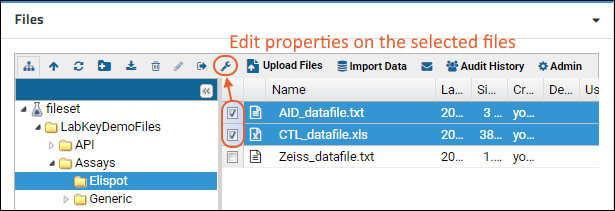

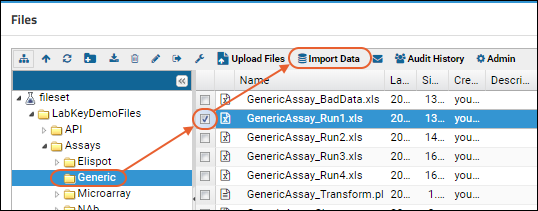

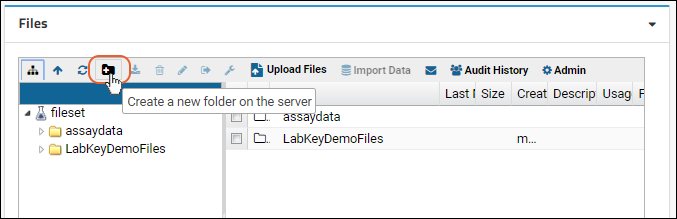

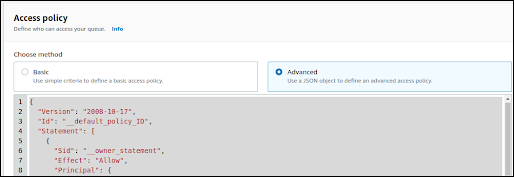

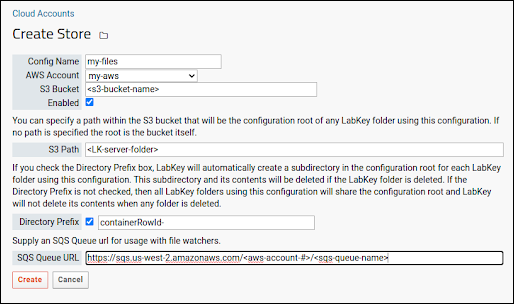

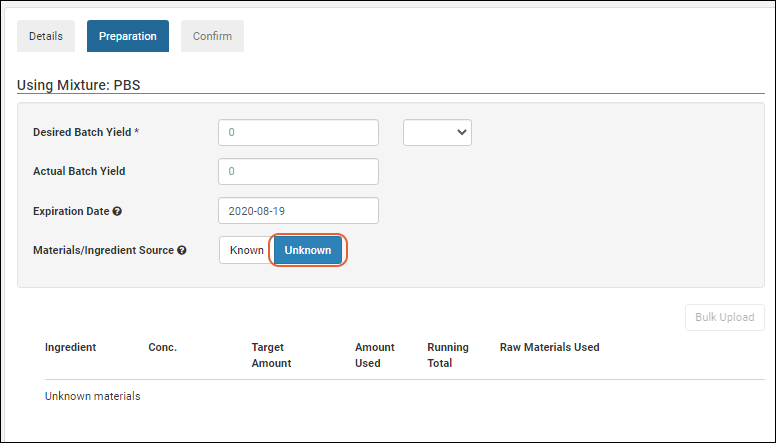

Files